IS MATH REAL? Me, a Harvard-trained data analyst speaks out ... 🧵


You can think of math as unrelated to reality, much like a board game. In that case, saying that "2+2=4 is true" is equivalent to saying "The rules of Monopoly are such and such". I find that a very limited and unsatisfying definition of true.

For instance, it leaves the door open for me to create my own game. In my version, I can set "2+2=5". It would be like playing checkers with chess pieces. Why not? There are after all logically consistent mathematical systems where "2+2=5" is a perfectly valid statement!

You might want to say "2+2=4" is the "real" version of arithmetic. That way you win the argument through branding. Yours is the real ARITHMETIC™. You know — the good stuff, from when we were kids.

But if you ask me, it seems a bit silly to claim ultimate authority about mathematical semantics based on a grade school level understanding of the topic. If it's just semantic games then everybody can agree to disagree. Nobody's right.

Where the argument really heats up is when you bring reality into it. How much does arithmetic line up with the real world? This is a much more interesting question than you might imagine.

One argument I've used in the past is to talk about "2.4+2.4 = 4.8". If we round every number in this statement to the nearest integer, it becomes "2+2=5" because 2.4 rounds to 2 and 4.8 rounds to 5. People often object to this example because the underlying truth is 2.4 + 2.4 = 4.8, which is just regular arithmetic.
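The rounding argument can be checked in a few lines. This is only a sketch: the helper name `rounded_sum_check` is made up for illustration, and it relies on Python's built-in `round`, which sends 2.4 to 2 and 4.8 to 5.

```python
from typing import Tuple


def rounded_sum_check(a: float, b: float) -> Tuple[int, int, int]:
    """Round each term and the exact sum separately, as an observer
    who can only record whole numbers would."""
    return round(a), round(b), round(a + b)


left, right, total = rounded_sum_check(2.4, 2.4)
print(f"{left} + {right} = {total}")  # prints "2 + 2 = 5"
```

The exact arithmetic underneath is untouched. The "2+2=5" only appears at the level of what gets recorded.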

But in the real world, we can't measure things perfectly. If you're just thinking about physics, don't. There are other numerical phenomena in the world. There are restaurant ratings and credit scores and all manner of systems that we model mathematically.

Do you think most humans can meaningfully distinguish between a 2.1 and 2.2 rating out of 10? Consider for a second that we may live in a world so irreducibly complex that the most precise measurement you could get in some cases is 2 even if the underlying reality is 2.4.

We are always limited as observers. Now I've so far implied that we're seeing a fuzzy version of a more precise reality. This is a crutch for the sake of explanation. Imagine a system that's so noisy and chaotic that we can't say for sure what lies at the bottom, if anything.

Perhaps the fuzziness is the reality. Ratings produced by humans are a good example of this. The same human might rate the same meal a 2 or 5 or 10 at different times. The best representation of their feelings might be a collection of all their ratings of the same meal.
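Here is a minimal sketch of "the rating is the whole collection" in code. The list of ratings is hypothetical: the values 2, 5 and 10 come from the example above, but the repeats are invented purely for illustration.

```python
from collections import Counter
from statistics import mean

# Hypothetical ratings one person gave the same meal on different days.
# Not real data: only the values 2, 5 and 10 appear in the text.
ratings = [2, 5, 10, 5, 2, 5]

# The "fuzzy" representation: how often each rating was given.
distribution = Counter(ratings)

# A single-number summary throws most of that information away.
summary = mean(ratings)

print(dict(distribution))
print(f"mean rating: {summary:.2f}")
```

The single number is convenient, but it is the `distribution` that carries what the person actually felt across occasions.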

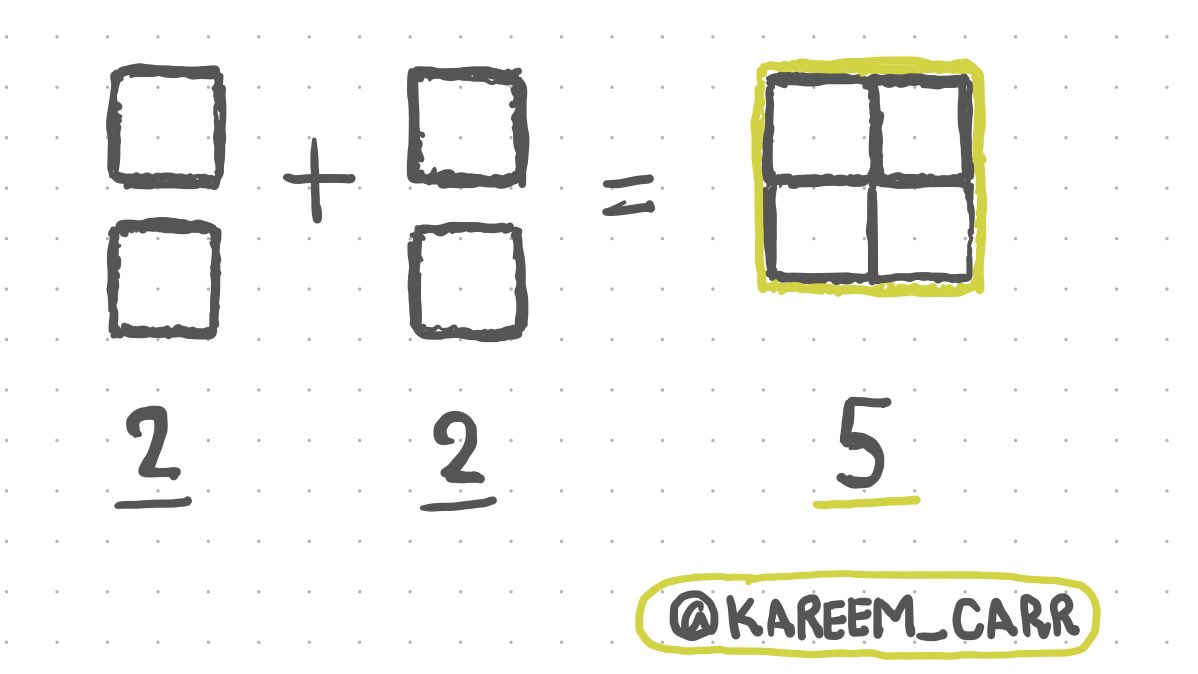

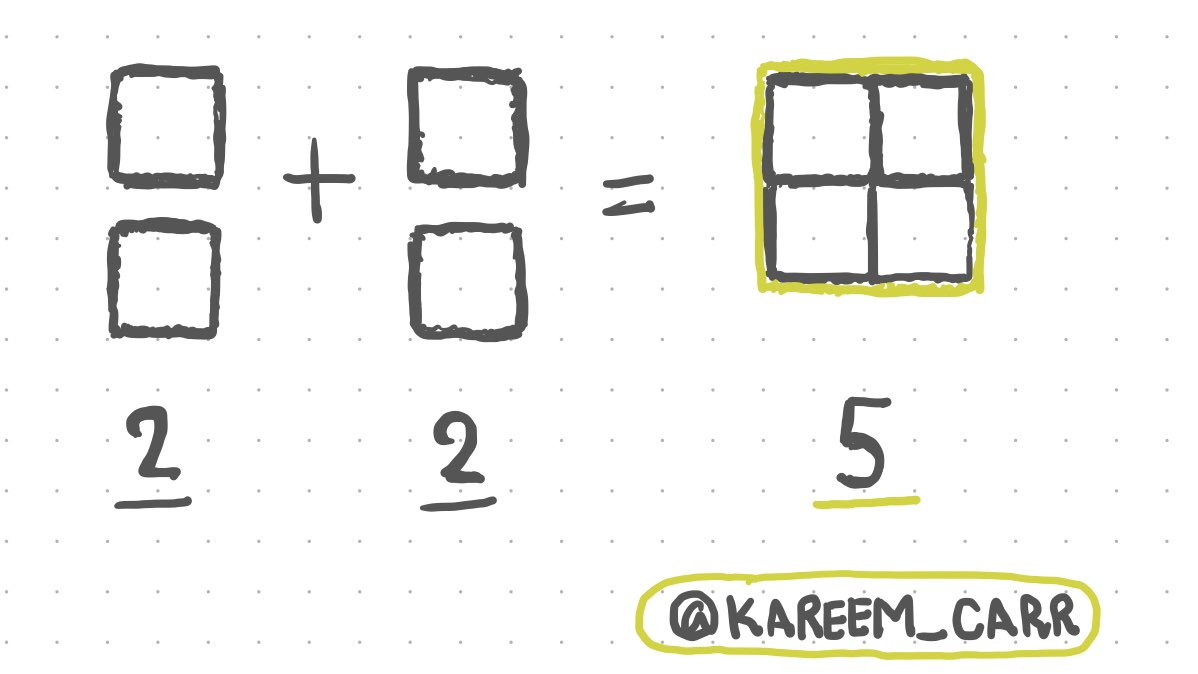

Look at the diagram below. Would it feel like cheating if I said this was a system where "2+2=5"? I'll ask you to look at it again after my explanation.

Let's talk about "2+2=4" in real life. In order to see if it's true, we have to apply it to the vast variety of things we humans perceive and verify that it works. This is an incredibly complicated task which we humans perform effortlessly, so I'd like to unravel the magic trick.

To count things, first we must consider what a "thing" is. As a data scientist, I count things for a living. Let me tell you: it's hard. We don't have to define a "thing" to the satisfaction of a philosopher, but we need to define the entity well enough to count it.

Our definition of a "thing" needs to be stable over the course of our analysis, somehow mutually exclusive of other "things" like it, etc. If you're counting covid cases, should they be counted by diagnosis, antibody test, PCR test, temperature check, etc.?

If you're studying creativity in writers, how would you count the number of ideas? Would you get a panel of humans to count "ideas", compare counts, and then reverse-engineer a definition? This is a real problem that people who study creativity face!

Once a definition is found, how do we know how many things we have? Often we imagine moving past each thing in a linear order over time and counting as we go. To interpret "+", we need to define an operation of combination. Often we think of this as gathering things into piles.

How do we define "="? We could enumerate things before we do the "+" action and again afterwards. If the number is the same, then this is what "=" means. But how long before the "+" action is OK? How long after?

In chemistry, we might worry about counting too early, before the chemicals have all mixed or reacted. If it's food, we might worry about waiting too long, after some of it has rotted or been eaten by vermin.

Look again. We talked about defining things (in this case squares) and then combining them (in this case placing them beside each other) and then counting before and after combining them. Does it seem fairer now that I could interpret this as saying "2+2=5" in this system?
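The define, combine, then count recipe can be sketched in code. Since the diagram itself isn't reproduced here, the arrangement below is an assumption: two rows of two unit squares are stacked into a 2x2 block, and a "thing" is any square of any size. The function name `count_squares` is made up for this sketch.

```python
def count_squares(rows: int, cols: int) -> int:
    """Count every axis-aligned square, of any size, in a rows x cols
    grid of unit squares."""
    return sum(
        (rows - k + 1) * (cols - k + 1)
        for k in range(1, min(rows, cols) + 1)
    )


# Count before combining: two separate rows of two squares each.
first_pile = count_squares(1, 2)
second_pile = count_squares(1, 2)

# Combine by stacking the rows into one 2x2 block, then count again.
combined = count_squares(2, 2)

print(f"{first_pile} + {second_pile} = {combined}")  # prints "2 + 2 = 5"
```

Under this assumed setup the extra square is the large one that only exists once the four small ones sit together. Change the definition of a "thing" to "unit square" and ordinary arithmetic comes back.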

Many people when confronted with a situation like this will go back and modify their definitions so that normal arithmetic applies. This is why they can claim that normal arithmetic is universal and always applies. But there is another way of doing this.

You could modify your math so it describes the entities and your interaction with them as things really are! This might mean building the fuzziness and uncertainty directly into the mathematics!
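One way to build uncertainty into the math is interval arithmetic. This is a swapped-in technique for illustration, not something the thread commits to. The sketch assumes an observed whole number `n` stands for any true value between `n - 0.5` and `n + 0.5`; the names `Interval` and `observed` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interval:
    """A closed range of values the true quantity could take."""
    low: float
    high: float

    def __add__(self, other: "Interval") -> "Interval":
        # The sum of two uncertain quantities is uncertain in both directions.
        return Interval(self.low + other.low, self.high + other.high)

    def contains(self, value: float) -> bool:
        return self.low <= value <= self.high


def observed(n: int) -> Interval:
    """Assumed meaning of a recorded whole number: anything that could
    round to n (tie-breaking at the edges is ignored)."""
    return Interval(n - 0.5, n + 0.5)


total = observed(2) + observed(2)
print(total)                # Interval(low=3.0, high=5.0)
print(total.contains(4.8))  # True
```

In this version "2+2" is not a single number at all. It is a range that happens to include 4, and also includes 4.8, which an observer would record as 5.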

It doesn't matter which way you do it. My hope is that you understand the flexible relationship between our mathematical systems, our perceptions of the world, and the symbolic manipulations we use to reason about reality. We are not passive observers. 🧵


Addendum: We statisticians, data scientists and other data analysts modify the math to fit reality in a hacky way. We attach reality to math with duct tape. The duct tape is called a mathematical model. We take advantage of standard math because it's a common language.

Our mathematical models are where all the fuzziness goes. Two data scientists can do the exact same analysis with the exact same general approach and get different numbers that nonetheless give essentially the same predictions! This is the reality of data analysis! 🤯
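A toy version of this, under loudly stated assumptions: the data is synthetic and invented for this sketch, the "analysis" is a straight-line least squares fit, and the only difference between the two analysts is which random 80% of the data each one trains on. None of the names or numbers below come from a real study.

```python
import random


def fit_line(points):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Synthetic data, made up for illustration: a noisy straight line.
data_rng = random.Random(0)
data = []
for _ in range(200):
    x = data_rng.uniform(0, 10)
    data.append((x, 2 * x + 1 + data_rng.gauss(0, 1)))


def analyst_fit(seed):
    """Same method for everyone; each analyst just draws their own
    random training subset of 160 of the 200 points."""
    return fit_line(random.Random(seed).sample(data, 160))


slope_a, intercept_a = analyst_fit(seed=1)
slope_b, intercept_b = analyst_fit(seed=2)

# Compare the fitted numbers and the prediction each model makes at x = 5.
pred_a = slope_a * 5 + intercept_a
pred_b = slope_b * 5 + intercept_b
print(f"analyst A: slope={slope_a:.4f}, intercept={intercept_a:.4f}, prediction={pred_a:.3f}")
print(f"analyst B: slope={slope_b:.4f}, intercept={intercept_b:.4f}, prediction={pred_b:.3f}")
```

The fitted coefficients should differ in their later decimal places while the two predictions stay close, which is the "different numbers, same predictions" situation in miniature.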


Read on Twitter
