I managed to get simple 3D physics working in the prototype for my WebXR game for #js13k. Best part is, I'm not using any extra libs! It's all vanilla JS, clocking in at 9 KB right now. The hand mesh alone is 3 KB, so I'm sure there's plenty of room for optimization, too.

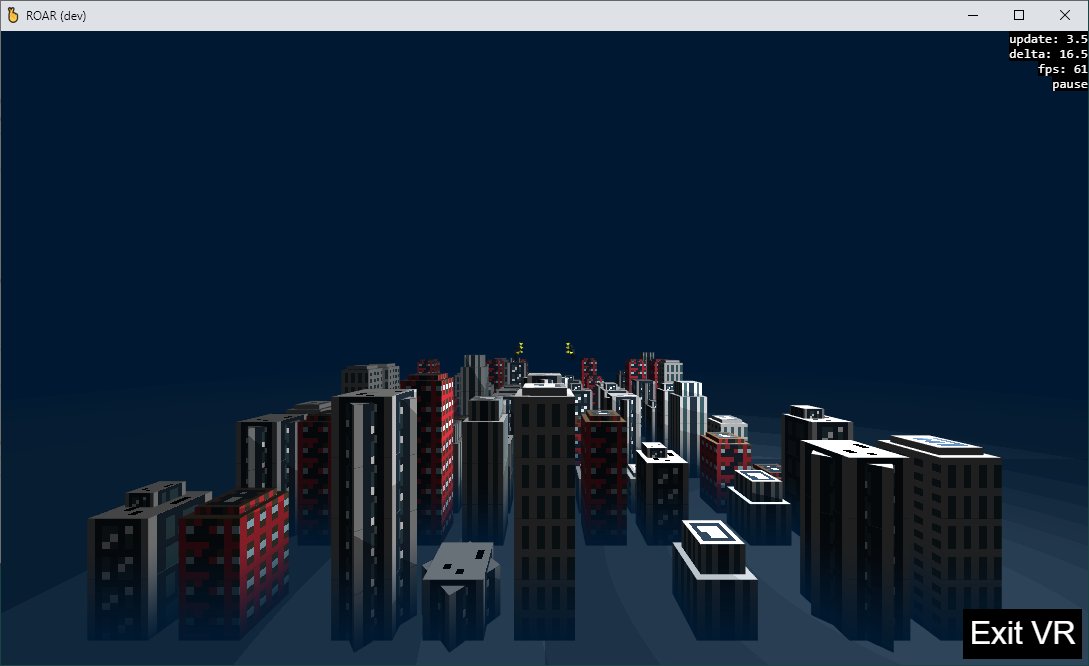

More #js13k progress! I added toon shading and a few textures, and I replaced the hand mesh with a paw, which has fewer vertices. The build is still around 9 KB zipped!

Have you ever wanted to be a Godzilla wreaking havoc in a city?

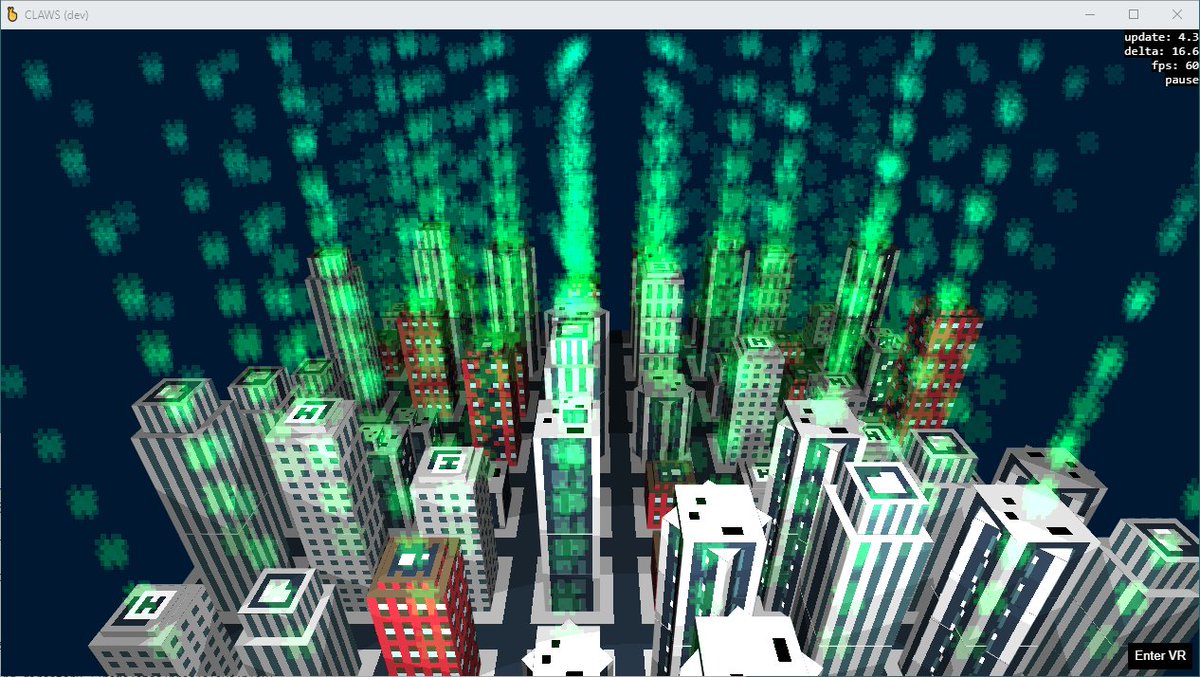

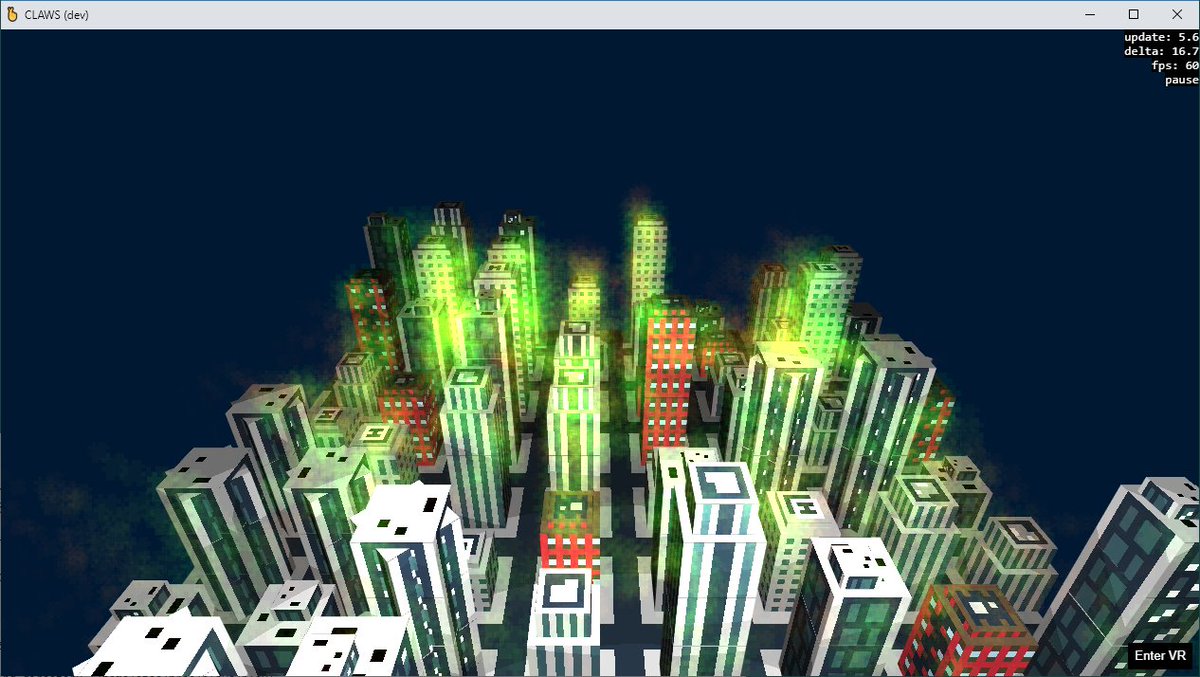

I added rooftops to each building type and a road texture, but really, this tweet is about the fire breath.

Check out the fire breath, everyone!

Build size: 11 KB. #js13k

Tonight I learned a few things about blending and animating UVs. I feel like I'm discovering the rendering techniques of the early 2000s, and I'm absolutely in love.

🔥

🔥🔥

🔥🔥

🔥

Build size: 11.5 KB #js13k
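Animating UVs usually means shifting a mesh's texture coordinates over time so the texture appears to flow across static geometry, which suits fire. The thread doesn't show ROAR's code, so this is only a minimal sketch of the JavaScript side, assuming the offset is uploaded to a shader as a uniform and the texture uses `gl.REPEAT` wrapping; `uvScrollOffset` and its parameters are hypothetical.

```javascript
// Hypothetical helper: compute a UV offset that scrolls a texture over time.
// speedU / speedV are in texture widths per second. The result is wrapped
// into [0, 1) so it stays small (and precise) even after a long session.
function uvScrollOffset(timeSeconds, speedU, speedV) {
  const wrap = (x) => x - Math.floor(x);
  return [wrap(timeSeconds * speedU), wrap(timeSeconds * speedV)];
}

// Example: a flame texture scrolling upward at half a texture per second.
const [u, v] = uvScrollOffset(3.5, 0, 0.5);
// u === 0, v === 0.75 (3.5 * 0.5 = 1.75, wrapped to 0.75)
```

In WebGL the pair could then be passed with `gl.uniform2f(location, u, v)` and added to the texture coordinates in the shader.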

Here are a few work-in-progress shots from back when I was using different colors to tell the building fire and the fire breath apart. It's somewhere between the Warcraft 3 poison effect and The Matrix :) #js13k

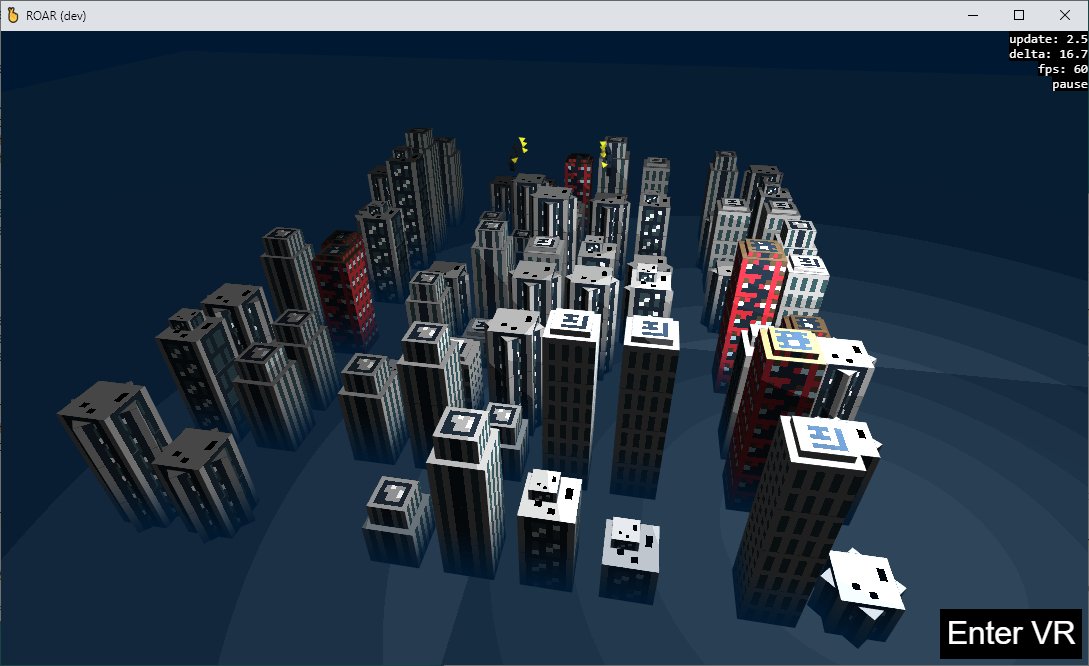

Lots of updates to my #js13k #webxr entry about the zen of destruction:

🎶 Spatial sound effects

🚓 Police cars

🚀 Homing missiles

🎆 Explosions

…and…

🏈 Grabbing buildings and throwing them!

Build size: 13.5 KB (oops!)

#gamedev #screenshotsaturday #vr

I've been experimenting with Pistol Whip-like gameplay for my #js13k #webxr entry today. To be honest, I'm not sure I like it. I'll try to tweak it a bit, but without a good soundtrack this might be a dead end.

Build size: 12.7 KB (yay optimizations!)

I came to gamedev for games, I stayed for the odd bugs. Here are the buildings moving in the direction they're facing rather than towards the player.

BUILDING POPCORN MACHINE!

I didn't post updates in this thread during the last week of the competition because I was stressed out by the deadline. I did, however, take notes and work-in-progress screen captures, which I'd like to share now that the competition is over. #js13k

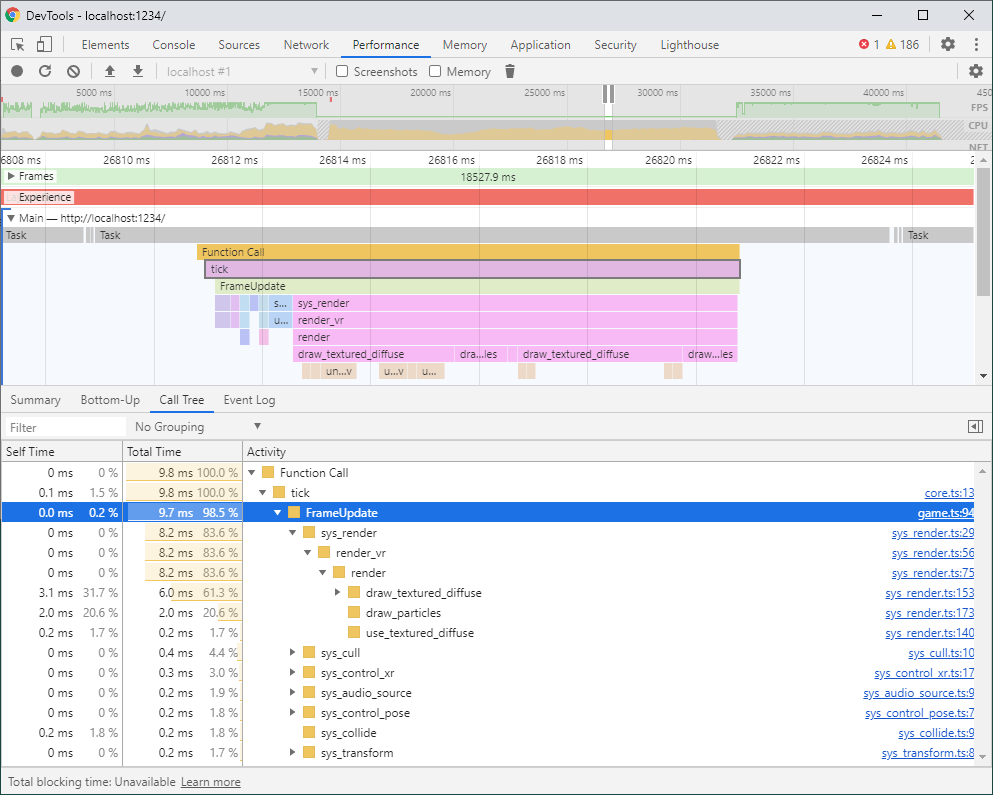

I was pleasantly surprised by the JavaScript and WebGL performance on the Oculus Quest. With a simple frustum-culling system which skips unnecessary draw calls, ROAR runs at a smooth 72 FPS.

The game is GPU-bound, and the cost of the extra in-frustum check is negligible. Here's a typical frame profile: sys_render takes 8 ms (83% of the frame), while the CPU-bound systems such as sys_transform, sys_collide, and sys_cull add up to less than 1 ms!
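For context, a 72 FPS target leaves about 13.9 ms per frame (1000 / 72). The small helper below, with hypothetical names, just makes that arithmetic explicit so a profiled system's time can be compared with the budget.

```javascript
// Hypothetical helpers: per-frame time budget and a system's share of it.
function frameBudgetMs(targetFps) {
  return 1000 / targetFps;
}

function budgetShare(systemMs, targetFps) {
  return systemMs / frameBudgetMs(targetFps);
}

const budget = frameBudgetMs(72);       // ~13.89 ms per frame at 72 FPS
const renderShare = budgetShare(8, 72); // ~0.58 of the budget for an 8 ms sys_render
```

If the 83% in the profile is relative to the measured frame time, the whole frame took roughly 8 / 0.83 ≈ 9.6 ms, which is comfortably under the 13.9 ms budget.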

It shouldn't be surprising that so much time is spent rendering. To start, the headset has to render everything twice, once for each eye!

Let me walk you through some numbers and measurements which made me realize I needed frustum culling.

The scene typically consists of 64 buildings, each made of 5 cubes on average. That's ca. 300 draw calls and over 10,000 vertices just for the environment. There are also another ~50 draw calls for the hands and other props.
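The environment numbers follow from simple multiplication. As an assumption for illustration, each cube gets its own draw call and is a non-indexed mesh with 36 vertices (6 faces × 2 triangles × 3 vertices); the thread doesn't say how ROAR's cube mesh is actually laid out, and `environmentCost` is a hypothetical name.

```javascript
// Rough per-frame cost of the environment, assuming one draw call per cube
// and 36 vertices per cube (non-indexed: 6 faces × 2 triangles × 3 vertices).
const VERTICES_PER_CUBE = 36;

function environmentCost(buildings, cubesPerBuilding) {
  const drawCalls = buildings * cubesPerBuilding;
  return { drawCalls, vertices: drawCalls * VERTICES_PER_CUBE };
}

console.log(environmentCost(64, 5)); // { drawCalls: 320, vertices: 11520 }
```

With an indexed cube of 24 unique vertices the total would be 7,680 instead, so the exact vertex count depends on how the mesh is built.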

Each building cube has a dormant particle emitter which activates when the building is set on fire. A control system slows the fire down and finally puts it out after ~20 seconds to limit the number of particles on the screen.
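A minimal sketch of such a fire controller, assuming a linear slowdown; the thread doesn't describe the actual curve, so `igniteFire`, `updateFire`, and the base emission rate are hypothetical, and only the ~20-second lifetime comes from the text.

```javascript
// Hypothetical fire controller: the emission rate decays linearly with age
// and the emitter shuts off entirely once the fire reaches its lifetime.
const FIRE_LIFETIME = 20; // seconds (the thread says ~20 s)
const BASE_RATE = 50;     // particles per second at ignition (assumed)

function igniteFire() {
  return { age: 0, rate: BASE_RATE, active: true };
}

function updateFire(fire, dt) {
  if (!fire.active) return fire;
  fire.age += dt;
  if (fire.age >= FIRE_LIFETIME) {
    // Put the fire out: no more particles are emitted.
    fire.active = false;
    fire.rate = 0;
  } else {
    // Slow the fire down as it ages.
    fire.rate = BASE_RATE * (1 - fire.age / FIRE_LIFETIME);
  }
  return fire;
}
```

Halfway through its lifetime a fire emits at half the initial rate; after 20 seconds it stops emitting, so its existing particles simply fade out.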

An emitter is additionally limited to at most 200 particles. Still, if you go crazy and set the entire city on fire, the GPU would need to render ca. 40,000 particles, each drawn as a textured point with additive blending.
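A per-emitter cap can be sketched as a spawn check plus recycling of expired particles. This is an illustrative version with hypothetical names, not ROAR's code; it uses a plain array for readability, whereas a size-constrained or performance-sensitive build might prefer a preallocated pool.

```javascript
// Hypothetical capped emitter: new particles are spawned only while the
// emitter holds fewer than MAX_PARTICLES; expired ones free their slots.
const MAX_PARTICLES = 200;

function createEmitter() {
  return { particles: [] };
}

function emit(emitter, count, lifetime) {
  for (let i = 0; i < count && emitter.particles.length < MAX_PARTICLES; i++) {
    emitter.particles.push({ age: 0, lifetime });
  }
}

function updateEmitter(emitter, dt) {
  for (const p of emitter.particles) p.age += dt;
  // Drop expired particles so new ones can be emitted.
  emitter.particles = emitter.particles.filter((p) => p.age < p.lifetime);
}
```

Because every emitter is capped, the worst case is bounded by the number of active emitters times 200, which is what makes an upper-bound estimate like the one above possible.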

Keep in mind that each emitter is its own draw call, too. In the extreme case, that& #39;s almost 500 draw calls and up to 50,000 vertices per frame. This was a problem for performance.

When I tested it, I saw around 50-60 FPS with a reasonable number of fires started (still pretty good!), and as low as 15 FPS in the extreme case when the whole city was burning.

(At this point I considered solving this through game design rather than through optimization. For example, I could have made the fire breath consume some kind of "fuel" available only in limited quantities. Breathing fire is fun, though, so I decided to try a technical solution.)

The first iteration of the culling system turned off the Render and EmitParticles components for entities whose positions, once projected into normalized device coordinates (NDC), fell outside the camera's frustum. This was enough to bring the number of draw calls down to around 150 per frame.
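A point-in-frustum test via NDC can be sketched like this: transform the position by the view-projection matrix, reject points with w ≤ 0 (behind the camera), divide by w, and check that all three coordinates lie in [-1, 1]. The names are hypothetical, and the sketch assumes column-major matrices and OpenGL-style clip space (z in [-1, 1]), as WebGL uses; the `perspective` helper mirrors the standard OpenGL perspective matrix.

```javascript
// Column-major 4×4 perspective matrix (the layout WebGL expects).
function perspective(fovy, aspect, near, far) {
  const f = 1 / Math.tan(fovy / 2);
  const nf = 1 / (near - far);
  return [
    f / aspect, 0, 0, 0,
    0, f, 0, 0,
    0, 0, (far + near) * nf, -1,
    0, 0, 2 * far * near * nf, 0,
  ];
}

// True if a world-space point projects inside the view frustum.
// viewProjection is a column-major 4×4 matrix (projection × view).
function isPointInFrustum(viewProjection, [x, y, z]) {
  const m = viewProjection;
  const cx = m[0] * x + m[4] * y + m[8] * z + m[12];
  const cy = m[1] * x + m[5] * y + m[9] * z + m[13];
  const cz = m[2] * x + m[6] * y + m[10] * z + m[14];
  const cw = m[3] * x + m[7] * y + m[11] * z + m[15];
  // Points behind the camera have w <= 0; reject them before dividing.
  if (cw <= 0) return false;
  // Perspective divide into normalized device coordinates.
  const nx = cx / cw, ny = cy / cw, nz = cz / cw;
  return nx >= -1 && nx <= 1 && ny >= -1 && ny <= 1 && nz >= -1 && nz <= 1;
}

// Camera at the origin looking down -Z, so the view matrix is the identity.
const vp = perspective(Math.PI / 2, 1, 0.1, 100);
isPointInFrustum(vp, [0, 0, -5]);   // true: straight ahead
isPointInFrustum(vp, [0, 0, 5]);    // false: behind the camera
isPointInFrustum(vp, [100, 0, -5]); // false: far off to the side
```

In a system like the one described, entities failing this test would have their Render and EmitParticles components disabled for that frame.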

Oculus docs recommend ~50-100 draw calls and ~50,000-100,000 triangles or vertices per frame. I suspect that ROAR got away with more because it only has two materials and switches between them only once per frame. https://developer.oculus.com/documentation/unreal/unreal-debug-android/?device=QUEST

(All textured objects, almost all of which are cubes, are drawn first, and then in a second pass all particles are rendered. This happens to work great for blending: translucent particles are drawn on top of all the textured objects.)
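The two-pass ordering can be sketched as a stable sort of the render list by pass, which keeps the number of material switches at two no matter how the scene interleaves buildings and fires. The names and the `material` field are hypothetical; in real WebGL code a "switch" would correspond to calls such as `gl.useProgram` and blend-state changes.

```javascript
// Hypothetical render-list ordering: all textured objects first, then all
// particle emitters, so each material is bound only once per frame.
const PASS_ORDER = { textured: 0, particles: 1 };

function orderForRendering(drawables) {
  // Array.prototype.sort is stable (ES2019+), so objects keep their
  // relative order within each pass.
  return [...drawables].sort(
    (a, b) => PASS_ORDER[a.material] - PASS_ORDER[b.material]
  );
}

function countMaterialSwitches(ordered) {
  let switches = 0;
  let current = null;
  for (const d of ordered) {
    if (d.material !== current) {
      switches++; // e.g. switching shader program and blend state
      current = d.material;
    }
  }
  return switches;
}

const frame = [
  { id: "building-1", material: "textured" },
  { id: "fire-1", material: "particles" },
  { id: "building-2", material: "textured" },
  { id: "fire-2", material: "particles" },
];
console.log(countMaterialSwitches(frame));                    // 4
console.log(countMaterialSwitches(orderForRendering(frame))); // 2
```

Drawing the particle pass last also means the additive particles are composited over the textured scene that is already in the framebuffer.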

The story of the culling optimization is not over, though. 150 draw calls per frame would be good enough if I could guarantee there couldn't ever be more.

Spoiler: there could be more.

At first, the culling only applied to entities behind the player or beyond the edges of their peripheral vision. This was good enough because at the time the player couldn't really move far from the center of the scene.

Once I implemented locomotion, however, it became possible to move away from the center of the scene, turn around, and see ALL the buildings. I was back at 300+ draw calls per frame! The rendering performance was bad again.

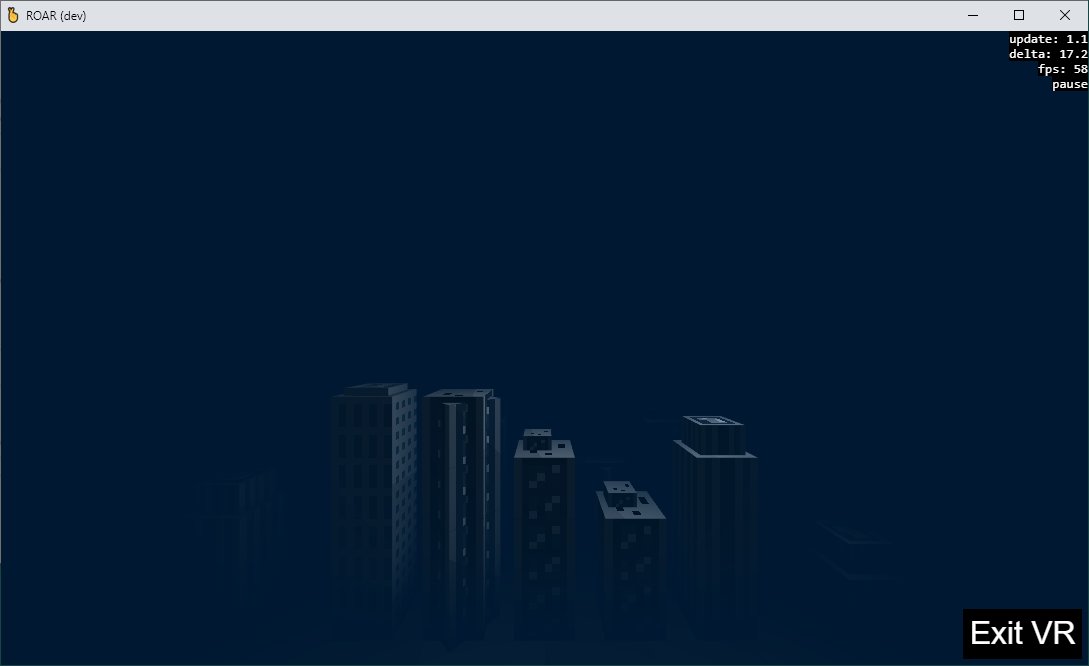

The solution was to use the oldest trick in 3D programming: fog! I also decoupled the camera's far distance from the fog distance so that missiles launched from far away are still rendered.

Thanks to the fog, I can turn off rendering of buildings fairly close to the player that would otherwise be in plain sight. The number of draw calls is now usually well under 100!
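One way to express the decoupled distances is a per-entity draw-distance check: buildings are culled where the fog reaches full density, while other entities such as missiles are limited only by the camera's larger far distance. This is a sketch under assumptions: the `culledByFog` flag, the function name, and both distances are hypothetical.

```javascript
// Hypothetical distance culling. Buildings are culled at the fog's end
// distance (fully fogged there anyway); other entities, such as missiles,
// are culled only at the camera's much larger far distance.
const FOG_END = 30;     // assumed; fog is fully opaque beyond this
const CAMERA_FAR = 200; // assumed; kept larger than FOG_END

function isWithinDrawDistance(entity, cameraPos) {
  const dx = entity.position[0] - cameraPos[0];
  const dy = entity.position[1] - cameraPos[1];
  const dz = entity.position[2] - cameraPos[2];
  const distSq = dx * dx + dy * dy + dz * dz;
  const limit = entity.culledByFog ? FOG_END : CAMERA_FAR;
  // Compare squared distances to avoid a square root per entity.
  return distSq <= limit * limit;
}

const camera = [0, 1.6, 0];
isWithinDrawDistance({ position: [0, 0, -50], culledByFog: true }, camera);  // false
isWithinDrawDistance({ position: [0, 0, -50], culledByFog: false }, camera); // true
```

Culling at the distance where the fog becomes fully opaque keeps the cut invisible to the player; in practice this check would be combined with the frustum test, so an entity is drawn only if it passes both.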

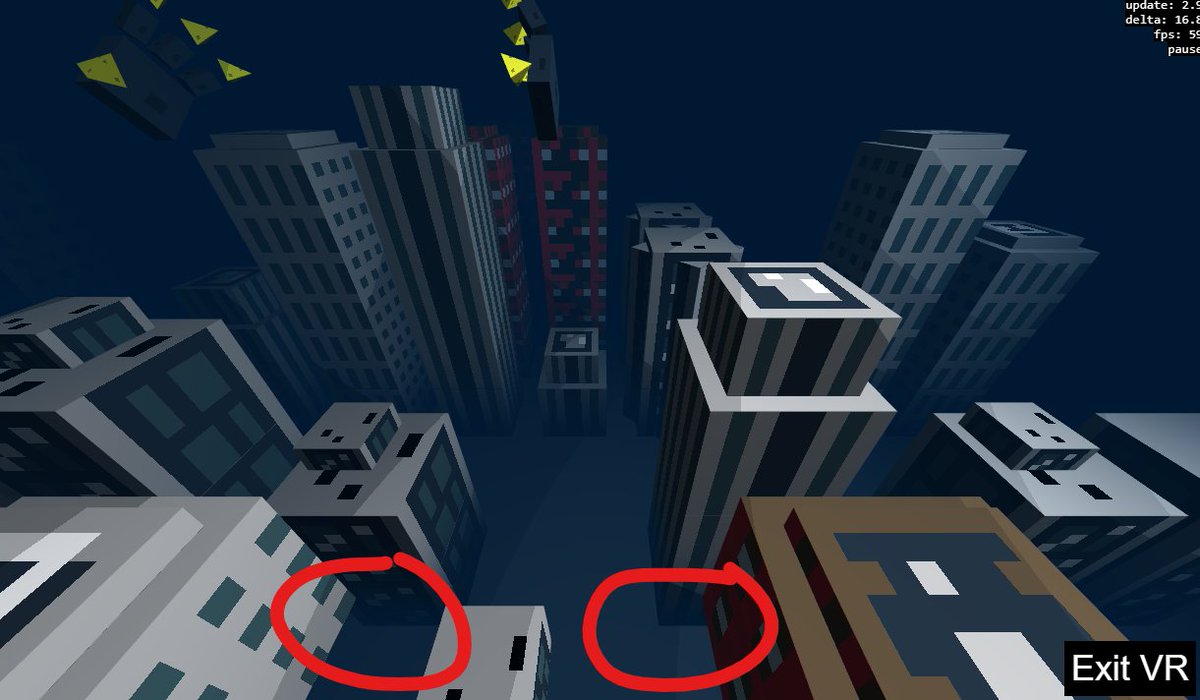

The culling system still isn't perfect: it only considers objects' positions rather than their bounding boxes. Because of this, you can sometimes see objects at the edges of the screen disappear too early. Cf. these floating buildings:

To sum up: I was able to achieve very good performance on the Oculus Quest thanks to two optimizations:

1. a culling system combined with the fog, and

2. a control system for fire which slows down the release of new particles and puts the fire out completely after some time.

PS. I used the OVR Metrics Tool to monitor performance on the Oculus Quest during most of the development. It displays an overlay with performance measurements on the screen; it's super helpful!

https://developer.oculus.com/documentation/tools/tools-ovrmetricstool/