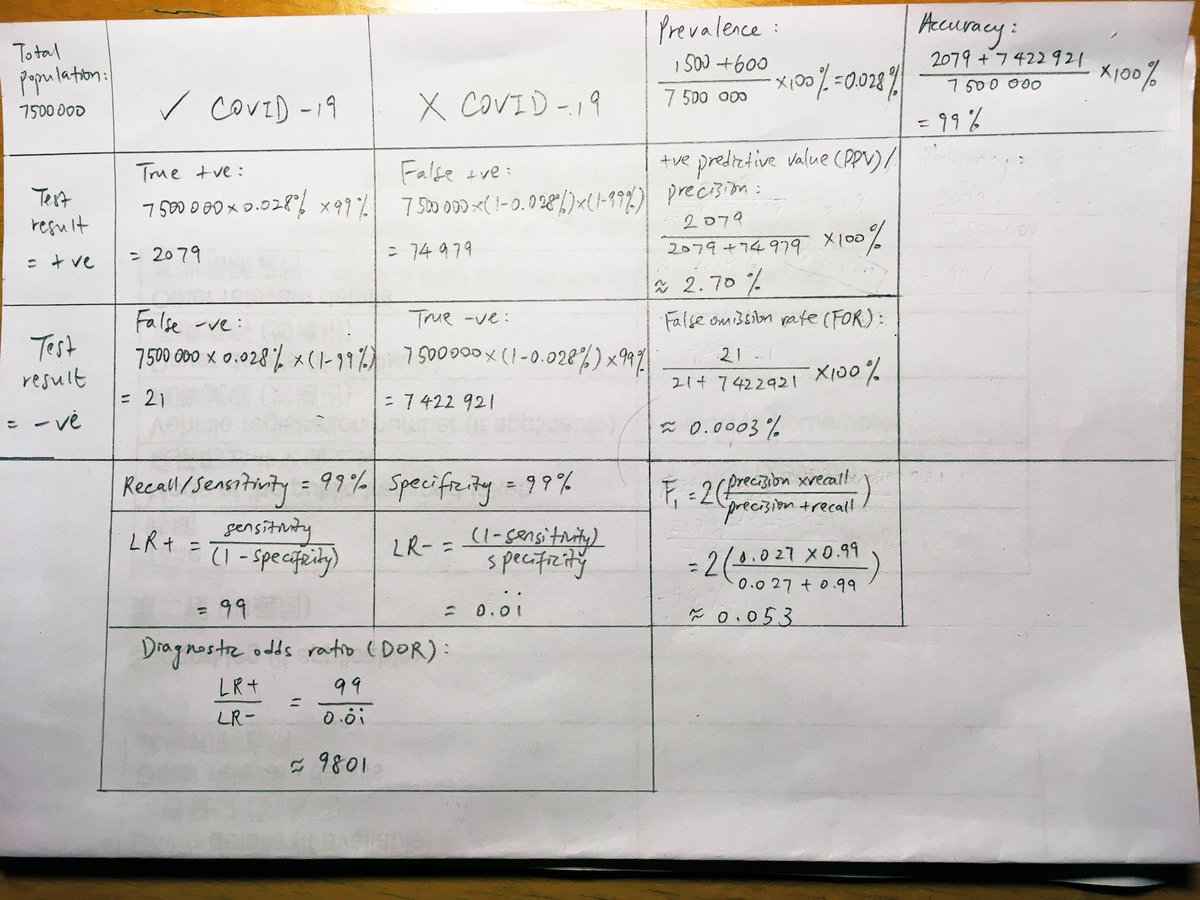

So the HK gov has said that there could be 1500 silent COVID carriers in town and that a whole-of-population mass screening scheme will effectively identify them. The grid above is one that anyone can replicate to predict the outcome of the gov’s test and evaluate its performance.

The prevalence is very low in HK. It is calculated from the gov’s claim of 1500 silent cases within the population, plus a (pessimistically) predicted 600 additional active cases at the time of the test: 2100 carriers out of roughly 7.5 million people, or about 0.028%. This affects how many of the +ve results will be false +ve’s.
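A minimal sketch of the prevalence arithmetic. The population of 7,500,000 is an assumption: the thread doesn’t state it, but it is what the thread’s 74,979 false +ve’s at 99% specificity imply.

```python
# Minimal sketch: prevalence implied by the thread's figures.
# ASSUMPTION: a tested population of 7,500,000 (not stated in the thread;
# it is what 74,979 false +ve's at 99% specificity imply).
POPULATION = 7_500_000
SILENT_CARRIERS = 1_500      # the gov's estimate
EXTRA_ACTIVE_CASES = 600     # pessimistic addition at the time of the test

carriers = SILENT_CARRIERS + EXTRA_ACTIVE_CASES
prevalence = carriers / POPULATION

print(f"carriers   = {carriers}")        # carriers   = 2100
print(f"prevalence = {prevalence:.3%}")  # prevalence = 0.028%
```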

Assuming that the kit used (made by BGI) has a sensitivity (the ability to correctly identify +ve cases) and a specificity (the ability to correctly identify -ve cases) of 99% each, we can calculate how many true/false +/-ve’s our modelled whole-of-population screening will produce.
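The grid boils down to four expected counts. Here’s a rough sketch of how to rebuild them, assuming the same 7.5 million population and 2100 carriers; `confusion_matrix` is a hypothetical helper name, not something from the gov or BGI. `Fraction` keeps the arithmetic exact, so no floating-point rounding creeps into the counts.

```python
from fractions import Fraction

# ASSUMPTIONS: 7.5M people tested, 2,100 carriers, and the kit's quoted
# 99% sensitivity and specificity.
POPULATION = 7_500_000
CARRIERS = 2_100
SENSITIVITY = Fraction(99, 100)
SPECIFICITY = Fraction(99, 100)


def confusion_matrix(population, carriers, sensitivity, specificity):
    """Expected true/false +ve/-ve counts if everyone is tested once."""
    non_carriers = population - carriers
    tp = carriers * sensitivity        # carriers correctly flagged
    fn = carriers - tp                 # carriers missed
    tn = non_carriers * specificity    # healthy people correctly cleared
    fp = non_carriers - tn             # healthy people wrongly flagged
    return {"TP": tp, "FN": fn, "TN": tn, "FP": fp}


counts = confusion_matrix(POPULATION, CARRIERS, SENSITIVITY, SPECIFICITY)
for name, value in counts.items():
    print(name, value)  # expected counts; whole numbers for these inputs
```

With these inputs it prints TP 2079, FN 21, TN 7422921 and FP 74979. Other inputs may give fractional *expected* counts, which is fine for a model.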

In the model, a total of 77,058 people will test +ve, and only ≈2.70% of these are true +ve’s. This means that the test will have a positive predictive value (PPV), or precision, of ≈2.70%, which is very low, and that ≈97.30% of the +ve results (that’s 74,979 people) will be false.
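A quick check of the PPV arithmetic, using the modelled counts from the thread (the false +ve count assumes a tested population of about 7.5 million):

```python
# Modelled counts from the thread (FP assumes ~7.5M people tested).
TP, FP = 2_079, 74_979

positives = TP + FP
ppv = TP / positives   # precision: share of +ve results that are real
fdr = FP / positives   # false discovery rate: share that are false

print(positives)               # 77058
print(f"PPV = {ppv:.2%}")      # PPV = 2.70%
print(f"FDR = {fdr:.2%}")      # FDR = 97.30%
```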

We’re also interested in the false -ve’s. The model predicts 21 of these, a tiny number compared with the roughly 7.42 million -ve results we’ll get. This means that the test has a negligible false omission rate (not shown in my grid) and an NPV of ≈100%.
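The same check for the -ve side, as a small sketch using the thread’s modelled counts (the true -ve count again assumes ~7.5 million people tested). The false omission rate is just 1 − NPV:

```python
# Modelled counts from the thread (TN assumes ~7.5M people tested).
TN, FN = 7_422_921, 21

negatives = TN + FN
npv = TN / negatives                  # share of -ve results that are real
false_omission_rate = FN / negatives  # share of -ve results that are carriers

print(negatives)                           # 7422942
print(f"NPV = {npv:.4%}")                  # NPV = 99.9997%
print(f"FOR = {false_omission_rate:.5%}")  # FOR = 0.00028%
```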

A few relationships between the numbers/metrics can be seen:

1) The low prevalence has caused the low PPV, which indicates how well the test confirms a +ve case of COVID;

2) The test’s -ve results are way more reliable than its +ve ones (a very high NPV), because only very few people are ill;

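3) The 99% accuracy (and I hope this interpretation is correct, because accuracy is always tricky) comes from the very high probability of getting true -ve’s at low prevalence, while the +ve results, where this kit performs poorly in terms of precision, are proportionately rare. Accuracy is a prevalence-weighted average of sensitivity and specificity, so at low prevalence it is driven almost entirely by specificity; and because both are 99% here, it comes out at exactly 99% whatever the prevalence.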
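To see why accuracy is so uninformative here, it helps to write it as a weighted average. A minimal sketch; the 70% sensitivity in the last line is purely hypothetical, included only to show how low prevalence can hide a weak +ve side:

```python
def accuracy(prevalence, sensitivity, specificity):
    """Accuracy = P(carrier) * sensitivity + P(healthy) * specificity."""
    return prevalence * sensitivity + (1 - prevalence) * specificity


# ASSUMPTION: 2,100 carriers out of ~7.5M people.
p = 2_100 / 7_500_000

print(f"{accuracy(p, 0.99, 0.99):.2%}")    # 99.00%
# Equal sensitivity and specificity: prevalence drops out entirely.
print(f"{accuracy(0.5, 0.99, 0.99):.2%}")  # 99.00%
# HYPOTHETICAL weaker kit (70% sensitivity): accuracy barely moves.
print(f"{accuracy(p, 0.70, 0.99):.2%}")    # 98.99%
```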

Recall tolerates bycatch to avoid missing any +ve’s; precision would rather miss some so that we get only true +ve’s. F1 measures the balance between them. The low F1 here (≈5.25%) means that either there’s too much bycatch (our problem here) or the kit is missing more cases than it should.
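F1 is the harmonic mean of precision and recall, so one terrible value drags it down no matter how good the other is. A small sketch with the thread’s modelled counts (FP assumes ~7.5 million people tested):

```python
# Modelled counts from the thread (FP assumes ~7.5M people tested).
TP, FP, FN = 2_079, 74_979, 21

precision = TP / (TP + FP)   # same as PPV
recall = TP / (TP + FN)      # same as sensitivity
f1 = 2 * precision * recall / (precision + recall)  # harmonic mean

print(f"precision = {precision:.2%}")  # precision = 2.70%
print(f"recall    = {recall:.2%}")     # recall    = 99.00%
print(f"F1        = {f1:.2%}")         # F1        = 5.25%
```

Equivalently, F1 = 2·TP / (2·TP + FP + FN), which makes it obvious that the 74,979 false +ve’s dominate.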

(The likelihood ratios (LR) and the DOR (the ratio of a carrier’s odds of being correctly diagnosed to a healthy person’s odds of being misdiagnosed) are independent of prevalence and depend only on the kit’s sensitivity and specificity, i.e. its manufacturing spec. They’re less relevant to us, though, as we’re modelling a given prevalence.)
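For completeness, here’s a sketch of the LRs and DOR from the kit’s quoted 99%/99%, plus how prevalence re-enters: the PPV is just the pre-test odds multiplied by LR+ and converted back to a probability. The 2100 vs 7,497,900 split assumes a population of ~7.5 million.

```python
from fractions import Fraction

# The kit's quoted figures, as used in the thread.
sensitivity = Fraction(99, 100)
specificity = Fraction(99, 100)

lr_pos = sensitivity / (1 - specificity)  # LR+: odds multiplier after a +ve
lr_neg = (1 - sensitivity) / specificity  # LR-: odds multiplier after a -ve
dor = lr_pos / lr_neg                     # diagnostic odds ratio

print(lr_pos, lr_neg, dor)                # 99 1/99 9801

# Prevalence only enters through the pre-test odds.
# ASSUMPTION: 2,100 carriers vs 7,497,900 non-carriers (~7.5M people).
pre_test_odds = Fraction(2_100, 7_497_900)
post_test_odds = pre_test_odds * lr_pos   # carrier odds after a +ve result
ppv = post_test_odds / (1 + post_test_odds)
print(f"PPV = {float(ppv):.2%}")          # PPV = 2.70%
```

An LR+ of 99 sounds impressive, but multiplying tiny odds by 99 still leaves small odds.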

So in this theoretical screening, 74,979 false +ve’s will be generated; we’ll correctly capture 2079 carriers and inevitably let 21 loose (every test produces false -ve’s, though some do so more often than others) to become new silent cases unless they show symptoms.

The 75k false +ve’s will need further diagnostic tests (and resources). The 21 carriers we send back into the community should pose a risk (Rt-adjusted?) no higher than what we’re getting now from our daily cases, and probably lower than the risk of subjecting medics to people’s sneeze/gag reflexes.

More info:

A spreader (symptomatic or asymptomatic) doesn’t stay infectious indefinitely. We hear the gov say 1500 silent cases, but 1500 starting when? By the time they get tested, they might no longer carry enough virus to infect others (which happens by roughly the 14th day after infection).

The 1500 silent carriers have been among us all this time, yet we’re coming out of the 3rd wave all the same. The prevalence will only go lower day by day, making the test even less effective. HKers must think hard about the *point* of the scheme and whether it puts more people at risk:

Is it truly necessary to bring healthy individuals (both those doing and those taking the test) into central contact with 2100 carriers, and to overwhelm the medical system with 74,979 false +ve’s, just to reduce the number of silent cases from 1500 down to 21 (plus however many new infections result from this)?
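The claim that a falling prevalence makes the test even less effective can be checked with Bayes’ rule. A rough sketch: the 7.5 million population is an assumption, and the carrier counts below 2100 are hypothetical snapshots of a shrinking pool of silent cases, not figures from the gov.

```python
def ppv(prevalence, sensitivity=0.99, specificity=0.99):
    """Bayes' rule: probability that a +ve result is a true carrier."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)


# ASSUMPTION: ~7.5M people tested; counts below 2,100 are HYPOTHETICAL.
POPULATION = 7_500_000
results = []
for carriers in (2_100, 1_500, 1_000, 500, 100):
    p = carriers / POPULATION
    results.append(ppv(p))
    print(f"{carriers:>5} carriers: prevalence {p:.4%}, PPV {results[-1]:.2%}")
```

Every step down in prevalence lowers the PPV, so an even larger share of the +ve results will be false.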
