@pyliu47 and I have recently updated the #covidcompare framework (for COVID-19 mortality forecasting model predictive validity comparisons) in a few ways.

🧵 Thread: https://www.medrxiv.org/content/10.1101/2020.07.13.20151233v4

1. We added a new model from the @USC Data Science Lab: SIKJalpha. The authors went to great lengths to make their code and data #openaccess, transparent and reproducible, which we greatly appreciate.

Their forecasts can be found here: https://github.com/scc-usc/ReCOVER-COVID-19

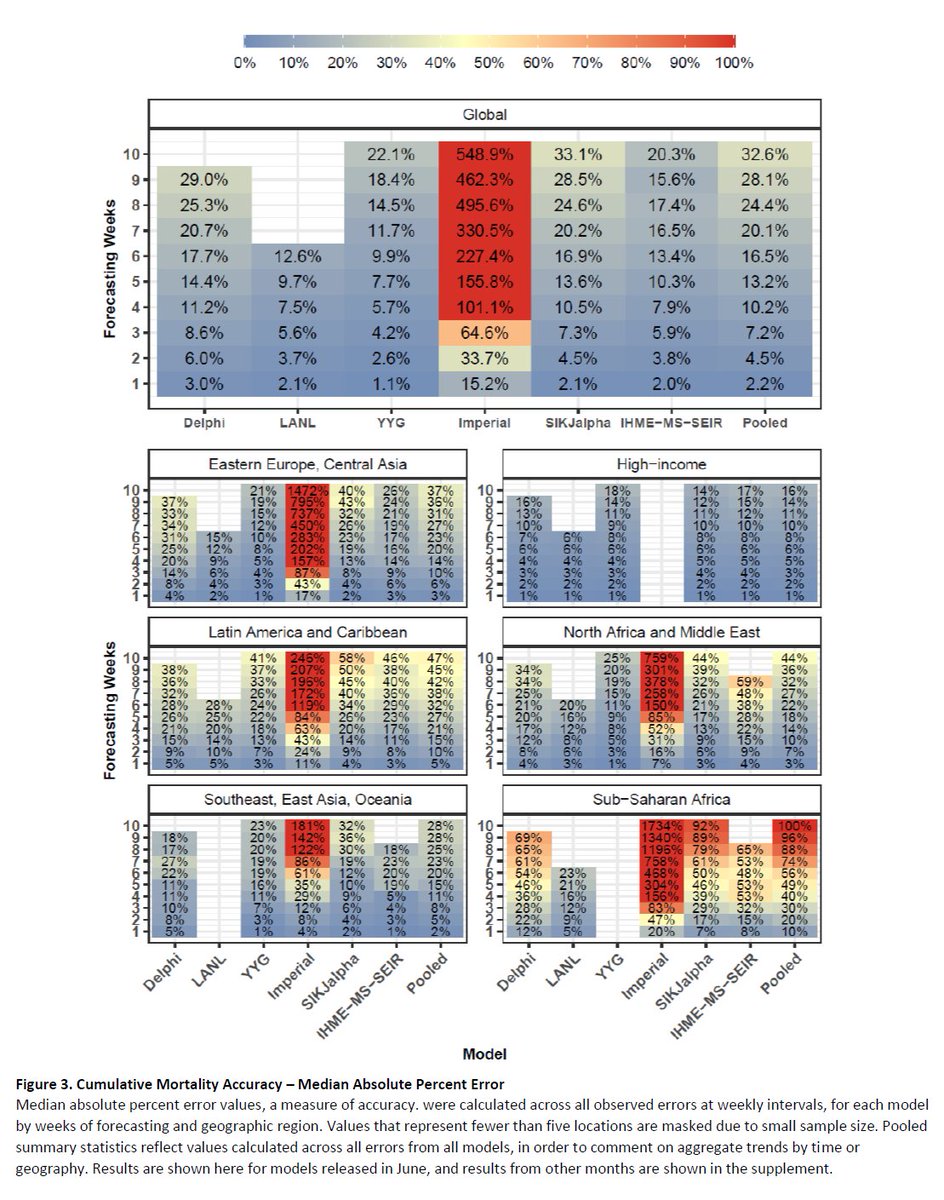

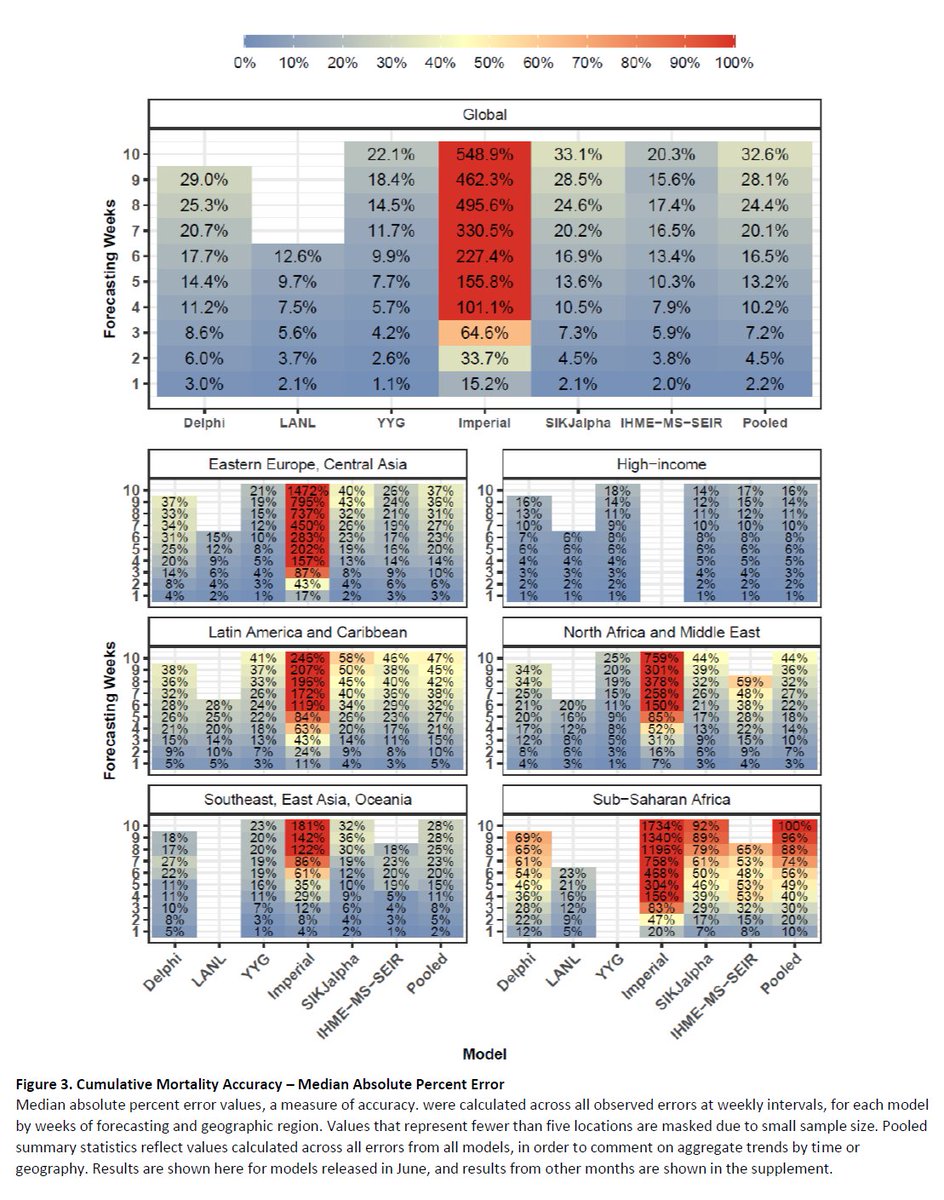

2. Given wide interest in longer-term assessments (past 6 weeks of extrapolation), we also extended the summary statistics to reflect a greater number of weeks of forecasting.

Many of the top models continue to have similar predictive performance, varying slightly by month.

For example, we now show results from models in June through 10 weeks of extrapolation. Here are the global median average percent errors at 10 weeks:

@IHME_UW: 20.3%

@youyanggu: 22.1%

@USC SIKJalpha: 33.1%

@MIT Delphi: 29.0% (at 9 wks)

@Imperial_IDE: 548.9%

Pooled: 32.6%
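For readers who want to see how a summary number like the ones above could be computed, here is a minimal sketch. It assumes the statistic is the median, across locations, of the absolute percent error between forecast and observed cumulative deaths at a fixed horizon. That reading, the function name, and the toy numbers are all assumptions for illustration and are not taken from the covidcompare code.

```python
from statistics import median


def median_absolute_percent_error(forecast, observed):
    """Median across locations of 100 * |forecast - observed| / observed.

    Both arguments are dicts mapping a location name to cumulative deaths.
    Locations missing from `observed`, or with zero observed deaths, are
    skipped (an assumption made here to avoid division by zero).
    """
    errors = [
        100 * abs(forecast[loc] - observed[loc]) / observed[loc]
        for loc in forecast
        if loc in observed and observed[loc] > 0
    ]
    return median(errors)


# Hypothetical values for three made-up locations, NOT real forecasts.
forecast = {"A": 110.0, "B": 180.0, "C": 450.0}
observed = {"A": 100.0, "B": 200.0, "C": 300.0}

mape = median_absolute_percent_error(forecast, observed)
print(f"Median absolute percent error: {mape:.1f}%")
```

Taking the median rather than the mean keeps one badly missed location from dominating the summary.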

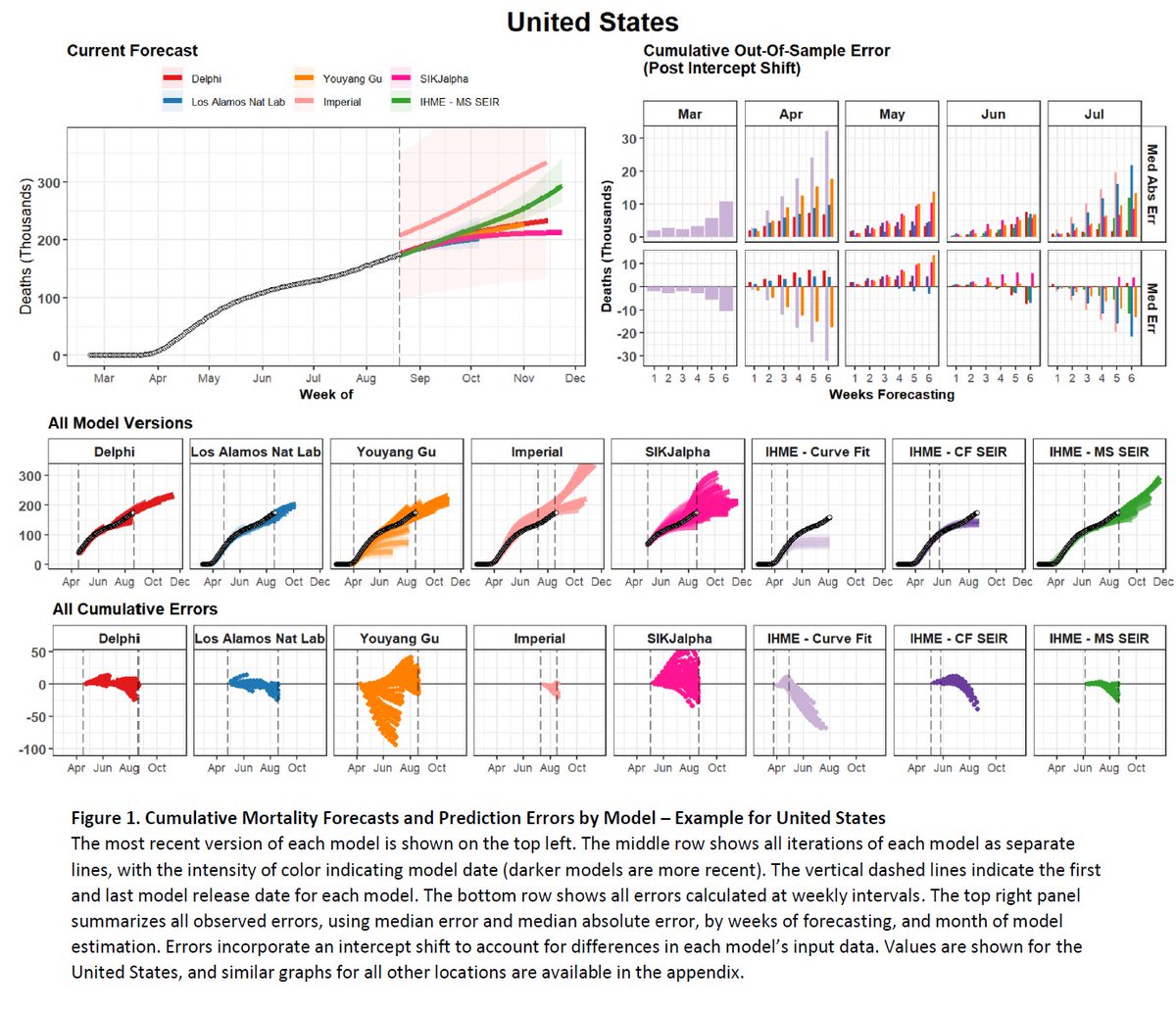

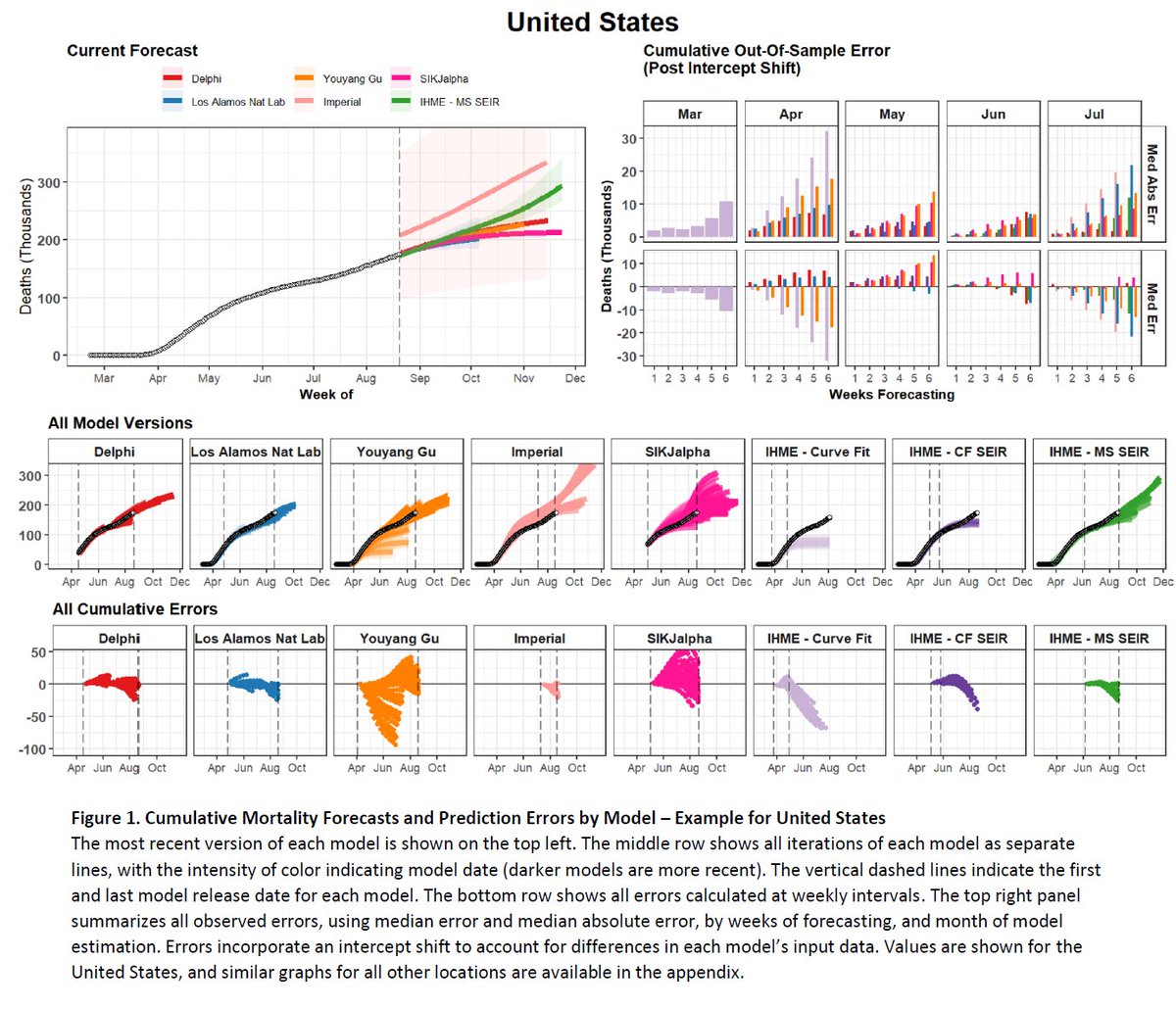

This is what each model has been recently saying about US trajectories. There is less agreement than a few weeks ago:

@IHME_UW: 310K by 12/1

@USC SIKJalpha: 213K by 11/29

@MIT Delphi: 233K by 11/15

@Imperial_IDE: 334K by 11/14

@YYG: 227K by 11/1

@LosAlamosNatLab: 202K by 10/6

New results can be found on our GitHub: https://github.com/pyliu47/covidcompare

And in an updated version of the preprint: https://www.medrxiv.org/content/10.1101/2020.07.13.20151233v4
