Tonight, we'll discuss some of the little-known, low-level optimizations made to async/await in .NET Core 3.x! By now, most of you know what asynchronous programming is; if not, take a look at https://docs.microsoft.com/en-us/dotnet/csharp/async #dotnet

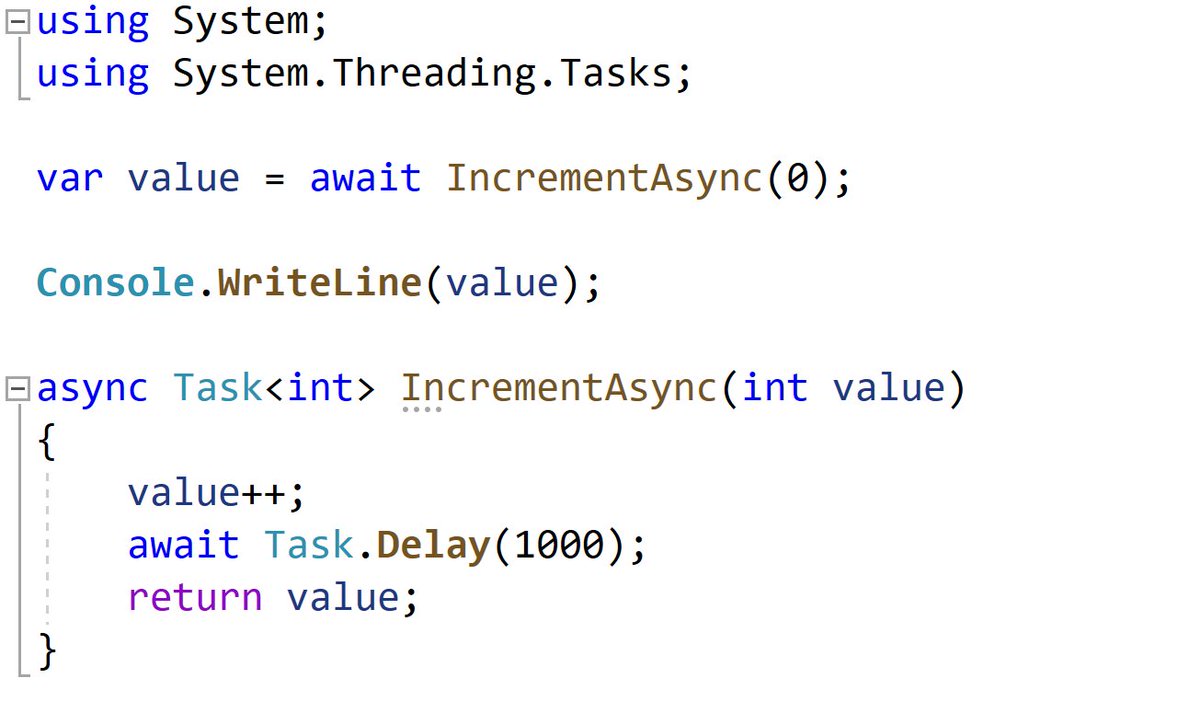

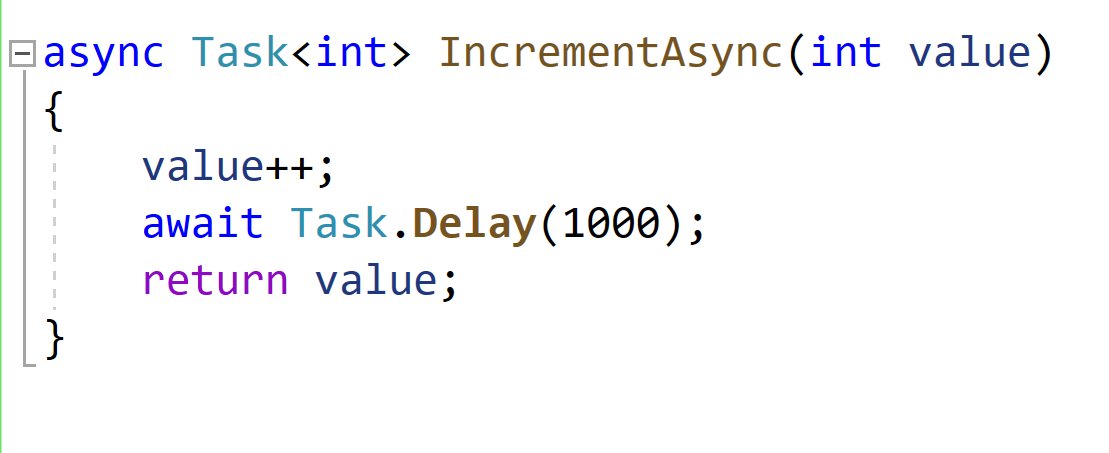

As a primer, remember that async/await lets you write asynchronous logic in a sequential manner. The async/await keywords originated in C# 5.0, 8 years ago, though there were inspirations in other languages that led to this final syntax. I get sad when people think JavaScript invented async/await ;)

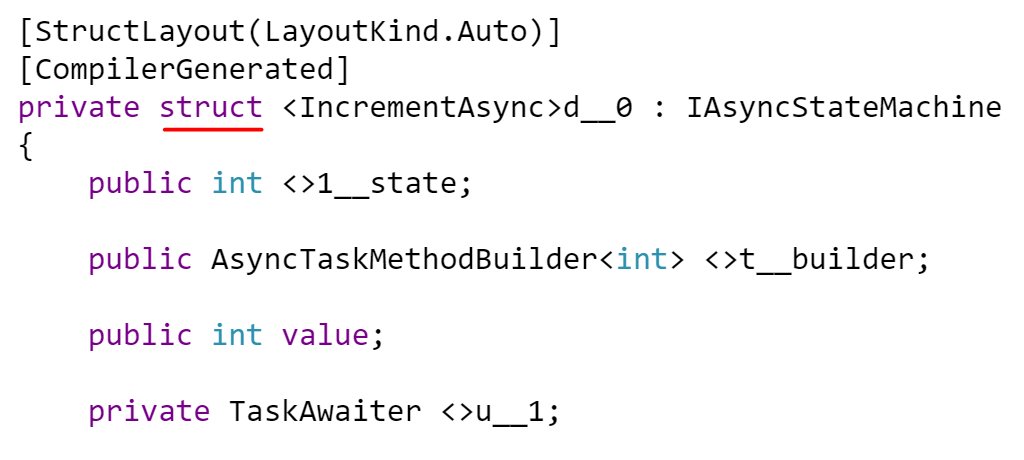

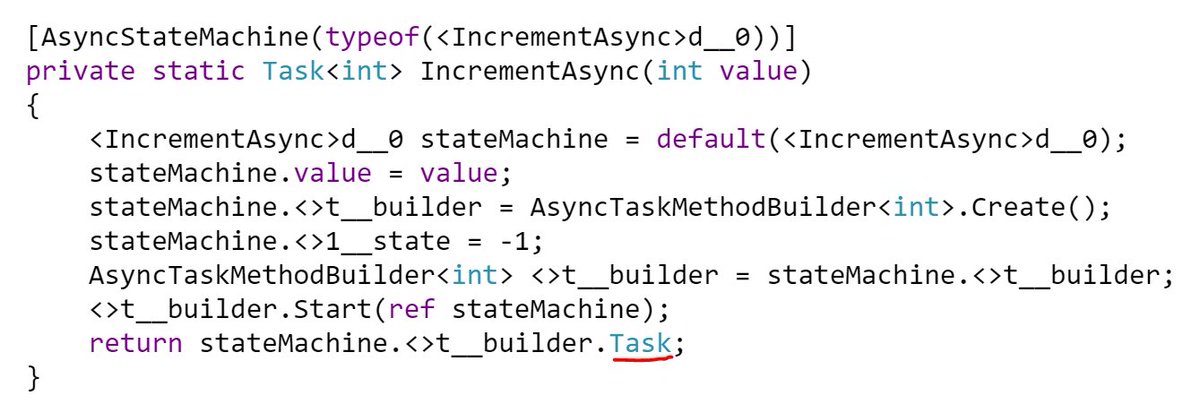

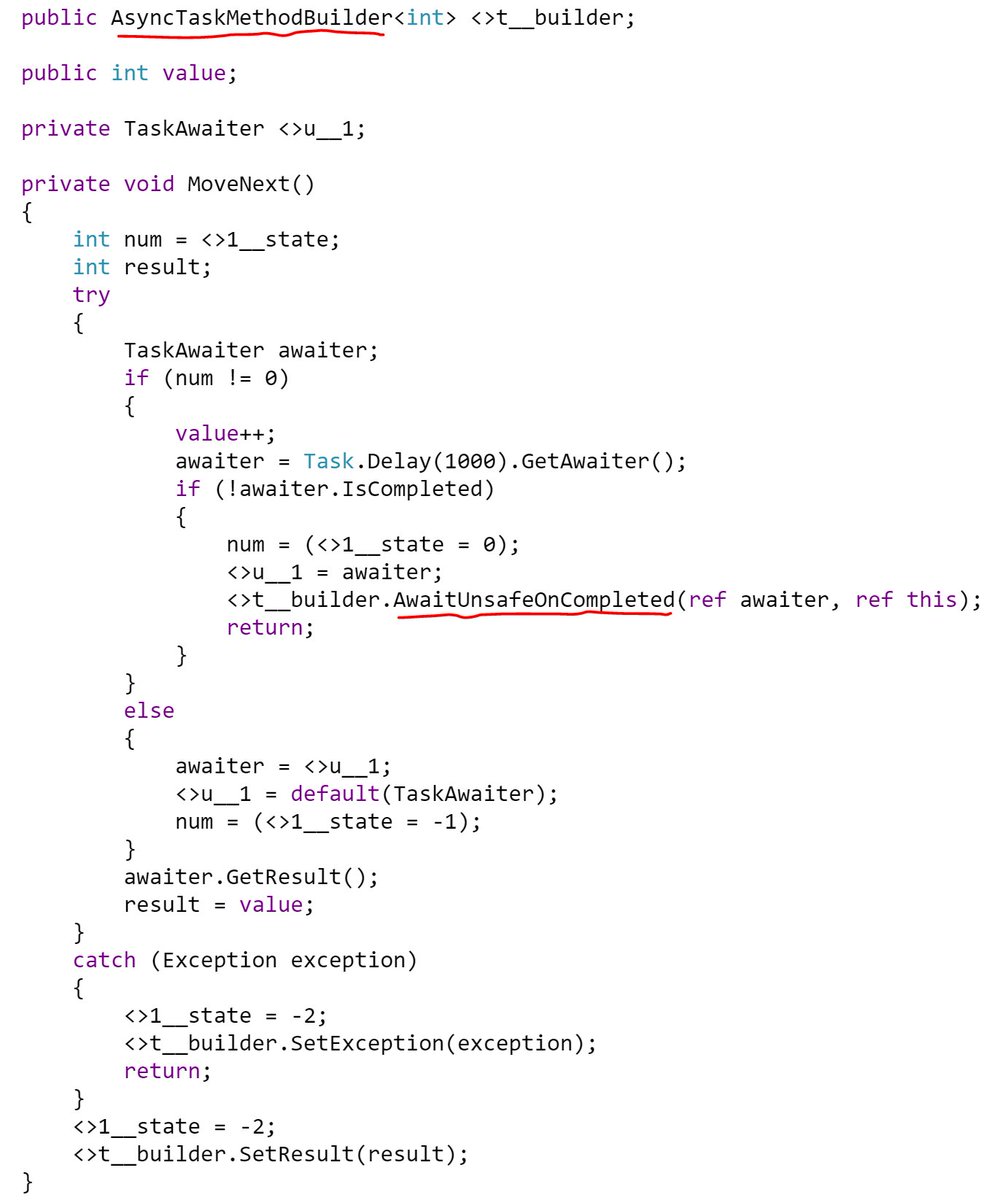
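A minimal sketch of that sequential style. `FetchValueAsync` is a hypothetical stand-in for real I/O, simulated here with `Task.Delay`:

```csharp
using System.Threading.Tasks;

public static class SequentialAsync
{
    // Hypothetical stand-in for an I/O call (database, HTTP, socket...).
    private static async Task<int> FetchValueAsync(int value)
    {
        await Task.Delay(10); // Waits without blocking a thread.
        return value;
    }

    // Reads top to bottom like synchronous code, but each await can
    // release the thread while the operation is in flight.
    public static async Task<int> SumAsync()
    {
        int a = await FetchValueAsync(1);
        int b = await FetchValueAsync(2);
        return a + b;
    }
}
```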

To see what the compiler generates, we decompile the async state machine back into C# to compare (https://sharplab.io/#v2:D4AQTAjAsAUCAMACEECsBuWsQGZlkQGFEBvWRC5CANmQA5lqAeASwDsAXAPkQEk2AxgCcApgFsRnAIIBnAJ6CAFOw6IAbgEMANgFcRASnKUyMSmfXa9AaiuZT5iiACcjAHQAREVo1zFEeAH6dg6OAOwWuiLBlAC+WDAxQA==). The state machine keeps track of local state and all of the required context to pause and resume at each await point.

For my non-.NET followers, Task = Promise. It's the return type used to represent a value that will be produced in the future.
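To make the analogy concrete: `TaskCompletionSource<T>` is roughly the .NET counterpart of constructing a promise by hand. The producer completes it later and consumers await the `Task<T>` it exposes. `PromiseLike.ProduceLater` is a hypothetical helper for illustration:

```csharp
using System.Threading.Tasks;

public static class PromiseLike
{
    // Returns a Task<int> right away; the value is produced later by someone else.
    public static Task<int> ProduceLater(int value)
    {
        var tcs = new TaskCompletionSource<int>(TaskCreationOptions.RunContinuationsAsynchronously);

        // The "producer": completes the task from a thread-pool thread after a delay.
        Task.Run(async () =>
        {
            await Task.Delay(10);
            tcs.SetResult(value); // Like resolving a promise.
        });

        return tcs.Task;
    }
}
```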

The state machine starts off as a struct to avoid heap allocations if the method runs completely synchronously. Because this method returns a Task<int>, though, it still needs to allocate a Task<int> on the heap to hold the result.

Allocations like these add up, causing more GC pressure, which leads to poor application performance. To reduce these allocations when a method completes synchronously, we introduced ValueTask. It allows async methods that complete synchronously to avoid allocating on the heap.

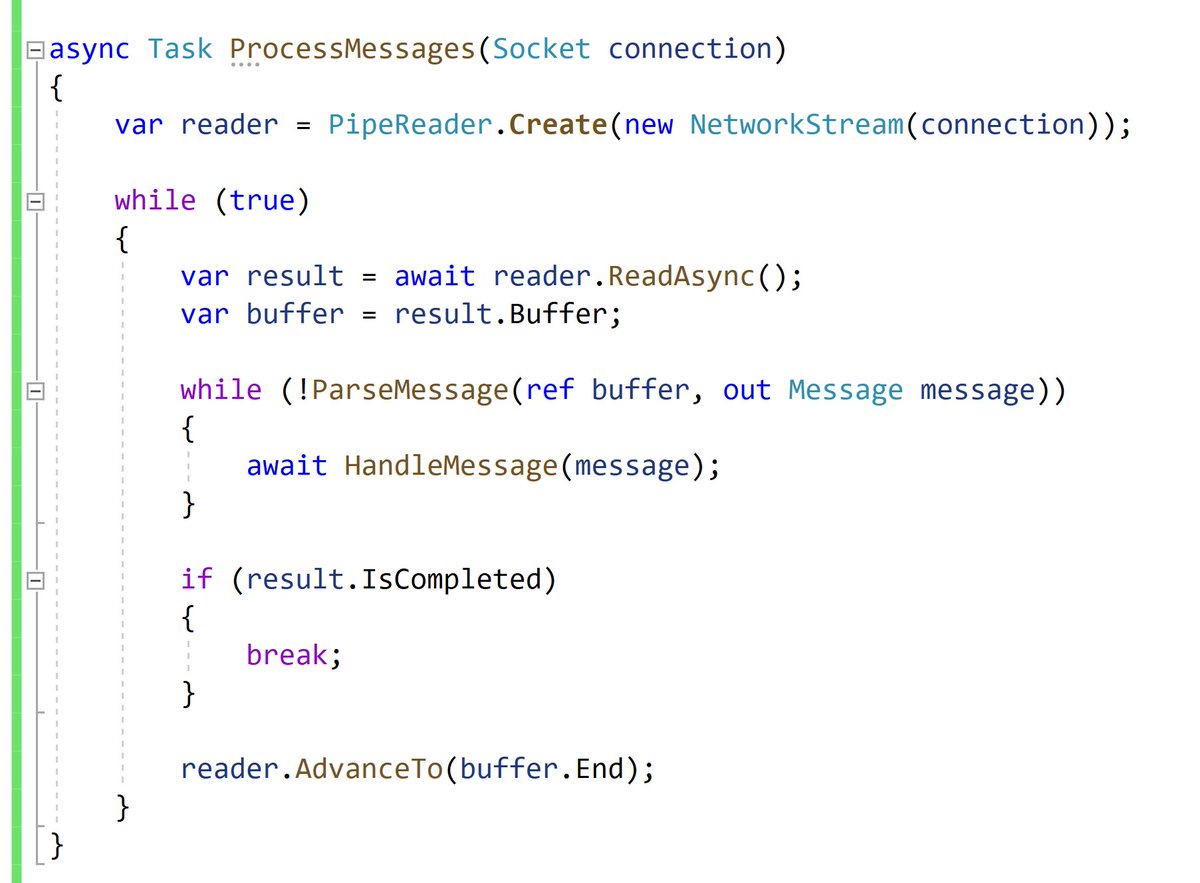
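A minimal sketch of where ValueTask pays off, assuming a hypothetical cache in front of a slow lookup (`CachedLookup` and `LoadFromSourceAsync` are illustrative names). On a cache hit the async method completes synchronously and the result is stored inline in the returned `ValueTask<int>`; only a miss actually goes asynchronous:

```csharp
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical cache; not thread-safe, a sketch only.
public sealed class CachedLookup
{
    private readonly Dictionary<string, int> _cache = new Dictionary<string, int>();

    // Stand-in for real I/O.
    private static async Task<int> LoadFromSourceAsync(string key)
    {
        await Task.Delay(10);
        return key.Length;
    }

    public async ValueTask<int> GetAsync(string key)
    {
        if (_cache.TryGetValue(key, out int cached))
        {
            // Synchronous completion: no Task<int> is allocated.
            return cached;
        }

        // Cache miss: this path really does go asynchronous.
        int value = await LoadFromSourceAsync(key);
        _cache[key] = value;
        return value;
    }
}
```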

But what about allocations in the asynchronous case? Why do we care? Consider the code below, which parses an incoming payload off a socket and handles each message.

This is a long-lived operation that runs for as long as the connection lasts. We want to reuse as much memory as we can because a large number of these will be running concurrently (see the C10K problem: https://en.wikipedia.org/wiki/C10k_problem).
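A rough sketch of such a per-connection loop. It assumes newline-delimited messages and a hypothetical `handleMessage` callback; the stream could be a `NetworkStream` wrapping a connected `Socket`. The read buffer and decoder are allocated once per connection, though each dispatched message still allocates a string:

```csharp
using System;
using System.IO;
using System.Text;
using System.Threading;
using System.Threading.Tasks;

public static class ConnectionLoop
{
    public static async Task ProcessAsync(Stream stream, Action<string> handleMessage,
                                          CancellationToken cancellationToken = default)
    {
        var buffer = new byte[4096];                  // Reused for every read on this connection.
        Decoder decoder = Encoding.UTF8.GetDecoder(); // Carries partial UTF-8 sequences across reads.
        var chars = new char[Encoding.UTF8.GetMaxCharCount(buffer.Length)];
        var pending = new StringBuilder();            // Received text not yet dispatched.

        while (true)
        {
            // Stream.ReadAsync(Memory<byte>, CancellationToken) returns ValueTask<int>.
            int read = await stream.ReadAsync(buffer.AsMemory(), cancellationToken);
            if (read == 0)
            {
                return; // Peer closed the connection; a trailing partial message is dropped.
            }

            int charCount = decoder.GetChars(buffer, 0, read, chars, 0);
            pending.Append(chars, 0, charCount);

            // Dispatch every complete line and keep the trailing partial one.
            int newline;
            while ((newline = IndexOf(pending, '\n')) >= 0)
            {
                handleMessage(pending.ToString(0, newline));
                pending.Remove(0, newline + 1);
            }
        }
    }

    private static int IndexOf(StringBuilder builder, char value)
    {
        for (int i = 0; i < builder.Length; i++)
        {
            if (builder[i] == value)
            {
                return i;
            }
        }
        return -1;
    }
}
```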

Let's look at how we optimized each of these allocations for long-running asynchronous operations. First, the easy part: let's avoid an allocation per socket read. You can read this epic post by Stephen Toub (https://devblogs.microsoft.com/dotnet/understanding-the-whys-whats-and-whens-of-valuetask/), but TL;DR: we gave ValueTask superpowers.

It's now possible to reuse state to remove allocations for repeated, non-overlapping operations. When you issue a Socket.ReceiveAsync, there's a new overload that returns ValueTask<int> instead of Task<int>, but there's more...
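The "superpowers" come from `IValueTaskSource<T>`: a `ValueTask<T>` can be backed by a reusable object instead of a fresh `Task<T>`. A minimal sketch, assuming .NET Core 3.0+ for `ManualResetValueTaskSourceCore<T>`. `ReusableSignal` is a hypothetical type that supports one outstanding operation at a time, matching the non-overlapping case above:

```csharp
using System;
using System.Threading.Tasks;
using System.Threading.Tasks.Sources;

// Hypothetical reusable source: every WaitAsync hands out a ValueTask<int>
// backed by this same object, so repeated waits allocate nothing new.
// Not safe for overlapping operations.
public sealed class ReusableSignal : IValueTaskSource<int>
{
    private ManualResetValueTaskSourceCore<int> _core; // Mutable struct: must not be readonly.

    public ReusableSignal()
    {
        _core.RunContinuationsAsynchronously = true;
    }

    // The version token lets the core detect awaits of a stale operation.
    public ValueTask<int> WaitAsync() => new ValueTask<int>(this, _core.Version);

    // Completes the current operation and resumes whoever awaited it.
    public void Complete(int value) => _core.SetResult(value);

    int IValueTaskSource<int>.GetResult(short token)
    {
        try
        {
            return _core.GetResult(token);
        }
        finally
        {
            _core.Reset(); // Ready for the next operation; bumps Version.
        }
    }

    ValueTaskSourceStatus IValueTaskSource<int>.GetStatus(short token) => _core.GetStatus(token);

    void IValueTaskSource<int>.OnCompleted(Action<object> continuation, object state,
        short token, ValueTaskSourceOnCompletedFlags flags)
        => _core.OnCompleted(continuation, state, token, flags);
}
```

Calling `Complete` twice without the result being consumed in between throws, which is the price of reusing a single instance.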

Let's go back to the state machine. What happens when you go asynchronous? The struct now needs to live on the heap, since the asynchronous operation is going to be kicked off and the current thread can be used for something else.

When the method goes async (that is, the awaiter isn't completed), there's a call into AwaitUnsafeOnCompleted, passing in the awaiter and the state machine (how often do you see ref this?!)

As an aside, "ref this" avoids copying this giant struct around. The compiler hoists locals into fields of the state machine when they cross an await boundary, so the initial struct can get big, and copying big structs around is expensive.
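A small illustration of what `ref this` buys, using a hypothetical 64-byte struct standing in for a state machine with hoisted locals. Passing by reference hands the callee the original instance (no copy, mutations visible); passing by value copies every field:

```csharp
// Hypothetical stand-in for a state machine whose locals became fields.
public struct LargeState
{
    public long Local1, Local2, Local3, Local4, Local5, Local6, Local7, Local8;

    // "ref this": the callee works on this very instance, no 64-byte copy.
    public void AdvanceByRef() => StateHelpers.Advance(ref this);

    // By value: the whole struct is copied and the callee's change is lost.
    public void AdvanceByValue() => StateHelpers.AdvanceCopy(this);
}

public static class StateHelpers
{
    public static void Advance(ref LargeState state) => state.Local1++;

    public static void AdvanceCopy(LargeState state) => state.Local1++;
}
```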

Each state machine has an Async*MethodBuilder field that's responsible for doing a couple of things:

- Moving the state machine from the stack to the heap (boxing)

- It needs to capture the execution context (remember this!?)

- It needs to give the "awaiter" the continuation. This allows the awaiter to resume execution of the state machine when it's ready.

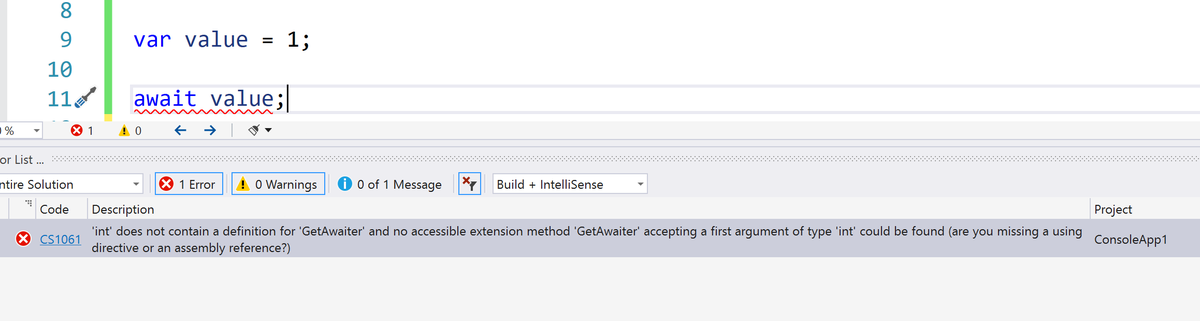
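To make those three jobs concrete, here is a toy box. It is a simplified sketch, not the real AsyncTaskMethodBuilder; `ToyStateMachineBox` and `CountingStateMachine` are hypothetical names:

```csharp
using System.Runtime.CompilerServices;
using System.Threading;

public sealed class ToyStateMachineBox<TStateMachine> where TStateMachine : IAsyncStateMachine
{
    // 1. Boxing: copying the struct into a field of a heap object moves it off the stack.
    private TStateMachine _stateMachine;

    // 2. ExecutionContext captured when the method went async (null if flow is suppressed).
    private readonly ExecutionContext _context;

    public ToyStateMachineBox(ref TStateMachine stateMachine)
    {
        _stateMachine = stateMachine;
        _context = ExecutionContext.Capture();
    }

    // Exposes the heap copy so callers can inspect it.
    public ref TStateMachine StateMachine => ref _stateMachine;

    // 3. The continuation: resume the state machine under the captured context.
    public void MoveNext()
    {
        if (_context == null)
        {
            _stateMachine.MoveNext();
        }
        else
        {
            ExecutionContext.Run(_context,
                s => ((ToyStateMachineBox<TStateMachine>)s)._stateMachine.MoveNext(), this);
        }
    }

    public void AwaitOnCompleted<TAwaiter>(ref TAwaiter awaiter) where TAwaiter : INotifyCompletion
    {
        // Converting the method group to an Action allocates a delegate on every call.
        awaiter.OnCompleted(MoveNext);
    }
}

// A trivial state machine used only to exercise the box.
public struct CountingStateMachine : IAsyncStateMachine
{
    public int Steps;

    public void MoveNext() => Steps++;

    public void SetStateMachine(IAsyncStateMachine stateMachine) { }
}
```

Note that after boxing, the original stack copy and the heap copy are independent; only the heap copy advances.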

Side note: C# allows you to write your own Task-like types (invent your own promise type) and you can make any object awaitable.

Let's talk about awaiters for a minute. In C#, you can make something awaitable by exposing a couple of methods on a type. You can see this by trying to await a number: the compiler looks for a method called GetAwaiter (there are a couple more methods you need to expose).

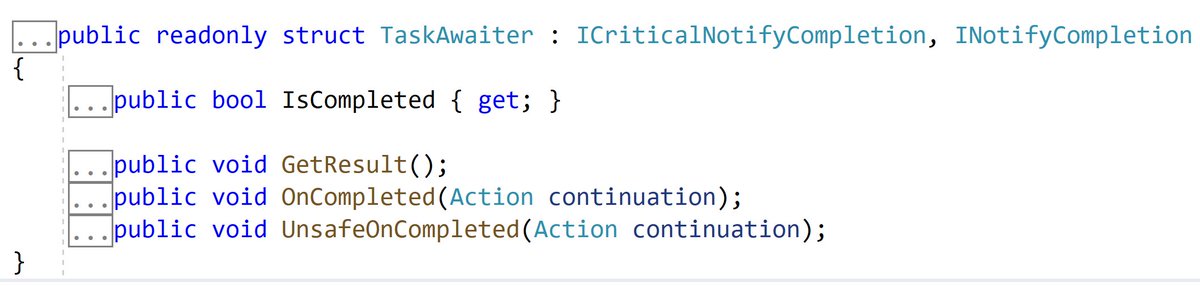
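Because the compiler only looks for a `GetAwaiter` method, even an extension method works. A toy sketch (not a recommended API) in which `await 50` means "wait 50 ms"; `IntAwaitExtensions` and `AwaitNumberDemo` are hypothetical names:

```csharp
using System.Runtime.CompilerServices;
using System.Threading.Tasks;

public static class IntAwaitExtensions
{
    // Makes int awaitable by borrowing Task.Delay's awaiter.
    public static TaskAwaiter GetAwaiter(this int milliseconds)
        => Task.Delay(milliseconds).GetAwaiter();
}

public static class AwaitNumberDemo
{
    public static async Task<bool> WaitAsync()
    {
        await 50; // Compiles only because of the extension method above.
        return true;
    }
}
```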

Awaiters look like the following. This is the contract that compiler-generated code and the framework use to interact with the awaiter. Somebody feeds a continuation action to the awaiter, and at some point in the future, the awaiter invokes it to resume execution.

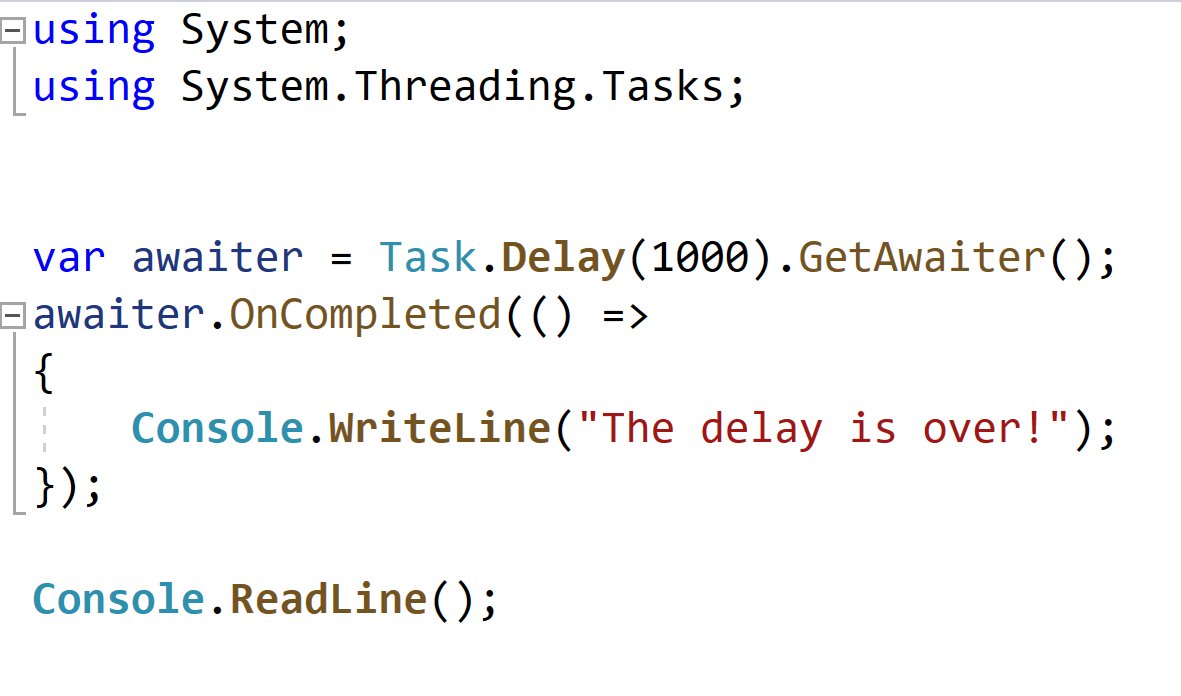
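A hand-written awaiter that follows this contract (`IsCompleted`, `OnCompleted` via `INotifyCompletion`, `GetResult`) and completes when a producer calls `SetResult`. `ManualAwaiter<T>` is a hypothetical, single-consumer, non-thread-safe sketch; returning itself from `GetAwaiter` makes the object directly awaitable:

```csharp
using System;
using System.Runtime.CompilerServices;

public sealed class ManualAwaiter<T> : INotifyCompletion
{
    private Action _continuation;
    private T _result;

    public bool IsCompleted { get; private set; }

    public ManualAwaiter<T> GetAwaiter() => this;

    // Called by compiler-generated code when IsCompleted was false.
    public void OnCompleted(Action continuation)
    {
        if (IsCompleted)
        {
            continuation(); // Already done: resume immediately.
        }
        else
        {
            _continuation = continuation; // Stash it until the result arrives.
        }
    }

    // Producer side: store the value, then resume whoever is waiting (inline).
    public void SetResult(T result)
    {
        _result = result;
        IsCompleted = true;
        Action continuation = _continuation;
        _continuation = null;
        continuation?.Invoke();
    }

    public T GetResult()
    {
        if (!IsCompleted)
        {
            throw new InvalidOperationException("The operation has not completed.");
        }
        return _result;
    }
}
```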

You can use it manually like this. But who wants to write callbacks? This is all machinery for async/await to use. The key point here is that awaiters are responsible for scheduling continuations. We'll see why that's important to the optimizations that were made.

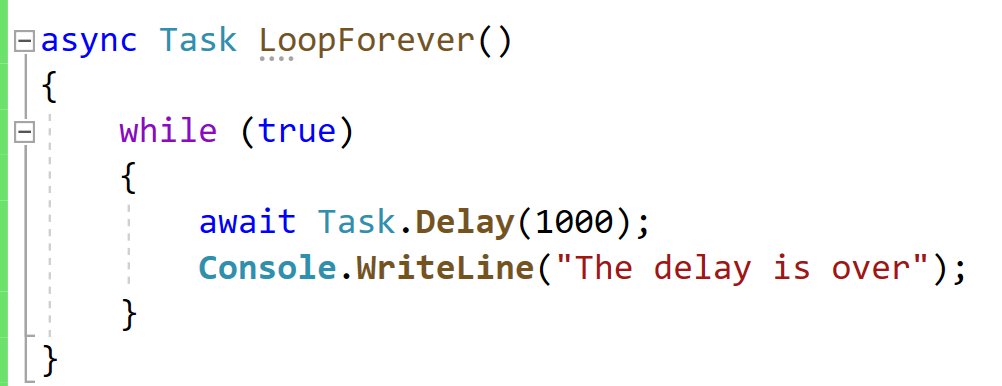
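A runnable sketch of driving an awaiter by hand with a callback instead of `await`, which shows why nobody wants to write this. `ManualContinuation.AddOneWithCallback` is a hypothetical helper; it surfaces the result through a `TaskCompletionSource<int>` so failures are not lost:

```csharp
using System;
using System.Threading.Tasks;

public static class ManualContinuation
{
    public static Task<int> AddOneWithCallback(Task<int> source)
    {
        var tcs = new TaskCompletionSource<int>();
        var awaiter = source.GetAwaiter();

        // Hand the awaiter a continuation; it runs once the source task finishes.
        awaiter.OnCompleted(() =>
        {
            try
            {
                tcs.SetResult(awaiter.GetResult() + 1); // GetResult rethrows if the source faulted.
            }
            catch (Exception ex)
            {
                tcs.SetException(ex);
            }
        });

        return tcs.Task;
    }
}
```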

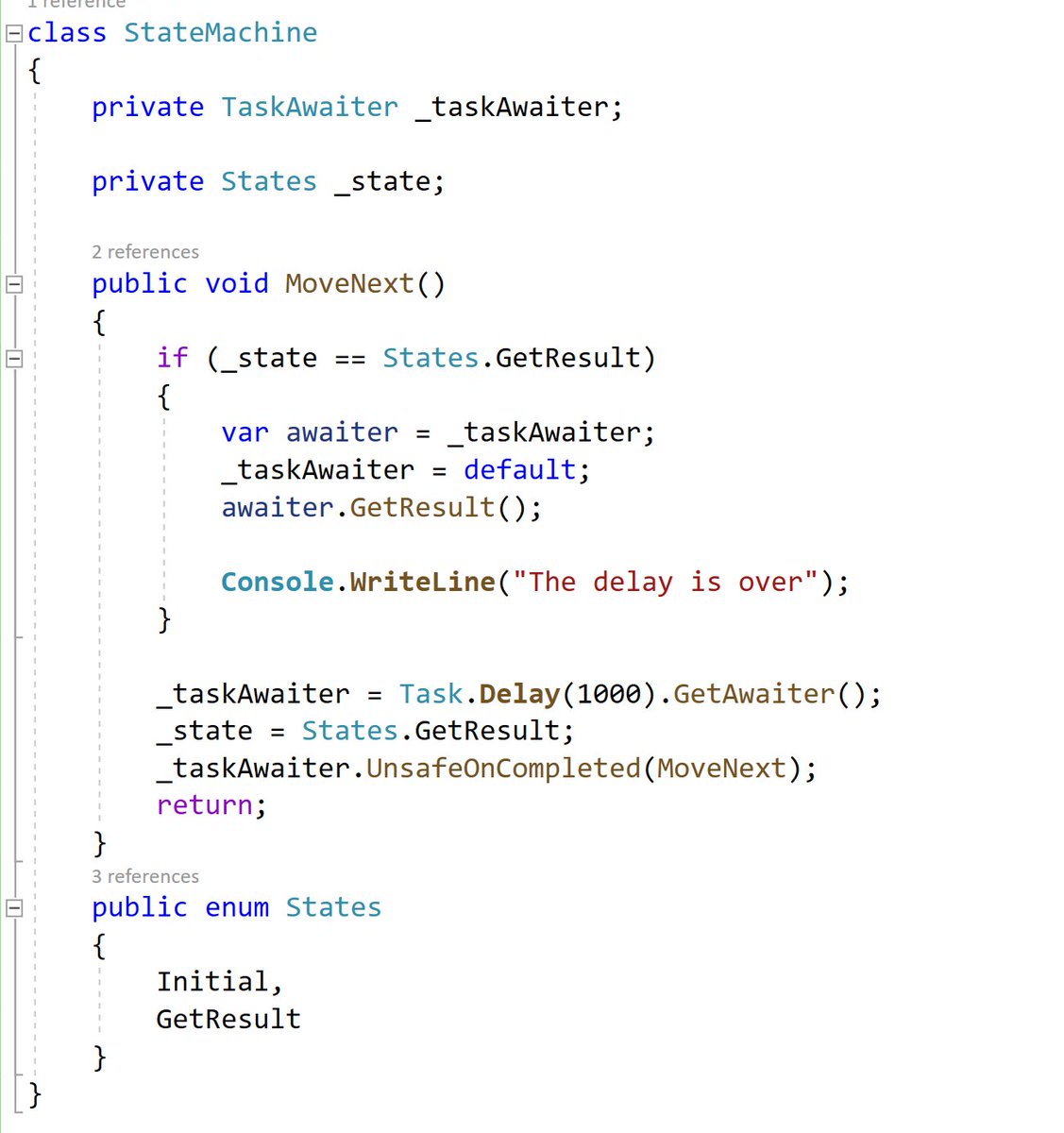

How does this all tie together? Well, the state machine contains some code with awaits, and each awaiter stashes a reference to the state machine and calls MoveNext whenever it's supposed to continue execution.

That code is a simplified version of what the compiler generates (it probably has bugs), but it shows the interaction. Now, where does the overhead come from? There's a bunch of hidden code in the framework to make this all work.

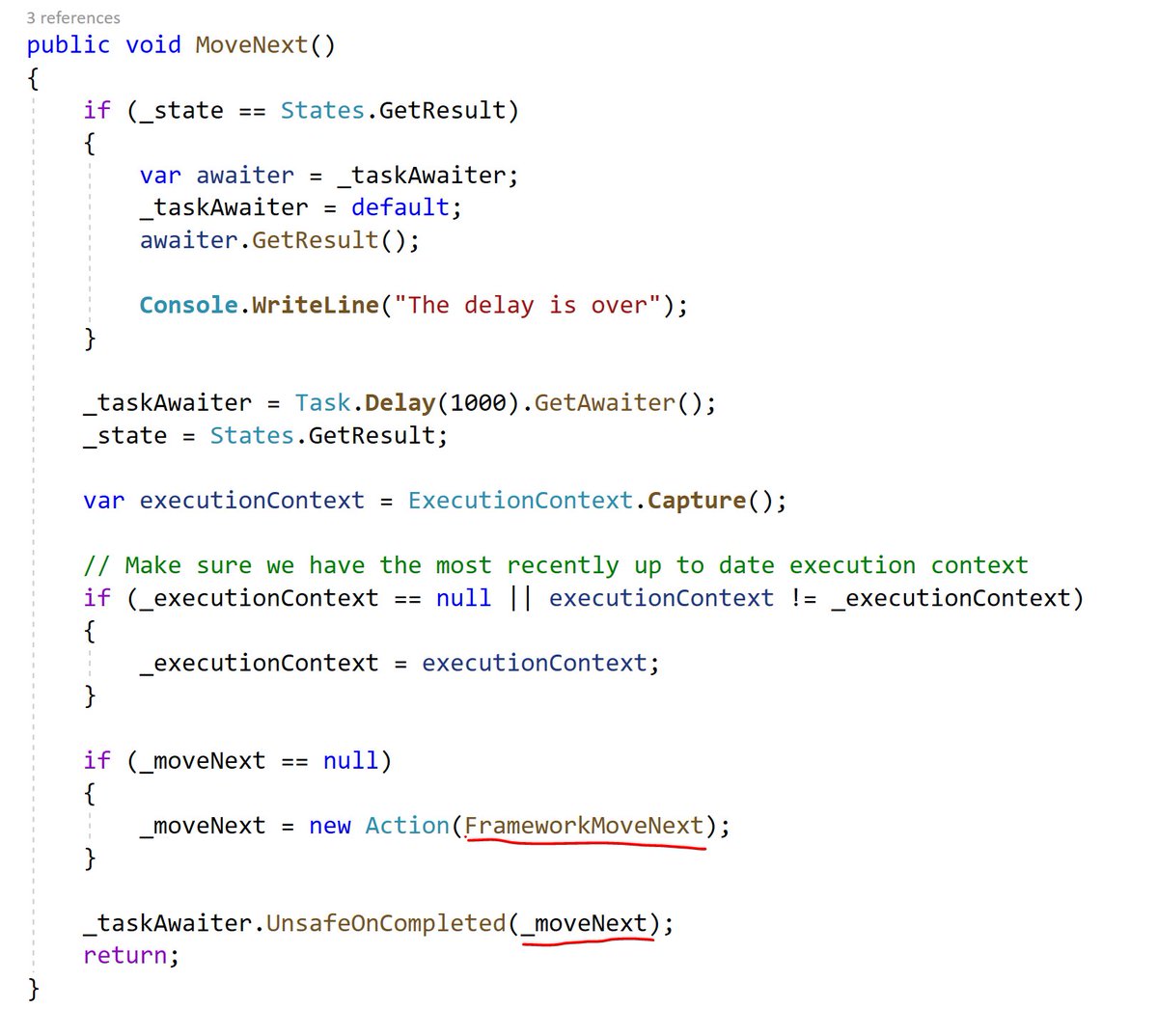

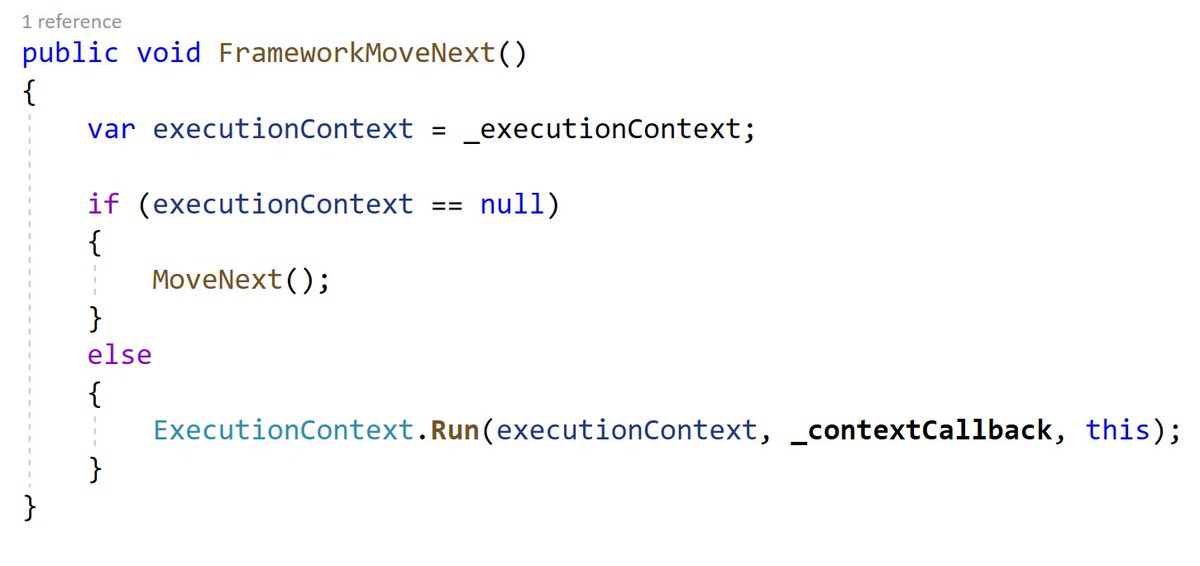
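A separate hand-written approximation, as a sketch under stated simplifications: it uses a class instead of a struct, skips the method builder and ExecutionContext handling, and completes a `TaskCompletionSource<int>` directly. `AddStateMachine` is a hypothetical name. It shows the state field, a local hoisted across an await, and the awaiter calling `MoveNext` to resume:

```csharp
using System;
using System.Runtime.CompilerServices;
using System.Threading.Tasks;

// Approximates:
//     async Task<int> AddAsync(Task<int> first, Task<int> second)
//     { int a = await first; int b = await second; return a + b; }
public sealed class AddStateMachine
{
    private int _state;                  // Which await to resume after.
    private int _a;                      // Local hoisted into a field across an await.
    private TaskAwaiter<int> _awaiter;   // Kept alive while suspended.
    private readonly Task<int> _first;
    private readonly Task<int> _second;
    private readonly TaskCompletionSource<int> _result = new TaskCompletionSource<int>();

    private AddStateMachine(Task<int> first, Task<int> second)
    {
        _first = first;
        _second = second;
    }

    public static Task<int> AddAsync(Task<int> first, Task<int> second)
    {
        var machine = new AddStateMachine(first, second);
        machine.MoveNext(); // Runs synchronously until the first incomplete await.
        return machine._result.Task;
    }

    private void MoveNext()
    {
        try
        {
            if (_state == 0)
            {
                _awaiter = _first.GetAwaiter();
                _state = 1;
                if (!_awaiter.IsCompleted)
                {
                    _awaiter.OnCompleted(MoveNext); // Awaiter calls back into MoveNext later.
                    return;
                }
            }
            if (_state == 1)
            {
                _a = _awaiter.GetResult();
                _awaiter = _second.GetAwaiter();
                _state = 2;
                if (!_awaiter.IsCompleted)
                {
                    _awaiter.OnCompleted(MoveNext);
                    return;
                }
            }
            int b = _awaiter.GetResult(); // State 2: both awaits done.
            _result.SetResult(_a + b);
        }
        catch (Exception ex)
        {
            _result.SetException(ex);
        }
    }
}
```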

First, we introduce a FrameworkMoveNext that tries to capture the execution context and executes MoveNext under that execution context. This is what makes async locals work. We also updated the original code to call FrameworkMoveNext as the continuation.
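Why capturing and restoring the execution context makes async locals work, shown with the real `ExecutionContext.Capture`/`ExecutionContext.Run` APIs. `ContextFlowDemo` and the `RequestId` async local are hypothetical; the lambda plays the role of the continuation that FrameworkMoveNext would run:

```csharp
using System.Threading;

public static class ContextFlowDemo
{
    private static readonly AsyncLocal<string> RequestId = new AsyncLocal<string>();

    public static string RunContinuationUnderCapturedContext()
    {
        RequestId.Value = "request-1";
        ExecutionContext captured = ExecutionContext.Capture(); // Snapshot taken at the "await".

        RequestId.Value = "something-else"; // The thread moves on to unrelated work.

        string observed = null;
        // Run the "continuation" under the snapshot, so the async local comes back.
        ExecutionContext.Run(captured, _ => observed = RequestId.Value, null);
        return observed;
    }
}
```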

Historically, the state machine allocated a Task object for the result, boxed the state machine to put it on the heap, and allocated a delegate to pass to the awaiter as the continuation. It would also allocate when scheduling the continuation to the thread pool.
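For context on that last allocation: .NET Core 3.0 added `IThreadPoolWorkItem`, so an object can be queued to the thread pool directly and reused instead of allocating a delegate (and closure) per queued item. This sketch only demonstrates the public API; it does not show how the runtime schedules continuations internally. `CountingWorkItem` is a hypothetical type, and the `Unsafe` queueing method does not flow the ExecutionContext:

```csharp
using System.Threading;

public sealed class CountingWorkItem : IThreadPoolWorkItem
{
    private readonly CountdownEvent _done;
    private int _count;

    public CountingWorkItem(CountdownEvent done) => _done = done;

    public int Count => Volatile.Read(ref _count);

    // Invoked by the thread pool; no delegate was allocated to get here.
    public void Execute()
    {
        Interlocked.Increment(ref _count);
        _done.Signal();
    }
}
```

Usage: `ThreadPool.UnsafeQueueUserWorkItem(item, preferLocal: false)` can be called repeatedly with the same `item` instance.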
