NPR asked @ngleicher about my reporting on QAnon's growth on Facebook, which is assisted by Facebook's own recommendation algorithms. The responses are a bit of a non sequitur

https://www.npr.org/2020/08/13/901915537/top-facebook-official-our-aim-is-to-make-lying-on-the-platform-more-difficult

I'm confused about why @ngleicher brought up "adversarial behavior" with regard to QAnon and Facebook recommendations, since Facebook has yet to take an adversarial stance toward QAnon.

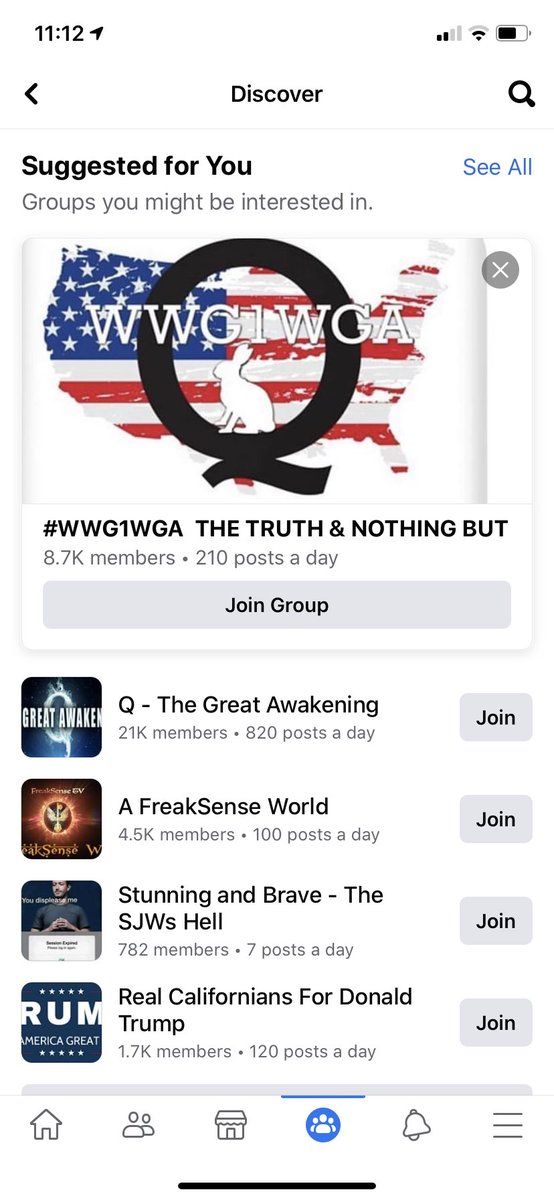

FB cannot deny that it is promoting QAnon through recommendations right now, because it is.

If FB wants to be neutral toward QAnon, it could stop recommending that users join QAnon groups. If it wants to be adversarial toward QAnon, it could ban it.

But as things stand, FB continues to *help* QAnon find new followers through its algorithms. https://www.theguardian.com/us-news/2020/aug/11/qanon-facebook-groups-growing-conspiracy-theory

Here’s what I see (literally at just this moment) when I log into Facebook and click on the groups tab. This is not an adversarial relationship. @ngleicher
