The Ofqual paper is quite wince-inducing. The data *doesn’t* allow that. This puts forward the idea that grade inflation, school-level results and maintaining the distribution shape are more important than the fairness of individual results.

I don’t blame Ofqual, but they’re being asked to correctly estimate the size of each egg that went into an omelette, based on a different omelette.

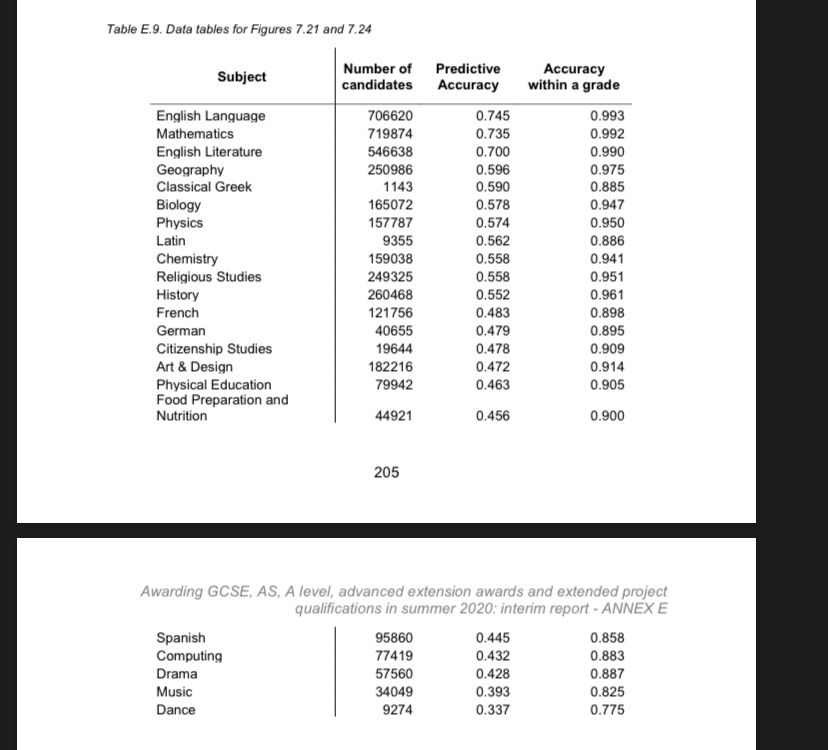

There’s a really curious and slightly under-explained table in the technical notes, where they discuss testing the model by back-casting the 2019 results. And they find that, even in history, the easiest subject to forecast, they get one in three grades wrong.

(I say it’s under-explained because I can’t see how this model-testing deals with a lack of teacher rank-ordering - an additional source of error in the real thing.)

Jon is making the same point here: these are hopelessly poor accuracy figures given the importance of a one-grade error. https://twitter.com/JonColes01/status/1293919819805241345?s=20

To take Ester/Holly’s example here - about one in three sociology students will get the wrong grades, according to the model paper. About one in 30 will be wrong by *two grades or more*. And all that assumes zero teacher error in their work. https://twitter.com/kIopptimistic/status/1293880274523217924?s=20
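
To make those proportions concrete, here is a rough back-of-envelope sketch. The two rates are the approximate ones quoted above; the cohort of 90 students is a made-up figure for illustration, not something from the Ofqual paper, and `expected_errors` is just a hypothetical helper name.

```python
from fractions import Fraction

# Approximate rates quoted in the thread for sociology ("about one in ...").
P_WRONG = Fraction(1, 3)               # student gets any wrong grade
P_WRONG_BY_TWO_PLUS = Fraction(1, 30)  # student is wrong by two grades or more


def expected_errors(cohort_size: int) -> dict:
    """Expected number of mis-graded students in a cohort of the given size."""
    wrong = P_WRONG * cohort_size
    wrong_two_plus = P_WRONG_BY_TWO_PLUS * cohort_size
    return {
        "wrong": float(wrong),
        "wrong_by_two_or_more": float(wrong_two_plus),
        # Students out by two or more are a subset of all wrong grades,
        # so the remainder are out by exactly one grade.
        "wrong_by_exactly_one": float(wrong - wrong_two_plus),
    }


# Hypothetical cohort size, chosen only so the numbers come out whole.
result = expected_errors(90)
print(result)
```

On those assumptions, a cohort of 90 would expect around 30 students with a wrong grade, about 3 of them out by two grades or more. And that is before any error in teacher rank-ordering is counted.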

These numbers are all too generous to the model because they, in effect, assume that teachers can perfectly place the rank order of every kid in their classes. The “true” reliability of the model is going to be worse than that.
