Videos from @StanfordAIMI Symposium are up! I spoke on why we need to expand the conversation on bias & fairness.

I will share some slides & related links in this THREAD, but please watch my 17-minute talk in full (the other talks are excellent too!) 1/

https://www.youtube.com/watch?v=1Uyc9SPeYkA&list=PLe6zdIMe5B7IR0oDOobXBDBlYY1eqLYPx&index=10&t=41s

While using a diverse & representative dataset is important, there are many problems this WON'T solve, such as measurement bias

Great thread & research from @oziadias on what happens when you use healthcare *cost* as a proxy for healthcare *need* 2/ https://twitter.com/oziadias/status/1293598376869507074?s=20

Another form of measurement bias is systematic error, such as how pulse oximeters (a crucial tool in treating COVID) and Fitbit heart rate monitors (used in 300 clinical trials) are less accurate on people of color 3/ https://twitter.com/math_rachel/status/1291512973580623872?s=20

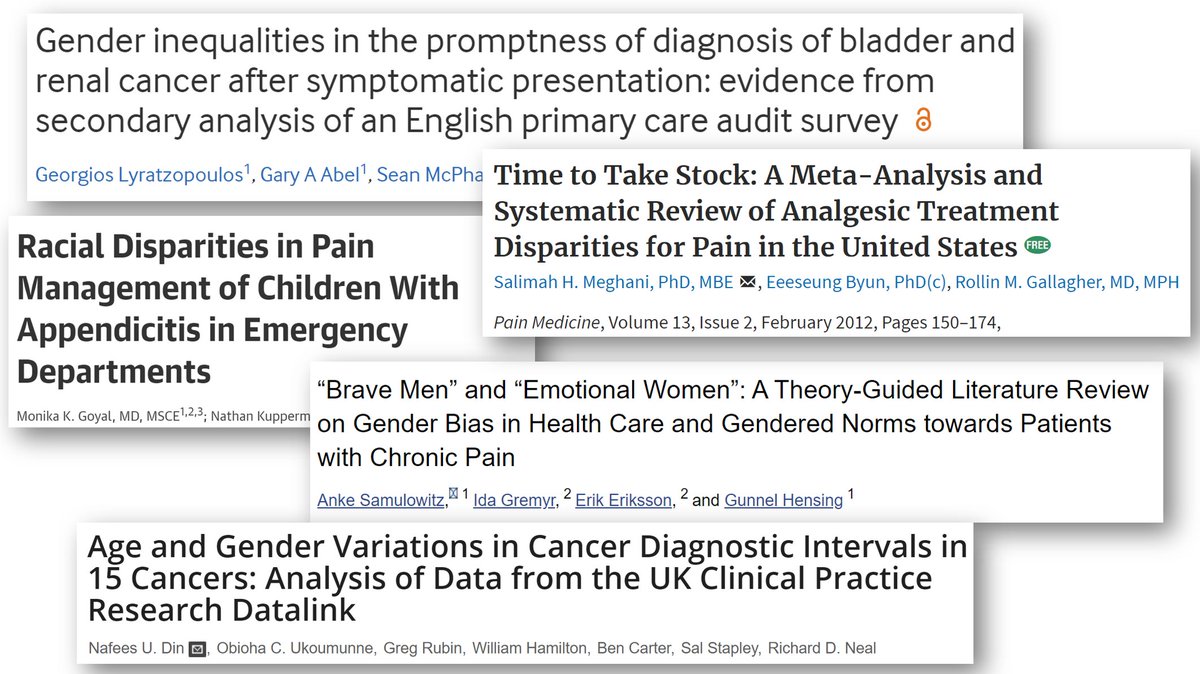

Another type of bias is historical bias (aka systemic racism & systemic sexism). Gathering more data doesn't fix it 5/ https://twitter.com/math_rachel/status/1191065892341239808?s=20

There are scores of studies on racial & gender bias in medicine, showing that the pain of women is taken less seriously than that of men, and the pain of people of color is taken less seriously than that of white people.

Result: longer time delays, lower quality of care, & worse outcomes 6/

Domain expertise is crucial for any applied machine learning project. In medicine, this must include PATIENTS.

I was invited to speak as an AI researcher, but my experience of being a patient is just as valuable (2 brain surgeries, a life-threatening brain infection, etc) 7/

Overall I've had access to great medical care, but I've also had many experiences of being dismissed & disbelieved

Being sent home with aspirin when I needed brain surgery

Being told to "relax" & take melatonin when I had a foreign object in my heart

& others I won't share 8/

You must listen to patients to understand the ways their data is incomplete, incorrect, missing, & biased

To understand the gap between what they experience in their bodies and what they can convince a doctor of

The tests that aren't ordered, the notes that aren't recorded 9/

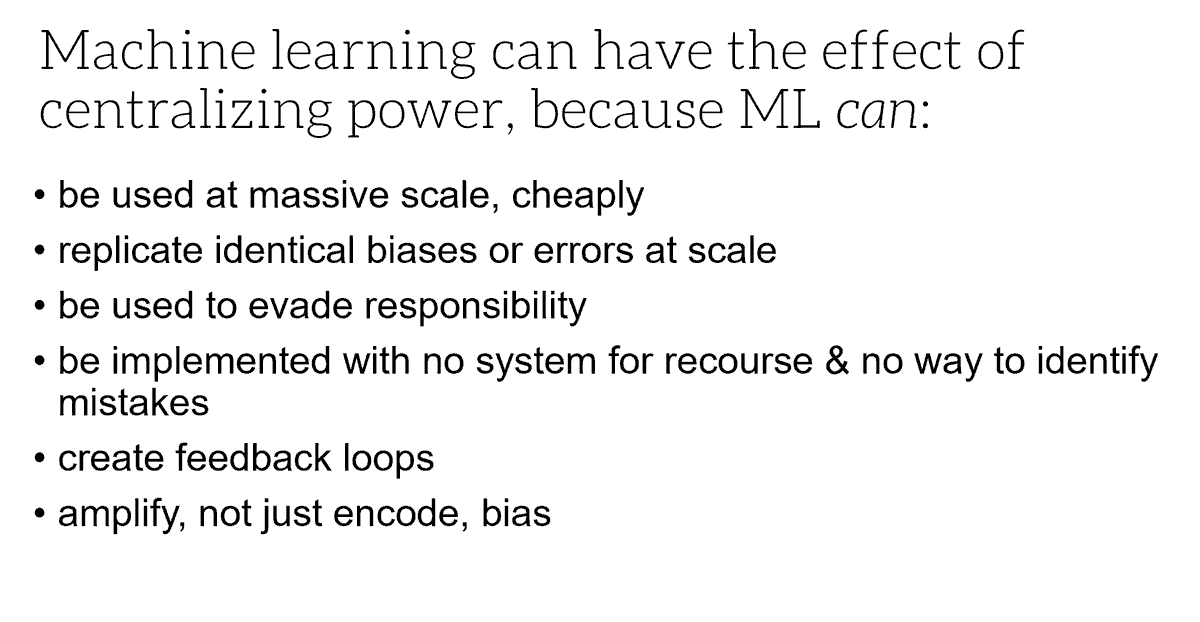

Machine learning can often (unintentionally) have the effect of centralizing power. Since the medical system is already too often disempowering for patients, we need to be extra cautious of this 10/

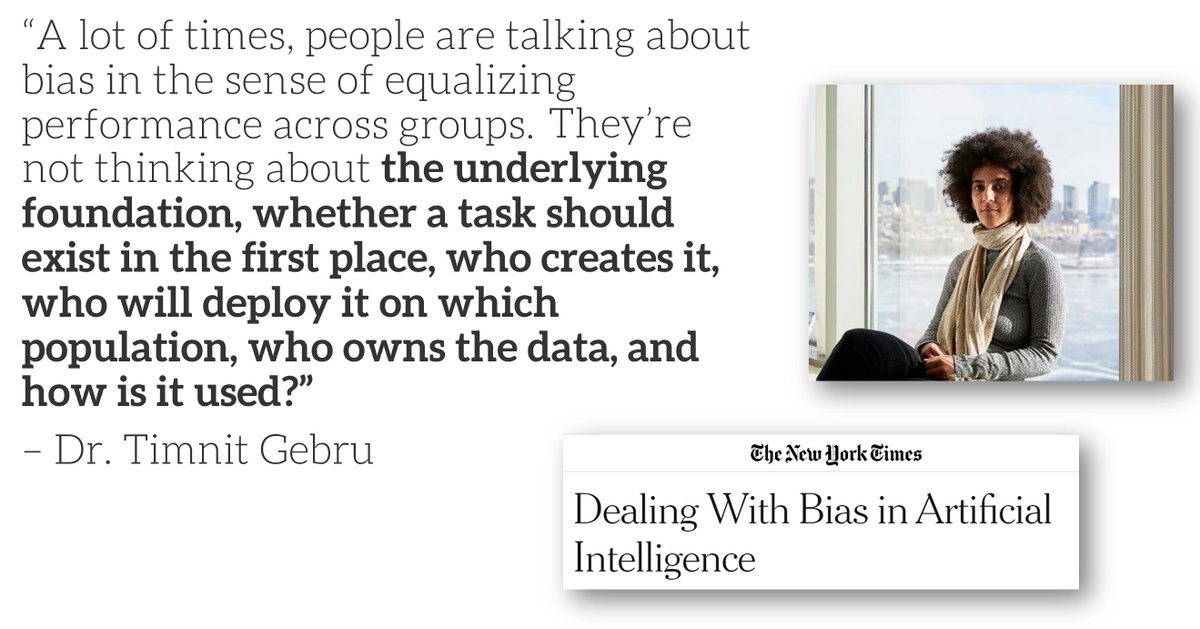

We need to move the conversation on bias & fairness ➡️ power & participation. As Dr. @timnitGebru wrote, to not just check error rates across groups, but to question the underlying foundation, whether a task should even exist, who creates it, who owns it, how is it used 11/

I am excited about work happening in Participatory Machine Learning, moving from

Explainability ➡️ Recourse

Transparency ➡️ Contestability

"Is this fair?" ➡️ "How does this shift power?"

Predictive accuracy ➡️ Good decision-making

See this thread: 12/ https://twitter.com/math_rachel/status/1284976543769309184?s=20

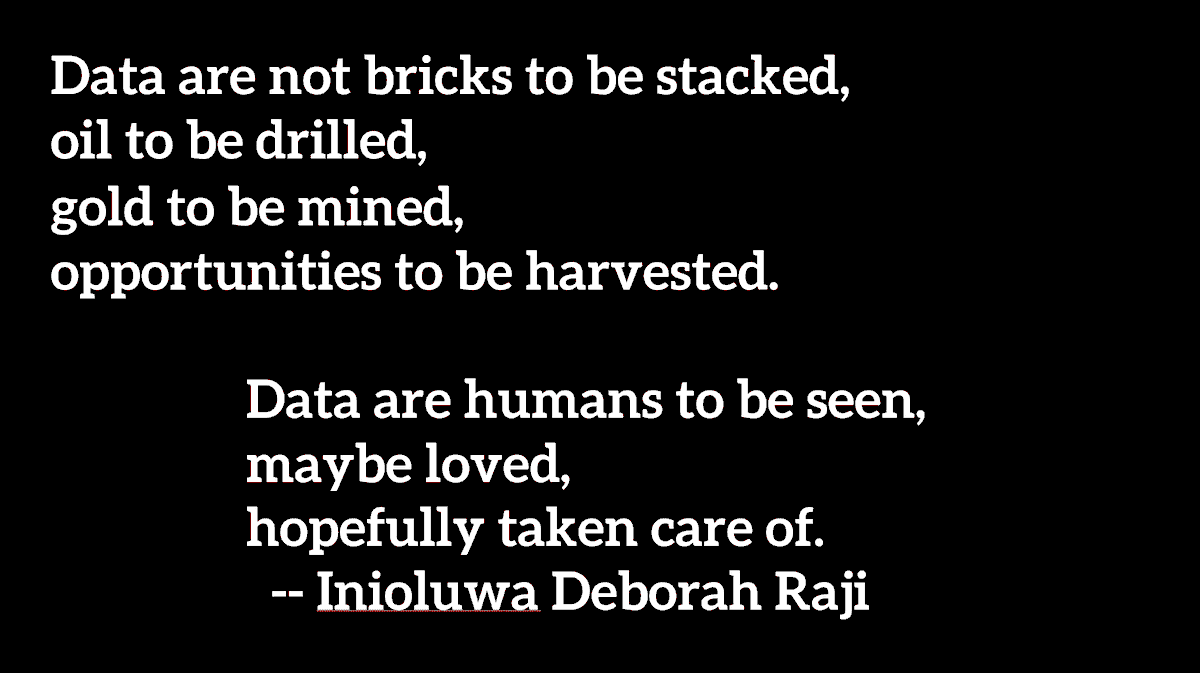

"Data are not bricks to be stacked, oil to be drilled, gold to be mined, opportunities to be harvested. Data are humans to be seen, maybe loved, hopefully taken care of." @rajiinio 13/

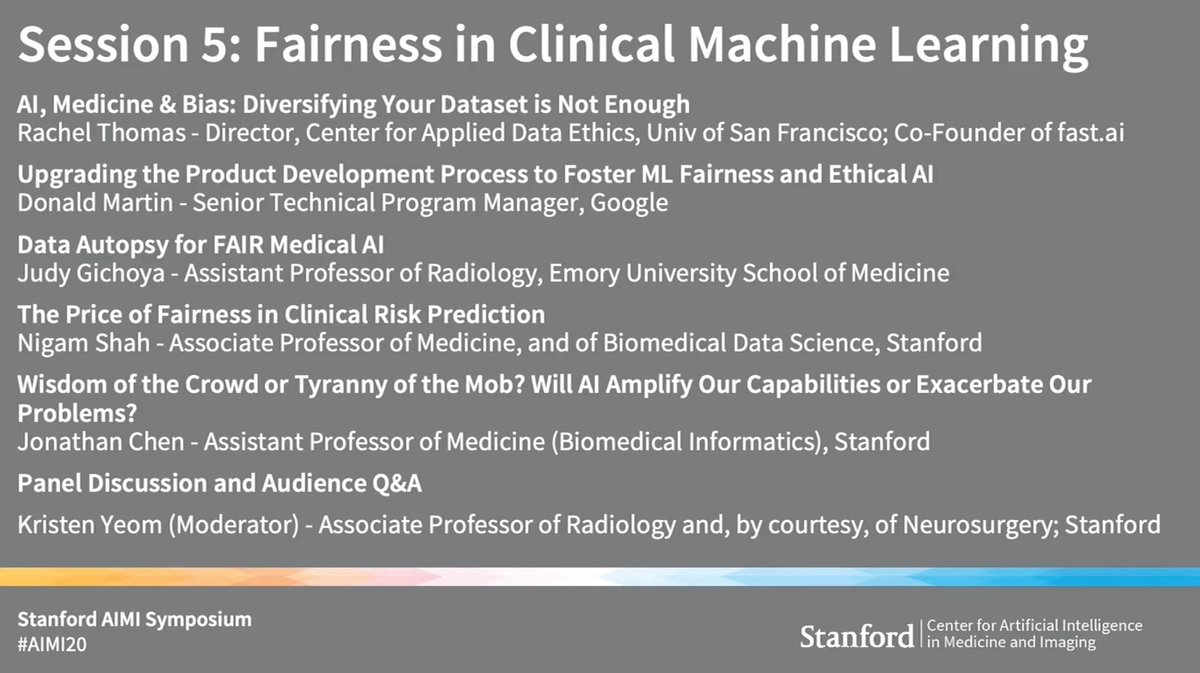

This talk was part of @StanfordAIMI session on Fairness in Clinical Machine Learning, together with @dxmartinjr @judywawira @drnigam @jonc101x

You can watch here: https://www.youtube.com/watch?v=1Uyc9SPeYkA&list=PLe6zdIMe5B7IR0oDOobXBDBlYY1eqLYPx&index=10&t=0s
