"The Curious Case of the Convenient Outliers: A Twitter Thread"

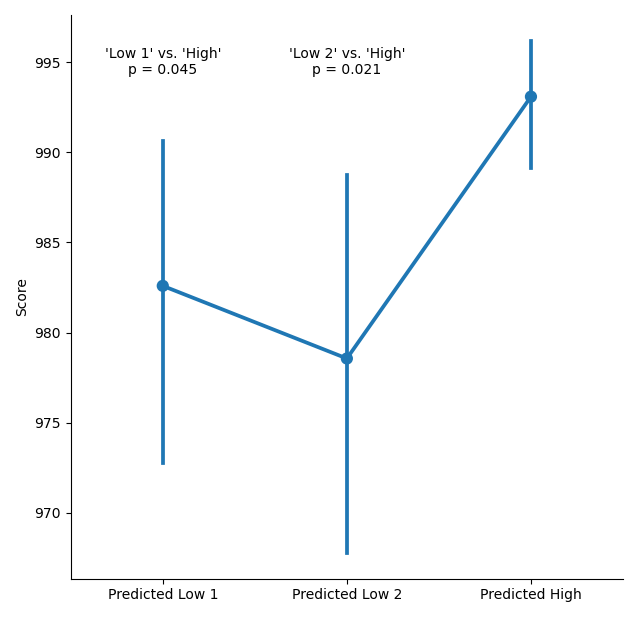

A recent paper in a leading psych. journal reports a pre-registered experiment with significant results: The "Predicted High" condition is significantly different from the two "Predicted Low" conditions.

This result is obtained after a series of (pre-registered) exclusions. In particular, the authors state that they will exclude participants whose "scores are extreme outliers identified by Boxplot." This is a bit vague, so I downloaded the (open) data...

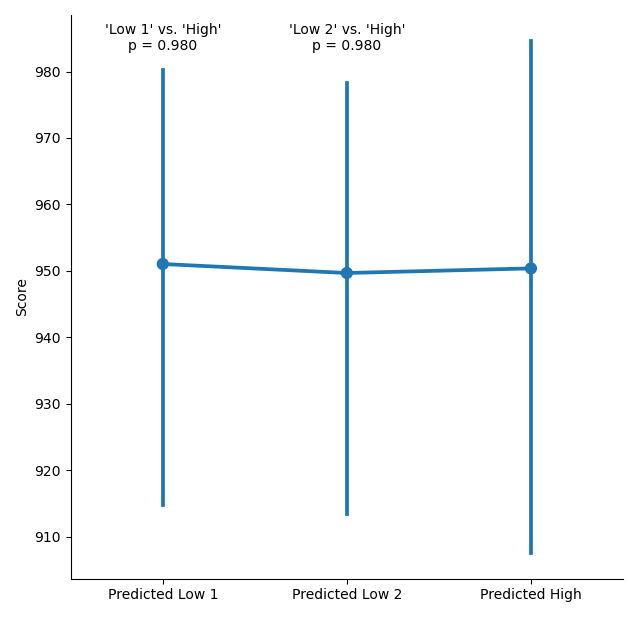

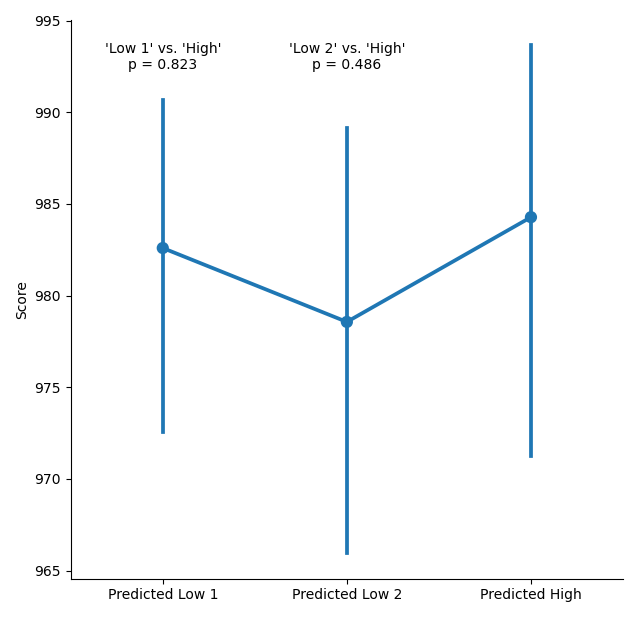

This is what the results look like before excluding the outliers. Not very suggestive of significant differences.

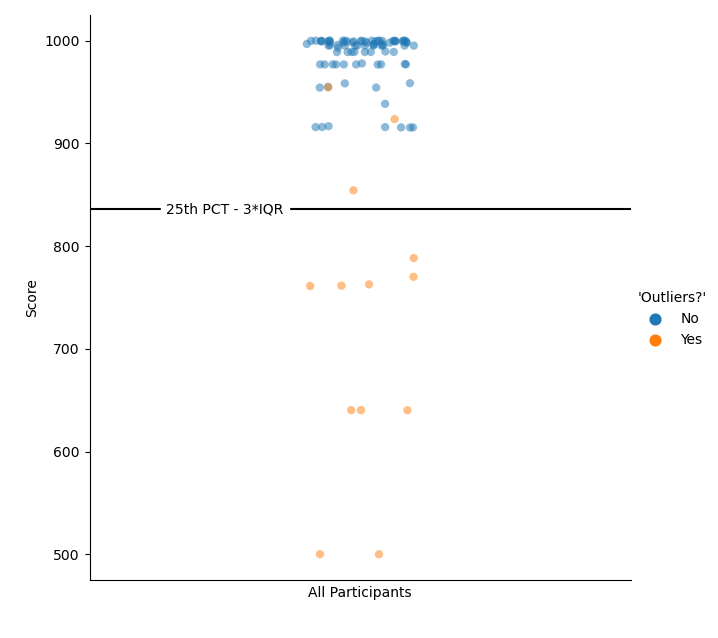

Now, how were the "outliers" defined? The authors are not explicit about this particular experiment. However, another experiment in the paper defines outliers as "three times the interquartile range below the lower quartile." Let's see if this definition matches the data...
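For anyone who wants to try this kind of check themselves, here is a minimal sketch of the quoted rule (scores below Q1 - 3 × IQR). The function name `lower_extreme_fence` and the scores are made up for illustration; they are not the paper's data. Quartile conventions also differ between tools (boxplot software often uses hinges), so the exact cutoff can shift slightly depending on the method.

```python
from statistics import quantiles

def lower_extreme_fence(scores, k=3.0):
    """Return Q1 - k * IQR: scores below this count as extreme low outliers."""
    # method="inclusive" is an assumption; other quartile conventions
    # can give a slightly different cutoff.
    q1, _, q3 = quantiles(scores, n=4, method="inclusive")
    return q1 - k * (q3 - q1)

# Made-up scores for illustration only (NOT the paper's data).
scores = [1, 50, 52, 54, 56, 58, 60, 62, 64]

fence = lower_extreme_fence(scores)
flagged = [s for s in scores if s < fence]

print(f"cutoff: {fence}, flagged as outliers: {flagged}")
```

With the real data, you would load the score column from the open dataset and compare the flagged values against the exclusions the authors actually made.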

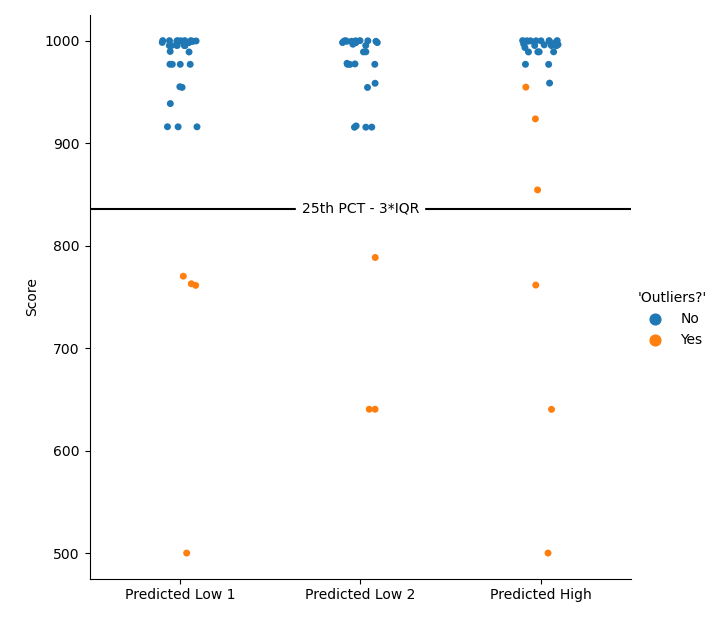

Close enough, but there is something weird: Three observations are above this threshold, but still tagged as "outliers," and two of them are less extreme than "non-outlier" values. Can you guess to which condition these "outliers" belong?
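This kind of inconsistency is easy to look for once you have the cutoff. The sketch below lists every participant who was excluded even though the Q1 - 3 × IQR rule would have kept them. Everything in it is hypothetical: the `(score, condition, excluded)` layout, the condition labels "A" and "B", and the numbers. It also assumes the cutoff is computed on the pooled scores rather than per condition, which the paper does not spell out.

```python
from statistics import quantiles

def inconsistent_exclusions(rows, k=3.0):
    """Return excluded rows whose score is NOT below Q1 - k * IQR.

    rows: list of (score, condition, excluded) tuples (hypothetical layout).
    """
    scores = [score for score, _, _ in rows]
    # Assumption: the cutoff is computed on all scores pooled together.
    q1, _, q3 = quantiles(scores, n=4, method="inclusive")
    fence = q1 - k * (q3 - q1)
    return [row for row in rows if row[2] and row[0] >= fence]

# Made-up rows for illustration only (NOT the paper's data).
rows = [
    (1, "B", True),    # below the cutoff: exclusion matches the rule
    (50, "A", True),   # above the cutoff, yet excluded
    (52, "B", False),
    (54, "A", False),
    (56, "B", False),
    (58, "A", False),
    (60, "B", False),
    (62, "A", False),
    (64, "B", False),
]

suspicious = inconsistent_exclusions(rows)
print(suspicious)
```

Any row this returns is an exclusion the stated rule does not explain, and tabulating those rows by condition is the natural next step.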

Uh oh... And what happens when we put those three "outliers" back in? Yep, you guessed it: Suddenly the support for the "pre-registered hypothesis" is not so strong anymore.

Now what happens? I will write to the editor, and let him know. It took me exactly 1h30 to perform the analysis and post this thread. Next time you see a paper that has open data and looks weird, do the field a small service and check the data. Anyone can do it :).

This is also not the first time "exclusions" have been used creatively: See this thread by @LisaDeBruine https://twitter.com/LisaDeBruine/status/1004312215657336832
