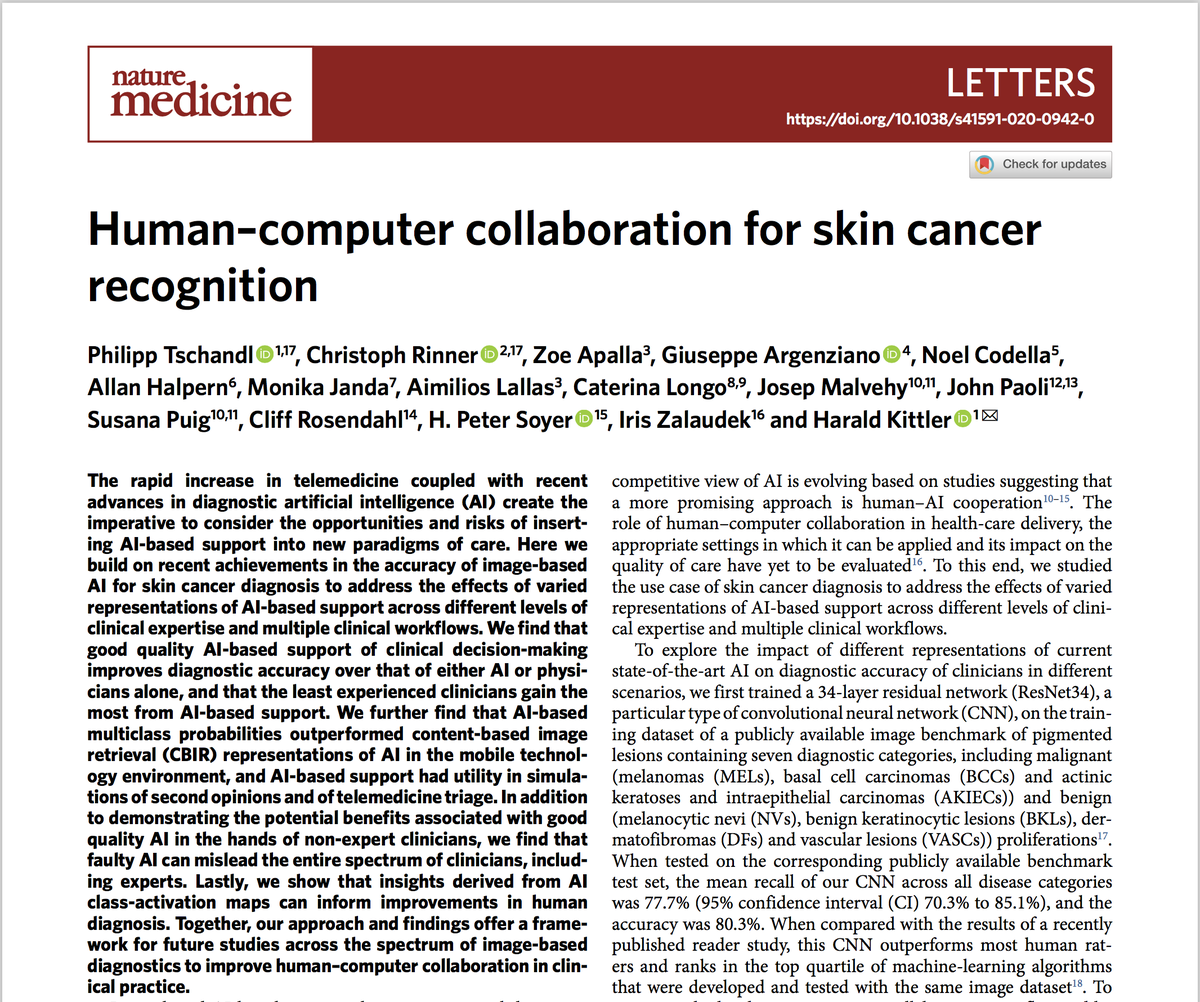

This paper from @NatureMedicine is one of the most interesting studies on human-computer collaboration that I have read. The way that doctors interact with #AI matters.

🧵


https://www.nature.com/articles/s41591-020-0942-0?utm_source=nm_etoc&utm_medium=email&utm_campaign=toc_41591_26_8&utm_content=20200811&sap-outbound-id=23B37F2AB9B6A9AB9B184A1D38E49936B996CA9C


@ChDLR et al. started with ResNet34 and trained it only on publicly available skin cancer data. Simple enough.

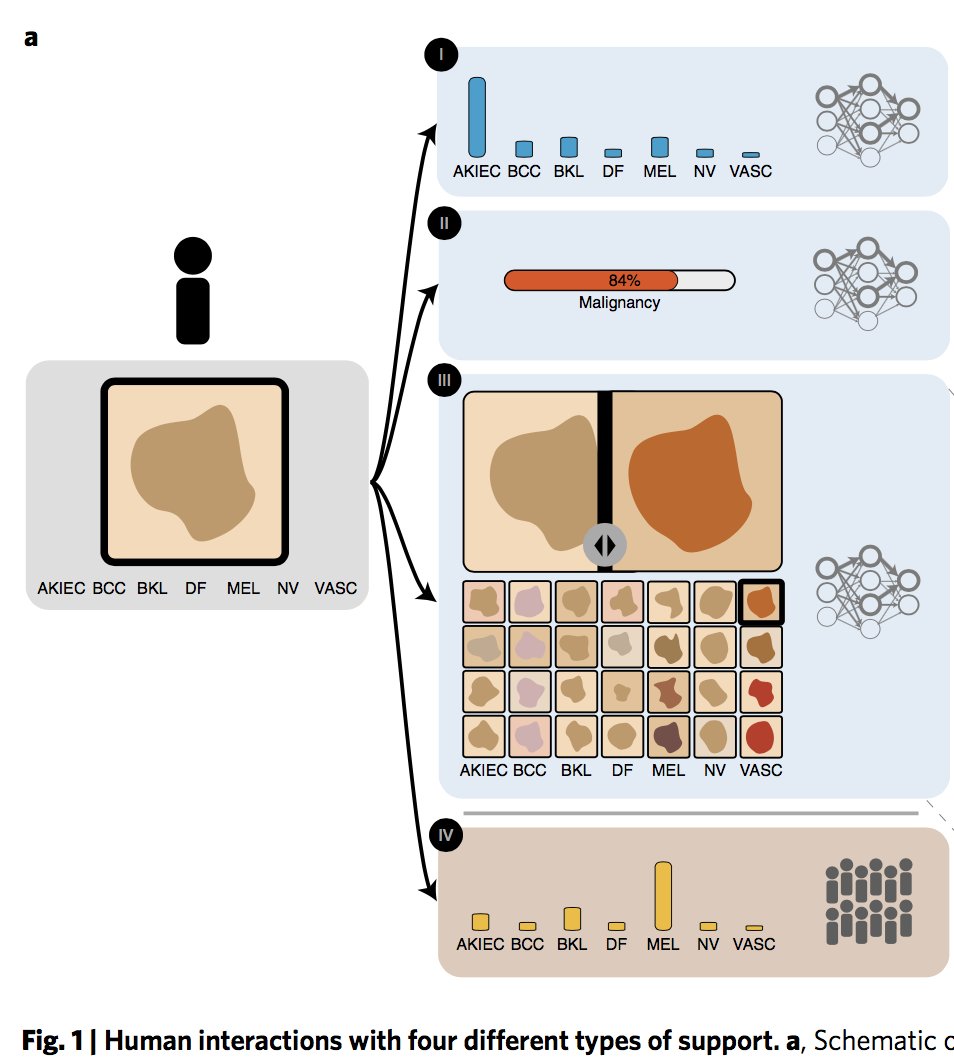

They looked at how doctors changed their dx after receiving:

1) the #AI's predicted probabilities for each of the 7 possible diagnoses

2) the AI's predicted chance of a benign vs. malignant lesion

3) similar images with known diagnoses

OR

4) results from crowd-sourcing the dx
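To make the four kinds of decision support concrete, here is a minimal Python sketch of how each could be produced for a single lesion. It is not the authors' code. Assumptions: the seven class labels follow the HAM10000 / ISIC 2018 naming (akiec, bcc, bkl, df, mel, nv, vasc); akiec, bcc and mel are treated as malignant for the benign-vs-malignant summary; similar images are retrieved by cosine similarity on hypothetical feature vectors; and crowd votes are aggregated as plain vote fractions. All function names are hypothetical.

```python
from collections import Counter
import math

# Assumption: HAM10000 / ISIC 2018 label set; the thread only says "7 possible diagnoses".
CLASSES = ["akiec", "bcc", "bkl", "df", "mel", "nv", "vasc"]
# Assumption: which classes count as malignant for the binary summary.
MALIGNANT = {"akiec", "bcc", "mel"}


def softmax(logits):
    """Turn raw model scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def per_class_support(probs):
    """Option 1: one probability per diagnosis (hypothetical helper)."""
    return dict(zip(CLASSES, probs))


def malignancy_support(probs):
    """Option 2: collapse the same distribution into a single P(malignant)."""
    return sum(p for c, p in zip(CLASSES, probs) if c in MALIGNANT)


def similar_images(query, reference, k=3):
    """Option 3: known diagnoses of the k reference cases whose (hypothetical)
    feature vectors are closest to the query by cosine similarity.
    `reference` is a list of (feature_vector, label) pairs; vectors must be non-zero."""
    def cosine(a, b):
        dot = sum(x * y for x, y in zip(a, b))
        return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))
    ranked = sorted(reference, key=lambda item: cosine(query, item[0]), reverse=True)
    return [label for _, label in ranked[:k]]


def crowd_support(votes):
    """Option 4: fraction of crowd raters choosing each diagnosis (votes must be non-empty)."""
    counts = Counter(votes)
    return {c: counts[c] / len(votes) for c in CLASSES}


if __name__ == "__main__":
    # Hypothetical logits for one lesion, in CLASSES order.
    probs = softmax([0.2, 0.1, 1.5, -1.0, 1.2, 0.8, -2.0])
    print(per_class_support(probs))
    print(round(malignancy_support(probs), 3))
    reference = [([1.0, 0.0], "nv"), ([0.0, 1.0], "mel"), ([0.9, 0.1], "bkl")]
    print(similar_images([1.0, 0.0], reference, k=2))
    print(crowd_support(["mel", "mel", "nv", "bkl"]))
```

In this sketch options 1 and 2 come from the same softmax output; the malignancy score is just a lossy summary of the per-class distribution.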


Guess which one helped improve the accuracy of their diagnoses?

Options 1 & 4 did, but options 2 & 3 didn't.

Option 1 (sharing diagnosis probabilities of each class) improved their diagnostic accuracy from 63.6% to 77.0%


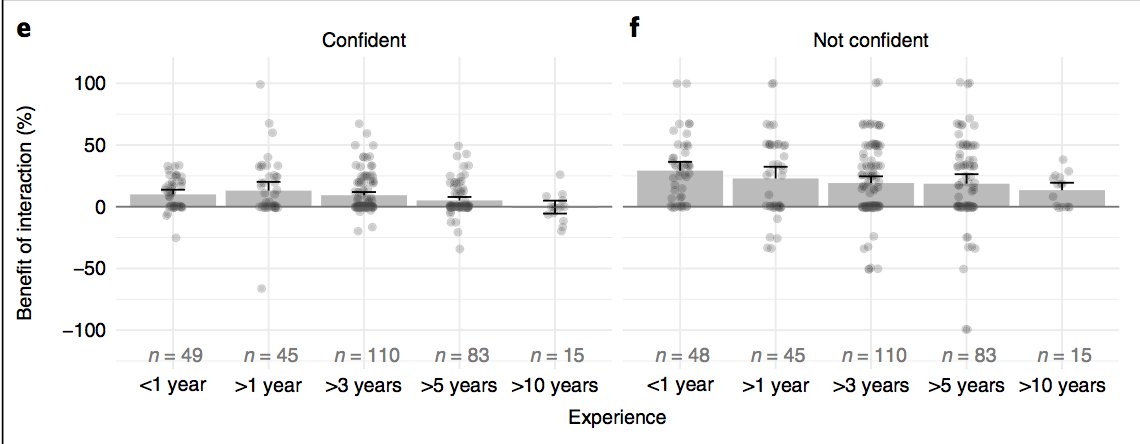

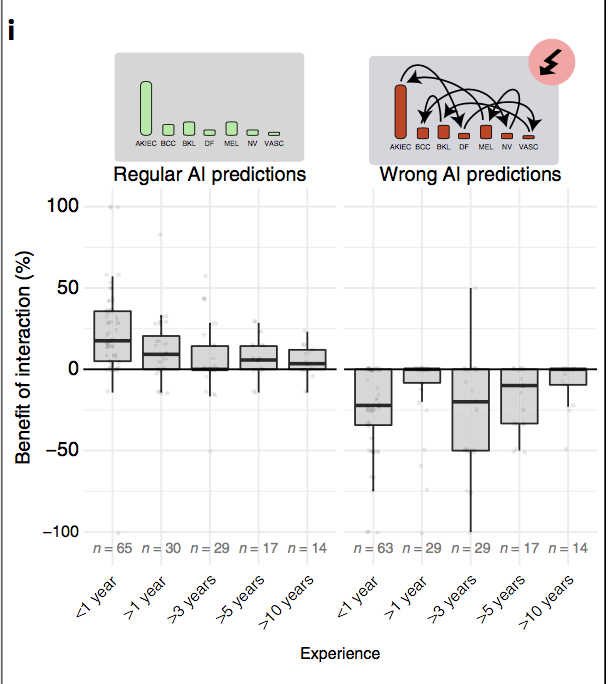

Interestingly, less-experienced docs were more likely to change their initial diagnosis to match the AI's prediction. Senior docs only changed their diagnosis in cases where they were less confident.

Faulty AI predictions lead physicians to make incorrect diagnoses.

This is why it is so important to thoroughly validate AI systems before they go live.


@DrLukeOR: I am curious how this fits with your preference for giving binary decisions. What do you think the results would have been if they had just given the raters the class with the highest probability? https://twitter.com/DrLukeOR/status/1288259300930928640?s=20
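One way to see what a top-1 answer throws away: the sketch below uses two hypothetical model outputs over the same assumed seven-class label set (akiec, bcc, bkl, df, mel, nv, vasc). Both reduce to "mel" as the single most likely class, yet one is a confident prediction and the other is nearly a coin flip between melanoma and nevus. Shannon entropy is used here only as a convenient uncertainty summary; it is not something the study reports, and this illustrates the information difference rather than predicting how the raters' accuracy would have changed.

```python
import math

# Assumed label set (HAM10000 / ISIC 2018 naming); not taken from the thread.
CLASSES = ["akiec", "bcc", "bkl", "df", "mel", "nv", "vasc"]


def top1(probs):
    """'Binary-style' output: only the single most likely class (ties go to the first)."""
    best = max(range(len(probs)), key=lambda i: probs[i])
    return CLASSES[best]


def entropy(probs):
    """Shannon entropy in bits: higher means the model is less certain."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


# Hypothetical outputs, in CLASSES order; each sums to 1.
confident = [0.01, 0.01, 0.02, 0.01, 0.90, 0.04, 0.01]
torn = [0.02, 0.03, 0.05, 0.01, 0.44, 0.43, 0.02]

if __name__ == "__main__":
    for name, probs in [("confident", confident), ("torn", torn)]:
        print(name, top1(probs), round(entropy(probs), 2))
```

A rater shown only the top-1 label sees "mel" in both cases; the full distribution is what signals that the second case deserves a closer look.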
