I just got this working; it wasn't very difficult, but there were a few gotchas. Here's how to do it on a Mac.

> ElasticSearch (backend)

> Dejavu (frontend)

> Docker

> Homebrew

https://twitter.com/KimCrayton1/status/1289561107867279363

1. install Homebrew: https://brew.sh/

2. install ElasticSearch

"brew install elasticsearch"

3. set your OpenJDK env variable

"export JAVA_HOME=/usr/local/opt/openjdk/bin"

> (also add to your .zshrc or .bashrc)

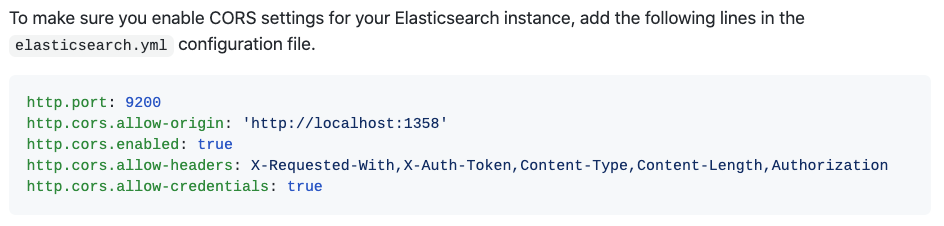

4. modify /usr/local/etc/elasticsearch/elasticsearch.yml to add the following:

> copy/paste text is here: https://github.com/appbaseio/dejavu

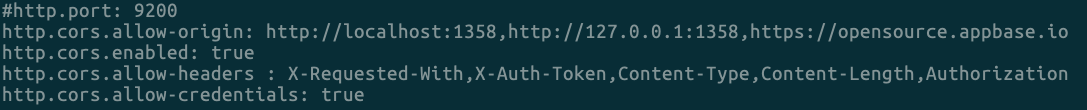

5. also add the following to "http.cors.allow-origin" to allow this frame to call your ES instance:

https://opensource.appbase.io

> the end of your .yml file should look like this:

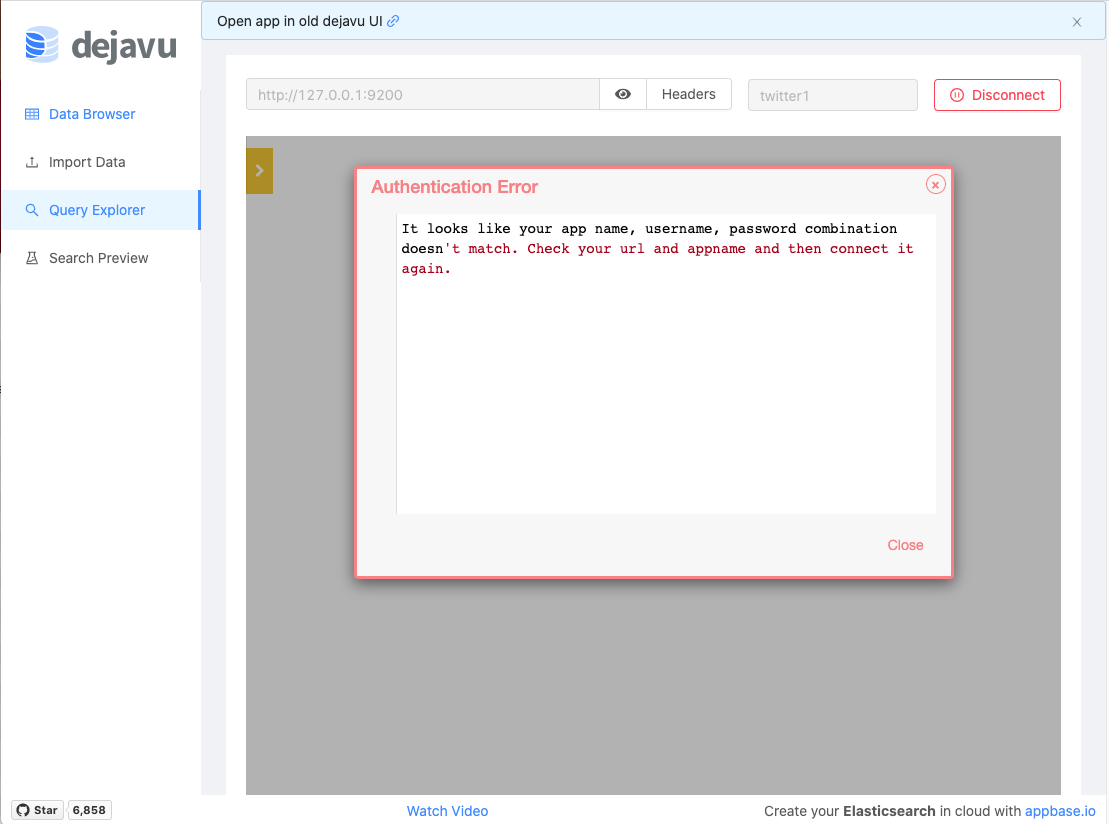

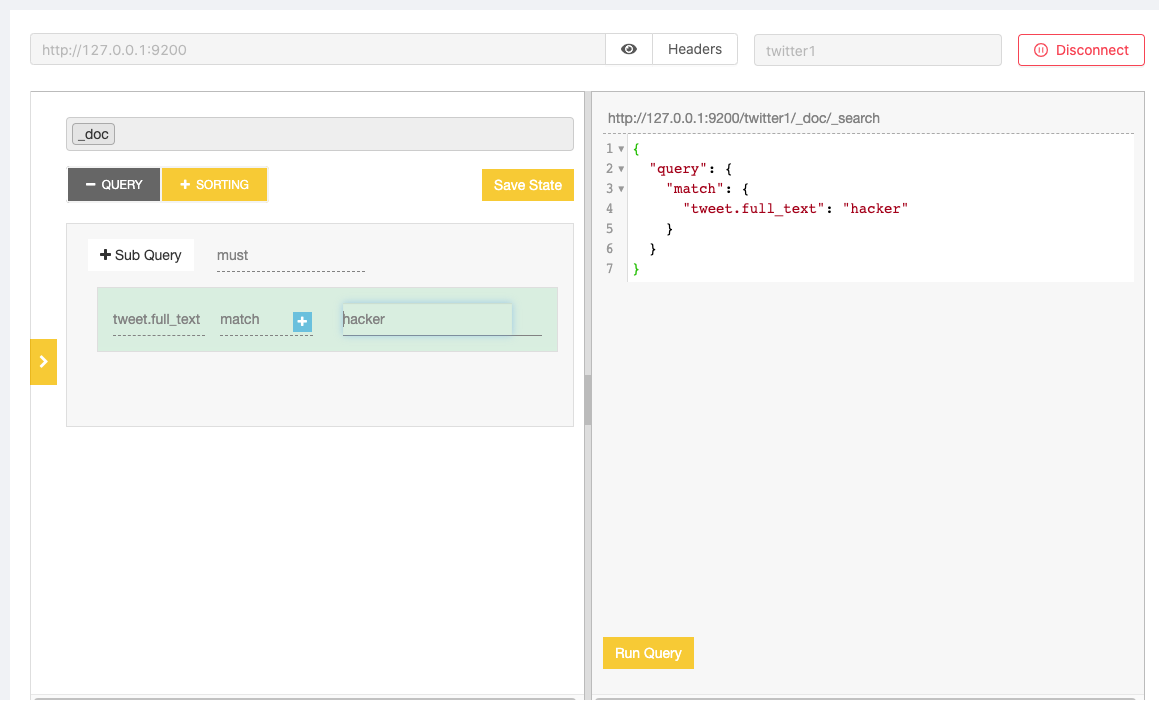

(if you don't do step 5, you will eventually see this error on the frontend in Query Explorer)

6. start ElasticSearch at the command line by typing "elasticsearch" (this is a non-persistent method and can be easily killed with ctrl+c/restarted)

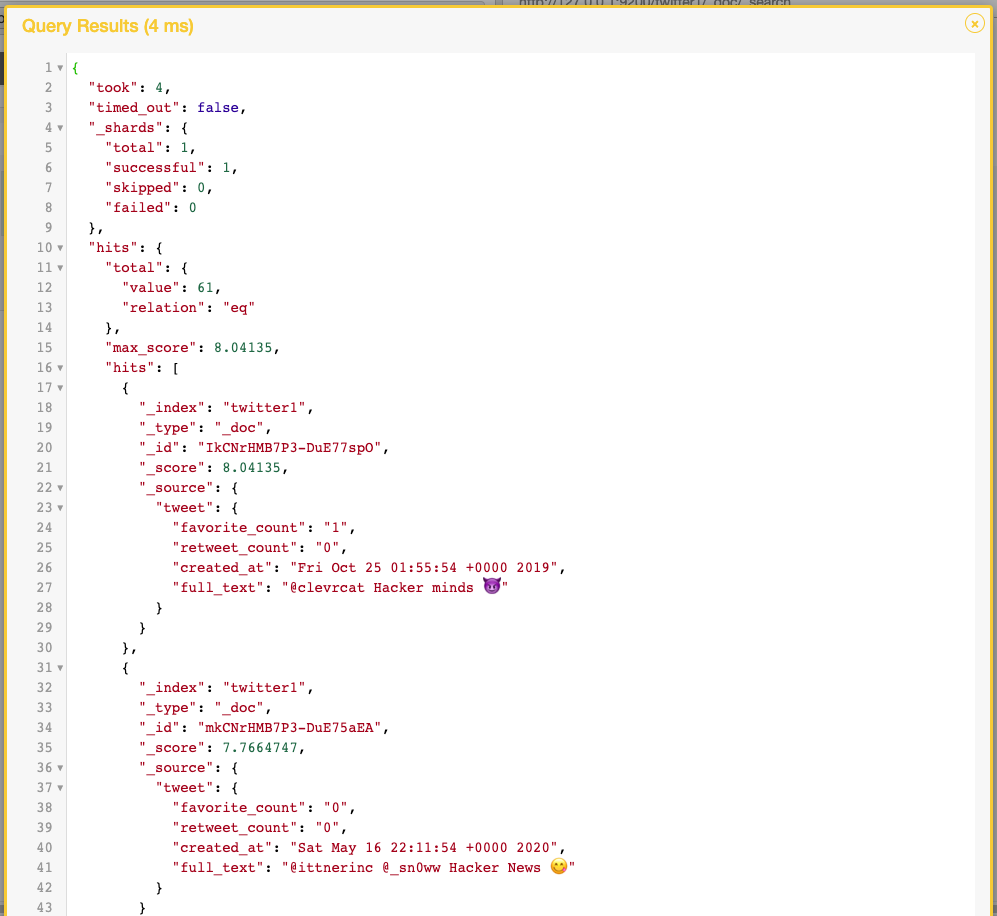

> you should see it running by browsing to http://127.0.0.1:9200

7. install Docker: https://www.docker.com/products/docker-desktop

8. at a new command prompt, type:

"docker run -p 1358:1358 -d appbaseio/dejavu"

"open http://localhost:1358/"

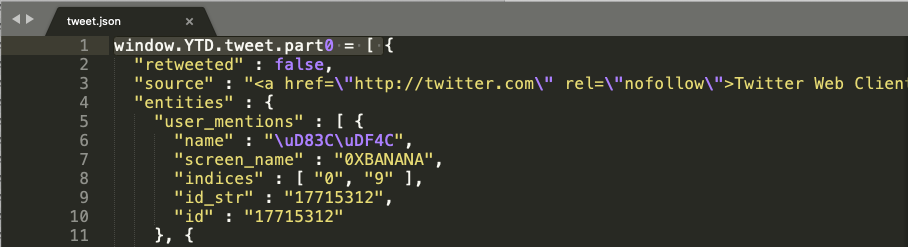

9. take your Twitter archive file and find tweet.js or tweet.json (different versions of Twitter's archive exporter over time have shown slight variations).

> modify the file header by removing this highlighted text

> save and close the file

> ensure the file extension is .json

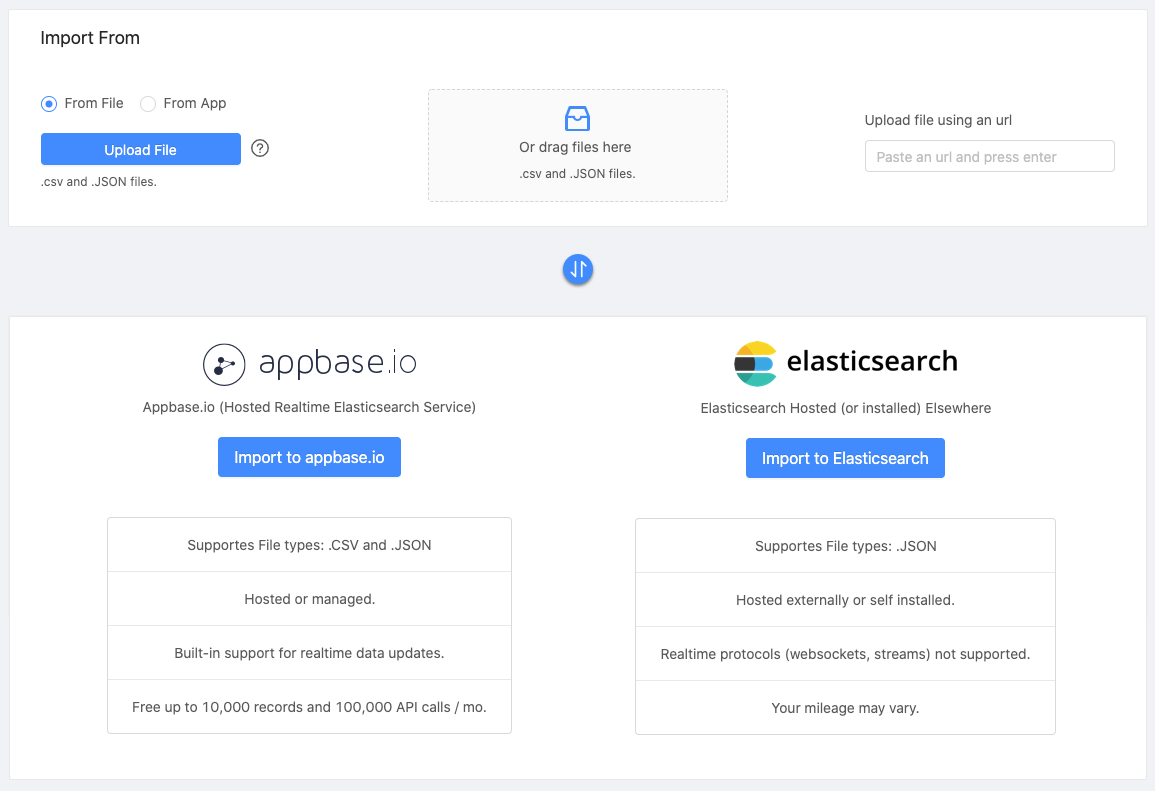
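
The header removal in step 9 can also be scripted instead of done by hand. Here is a minimal Python sketch, assuming the file begins with a JavaScript assignment (something like `window.YTD.tweet.part0 = `, though the exact prefix varies between archive versions) followed by a JSON array. The names `strip_js_header` and `convert` are made up for illustration.

```python
import json
from pathlib import Path


def strip_js_header(text: str) -> str:
    """Drop everything before the first '[' so only the JSON array remains."""
    start = text.find("[")
    if start == -1:
        raise ValueError("no JSON array found in input")
    body = text[start:].strip()
    # Sanity check: raises ValueError if what is left is not valid JSON.
    json.loads(body)
    return body


def convert(src: str, dst: str) -> int:
    """Read src (e.g. tweet.js), write cleaned JSON to dst, return record count."""
    body = strip_js_header(Path(src).read_text(encoding="utf-8"))
    Path(dst).write_text(body, encoding="utf-8")
    return len(json.loads(body))
```

Calling `convert("tweet.js", "tweet.json")` from the folder containing the archive file (paths are assumptions, adjust to your unpacked archive) writes the .json file and returns the number of tweets found.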

10. upload tweet data to Dejavu

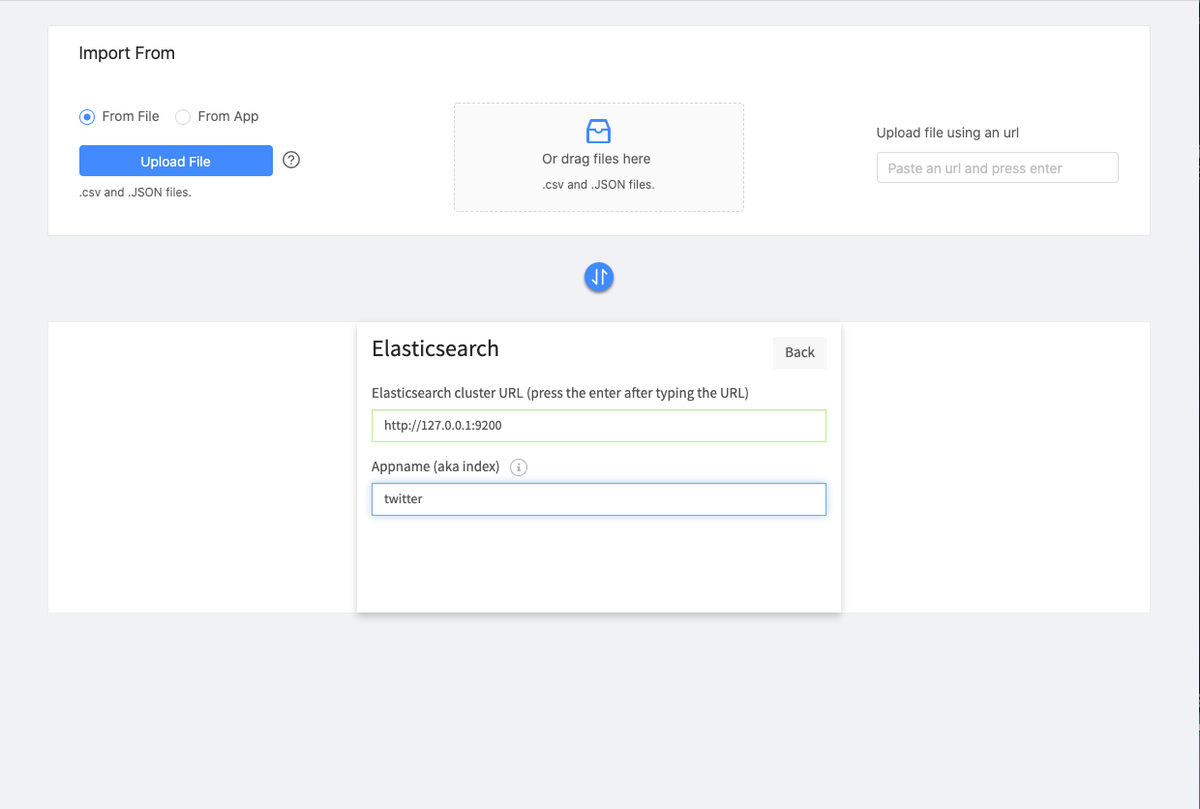

> select "import to ElasticSearch" and type in your local address as shown below

> use a descriptive and unique index name (arbitrary)

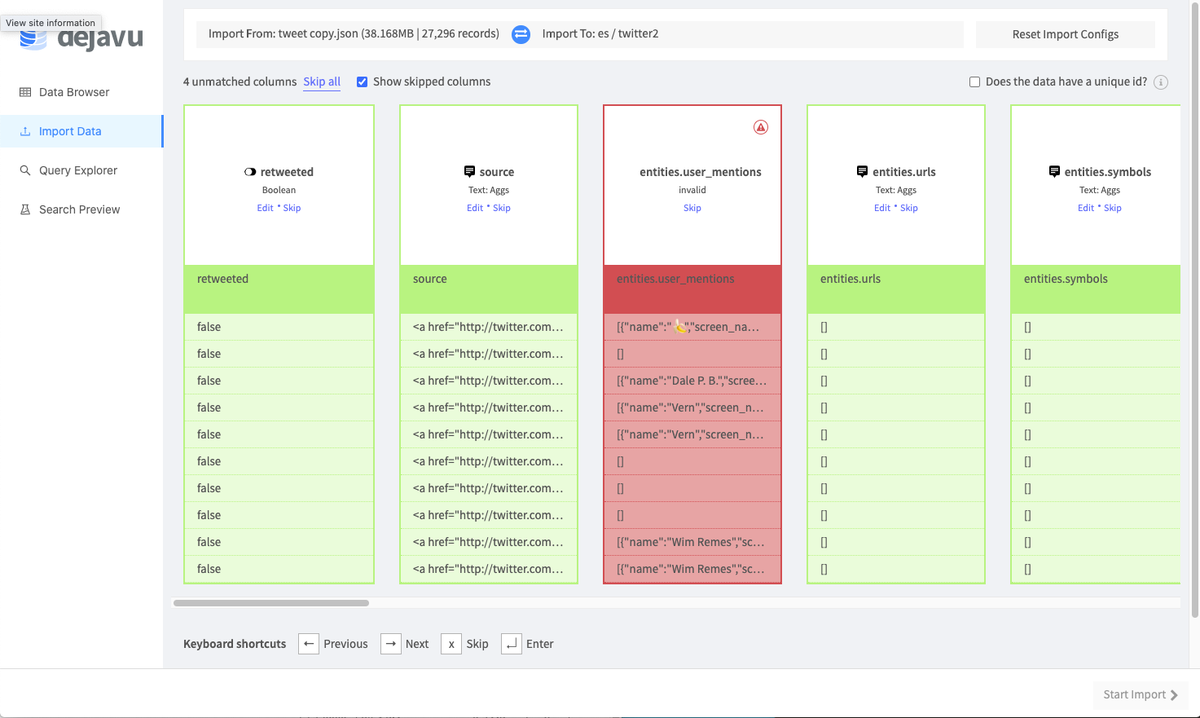

11. once your data format is right (you successfully modified the .json file to look like real JSON) and Dejavu has parsed it properly, you will see the number of records and will be asked to configure data mappings

12. in the data mapping wizard, you can tell ElasticSearch what type of data to expect in each field.

(if it throws an error when attempting to proceed, read carefully and try to understand why there is a mismatch between data type and the field you're trying to import)

> you should feel free to skip importing most fields if all you want is to search through the tweet.full_text
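
If the tweet text is all you need, one option is to trim the file before uploading it, so the mapping wizard has fewer fields to reconcile. This is a rough Python sketch, not something Dejavu requires. It assumes each record is either a tweet object or wraps one under a `tweet` key (as `tweet.full_text` suggests) and that an `id_str` field is present. `keep_text_only` is a hypothetical helper, not part of Dejavu or ElasticSearch.

```python
import json


def keep_text_only(records: list) -> list:
    """Reduce each record to its id and full_text, skipping records without text."""
    slim = []
    for record in records:
        # Assumption: some archive versions nest the tweet under a "tweet" key.
        tweet = record.get("tweet", record)
        text = tweet.get("full_text")
        if text is None:
            continue
        slim.append({"id_str": tweet.get("id_str"), "full_text": text})
    return slim
```

To use it, load the cleaned file with `json.load`, pass the list through `keep_text_only`, and write the result back out with `json.dump` before uploading.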
