A method that: 1) works for high-dimensional, structured inputs, 2) optimizes on low-dimensional latent spaces, 3) enables new data to be incorporated as they come – sounds useful for low-resource NLP. :-) A thread on a paper by @austinjtripp, @edaxberger, & @jmhernandez233.

0/

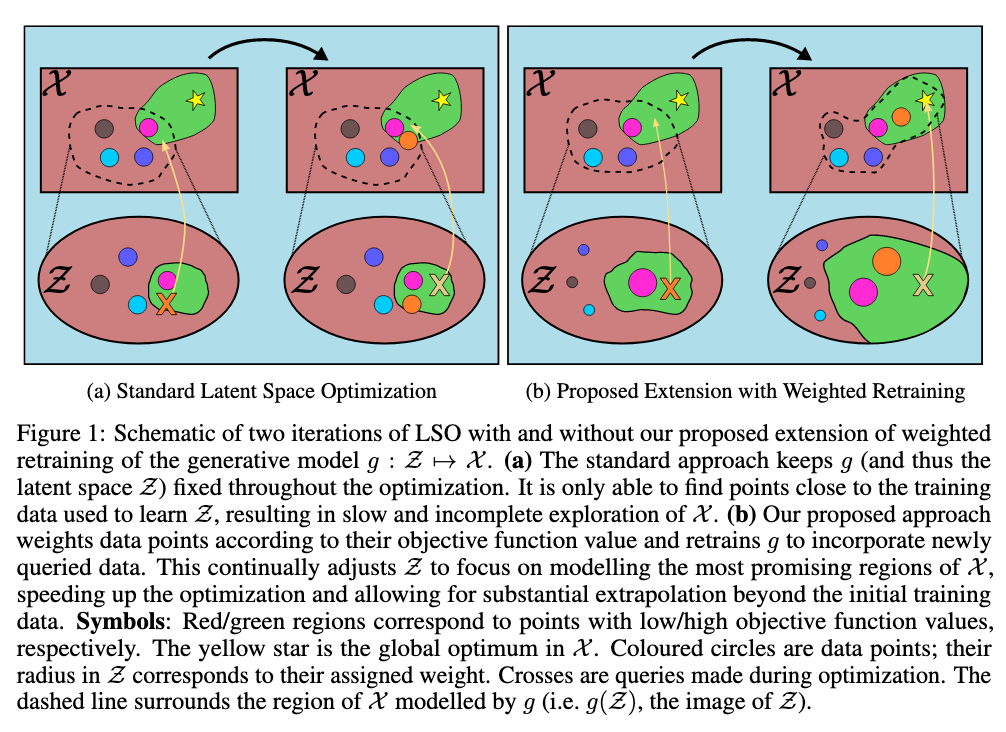

Must the training of generative models be decoupled from the optimization task? “Sample-Efficient Optimization in the Latent Space of DGMs via Weighted Retraining” by A. Tripp∗, E. Daxberger∗ & J.M. Hernández-Lobato argues that decoupling may prevent good solutions from being found.

1/

Tripp, et al.: address the challenge of generating solutions in high-dimensional, structured input spaces when evaluating the objective function is expensive, e.g. drug, molecule & material design.

Paper: https://arxiv.org/abs/2006.09191

Presented at #ICML2020 RealML Workshop.

2/

Tripp, et al.: Latent Space Optimization (LSO) has recently become a popular approach. A generative model mapping the original input space onto a low-dimensional continuous space is trained first. The objective function is then optimized over this learned latent space.

3/
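
In symbols, the two LSO stages can be sketched as follows. The notation is an assumption, not taken from the thread: $f$ is the expensive objective, $g$ is the decoder of the trained generative model, and $\mathcal{Z}$ is the learned latent space.

```latex
z^{*} = \arg\max_{z \in \mathcal{Z}} f\big(g(z)\big), \qquad x^{*} = g(z^{*})
```

The generative model is fitted first; only then is $f \circ g$ optimized over $\mathcal{Z}$, and the best latent point is decoded back into the original input space.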

Tripp, et al.: These 2 stages are usually decoupled from each other. In the latent space, a point’s relative volume is roughly determined by its frequency in the training data. However, occurring frequently does not necessarily mean scoring high wrt the objective function.

4/

Tripp, et al.: decoupling prevents solutions far from the training data from being found. They propose data weighting & periodic retraining, so that a higher fraction of the feasible region is devoted to modelling high-scoring points & substantial exploration beyond the initial data becomes possible.

5/

Tripp, et al.: weights should be strictly positive, chosen such that high-scoring points are weighted more & low-scoring points less. The authors assign each data point a weight roughly proportional to its reciprocal rank, with rank based on the data point’s score on the objective function.

6/
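
A minimal sketch of such a rank-based weighting, to make the idea concrete. The function name `rank_weights` and the smoothing parameter `k` are assumptions for illustration; the thread only says the weights are strictly positive and roughly proportional to reciprocal rank.

```python
import numpy as np


def rank_weights(scores, k=1e-3):
    """Return normalized weights roughly proportional to reciprocal rank.

    Rank 0 is the highest-scoring point. `k` is an assumed smoothing
    parameter: small k concentrates weight on the top points, large k
    pushes the weights towards uniform.
    """
    if k <= 0:
        raise ValueError("k must be positive so that every weight is finite")
    scores = np.asarray(scores, dtype=float)
    n = scores.size
    # Position of each point when sorted by descending score (0 = best).
    order = np.argsort(-scores)
    ranks = np.empty(n)
    ranks[order] = np.arange(n)
    # Strictly positive weights that shrink as rank grows.
    weights = 1.0 / (k * n + ranks)
    return weights / weights.sum()
```

For example, `rank_weights([0.1, 3.0, 1.5])` gives the second point the largest weight and the first point the smallest, while a very large `k` makes all three weights nearly equal.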

Tripp, et al.: weighting also enables efficient retraining of the generative model while avoiding catastrophic forgetting. Combined w/ data weighting, periodic retraining ensures that the latent space is always occupied by the most updated & relevant points for optimization.

7/
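
The weight-then-retrain loop can be sketched on a toy problem. This is not the authors' implementation: a weighted 1-D Gaussian stands in for the deep generative model, sampling from it stands in for optimizing in the latent space and decoding, and `objective`, `fit`, `k`, and all sizes are hypothetical.

```python
import numpy as np


def objective(x):
    # Hypothetical stand-in for an expensive black-box objective (best at x = 3).
    return -(x - 3.0) ** 2


def rank_weights(scores, k=0.1):
    # Weights roughly proportional to reciprocal rank (rank 0 = best score).
    n = scores.size
    ranks = np.empty(n)
    ranks[np.argsort(-scores)] = np.arange(n)
    weights = 1.0 / (k * n + ranks)
    return weights / weights.sum()


def fit(xs, weights):
    # "Retrain" the toy generative model: a weighted Gaussian fit to the data.
    mean = np.sum(weights * xs)
    std = np.sqrt(np.sum(weights * (xs - mean) ** 2)) + 1e-3
    return mean, std


def weighted_retraining(n_rounds=10, queries_per_round=5, seed=0):
    rng = np.random.default_rng(seed)
    xs = rng.normal(0.0, 1.0, size=50)  # initial data, away from the optimum
    ys = objective(xs)
    for _ in range(n_rounds):
        # Periodic retraining on all data seen so far, weighted by score rank.
        mean, std = fit(xs, rank_weights(ys))
        # Propose new points from the retrained model and evaluate them.
        new_xs = rng.normal(mean, std, size=queries_per_round)
        xs = np.concatenate([xs, new_xs])
        ys = np.concatenate([ys, objective(new_xs)])
    return xs, ys
```

Calling `xs, ys = weighted_retraining()` returns the initial data plus every queried point; because each round refits on the weighted data, newly found high-scoring points shift where the model places its mass in the next round.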

Tripp, et al.: weighted retraining substantially improves SOTA algorithms' sample-efficiency. E.g. in the arithmetic expression fitting task, MSE approaches zero; in the chemical design task, it reaches a score of 22.5 w/ only 0.5K samples, beating the best prev. score of 11.84 obtained w/ ~5K samples.

8/end

Coming up next: a thread in Bahasa Indonesia on this paper.
