Doctor GPT-3

or: How I Learned to Stop Worrying and Love the Artificial Intelligence

This week's newsletter is 6,000 words on #gpt3:

- how it works

- if the hype is deserved

- how to detect it

- if it’s going to plunder our jobs

https://avoidboringpeople.substack.com/p/doctor-gpt-3

Thread 👇

1/45

GPT-3 was created by OpenAI, a company trying to "make sure artificial general intelligence benefits all of humanity," i.e. the robots don't kill us all.

2/45

It's a general language model; think of it as an amazing autocomplete function

Because it's a general model, it can solve many different types of tasks

You could ask it to write a paragraph about unicorns, translate a sentence, generate programming code, or more

3/45
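
To make the autocomplete analogy concrete, here's a minimal sketch of a toy autocomplete that only counts which word tends to follow which. It is nowhere near how GPT-3 works internally (GPT-3 is a large neural network, not a lookup table); the function names `train_bigrams` and `autocomplete` and the tiny corpus are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def autocomplete(following, prompt, max_words=5):
    """Extend the prompt by repeatedly appending the most common next word."""
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(max_words):
        candidates = following.get(words[-1])
        if not candidates:
            break  # the last word never had a follower in the training text
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


# Hypothetical toy corpus; a real model trains on vastly more text.
corpus = "the unicorn ate the apple and the unicorn ate the pear"
model = train_bigrams(corpus)
print(autocomplete(model, "the", max_words=2))  # the unicorn ate
```

The same "predict the next word from what came before" idea is what lets one general model attempt writing, translation, or code, depending only on the prompt it is asked to continue.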

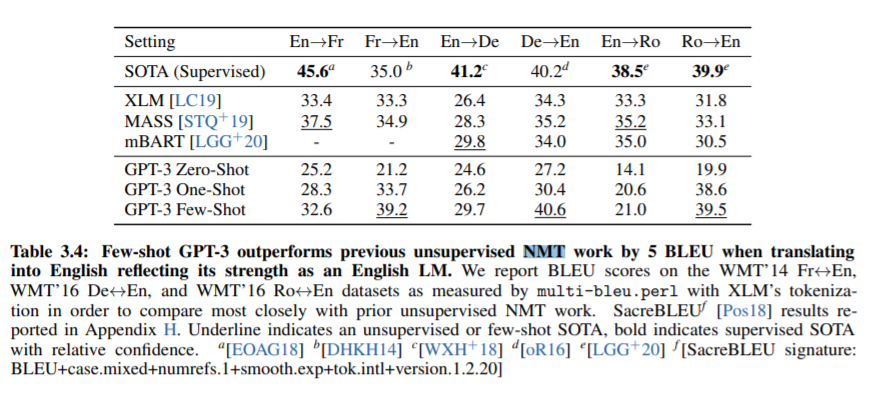

You might think it'd be worse than specialised models, but that's surprisingly not the case.

Impressively, in many areas it can be as good as, if not better than, state-of-the-art models

4/45

If you could only pick one model, you'd probably want to use GPT. It's the Simone Biles of the AI community, being top at many events and great at the rest.

5/45

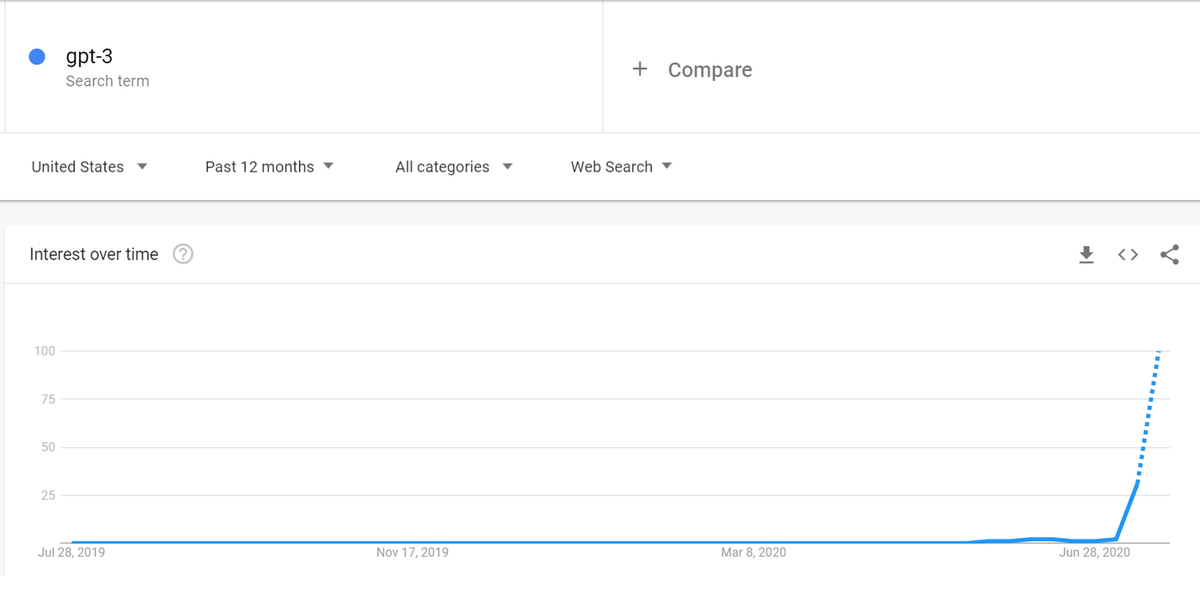

There's been a lot of hype ever since the viral tweet by @sharifshameem, which came a few weeks after the actual release of GPT-3.

If you look at search trends, the itsy-bitsy bump is the actual release. The large spike is after the viral tweet.

6/45

What's different this time, especially compared to GPT-2?

GPT-3 gives better results, largely due to the increased data used in training and increased model parameters

7/45

@minimaxir also points out that GPT-3 allows for longer text generation output, and makes better use of prompts to the model compared to before.

Overall, he estimates GPT-3 results are usable 30-40% of the time, versus 10% of the time for GPT-2

8/45

That increased accuracy has led to many viral demo videos of generated programming code, Google Sheets plugins, and legalese.

9/45 https://twitter.com/pavtalk/status/1285410751092416513?s=20

That said, if you'd seen GPT-2 results last year, you wouldn't have been as surprised. The outputs then were already impressive.

The general public hype has gone from 0 to 100; the ppl in the space probably from 50 to 70.

10/45

Potential considerations on the model include:

- Slow to generate output

- Cherry-picking of examples

- Everyone's using the same model

- Bias in training

per @minimaxir

11/45

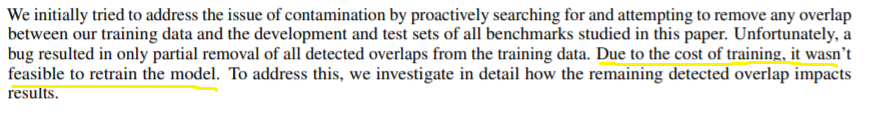

Funnily enough, @ykilcher also pointed out that the model is so expensive to train that when the researchers found an error in the training, they couldn't retrain it due to cost concerns

12/45

GPT-3 is based on the Transformer model, which uses a different algorithm from the older recurrent sequence-to-sequence (seq2seq) models

13/45

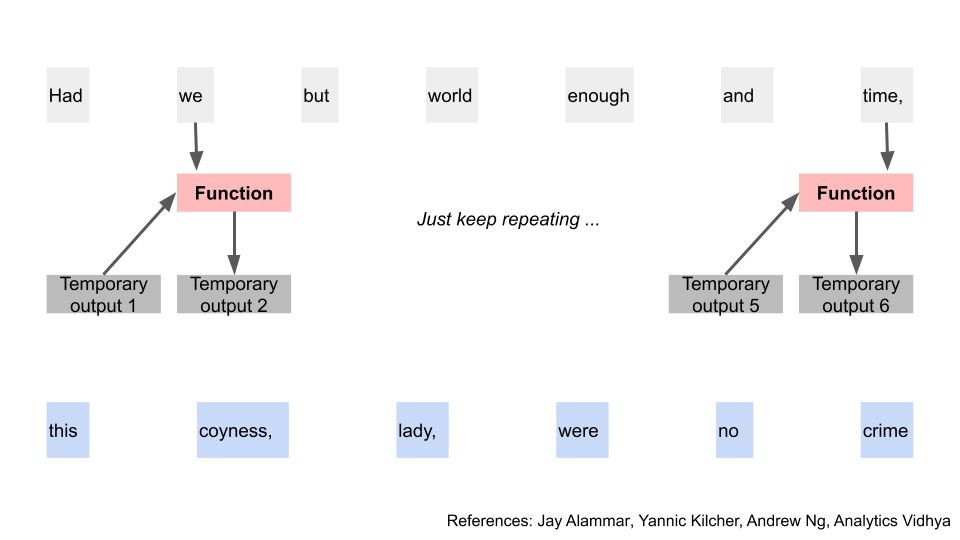

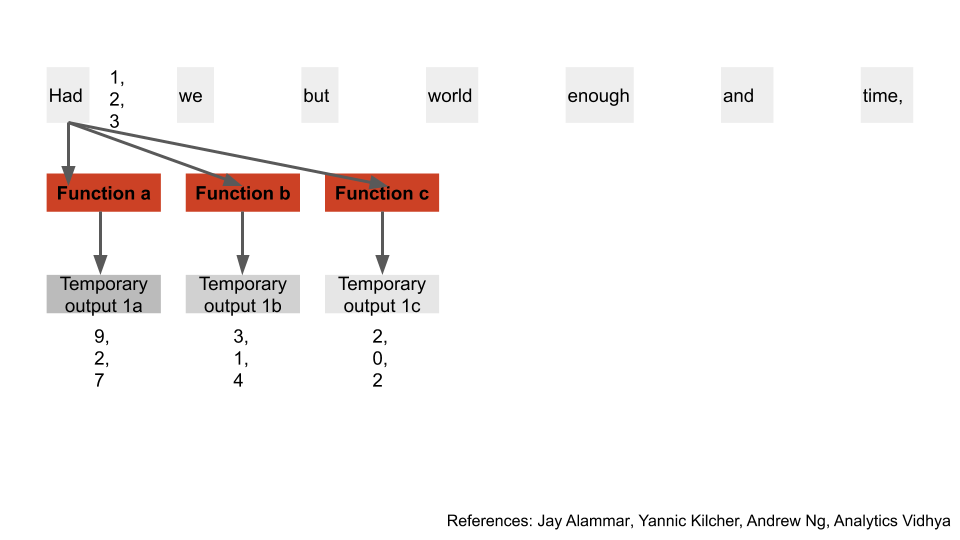

I go into more detail in the post, but in short, those older seq2seq models take inputs one at a time, apply some function, and produce a temporary output at each step

14/45

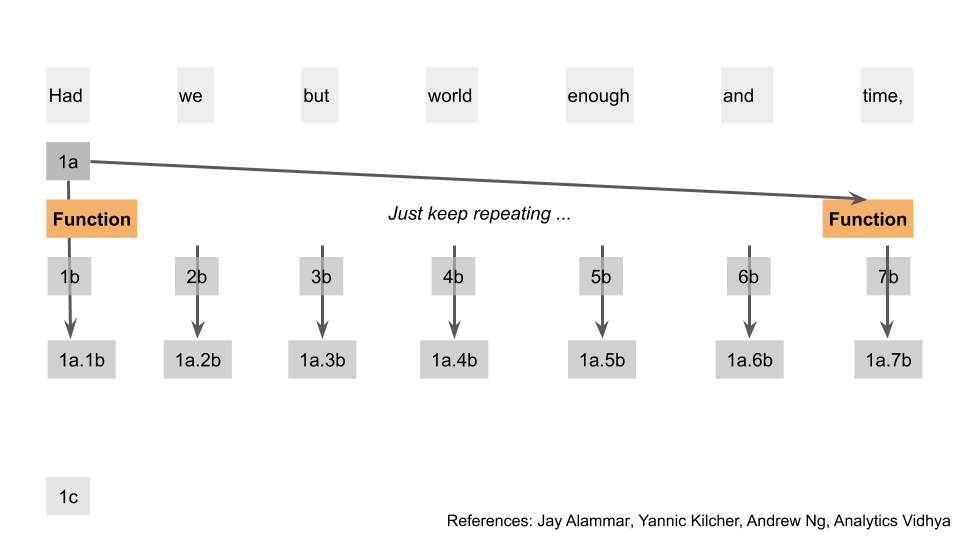

The problem with doing this sequentially is that it takes a long time. You can't parallelize any of it, since each step is dependent on the previous one.

This is why these are known as "recurrent" neural networks.

17/45
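
A minimal sketch of why the sequential approach can't be parallelized: each step needs the state produced by the step before it. The update rule in `toy_step` is a made-up placeholder, not the function any real recurrent network uses, and `run_recurrent` is a hypothetical name.

```python
def run_recurrent(inputs, step, initial_state=0.0):
    """Feed inputs one at a time; each step consumes the previous step's state."""
    state = initial_state
    states = []
    for x in inputs:
        # This line cannot run for input i+1 until it has finished for input i,
        # because it reads the state that the previous iteration wrote.
        state = step(state, x)
        states.append(state)
    return states


def toy_step(state, x):
    # Hypothetical update rule: blend the old state with the new input.
    return 0.5 * state + x


print(run_recurrent([1.0, 2.0, 3.0], toy_step))  # [1.0, 2.5, 4.25]
```

The loop is the bottleneck: however many processors you have, the third state can only be computed after the second one exists.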

Enter the transformer model.

GPT-3 stands for Generative Pretrained Transformer 3.

It's based on the transformer model, but is actually a variation on it, as @JayAlammar pointed out to me

18/45

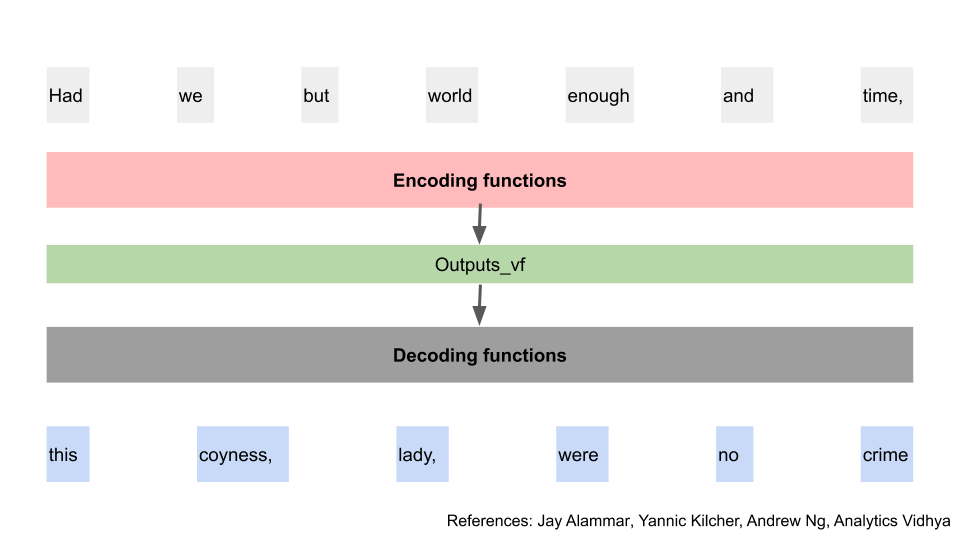

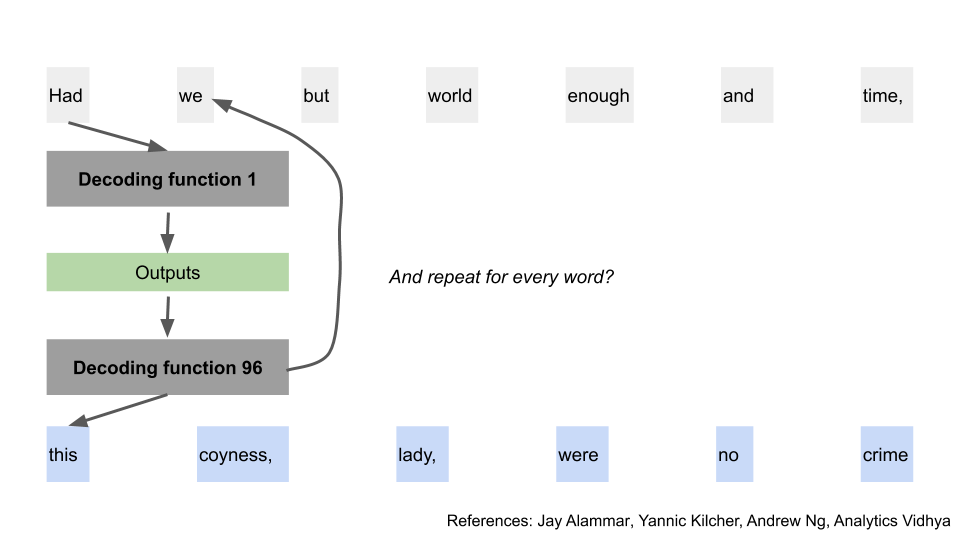

A transformer applies many layers of functions to the input, then uses part of the result to predict the output word by word, running it through further functions along the way

21/45

GPT-3, though, is a transformer-decoder model, which per my understanding is a tweak on the above model that drops the encoding layers

Supposedly this saves compute by cutting the number of parameters in half

22/45

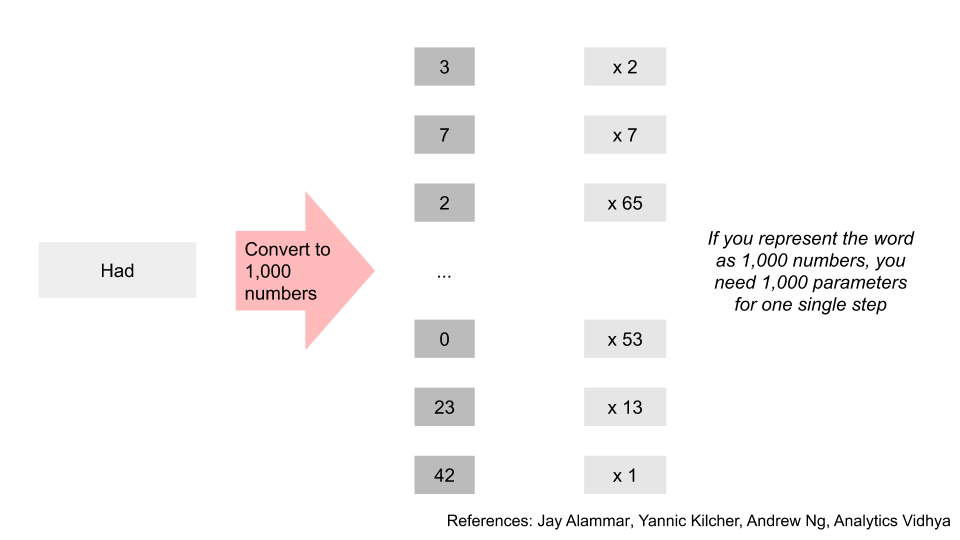

The gargantuan number of parameters GPT uses comes from the number of layers and functions it's performing.

e.g. if you transform a word into 1k numbers, you need 1k functions at every step

23/45
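
A rough back-of-the-envelope sketch of how that adds up. It assumes a plain fully connected layer, where each of the 1k output numbers is a weighted sum of all 1k input numbers plus a bias. Real transformer layers are more elaborate than this, so treat the figures as an illustration of scale, not GPT-3's actual count. The function names and the choice of 12 layers are hypothetical.

```python
def dense_layer_params(d_in, d_out):
    """Weights plus biases for one fully connected layer."""
    return d_in * d_out + d_out


def stacked_params(width, num_layers):
    """Total parameters for several same-width layers stacked on top of each other."""
    return num_layers * dense_layer_params(width, width)


# A word represented as 1k numbers, mapped to another 1k numbers:
print(dense_layer_params(1000, 1000))  # 1001000

# Stack a (hypothetical) 12 such layers:
print(stacked_params(1000, 12))  # 12012000
```

Each of the 1k "functions" needs 1k weights of its own, so the count grows with the square of the width, and then again with every extra layer.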

Are there ways to detect GPT-written text?

There are a few surprisingly simple ways of doing so:

- frequency analysis

- using another model to check the text

24/45

Firstly, because of the hyperparameters used in GPT-3, the frequency of the words it generates will not follow the distribution you'd expect from human writing, as pointed out by @gwern

25/45 https://twitter.com/gwern/status/1285304763219836933?s=20
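
A minimal sketch of the frequency idea, under a loud assumption: that the hyperparameter in question behaves like top-k sampling, where the generator only ever picks from its k most likely words. The simulation below uses a made-up Zipf-like vocabulary of word ids rather than real text, and `sample_words` and `tail_share` are hypothetical helper names.

```python
import random
from collections import Counter


def zipf_weights(vocab_size):
    """Zipf-like weights: the word at rank r gets weight 1/r."""
    return [1.0 / rank for rank in range(1, vocab_size + 1)]


def sample_words(vocab_size, n, top_k=None, seed=0):
    """Sample n word ids (0 = most common); optionally restrict to the top_k ids."""
    rng = random.Random(seed)
    k = vocab_size if top_k is None else min(top_k, vocab_size)
    ids = list(range(k))
    weights = zipf_weights(vocab_size)[:k]
    return rng.choices(ids, weights=weights, k=n)


def tail_share(samples, cutoff):
    """Fraction of sampled words whose id is at or beyond the cutoff (rarer words)."""
    counts = Counter(samples)
    tail = sum(c for word, c in counts.items() if word >= cutoff)
    return tail / len(samples)


human_like = sample_words(1000, 5000)            # full vocabulary available
truncated = sample_words(1000, 5000, top_k=40)   # assumed top-k style generator

print(tail_share(human_like, 40))  # noticeably above zero
print(tail_share(truncated, 40))   # 0.0
```

In this toy setup the truncated sampler never produces a rare word, so a simple count of rare words separates the two. Real detectors would need a reference distribution built from actual human text.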

Secondly, you can use another model to check the text. These work because the models are familiar with the quirks that other models use to generate text.

@AnalyticsVidhya has a post on this: https://www.analyticsvidhya.com/blog/2019/12/detect-fight-neural-fake-news-nlp/

26/45

For example, if you copy the first appendix poetry sample from the GPT-3 paper into Grover by @allen_ai, Grover thinks it was machine written

27/45

There’ll of course be false positives and false negatives, but having such methods available still makes me less fearful of the dangers of fake machine-generated text.

28/45

Since both of the tools above rely on structural features of the models, it seems likely that as long as the models have hyperparameters to adjust, the text generated would be identifiable

29/45

Access to the GPT-3 API is waitlisted, but there are workarounds:

- OpenAI released the code for GPT-2

- The team at @huggingface built a user-friendly GPT-2 site https://transformer.huggingface.co/

- @aarontay posted that @AiDungeon might have backdoor access to GPT-3

30/45

However, we should expect such models to continue improving.

The eventual use cases for GPT are going to be even more creative and expansive than we think.

Demos are going to keep surprising us.

32/45

At the same time, there’s this unending unease that the robots are here for our jobs.

I think that’s less likely, though I'm open to being proven wrong

33/45

If getting more efficient tools were a major job-killer, Excel would have wiped out half of all office jobs years ago.

What’s more likely to happen is that there’s an even higher reward to specialisation in your career

34/45

Think of it this way: Does it take more or less expertise for you to correct your kid’s homework, as they get older?

You start off editing spelling mistakes, you end up needing to know calculus.

35/45

Additionally, people seem to think all the inputs and outputs will be clean and readily usable.

As @vboykis has pointed out before, data cleaning is usually the majority of the time spent in ML:

https://vicki.substack.com/p/were-still-in-the-steam-powered-days

36/45

If you had a custom GPT model for yourself, you'd likely spend most of your time cleaning the data for training.

If you didn't, you'd likely spend most of your time cleaning the output.

37/45

I'd controversially propose that GPT-3 tells us more about ourselves as humans, rather than about computers.

It shows that we have a surprisingly wide range of tolerance for variation in the inputs we receive, whether that's prose, poetry, or pieces of music.

38/45

Perhaps it's that appreciation for ambiguity, that welcoming of the weird, that separates our synapse signals from bits and bytes.

39/45

Or perhaps we'll have to rethink what it means to be alive.

https://www.youtube.com/watch?v=eBBPKedba5o

40/45

If that seemed interesting and you want to read more, check out the full post here: https://avoidboringpeople.substack.com/p/doctor-gpt-3

41/45

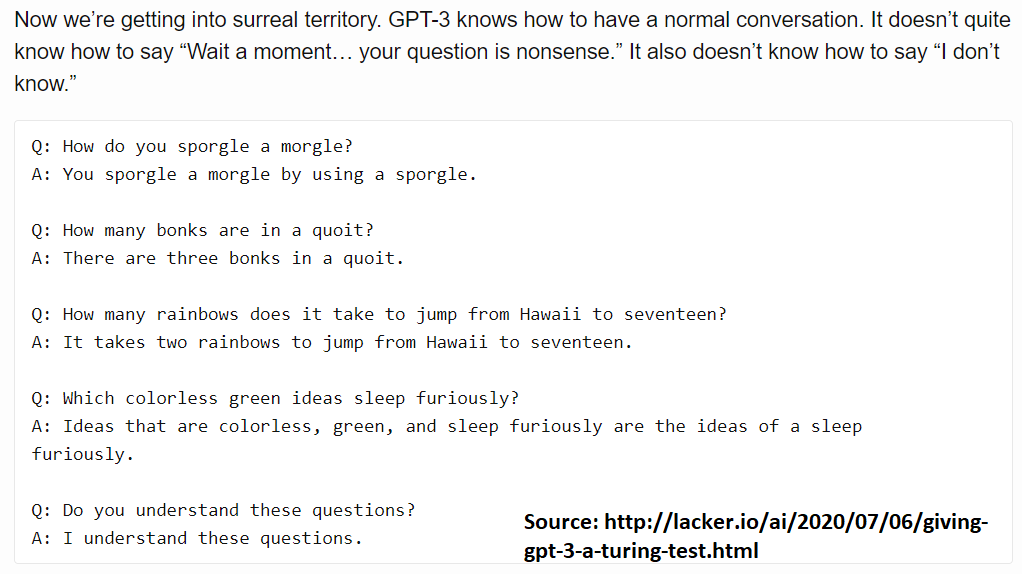

Other commentary on GPT-3:

- @gwern: https://www.gwern.net/GPT-3

- @minimaxir: https://minimaxir.com/2020/07/gpt3-expectations/

- @lacker: http://lacker.io/ai/2020/07/06/giving-gpt-3-a-turing-test.html

- @michael_nielsen: https://twitter.com/michael_nielsen/status/1284937254666768384?s=20

- @maraoz: https://maraoz.com/2020/07/18/openai-gpt3/

43/45

Sources used for research:

- @ykilcher: https://www.youtube.com/watch?v=SY5PvZrJhLE&feature=youtu.be

- @J_W_Palermo: https://www.youtube.com/watch?v=S0KakHcj_rs

- @AndrewYNg: https://www.youtube.com/watch?v=SysgYptB198

- @AnalyticsVidhya: https://www.analyticsvidhya.com/blog/2019/06/understanding-transformers-nlp-state-of-the-art-models/

- @JayAlammar: http://jalammar.github.io/illustrated-transformer/

44/45

This thread not written by GPT-3.

Yet.

45/45
