Since getting academic access, I’ve been thinking about GPT-3’s applications to grounded language understanding — e.g. for robotics and other embodied agents.

In doing so, I came up with a new demo:

Objects to Affordances: “what can I do with an object?”

cc @gdb

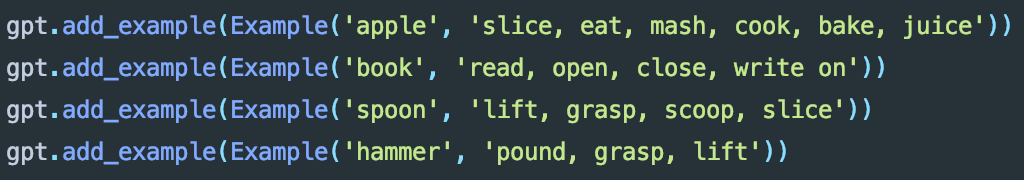

“Priming” the model was pretty straightforward: I just picked four random objects and wrote down the first few affordances that came to mind for each:

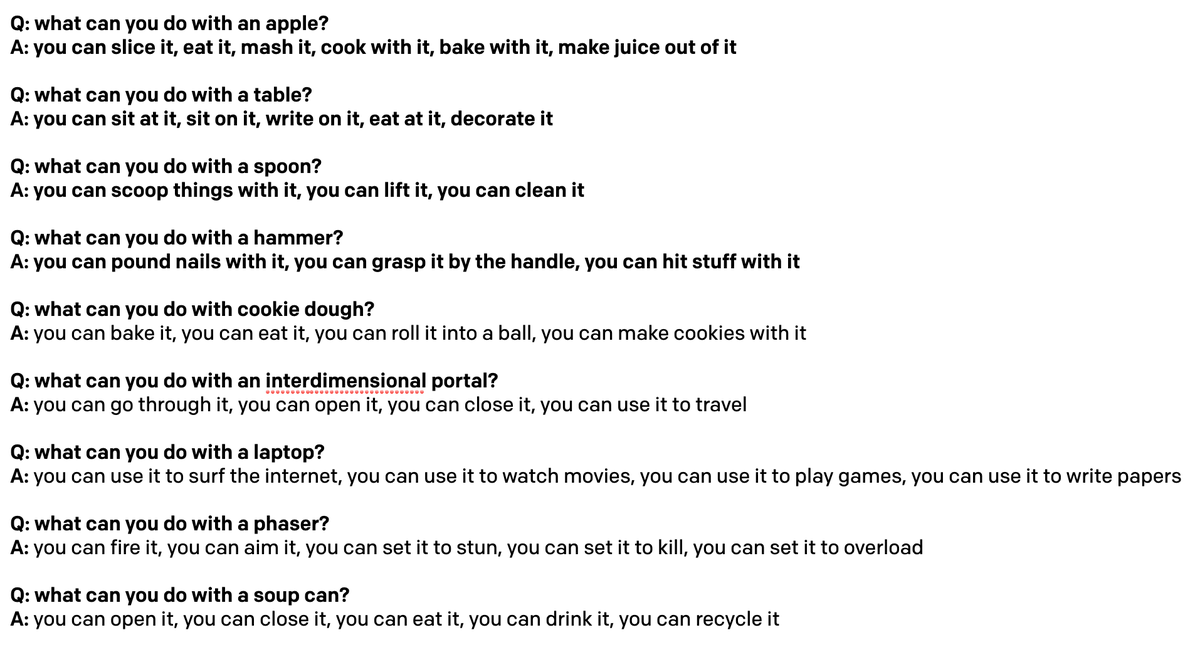
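
The exact prompt is only visible in the screenshot, but the general shape of a few-shot priming prompt can be sketched in Python. The objects, affordances, and the `Object:`/`Affordances:` labels below are hypothetical placeholders, not the ones used in the demo, and the sketch does not use the gpt3-sandbox API; it only builds the prompt string.

```python
from typing import List, Optional

# Hypothetical priming examples: the real objects and affordances
# from the demo are only shown in the screenshot above.
PRIMING_EXAMPLES = [
    ("apple", ["eat it", "throw it", "slice it"]),
    ("chair", ["sit on it", "stand on it", "move it"]),
    ("book", ["read it", "open it", "stack it"]),
    ("umbrella", ["open it", "hold it over your head", "close it"]),
]


def format_example(obj: str, affordances: Optional[List[str]] = None) -> str:
    """Render one object/affordance pair as a plain-text block."""
    line = f"Object: {obj}\nAffordances:"
    if affordances is None:
        return line  # leave the answer open for the model to complete
    return f"{line} {', '.join(affordances)}"


def build_prompt(query_obj: str, examples=PRIMING_EXAMPLES) -> str:
    """Concatenate the priming examples and end with the unanswered query."""
    blocks = [format_example(o, a) for o, a in examples]
    blocks.append(format_example(query_obj))
    return "\n\n".join(blocks)


print(build_prompt("soup can"))
```

The prompt deliberately ends right after `Affordances:` for the new object, so the model's most natural continuation is a list of affordances in the same style as the primed examples.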

Interestingly, @_eric_mitchell_ and I found that if you “prime” GPT-3 with more “natural” (less structured) text, you get less ambiguous action associations (e.g. “set it to stun” rather than just “stun”). Maybe this offers some insight into how to structure your “prompts”: the more “natural”, the better!
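
To make the structured-versus-natural distinction concrete, here is a hypothetical pair of renderings of the same priming example. The object and actions are invented for illustration, and neither function reproduces the exact formatting from the screenshots.

```python
from typing import List


def structured(obj: str, affordances: List[str]) -> str:
    """Terse, label-style format: 'obj: a | b | c'."""
    return f"{obj}: " + " | ".join(affordances)


def natural(obj: str, affordances: List[str]) -> str:
    """Sentence-style format that reads like ordinary English."""
    if len(affordances) == 1:
        actions = affordances[0]
    else:
        actions = ", ".join(affordances[:-1]) + ", or " + affordances[-1]
    return f"With a {obj}, you can {actions}."


# Hypothetical example object and actions.
example = ("flashlight", ["turn it on", "point it at something", "change its batteries"])
print(structured(*example))
print(natural(*example))
```

Going by the observation above, the sentence-style rendering is the one worth trying first, though that is an anecdotal finding rather than a measured result.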

The affordance prediction is fairly good (e.g. interdimensional portal), but it’s not perfect (e.g. soup can).

That being said, I think this has potential for text-based games ( @jaseweston, @mark_riedl), Nethack ( @_rockt, @egrefen), and, more importantly, robotic manipulation!

For example, I’d really love to see a robot (equipped with a robust object detection pipeline) use GPT-3 to figure out how to manipulate new objects!
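
One way such a pipeline might be wired up, sketched under heavy assumptions: the object detector is represented only by its list of output labels, `complete` is a placeholder for whatever function sends a prompt to GPT-3 and returns the completion text, and `fake_complete` is a hypothetical offline stand-in. The parsing step keeps only the first line of the completion, since a few-shot-primed model will often keep going and invent further examples.

```python
from typing import Callable, Dict, List


def parse_affordances(completion: str) -> List[str]:
    """Keep only the first line of the completion (the model may go on to
    invent further 'Object:' blocks) and split it on commas."""
    first_line = completion.strip().split("\n", 1)[0]
    return [a.strip() for a in first_line.split(",") if a.strip()]


def affordances_for_scene(
    detected_labels: List[str],
    complete: Callable[[str], str],
    prefix: str = "",
) -> Dict[str, List[str]]:
    """Query the language model once per detected object label.

    `prefix` would normally hold the priming examples (hypothetical here).
    """
    results: Dict[str, List[str]] = {}
    for label in detected_labels:
        prompt = f"{prefix}Object: {label}\nAffordances:"
        results[label] = parse_affordances(complete(prompt))
    return results


# Hypothetical stand-in for a real GPT-3 call so the sketch runs offline.
def fake_complete(prompt: str) -> str:
    return " grasp it, lift it, pour from it\n\nObject: spoon\nAffordances: stir"


print(affordances_for_scene(["mug"], fake_complete))
```

The returned affordances would still have to be mapped onto the robot's actual motion primitives, which this sketch does not attempt.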

Finally, I’d like to thank @sh_reya and @notsleepingturk for putting together an incredibly easy-to-use GitHub repo (https://github.com/shreyashankar/gpt3-sandbox) for building these interactive demos.

What an awesome resource!

And lastly, a big thank you to the team at @OpenAI for providing access!

Read on Twitter