Tech Twitter is hyped about GPT-3 today! Let me add a counter-perspective.

Here's my contrarian take on GPT-3 – it has little semantic understanding, it is nowhere close to AGI, and is basically a glorified $10M+ auto-complete software.

1/N

GPT-3-like models, as impressive as they may be, are nowhere close to AGI.

*No semantic understanding

*No causal reasoning

*No intuitive physics

*Poor generalization beyond the training set

*No "human-agent" like properties such as a Theory of Mind

2/N

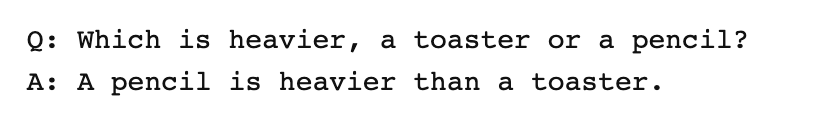

Here's an illustrative example – any 5yo would understand and answer this question correctly, but GPT-3 fails to do so.

(h/t @nabeelqu)

3/N

The above failure mode is even more jarring when you realize that GPT-3 was trained on *massive* datasets that span the Internet, at a cost of $10M+.

4/N

To summarize, GPT-3 is impressive in its own right. And yes, it has the potential to transform all things writing.

But it remains a glorified auto-complete, backed by an Internet-scale knowledge repo + basic NLP.

5/N

AGI remains a distant, lofty, and challenging goal. It will be solved eventually by fundamental breakthroughs, especially in areas such as causal reasoning and inference, not by throwing ever more data and compute at GPT-like models.

End/

p.s. https://twitter.com/ayushswrites/status/1284471233707417602

This should about settle if there was ever a debate here. The CEO of @OpenAI agrees. https://twitter.com/sama/status/1284922296348454913

This thread blew up! So, I completed and published my thoughts into a Medium article here: https://link.medium.com/bPvR1JA7g8
