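📢 Are you an Adversarial ML researcher attacking ML systems? There may be legal risks, depending on the attack and the US state where the case is brought. #SCOTUS may help us out tho

Paper @icmlconf with @jon_penney @schneierblog & @KendraSerra: https://arxiv.org/abs/2006.16179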

Blog: https://medium.com/berkman-klein-center/legal-risks-of-adversarial-machine-learning-research-b2e0b8e106ee

Part I: Why should you care?

Adversarial ML researchers are increasingly pointing out vulnerabilities in real-world ML systems.

a) For instance, @faizulla_boy showed how @Facebook's ad system is vulnerable to microtargeting - https://arxiv.org/pdf/1803.10099.pdf

1/

b) @moo_hax showed that the ML model in @proofpoint's email protection system is vulnerable to evasion, leading to the first CVE - https://twitter.com/ram_ssk/status/1246868317681225728

c) Researchers showed how ML models hosted by Microsoft, Google, IBM, and Clarifai can be replicated - https://www.ndss-symposium.org/ndss-paper/cloudleak-large-scale-deep-learning-models-stealing-through-adversarial-examples/

2/

Part II: CFAA as a Muzzle for Security Research

a) Studying or testing the security of any operational system potentially runs afoul of the Computer Fraud and Abuse Act (CFAA), the primary federal statute that creates liability for hacking.

3/

b) Scholars @OrinKerr @k8em0 @adamshostack @ashk4n @tarah @alexstamos have all argued the CFAA — with its rigid requirements and heavy penalties — has a chilling effect on security research.

But what about adversarial ML research? Is attacking ML systems any different?

4/

c) We think the CFAA applies to attacking ML systems. Others have argued the same (see @rcalo et al. - https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3150530)

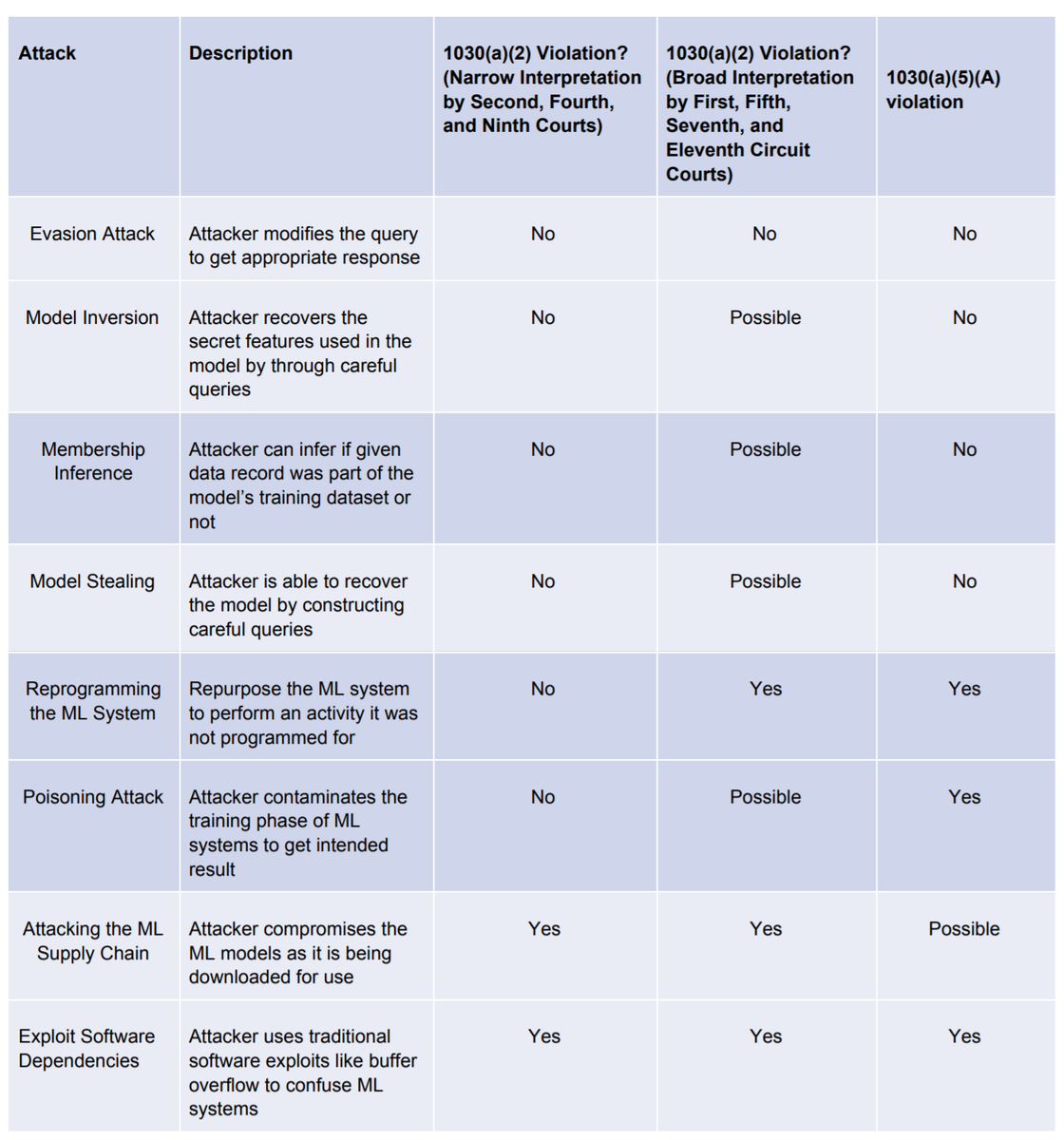

In particular, we focus on two parts of the CFAA:

5/

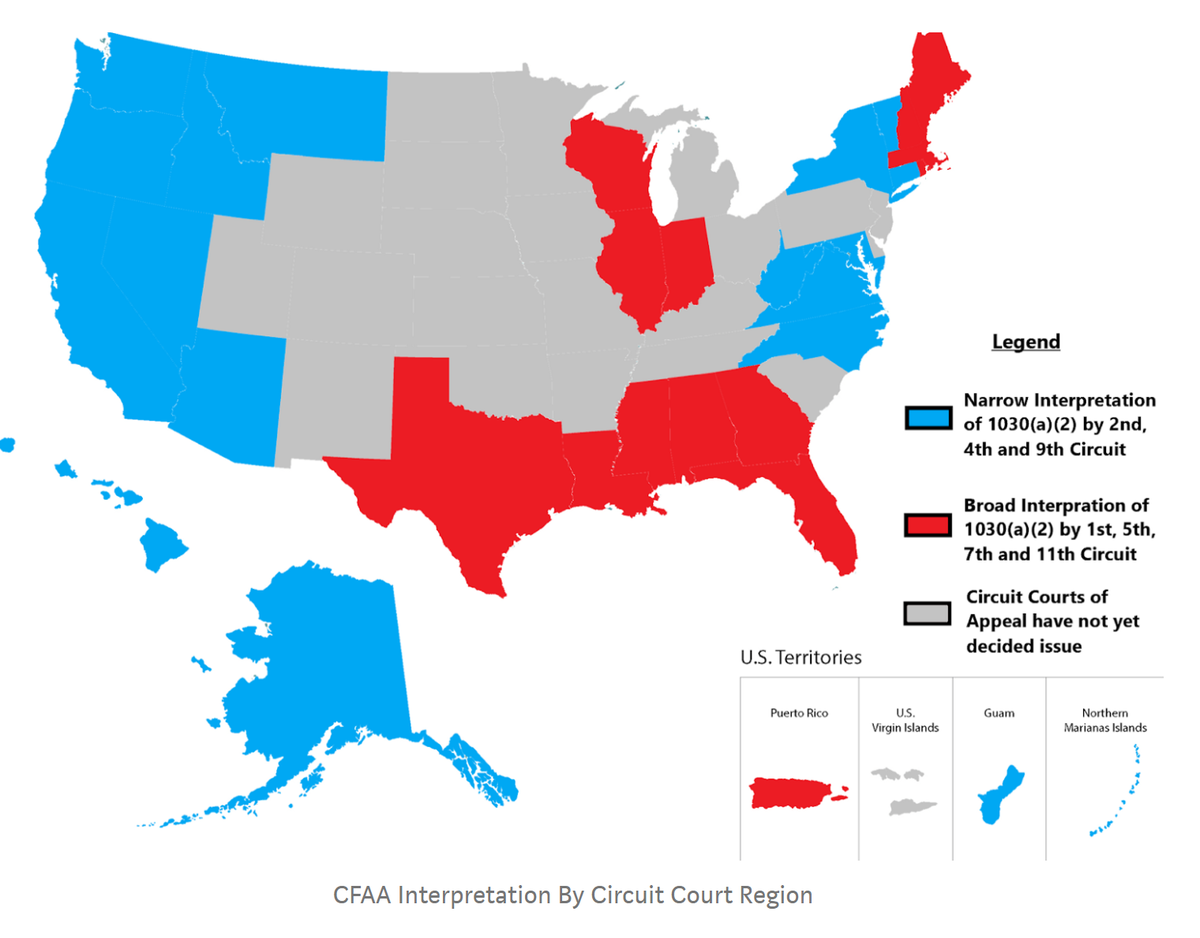

d) First, intentionally accessing a computer “without authorization” or in a way that “exceeds authorized access” and, as a result, obtaining “any information” from a “protected computer” (section 1030(a)(2)(C)).

This landscape is complex and confusing given the current circuit split.

6/

e) Second, intentionally causing “damage” to a “protected computer” without authorization by “knowingly” transmitting a “program, information, code, or command” (section 1030(a)(5)(A))

7/

Part III: Set up for analysis

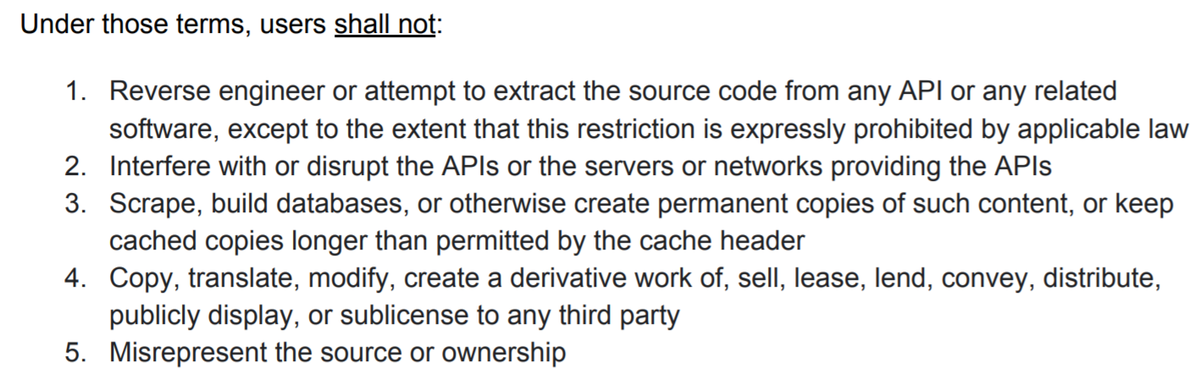

a) We assumed that the adversary is doing a black box ML attack on a system that is governed by ToS very similar to @Google's API Terms of Service - https://developers.google.com/terms

8/

With these ToS in mind, we analyzed the possible legal implications of black box attacks...

-- model stealing (@florian_tramer)

-- model inversion and membership inference (@jhasomesh)

-- reprogramming neural nets

-- attacking the ML supply chain (@moyix)

-- exploiting software dependencies

9/

...and it is complicated (See table in 1st tweet)

b) Let's take model stealing, where the attacker recovers the model by sending specially crafted queries and observing the responses.

10/
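To make the "queries in, responses out" point concrete, here is a minimal toy sketch of model stealing. It is not taken from the paper, and it is far simpler than the attacks on hosted models cited earlier in this thread. It assumes the victim is a logistic regression model that returns a confidence score for each query, and every name in it (`make_victim`, `predict_proba`, `steal_logistic_model`) is hypothetical. The attacker never touches the secret parameters: they send an all-zeros query and one query per feature, invert the sigmoid, and read off the bias and weights.

```python
import math
import random


def make_victim(n_features=4, seed=0):
    """Hypothetical stand-in for a hosted model: logistic regression
    with secret weights, exposed only through predict_proba."""
    rng = random.Random(seed)
    weights = [rng.uniform(-2, 2) for _ in range(n_features)]
    bias = rng.uniform(-2, 2)

    def predict_proba(x):
        # The confidence score is the only thing the attacker observes.
        z = sum(w * xi for w, xi in zip(weights, x)) + bias
        return 1.0 / (1.0 + math.exp(-z))

    return predict_proba, weights, bias


def logit(p):
    """Inverse of the sigmoid: maps a confidence back to w.x + b."""
    return math.log(p / (1.0 - p))


def steal_logistic_model(query, n_features):
    """Recover weights and bias using n_features + 1 crafted queries."""
    # The all-zeros query isolates the bias term.
    stolen_bias = logit(query([0.0] * n_features))
    stolen_weights = []
    for i in range(n_features):
        # A unit-vector query isolates weight i (plus the known bias).
        probe = [0.0] * n_features
        probe[i] = 1.0
        stolen_weights.append(logit(query(probe)) - stolen_bias)
    return stolen_weights, stolen_bias


predict_proba, true_weights, true_bias = make_victim()
stolen_weights, stolen_bias = steal_logistic_model(predict_proba, n_features=4)
max_error = max(abs(s - t) for s, t in zip(stolen_weights, true_weights))
print("largest weight error:", max_error)
```

Note that the sketch breaks no password, access control, or other code-based barrier. It only calls the prediction function the way any ordinary user could.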

With respect to section 1030(a)(2), on a narrow interpretation (a la 9th Cir) there is likely no violation for this attack because no technological access barrier or code-based restriction is circumvented. Queries are merely sent and inferences made based on observed responses.

11/

BUT the 11th Circuit may disagree! On the broader interpretation of section 1030(a)(2), the outcome will turn on how broadly a court interprets the relevant TOS governing the ML system and the insider user.

This is where I learned that where the lawsuit is brought matters....

12/

Part IV: Way Forward - Pray to the #SCOTUS gods

Van Buren v. US is currently in front of #SCOTUS, and should the Court agree with the 9th Cir's narrow interpretation, it may mean good news not only for security researchers but also for Adversarial ML researchers, argues the brilliant @jon_penney

13/

a) If Adversarial ML researchers and industry actors cannot rely on expansive TOSs to deter certain forms of adversarial attacks, it will provide a powerful incentive to develop more robust technological and code-based protections.

14/

b) And with a narrower construction of the CFAA, Adversarial ML security researchers are more likely to conduct tests and other exploratory work on ML systems, again leading to better security in the long term.

15/
