A figure in a recent paper by @talia_konkle and Alvarez (http://dx.doi.org/10.1101/2020.06.15.153247) reopened an old question for me. The figure shows that an AlexNet architecture with group normalization (GN) has representations more similar to the brain's than one with batch normalization (BN), suggesting GN as an important computation for generating brain-like representations.

Does this observation have anything to do with divisive normalization, as suggested by neuroscientists in the 90s (review: https://www.nature.com/articles/nrn3136)?

I came across a nice paper by Richard Zemel et al. (https://arxiv.org/abs/1611.04520) that finally clarified things for me.

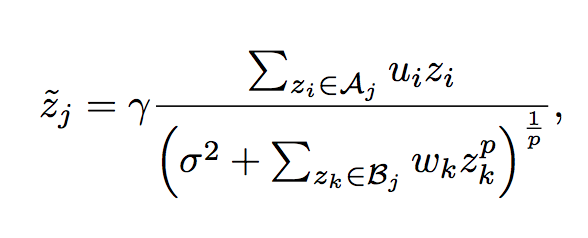

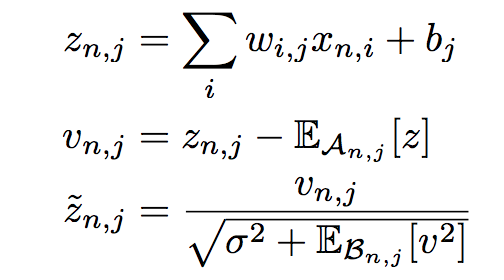

The most important contribution of Zemel et al. is the unified formulation of all types of normalization, including divisive normalization (DN) from neuroscience.

What do different normalizations do?

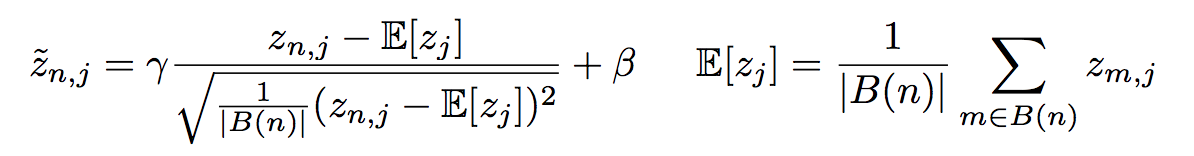

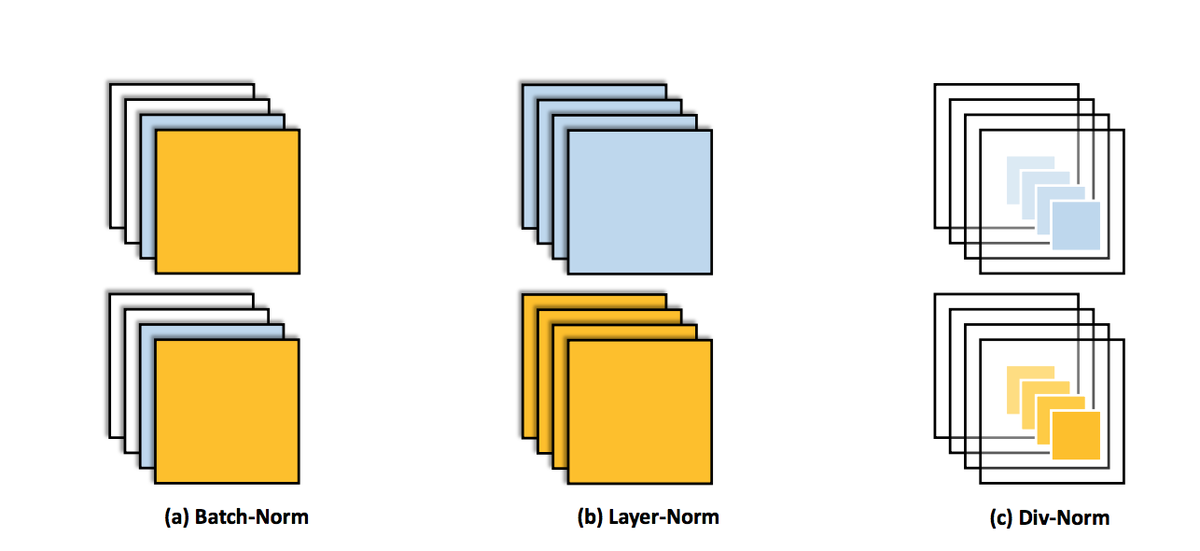

Batch norm (BN): normalization across all the examples in a batch.

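Layer norm (LN): normalization across all the units within a layer, separately for each example.

Divisive norm (DN): normalization across the units within a layer, but only those within a certain distance of each unit (set by the fields A and B for unit j).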

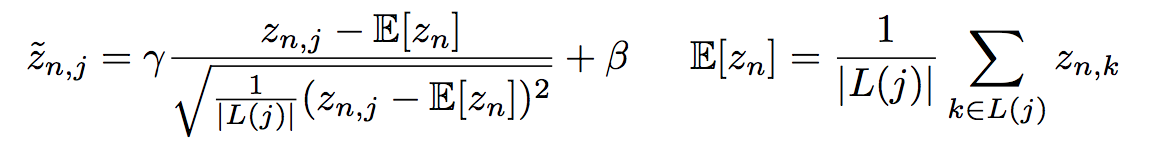

Their formulation unifies the three normalization types (BN, LN and DN), depending on the choice of the fields A and B (the summation and suppression fields; see Table 1 in the paper).
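
To make that concrete, here is a minimal Python sketch of how I read the unified view: centre each unit on the mean over its summation field, then divide by the root-mean-square over its suppression field. The function name `unified_norm`, the field callbacks and the toy activations are my own assumptions for illustration, not code or notation from the paper.

```python
import math


def mean(values):
    values = list(values)
    return sum(values) / len(values)


def unified_norm(z, summation_field, suppression_field, sigma=0.0):
    """Normalize activations z[n][j] (example n, unit j).

    Each field maps (n, j) to the list of (example, unit) index pairs that
    unit j of example n is normalized over. Hypothetical interface.
    """
    rows, cols = range(len(z)), range(len(z[0]))
    # Step 1: centre each unit on the mean over its summation field.
    v = [[z[n][j] - mean(z[m][i] for m, i in summation_field(n, j))
          for j in cols] for n in rows]
    # Step 2: divide by the RMS of the centred values over its suppression field.
    return [[v[n][j] / math.sqrt(
                sigma ** 2
                + mean(v[m][i] ** 2 for m, i in suppression_field(n, j)))
             for j in cols] for n in rows]


# Toy batch: 2 examples x 4 units (made-up numbers).
z = [[1.0, 2.0, 3.0, 6.0],
     [3.0, 4.0, 5.0, 2.0]]
N, H = len(z), len(z[0])


def batch_field(n, j):
    # Batch norm: the same unit j in every example of the batch.
    return [(m, j) for m in range(N)]


def layer_field(n, j):
    # Layer norm: every unit of the same example n.
    return [(n, i) for i in range(H)]


bn = unified_norm(z, batch_field, batch_field)
ln = unified_norm(z, layer_field, layer_field)
print(bn)
print(ln)
```

Only the two field functions change between BN and LN; the normalization itself is the same. With `sigma=0.0` a field whose values are all identical would divide by zero, so real implementations keep a small positive constant there.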

In their comparisons, layer norm (LN) is the closest to divisive normalization (DN). The difference is that DN normalizes every unit's activation across a local summation/suppression neighbourhood of that unit, whereas LN uses the whole layer.
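
The LN versus DN difference is easy to see in a small Python sketch. It assumes a one-dimensional neighbourhood over unit index with a hypothetical `radius` parameter; in a real convolutional layer the neighbourhood would span spatial positions and feature channels, so treat this as an illustration rather than the paper's implementation.

```python
import math


def neighbourhood(j, size, radius):
    # Indices of the units within `radius` of unit j, clipped at the layer edges.
    return range(max(0, j - radius), min(size, j + radius + 1))


def divisive_norm(z, radius=1, sigma=1.0):
    """Normalize each unit of one example over its local neighbourhood."""
    size = len(z)
    # Centre each unit on the mean of its own neighbourhood.
    v = []
    for j in range(size):
        idx = neighbourhood(j, size, radius)
        v.append(z[j] - sum(z[i] for i in idx) / len(idx))
    # Divide by the local RMS of the centred values, smoothed by sigma.
    out = []
    for j in range(size):
        idx = neighbourhood(j, size, radius)
        energy = sum(v[i] ** 2 for i in idx) / len(idx)
        out.append(v[j] / math.sqrt(sigma ** 2 + energy))
    return out


z = [1.0, 2.0, 3.0, 6.0]  # one example, four units (made-up numbers)
local = divisive_norm(z, radius=1, sigma=1.0)
# With a neighbourhood covering the whole layer and sigma = 0,
# the same function reduces to plain layer normalization.
whole_layer = divisive_norm(z, radius=len(z), sigma=0.0)
print(local)
print(whole_layer)
```

Growing `radius` until it covers the layer turns this DN sketch into LN, which is one way to see why the two sit so close together.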

Group normalization (GN) is not discussed in the paper, simply because Zemel et al.'s paper was published before GN was introduced.

In GN, the channels are first divided into a preset number of groups, and the normalization is done across the units within each group.
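
As a rough sketch of that grouping in Python: the example below assumes one activation per channel and contiguous groups. Actual GN also pools over the spatial positions of each channel in a group and adds a learnable scale and shift, both of which are left out here; `group_norm` and `eps` are my own names for illustration.

```python
import math


def group_norm(z, num_groups, eps=1e-5):
    """Normalize one example's channel activations within contiguous groups."""
    channels = len(z)
    if channels % num_groups != 0:
        raise ValueError("number of channels must be divisible by num_groups")
    size = channels // num_groups
    out = []
    for start in range(0, channels, size):
        group = z[start:start + size]
        mu = sum(group) / size
        var = sum((x - mu) ** 2 for x in group) / size
        # Each unit is normalized only by the statistics of its own group.
        out.extend((x - mu) / math.sqrt(var + eps) for x in group)
    return out


z = [1.0, 2.0, 3.0, 6.0]  # one example, four channels (made-up numbers)
gn2 = group_norm(z, num_groups=2)  # groups: [1, 2] and [3, 6]
gn1 = group_norm(z, num_groups=1)  # a single group behaves like layer norm
print(gn2)
print(gn1)
```

Each group acts as a fixed, non-overlapping normalization pool, whereas a DN neighbourhood is centred on each unit.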

With this definition, GN is even more similar to DN than LN is, because each unit is normalized over a subset of the layer rather than the whole of it.

If we think of GN as a simplified version of DN, the good performance of the AlexNet+GN model in Konkle and Alvarez's paper is consistent with all the previous studies on the importance of DN in the visual system.

Another interesting preprint on the correspondence between normalization strategies in artificial and biological neural networks: https://www.biorxiv.org/content/10.1101/2020.07.17.197640v1
