New preprint: "Analytic reproducibility in articles receiving open data badges at Psychological Science: An observational study" with @elManuBohn @macdonaldst @emilyhembacher @MicheleNuijten @peloqube @BennyDemayo @brialong @EricaJYoon @mcxfrank https://doi.org/10.31222/osf.io/h35wt 1/13

RATIONALE: Analytic reproducibility should be a minimum standard for any scientific report: if you repeat the original analyses on the original data, you should get the original results. Do Psychological Science articles with open data badges meet this standard? 2/13

METHOD: For 25 Psych Sci articles with available & reusable data (according to https://journals.plos.org/plosbiology/article?id=10.1371/journal.pbio.1002456), we attempted to repeat a subset of the original analyses. When a reproduced value differed from the originally reported value by >10%, we considered it non-reproducible & contacted the original authors for their input. 3/13

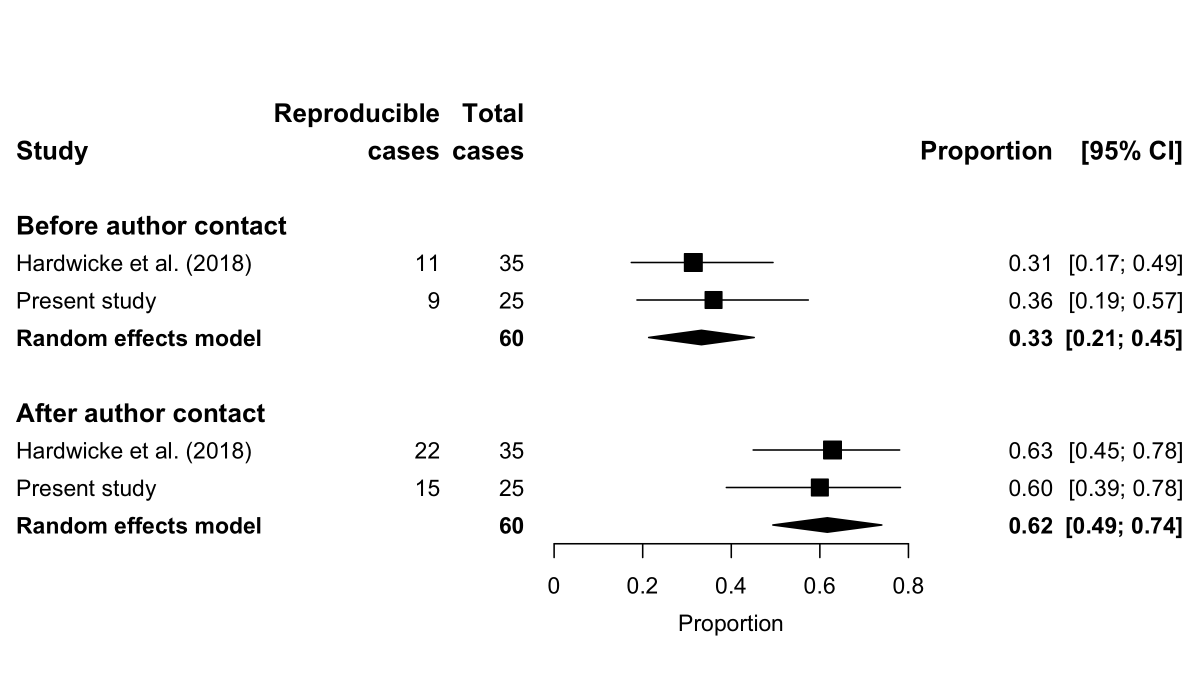
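As a rough illustration of the >10% criterion, the sketch below computes the discrepancy between a reproduced and an originally reported value and flags it when it exceeds 10%. It assumes the discrepancy is measured relative to the original value, |reproduced - original| / |original|; the thread does not spell out the formula, and the function names and example values are hypothetical.

```python
def percent_error(original: float, reproduced: float) -> float:
    """Discrepancy between a reproduced and an original value, in percent.

    Assumption: the discrepancy is measured relative to the original value.
    """
    if original == 0:
        # Relative error is undefined for a zero original value:
        # report 0% if the values agree and infinity otherwise.
        return 0.0 if reproduced == 0 else float("inf")
    return abs(reproduced - original) / abs(original) * 100


def is_reproducible(original: float, reproduced: float, threshold: float = 10.0) -> bool:
    """True unless the two values differ by more than `threshold` percent."""
    return percent_error(original, reproduced) <= threshold


# Hypothetical target values: (originally reported, reproduced)
targets = [(2.31, 2.31), (0.45, 0.47), (12.0, 14.0)]
for orig, rep in targets:
    print(orig, rep, f"{percent_error(orig, rep):.1f}%", is_reproducible(orig, rep))
```

A relative threshold keeps the rule scale-free, so a discrepancy of 2 counts as large for a value of 12 but would be negligible for a value of 1,200.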

RESULTS 1: Initially, target values in only 9/25 articles (36%) were fully reproducible, prompting us to request input from the original authors. After authors clarified analyses or corrected issues with data files, target values in 15/25 articles (60%, CI [39, 78]) were reproducible 4/13

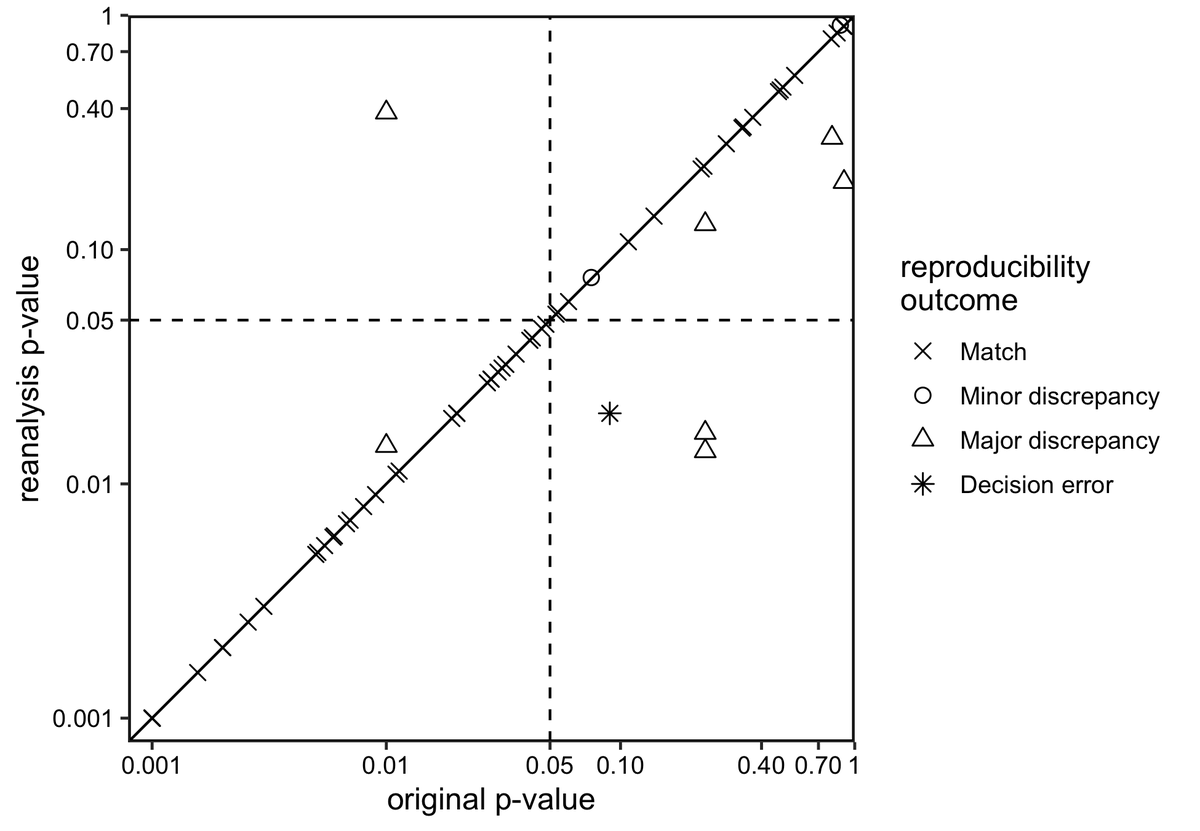
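The thread does not say how the interval around 15/25 was computed. As one possibility, the sketch below computes an exact (Clopper-Pearson) binomial interval using only the standard library, assuming a 95% level, by bisecting on the binomial tail probabilities. It is a stand-in for whatever method the paper used, so its bounds may not match the reported ones exactly; the helper names are hypothetical.

```python
from math import comb


def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def _bisect(f, lo: float = 0.0, hi: float = 1.0, iters: int = 100) -> float:
    """Root of a function that changes sign once on [lo, hi], found by bisection."""
    lo_positive = f(lo) > 0
    for _ in range(iters):
        mid = (lo + hi) / 2
        if (f(mid) > 0) == lo_positive:
            lo = mid  # same sign as the left end: root lies to the right of mid
        else:
            hi = mid  # sign changed: root lies to the left of mid
    return (lo + hi) / 2


def clopper_pearson(k: int, n: int, alpha: float = 0.05) -> tuple[float, float]:
    """Exact two-sided (1 - alpha) confidence interval for the proportion k/n."""
    # Lower bound: the p at which P(X >= k) equals alpha / 2.
    lower = 0.0 if k == 0 else _bisect(lambda p: (1 - binom_cdf(k - 1, n, p)) - alpha / 2)
    # Upper bound: the p at which P(X <= k) equals alpha / 2.
    upper = 1.0 if k == n else _bisect(lambda p: binom_cdf(k, n, p) - alpha / 2)
    return lower, upper


lower, upper = clopper_pearson(15, 25)
print(f"15/25 = {15 / 25:.0%}, 95% CI [{lower:.0%}, {upper:.0%}]")
```

An exact interval is a common choice for small samples such as n = 25; a Wilson score interval would be a reasonable alternative and gives somewhat narrower bounds here.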

RESULTS 2: Overall, 37/789 (5%) values were not reproducible. Amongst p-values, there was 1 'decision error' (see fig). Importantly, the original conclusions did not appear to be affected in any case. In 3 cases, analyses could not be completed due to insufficient information. 5/13
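A 'decision error' presumably means that the reported and recomputed p-values fall on opposite sides of the significance threshold, so they support different conclusions. The minimal sketch below assumes a threshold of alpha = .05, which the thread does not state; the function name is hypothetical. It also checks the arithmetic behind "37/789 (5%)".

```python
ALPHA = 0.05  # assumed significance threshold


def is_decision_error(reported_p: float, recomputed_p: float, alpha: float = ALPHA) -> bool:
    """True if the reported and recomputed p-values disagree about significance."""
    return (reported_p < alpha) != (recomputed_p < alpha)


print(is_decision_error(0.03, 0.07))  # significant as reported, not when recomputed
print(is_decision_error(0.03, 0.04))  # both significant: a numerical discrepancy only

# Share of non-reproducible values reported in the thread: 37 of 789.
print(f"{37 / 789:.1%}")  # prints 4.7%, which rounds to the 5% in the thread
```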

RESULTS 3: Non-reproducibility was primarily caused by unclear reporting of analytic procedures in original papers. Results are consistent with our previous study of analytic reproducibility at Cognition (https://dx.doi.org/10.1098/rsos.180448); see mini-meta-analysis in fig. 6/13

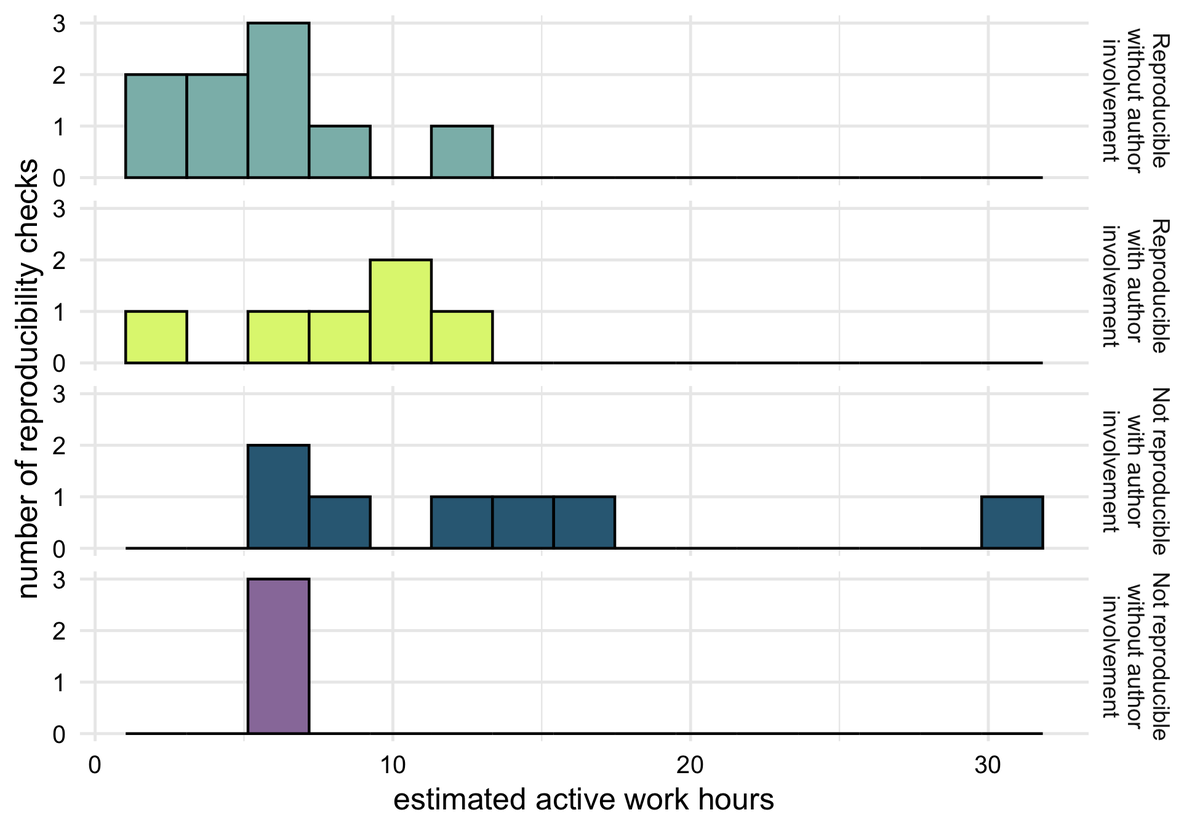

RESULTS 4: Each reproducibility check involved 2+ team members (pilot & co-pilot) and was documented in a detailed R Markdown report. Estimated time to complete a check ranged from 1 to 30 hours of active work (median = 7, IQR = 5; total = 213 hours; see fig) 7/13

CAVEAT: Characteristics of the sample are likely to skew in favour of reproducibility success: articles shared data (otherwise rare: https://doi.org/10.31222/osf.io/9sz2y), data were pre-screened for reusability, the journal had several initiatives to improve rigour & transparency, etc. 8/13

POTENTIAL SOLUTIONS: (1) improve journal policy, e.g., require analysis scripts to be shared and independently verify reproducibility; (2) write reproducible papers, for which lots of tools are available (for guidance see http://doi.org/10.1525/collabra.158 and/or https://crumplab.github.io/vertical/manuscript/manuscript.pdf) 9/13
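One idea behind reproducible papers is that every number in the text is computed from the data by code rather than typed in by hand, so reported values cannot drift out of sync with the analysis. The toy sketch below illustrates this in Python; the data, group names and sentence template are hypothetical, and tools such as R Markdown achieve the same thing by embedding code directly in the manuscript.

```python
from statistics import mean, stdev

# Hypothetical raw data; in a real paper this would be read from the shared data file.
scores = {
    "control": [4.1, 3.8, 4.5, 4.0],
    "treatment": [4.9, 5.2, 4.7, 5.0],
}


def describe(group: str) -> str:
    """Format a group's mean and SD for the manuscript text."""
    values = scores[group]
    return f"{group}: M = {mean(values):.2f}, SD = {stdev(values):.2f}"


# The sentence is generated from the data, so rerunning the script after a
# data correction updates every reported number automatically.
sentence = (
    f"Mean scores were higher in the treatment group ({describe('treatment')}) "
    f"than in the control group ({describe('control')})."
)
print(sentence)
```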

CONCLUSIONS 1: The findings illustrate that the analytic reproducibility of published psychology articles is not guaranteed. Data availability alone is insufficient; further action is required to ensure the analytic reproducibility of scientific manuscripts. 10/13

CONCLUSIONS 2: Errors & imperfections are inevitable: researchers are only human and humans make mistakes. But most issues identified in this study were avoidable. Let's use systems/tools that anticipate & mitigate error. And let's check each other's work. 11/13

NOTE 1: This thread was a summary; the paper has more details & context. Pre-reg/data/code etc. are available on OSF (https://osf.io/n3dej/) and there's a Code Ocean container (https://doi.org/10.24433/CO.1796004.v1), so everything should be reproducible... 🤞 Feedback very welcome 12/13

NOTE 2: For recent related work on analytic and computational reproducibility, check out @victoriastodden et al. https://www.pnas.org/content/115/11/2584, Pepijn Obels et al. https://doi.org/10.1177%2F2515245920918872, @NaudetFlorian et al. http://dx.doi.org/10.1136/bmj.k400 & @rianaminocher et al. https://doi.org/10.31234/osf.io/4nzc7 13/13
