XGBoost is one of the ultimate weapons for Data Scientists

- With tabular data, it will often outperform everything else

Thread covering everything a DS should know about XGBoost

Paper: https://arxiv.org/abs/1603.02754

Blog: https://www.analyticsvidhya.com/blog/2016/03/complete-guide-parameter-tuning-xgboost-with-codes-python/ (@AnalyticsVidhya)

What is being optimized?

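- The prediction is the sum of the outputs of K regression trees

- The loss function needs to be convex and differentiable (twice differentiable in practice, because the second derivative is used)

- A regularization penalty (per tree) penalizes the number of leaves, plus L2 regularization on the value of each leaf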
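
Written out in the notation of the paper linked above, the objective looks roughly like this (T is the number of leaves in a tree, w its vector of leaf values, and γ and λ are the two penalty strengths):

```latex
\hat{y}_i = \sum_{k=1}^{K} f_k(x_i), \qquad
\mathcal{L} = \sum_{i} l(\hat{y}_i, y_i) + \sum_{k=1}^{K} \Omega(f_k), \qquad
\Omega(f) = \gamma T + \tfrac{1}{2} \lambda \lVert w \rVert^2
```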

How is it being optimized?

- Instead of optimizing the exact loss, each round optimizes a 2nd order (Taylor) approximation of the loss around the current predictions

- Because the loss function is convex and differentiable, this gives a closed form solution for each leaf value

- The same closed form gives a score for each split candidate
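
A minimal sketch of those closed forms, assuming G and H are the sums of the per-row gradients and hessians that land in a leaf. The function names are made up for illustration; the formulas follow the paper.

```python
def leaf_weight(G, H, lam=1.0):
    """Optimal leaf value under the 2nd order approximation: w* = -G / (H + lambda)."""
    return -G / (H + lam)


def leaf_score(G, H, lam=1.0):
    """Quality of a leaf: G^2 / (H + lambda). Larger means more loss removed."""
    return G * G / (H + lam)


def split_gain(G_left, H_left, G_right, H_right, lam=1.0, gamma=0.0):
    """Loss reduction from splitting one leaf into two, minus the per-leaf penalty gamma."""
    parent = leaf_score(G_left + G_right, H_left + H_right, lam)
    children = leaf_score(G_left, H_left, lam) + leaf_score(G_right, H_right, lam)
    return 0.5 * (children - parent) - gamma


# Squared error 0.5 * (pred - y)^2 with pred = 0 and y = [1, 1, 3, 3]:
# gradients are pred - y = [-1, -1, -3, -3], hessians are all 1.
print(leaf_weight(-8, 4, lam=0))       # single leaf predicts the mean, 2.0
print(split_gain(-2, 2, -6, 2, lam=0))  # splitting {1, 1} from {3, 3} gains 2.0
```

With λ = 0 and squared error the leaf value is just the mean residual; raising λ shrinks it toward zero.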

How else does XGBoost prevent overfitting?

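- Shrinkage: "scales newly added weights by a factor η after each step". Similar to the learning rate in SGD, it reduces the influence of each individual tree and leaves space for future trees.

- Feature sampling: the same idea as random forest. Columns can be sampled per tree, per level, or per split (colsample_bytree, colsample_bylevel, colsample_bynode)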
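
To see what shrinkage does, here is a toy sketch where every "tree" is a single leaf fitted to one target under squared error. This is an illustrative simplification, not how XGBoost is implemented, and `boost_constant_trees` is a made-up name.

```python
def boost_constant_trees(y, eta, n_rounds, lam=0.0):
    """Boost single-leaf trees toward one target y, scaling each step by eta."""
    pred = 0.0
    history = []
    for _ in range(n_rounds):
        # Squared error 0.5 * (pred - y)^2: gradient = pred - y, hessian = 1
        g, h = pred - y, 1.0
        step = -g / (h + lam)   # closed form leaf value
        pred += eta * step      # shrinkage: only take a fraction of the step
        history.append(pred)
    return history


print(boost_constant_trees(y=10.0, eta=1.0, n_rounds=1))  # one tree jumps straight to the target
print(boost_constant_trees(y=10.0, eta=0.3, n_rounds=3))  # each tree closes 30% of the remaining gap
```

With η = 0.3 no single tree can fit the target on its own, which is the "leaves space for future trees" part.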

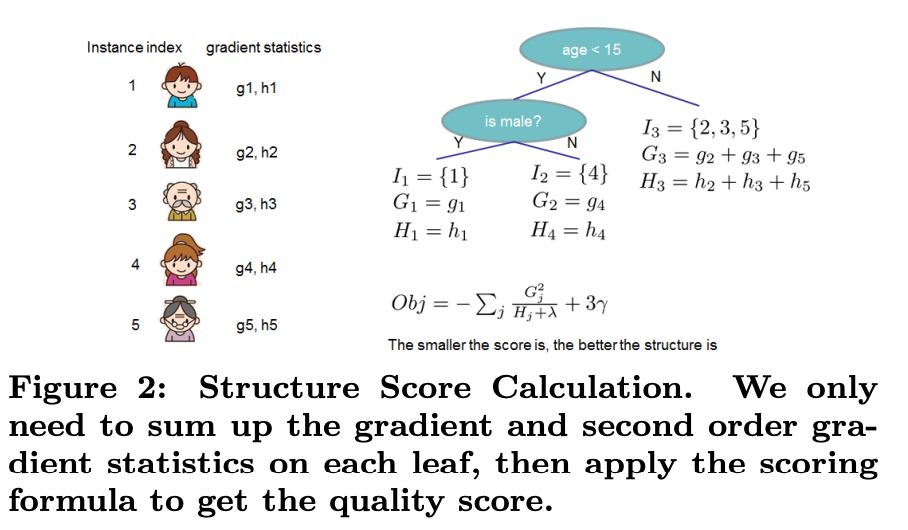

Greedy Split Finding

1. For each value of a feature, compute the loss reduction (score) we would get by splitting on it

2. This gives the best split, and its loss reduction, for that feature

3. Repeat for the rest of the features; the highest-scoring split across all features is the one used
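
The three steps above can be sketched in plain Python. This is a simplified exact greedy scan, assuming small in-memory lists; placing the threshold at the midpoint between neighbouring values is a choice made for this sketch, and `best_split` is a hypothetical name.

```python
def best_split(X, g, h, lam=1.0, gamma=0.0):
    """Scan every feature and every split position; return (gain, feature, threshold) or None.

    X is a list of rows, g and h are the per-row gradients and hessians.
    """
    def score(G, H):
        return G * G / (H + lam)

    G_total, H_total = sum(g), sum(h)
    best = None
    for j in range(len(X[0])):
        # Sort rows by this feature so left-side sums can be accumulated in one pass
        order = sorted(range(len(X)), key=lambda i: X[i][j])
        G_left = H_left = 0.0
        for pos in range(len(order) - 1):
            i, nxt = order[pos], order[pos + 1]
            G_left += g[i]
            H_left += h[i]
            if X[i][j] == X[nxt][j]:
                continue  # cannot split between two equal values
            G_right, H_right = G_total - G_left, H_total - H_left
            gain = 0.5 * (score(G_left, H_left) + score(G_right, H_right)
                          - score(G_total, H_total)) - gamma
            if best is None or gain > best[0]:
                best = (gain, j, (X[i][j] + X[nxt][j]) / 2)
    return best


# Feature 0 separates the targets [1, 1, 3, 3] cleanly, feature 1 does not.
X = [[1.0, 5.0], [2.0, 1.0], [3.0, 4.0], [4.0, 2.0]]
g = [-1.0, -1.0, -3.0, -3.0]  # squared error gradients with pred = 0
h = [1.0, 1.0, 1.0, 1.0]
print(best_split(X, g, h, lam=0.0))
```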

How does XGBoost Parallelize?

- Parallelism happens within a single tree (split finding is spread across threads), not by building more than one tree in parallel

- For large or distributed data it can use an approximate algorithm that only considers candidate splits at (roughly) percentiles of each feature

- This also allows training on data that won't fit into memory

https://stackoverflow.com/questions/34151051/how-does-xgboost-do-parallel-computation
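
A rough sketch of the percentile idea: pick candidate thresholds at evenly spaced hessian-weighted quantiles of one feature. The paper uses a weighted quantile sketch data structure for this; the version below is a simplified in-memory stand-in, and `candidate_splits` is a hypothetical name.

```python
def candidate_splits(values, hessians, n_candidates):
    """Return up to n_candidates thresholds at evenly spaced hessian-weighted quantiles."""
    pairs = sorted(zip(values, hessians))
    total = sum(hessians)
    candidates = []
    cumulative = 0.0
    next_rank = 1
    for value, weight in pairs:
        cumulative += weight
        # Emit a candidate each time the running weight crosses the next quantile boundary
        while (next_rank <= n_candidates
               and cumulative >= next_rank * total / (n_candidates + 1)):
            if not candidates or candidates[-1] != value:
                candidates.append(value)
            next_rank += 1
    return candidates


values = list(range(1, 9))
print(candidate_splits(values, [1.0] * 8, 3))           # equal weights: plain quartiles
print(candidate_splits(values, [1.0] * 7 + [9.0], 3))   # one heavy row pulls candidates toward it
```

Only these few candidates are then scored, instead of every distinct value.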

How does XGBoost "handle" missing values?

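- For each split, only the non-missing values are scanned, and XGBoost learns which default direction (left or right) for the missing values reduces the loss the most

- That default direction is then used for every missing value that reaches the split

- Much faster than encoding missing values, since the work scales with the number of non-missing entries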

What should I be monitoring when training?

- Similar to neural networks, it's good to monitor your train/val loss and evaluation metrics over time

- This can help inform how you tune your learning rate, regularization, and early stopping parameters

- And it is always a good sanity check
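
One way to collect those curves is the `evals` / `evals_result` arguments of `xgboost.train`. The sketch below assumes a lower-is-better metric such as logloss; `fit_with_history` and `summarize_history` are hypothetical helper names, and the data variables are placeholders.

```python
def fit_with_history(X_train, y_train, X_val, y_val, params, num_boost_round=200):
    """Train a booster and return it together with the per-round metric history."""
    import xgboost as xgb  # imported lazily so summarize_history works without xgboost

    dtrain = xgb.DMatrix(X_train, label=y_train)
    dval = xgb.DMatrix(X_val, label=y_val)
    history = {}
    booster = xgb.train(
        params,
        dtrain,
        num_boost_round=num_boost_round,
        evals=[(dtrain, "train"), (dval, "val")],
        evals_result=history,  # filled as {"train": {metric: [...]}, "val": {metric: [...]}}
        verbose_eval=False,
    )
    return booster, history


def summarize_history(history, metric):
    """Find the best validation round and the train/val gap (lower metric is better)."""
    train, val = history["train"][metric], history["val"][metric]
    best_round = min(range(len(val)), key=val.__getitem__)
    return {
        "best_round": best_round,
        "best_val": val[best_round],
        "gap_at_best": val[best_round] - train[best_round],
        "final_gap": val[-1] - train[-1],
    }


# Hand-written curves: train keeps improving while val turns around after round 2.
example = {
    "train": {"logloss": [0.60, 0.40, 0.30, 0.20]},
    "val": {"logloss": [0.65, 0.50, 0.48, 0.55]},
}
print(summarize_history(example, "logloss"))
```

A final gap that is much larger than the gap at the best round is the usual sign that the later trees are only fitting noise.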

How does early stopping work with XGBoost?

- When fitting, you can specify early_stopping_rounds

- This is the number of boosting rounds (epochs) without improvement on the validation metric after which training stops

https://machinelearningmastery.com/avoid-overfitting-by-early-stopping-with-xgboost-in-python/ (@TeachTheMachine)
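
The stopping rule itself is easy to simulate on a list of validation losses. This is a sketch of the rule as described above for a lower-is-better metric, not XGBoost's own code, and `early_stopping_round` is a made-up name.

```python
def early_stopping_round(val_losses, early_stopping_rounds):
    """Return (round where training stops, best round seen so far)."""
    best_round, best_loss = 0, float("inf")
    for i, loss in enumerate(val_losses):
        if loss < best_loss:
            best_round, best_loss = i, loss
        elif i - best_round >= early_stopping_rounds:
            return i, best_round  # no improvement for `early_stopping_rounds` rounds
    return len(val_losses) - 1, best_round  # ran to the end without triggering


losses = [0.90, 0.70, 0.60, 0.61, 0.62, 0.63, 0.50]
print(early_stopping_round(losses, 3))   # stops at round 5, never sees the later 0.50
print(early_stopping_round(losses, 10))  # patient enough to reach round 6
```

In real training the same behaviour comes from passing `early_stopping_rounds` together with an `evals` list to `xgboost.train`. The example also shows the trade-off: a small patience can stop before a late improvement.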

What do I need to be careful about when training XGBoost?

- Overfitting is the biggest concern

- XGBoost is much more likely to overfit than RandomForest (when trained with enough trees)

- So be sure to investigate model biases, and monitor the degree to which it is overfitting

Can XGBoost handle custom loss functions? If so how?

- Yes it can. xgboost.train takes an obj param: a custom objective function that returns the gradient and the hessian computed from the predictions and labels

https://github.com/dmlc/xgboost/blob/master/demo/guide-python/custom_objective.py (@XGBoostProject)

How does XGBoost have built in cv? Why is this useful?

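- xgboost.cv runs k-fold cross-validation and reports the train and test metric at every boosting round. Note that this is not the out-of-bag (OOB) error used by random forests: "OOB is the mean prediction error on each training sample xᵢ, using only the trees that did not have xᵢ in their bootstrap sample." - @Wikipedia

- Useful for tuning the number of trees - @AnalyticsVidhya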
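
A hedged sketch of using `xgboost.cv` to pick the number of trees. It assumes a lower-is-better metric and `as_pandas=False`, so the result is a dict of lists keyed like `test-<metric>-mean`. Both function names are hypothetical.

```python
def cv_best_num_trees(X, y, params, max_rounds=500, nfold=5, patience=20):
    """Run k-fold CV over boosting rounds and return (number of trees, mean test metric)."""
    import xgboost as xgb  # imported lazily so the helper below works without xgboost

    dtrain = xgb.DMatrix(X, label=y)
    results = xgb.cv(
        params,
        dtrain,
        num_boost_round=max_rounds,
        nfold=nfold,
        early_stopping_rounds=patience,
        as_pandas=False,
        seed=0,
    )
    return best_round_from_cv(results)


def best_round_from_cv(results):
    """Pick the round with the lowest mean test metric from a cv result dict."""
    key = next(k for k in results if k.startswith("test-") and k.endswith("-mean"))
    scores = list(results[key])
    best = min(range(len(scores)), key=scores.__getitem__)
    return best + 1, scores[best]  # rounds are 0-indexed, so the tree count is best + 1


example = {
    "train-logloss-mean": [0.50, 0.30, 0.20],
    "train-logloss-std": [0.01, 0.01, 0.01],
    "test-logloss-mean": [0.60, 0.45, 0.50],
    "test-logloss-std": [0.02, 0.02, 0.03],
}
print(best_round_from_cv(example))
```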

What if the loss function doesn't approximate well to the 2nd order?

- "Higher order information is indeed not completely used, but it is not necessary, because we want a good approximation in the neighbourhood of our starting point."

https://stats.stackexchange.com/questions/202858/xgboost-loss-function-approximation-with-taylor-expansion (@StackStats)
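
A quick numeric check of the "neighbourhood" point, using binary log loss as the example loss (my choice for illustration): the 2nd order approximation is very close for a small step in the margin and poor for a large one.

```python
import math


def log_loss(margin, y):
    """Binary log loss as a function of the raw margin (y is 0 or 1)."""
    p = 1.0 / (1.0 + math.exp(-margin))
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def second_order_approx(margin, step, y):
    """Taylor expansion of the loss around `margin`, evaluated at margin + step."""
    p = 1.0 / (1.0 + math.exp(-margin))
    g = p - y          # first derivative
    h = p * (1 - p)    # second derivative
    return log_loss(margin, y) + g * step + 0.5 * h * step ** 2


def approx_error(margin, step, y):
    return abs(log_loss(margin + step, y) - second_order_approx(margin, step, y))


small = approx_error(0.0, 0.1, 1)
large = approx_error(0.0, 3.0, 1)
print(small, large)
```

Shrinkage keeps each tree's step small, which is part of why the approximation tends to hold up in practice.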

What if your data is very imbalanced?

- To maximize AUC, adjust scale_pos_weight

- If you need to predict the right probabilities, though, leave the dataset unbalanced and set max_delta_step to a finite value to help convergence

https://xgboost.readthedocs.io/en/latest/tutorials/param_tuning.html (@XGBoostProject)
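
A small sketch of how those two options translate into a params dict. The ratio negatives / positives is the typical starting value suggested in the tuning docs linked above; `imbalance_params` and its flag are names invented for this example.

```python
def imbalance_params(labels, care_about_probabilities=False):
    """Build XGBoost params for an imbalanced binary problem (labels are 0 or 1)."""
    n_pos = sum(1 for y in labels if y == 1)
    n_neg = len(labels) - n_pos
    if n_pos == 0:
        raise ValueError("need at least one positive example")

    params = {"objective": "binary:logistic"}
    if care_about_probabilities:
        # Keep the class balance as-is; a finite max_delta_step helps convergence
        params["max_delta_step"] = 1
    else:
        # Reweight positives so both classes contribute similarly, and rank by AUC
        params["scale_pos_weight"] = n_neg / n_pos
        params["eval_metric"] = "auc"
    return params


labels = [1] * 10 + [0] * 90
print(imbalance_params(labels))
print(imbalance_params(labels, care_about_probabilities=True))
```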

Can XGBoost be used for survival analysis?

- Yes

- Censoring must be handled properly, otherwise it will bias your model

- To understand how it does this in the backend, you can think of it as a transformation: instead of predicting the death date, predict whether the subject is still alive at a given time
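
Censoring mostly shows up in how the labels are encoded. The sketch below covers right censoring only, under the label conventions of XGBoost's two survival objectives as I understand them: interval bounds for `survival:aft` and negative times for `survival:cox`. Both function names are hypothetical.

```python
import math


def aft_label_bounds(times, observed):
    """Lower/upper label bounds for survival:aft.

    Observed event: lower == upper == time.
    Right-censored: the true time is somewhere in [time, +inf).
    """
    lower = list(times)
    upper = [t if event else math.inf for t, event in zip(times, observed)]
    return lower, upper


def cox_labels(times, observed):
    """Labels for survival:cox, where a negative value marks a right-censored time."""
    return [t if event else -t for t, event in zip(times, observed)]


times = [5.0, 8.0]
observed = [True, False]  # the second subject was still alive at time 8
print(aft_label_bounds(times, observed))
print(cox_labels(times, observed))
```

For the AFT objective the bounds would then be attached to the DMatrix with `set_float_info("label_lower_bound", ...)` and `set_float_info("label_upper_bound", ...)`.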

Choosing the loss function

- @XGBoostProject did a great job providing a ton of built-in objectives to choose from for a host of use cases

- various survival models

- multi-class and multi-label

- many regression types too (Poisson, gamma, ...)

How to tune XGB?

- This guide recommends starting with a higher learning rate, tuning the number of trees, then the tree parameters and regularization, and finalizing with a lower learning rate

- It's also good to watch the curves, and I'd think you'd want to tune the number of trees again at the end

https://www.analyticsvidhya.com/blog/2016/03/complete-guide-parameter-tuning-xgboost-with-codes-python/ (@AnalyticsVidhya)
