That's right--it's threading time!

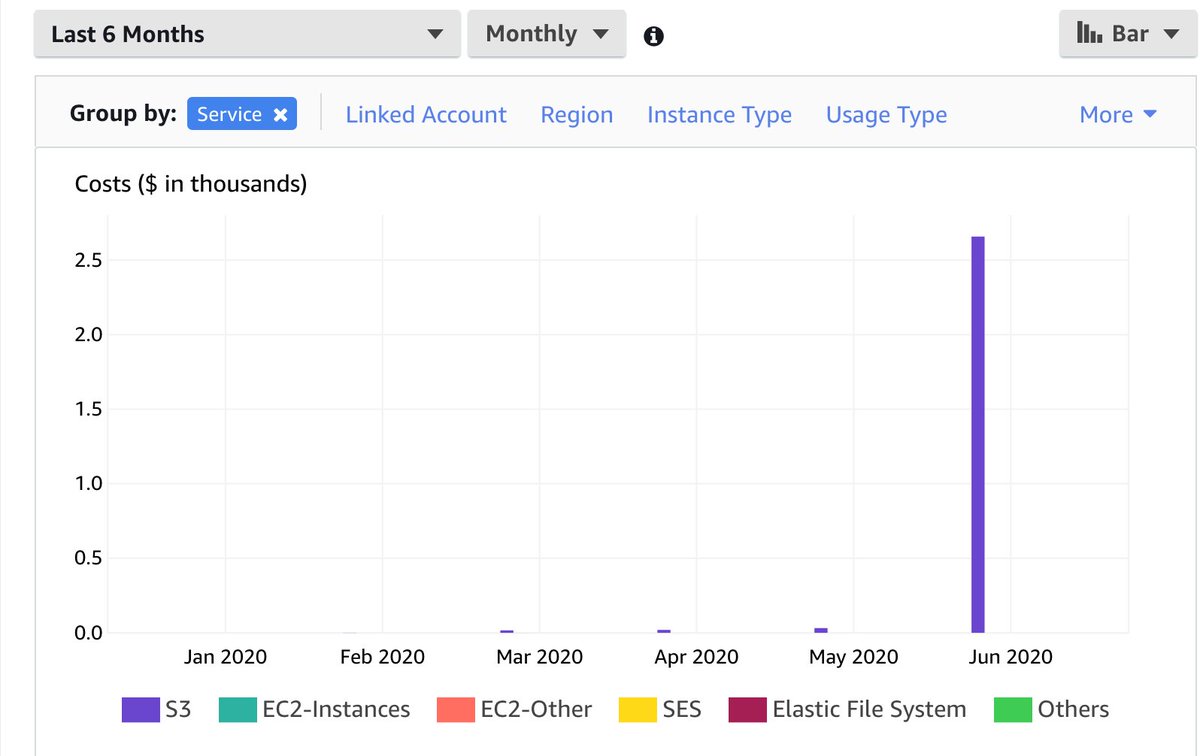

@chrisshort's surprise @awscloud bill of $2700 looks S3 driven... https://twitter.com/ChrisShort/status/1279406322837082114

Before we dive in, I want to call out that this is the inherent problem @awscloud has: people with personal accounts vs. corporate accounts.

Personal vs. corporate money is the difference between "HOLY POOP $80 FOR A BURGER" vs. "Can I get a receipt?"

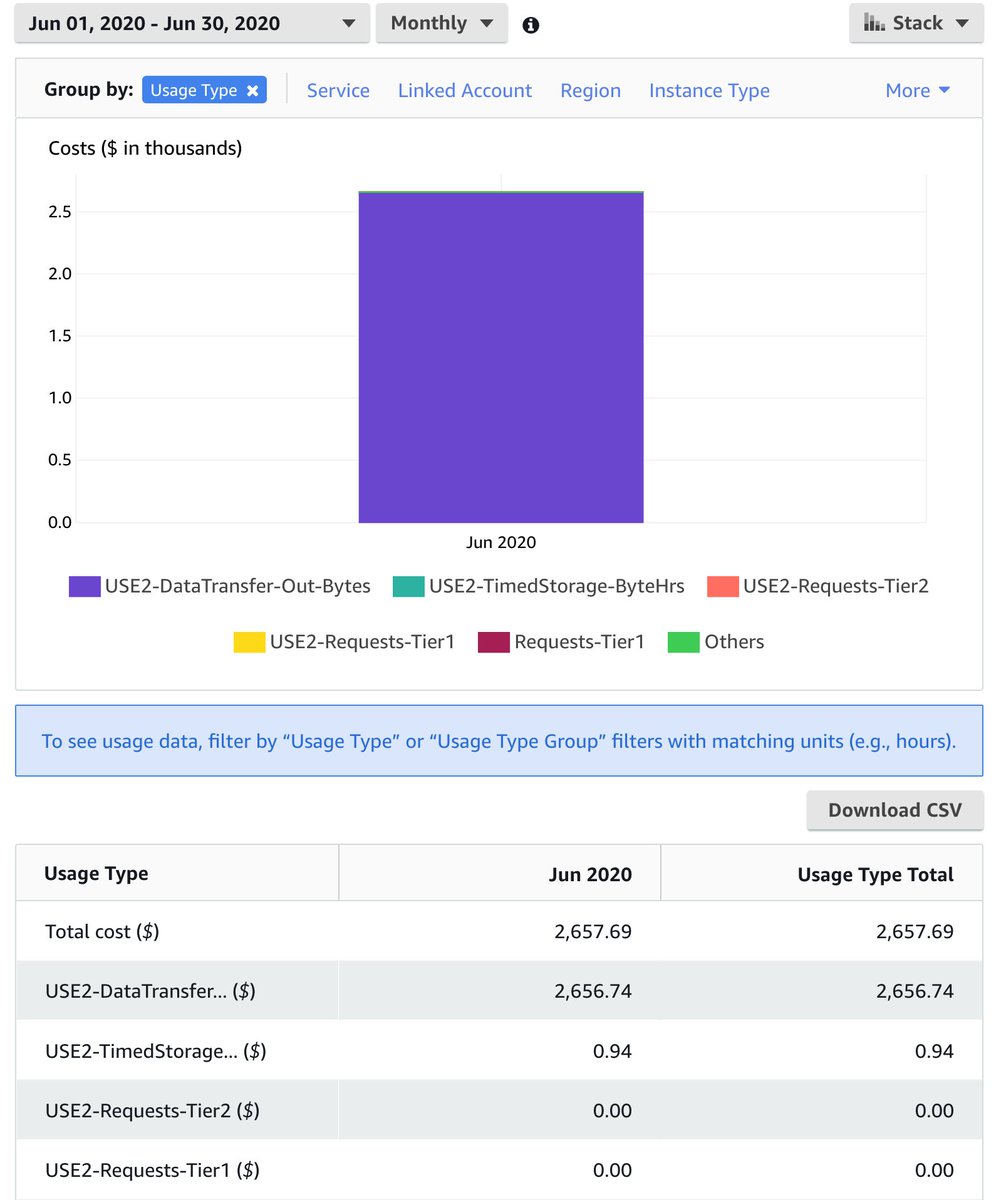

Now, exploding it into the per-service view, we see that it's almost entirely due to us-east-2 data transfer out. My joke is that "data wants to get the hell out of Ohio" but hoo doggy does it ever in this case.

There's a particular bucket in us-east-2 that's set to host static websites. This is, contrary to the screaming alarms in the console, a valid use case for S3.

Sure enough, the bill shows 30TB of data transfer out.

Now seems an opportune time to mention that for all of their faults, this exact pattern on @oraclecloud would have caused Chris a $173 bill.
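
For anyone who wants to sanity-check those two numbers, here's a rough back-of-the-envelope sketch. The per-GB rates and tier sizes below are my assumptions about 2020-era list prices (they are not taken from Chris's bill), it ignores AWS's small free allowance, and it treats 1 TB as 1024 GB. Check the current pricing pages before trusting any of it.

```python
# ASSUMED list prices in USD per GB (circa 2020), not figures from the actual bill.
# Each tier is (tier size in GB, price per GB). AWS tiers beyond 50 TB are omitted.
AWS_TIERS = [(10 * 1024, 0.09), (40 * 1024, 0.085)]
ORACLE_TIERS = [(10 * 1024, 0.0), (float("inf"), 0.0085)]


def egress_cost(gb, tiers):
    """Walk the tiers in order, billing each slice of traffic at that tier's rate."""
    cost = 0.0
    remaining = gb
    for tier_size, rate in tiers:
        billed = min(remaining, tier_size)
        cost += billed * rate
        remaining -= billed
        if remaining <= 0:
            break
    return cost


transfer_gb = 30 * 1024  # the 30TB of data transfer out shown on the bill
aws_bill = egress_cost(transfer_gb, AWS_TIERS)
oracle_bill = egress_cost(transfer_gb, ORACLE_TIERS)

print(f"AWS:    ${aws_bill:,.2f}")
print(f"Oracle: ${oracle_bill:,.2f}")
```

Under those assumptions the AWS figure comes out a bit under $2700 and the Oracle figure lands within a couple of dollars of $173, which is close enough to show where the gap comes from: the per-GB rate and the size of the free allowance.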

Now, what caused this?

Nobody knows! Logging wasn't enabled on the bucket, so we can't see what objects or requestor IPs were involved. The entire response to @chrisshort here is a pile of Monday morning quarterbacking.

Here's what really cheeses me off. @chrisshort isn't some random fool who doesn't get how computers work.

He's a @CloudNativeFdn Ambassador, works at IBM Hat, is deep in the Ansible community, etc.

A "coulda woulda shoulda" response doesn't cut it here.

"Surprise jackhole, your mortgage isn't your most expensive bill this month, guess you shoulda enabled billing alarms!" is crappy, broken, and WOULD NOT HAVE SOLVED THE PROBLEM!

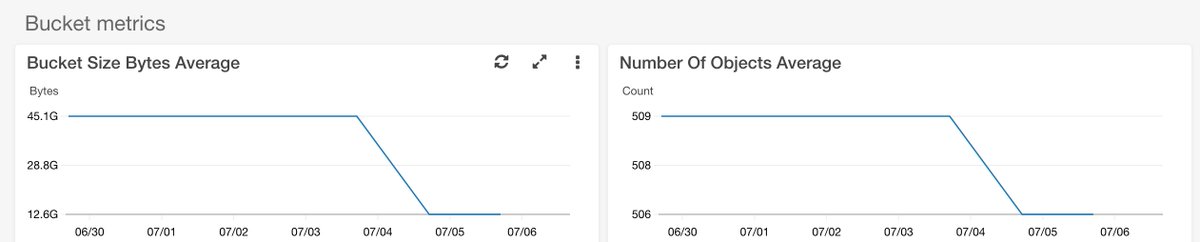

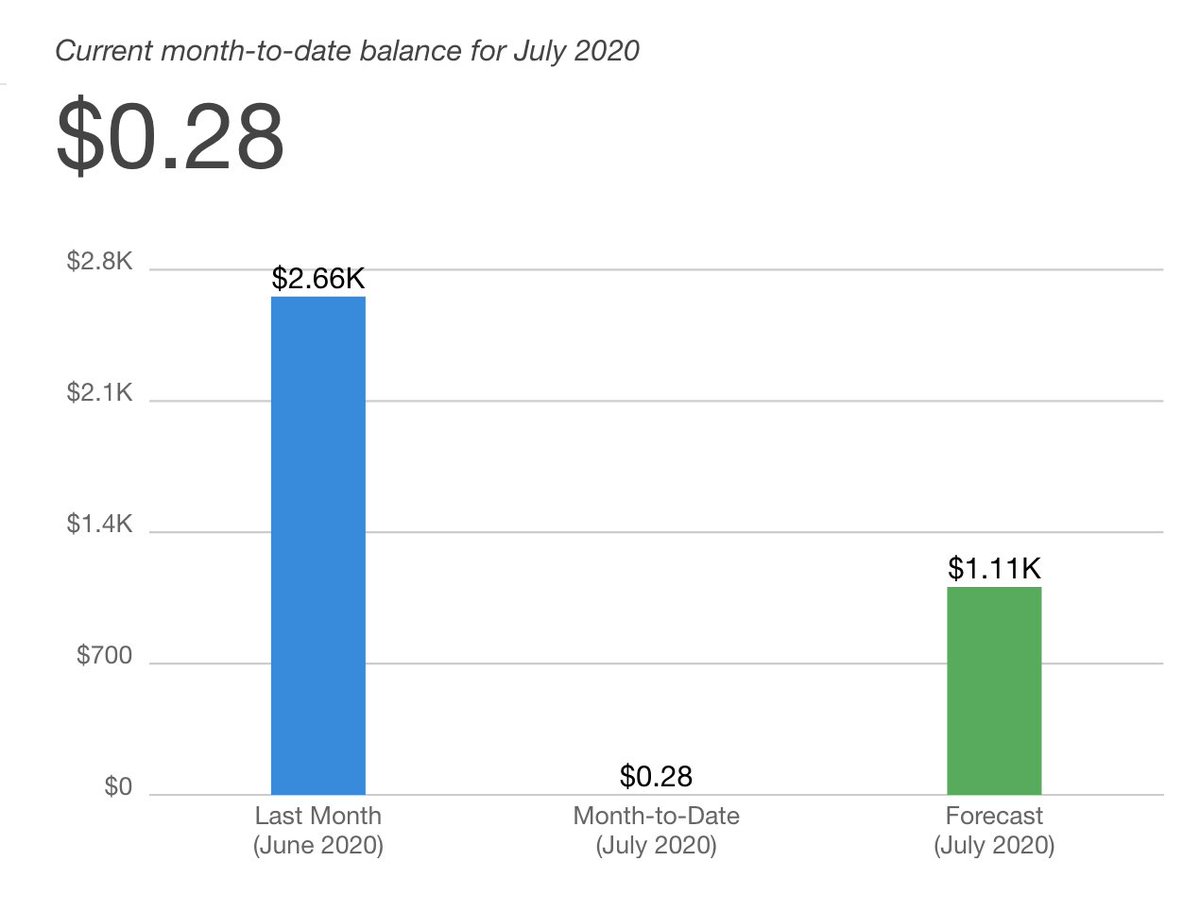

This all took place within a 24-hour span. @chrisshort would still be staring at a $1K+ bill for this even if budgets had been set!

What the hell kind of @awscloud "customer obsession" is this?

Because Chris didn't forecast someone or something grabbing large objects repeatedly, he's being made to feel ashamed and somehow "dumb" for not being able to magically predict this outcome.

What do you imagine that does for his views of @awscloud moving forward?

Now, he's opened an @AWSSupport ticket. What I *think* is going to happen is that Chris is going to sweat bullets for a week or so, then they'll issue a "one time credit" and make him sit through a lecture on how to avoid this next time, none of which would have actually worked.

I admit to having a bias here. @quinnypiglet really likes @chrisshort's son, so ensuring that the kiddo has enough to eat is important to me.

I've talked about rethinking the @awscloud free tier, and this is why.

"Surprise, here's a 100x bill multiplier over a 24 hour span from a billing system that aspirationally has an 8 hour resolution" is crap! https://www.lastweekinaws.com/blog/its-time-to-rethink-the-aws-free-tier/

There's a very real human cost to bill surprises like this.

Even in a corporate environment there's the fear of getting rage-fired for a bill surprise like this. https://twitter.com/ChrisShort/status/1280287788194320384?s=20

So, @awscloud friends: I'm listening. What should Chris do to prevent a recurrence of this? Everything I can think of comes down to "here's how you can figure out what caused it," none of which prevents the issue in the first place.

I want to be explicitly clear here: @chrisshort didn't do anything wrong!

This wasn't a misconfigured bucket. This wasn't passing data back and forth 50 times. "Using system as intended / designed" and then a surprise bill out of nowhere hit.

I have a few things I can think of, but no client I work with cares a toss about a billing issue this (relatively) small.

This cannot be reasonably exploited to cause a $1 million bill surprise, so it’s not in most companies’ risk assessments.

Miss me with the “you should understand @awscloud’s data transfer pricing better” takes.

Sure!

Enable the `BytesDownloaded` S3 metric for the bucket, then slap some decent deviation monitoring on it that alarms appropriately.

Asking someone to do this for a personal project is a fancy way of telling them to go f*ck themselves. https://twitter.com/somecloudguy/status/1280301729775587330?s=20
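
To give a feel for what "decent deviation monitoring" on `BytesDownloaded` means in practice, here's a minimal sketch of the detection logic alone. It is not CloudWatch's anomaly detection and it makes no AWS calls. It assumes you've already pulled hourly `BytesDownloaded` totals into a plain list, and the `find_spikes` helper, the 24-hour window, and the thresholds are all made up for illustration.

```python
from statistics import mean, stdev


def find_spikes(hourly_bytes, window=24, threshold=3.0):
    """Return indexes of samples far above the trailing-window baseline.

    A sample counts as a spike when it exceeds the mean of the previous
    `window` samples by more than `threshold` "spreads", where the spread is
    the standard deviation with a floor of 10% of the mean (so a very flat
    baseline doesn't make every tiny wobble look like an anomaly).
    """
    spikes = []
    for i in range(window, len(hourly_bytes)):
        baseline = hourly_bytes[i - window:i]
        mu = mean(baseline)
        spread = max(stdev(baseline), 0.1 * mu, 1.0)
        if hourly_bytes[i] > mu + threshold * spread:
            spikes.append(i)
    return spikes


# Hypothetical data: two quiet days of roughly 50 MB/hour, then one hour at
# 1.25 TB, which is about what 30TB spread evenly over 24 hours looks like.
quiet = [50_000_000 + (i % 5) * 1_000_000 for i in range(48)]
samples = quiet + [1_250_000_000_000]

print(find_spikes(samples))  # [48]
```

And that's only the math. You'd still have to turn on the metric, get the data out, run this somewhere on a schedule, and wire up something that actually wakes you, which is exactly the kind of homework that's absurd to assign for a personal project.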

Surprisingly, this is basically a non-issue in enterprise @awscloud accounts. Someone has to spin an awful lot of instances to mine an awful lot of bitcoin to noticeably impact a $12 million monthly bill!

Virtually anything else you can suggest runs into eventual consistency problems. CloudTrail has ~20 minutes of latency, and the billing system has anywhere from 8 to many-times-8 hours of latency.

And this assumes you're ready to swing into action when you see it.
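
Here's a quick sketch of why that lag matters so much. It takes the $2700 and the 24 hours from this thread and assumes, purely for illustration, that the spend accrued evenly across that window and that you react the instant a signal arrives. The `spend_before_alert` helper is hypothetical.

```python
BILL_USD = 2700.0    # total surprise bill, from the thread
SPIKE_HOURS = 24.0   # how long the spike ran, from the thread


def spend_before_alert(latency_hours, bill=BILL_USD, spike_hours=SPIKE_HOURS):
    """Dollars already spent by the time a signal with this much lag shows up.

    ASSUMPTION: spend is spread evenly over the spike, and you act immediately.
    """
    hourly = bill / spike_hours
    return hourly * min(latency_hours, spike_hours)


for label, hours in [
    ("~20 minutes of lag", 20 / 60),
    ("8 hours of lag", 8),
    ("2 x 8 hours of lag", 16),
]:
    print(f"{label}: ${spend_before_alert(hours):,.2f} gone before you can react")
```

Even with a perfectly tuned alarm, a signal that shows up 8 hours late means roughly a third of the damage is done before anyone can do anything, and that's before you add the time it takes a human to notice the alert.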

"Hey @chrisshort! Want more heart attacks? Imma screw with the predictions for July just to really emphasize how Customer Obsessed we are." -- @awscloud Billing Console
