The ImageNet dataset is built from internet images.

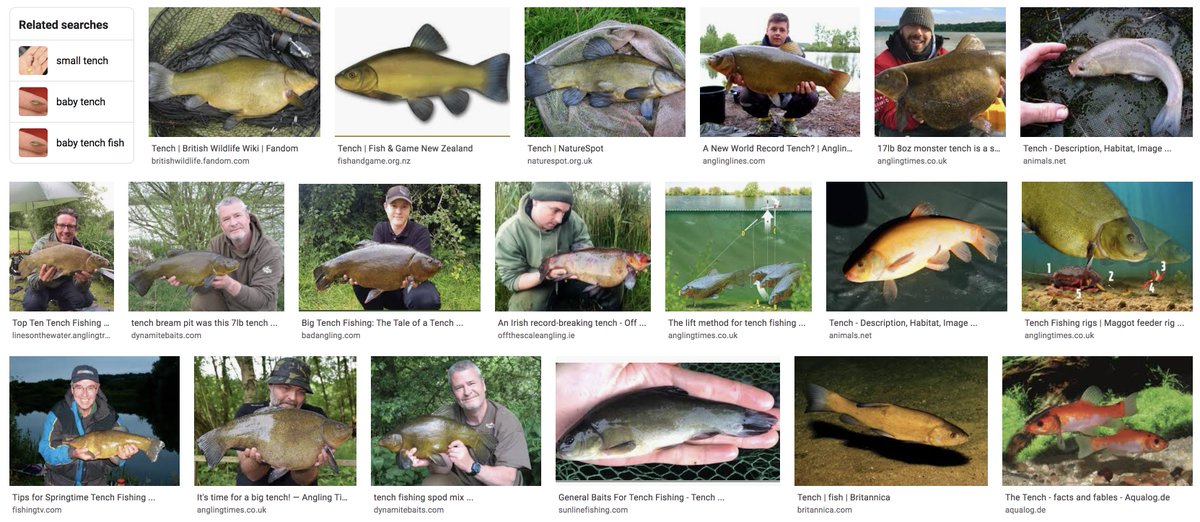

Here's an example image search result for "tench" (a kind of fish). Does having a consistent category of images in which a dominant element is a human mess things up? Yes. Yes, it does.

https://aiweirdness.com/post/622648824384602112/when-data-is-messy

If you train an image recognition algorithm on ImageNet, then ask it which part of the image it found the most useful for recognizing a tench fish, this is what it'll highlight.

It has no idea the human fingers aren't part of the fish.

https://medium.com/bethgelab/neural-networks-seem-to-follow-a-puzzlingly-simple-strategy-to-classify-images-f4229317261f

If you train an image generating algorithm on ImageNet, pretty much every generated image of a tench fish looks like these, including the weird fascination with human fingers.

(generated with BigGAN via http://artbreeder.com)

In the ImageNet category "microphone" there must have been lots of concert pictures where the microphone is only a small portion of the image. When BigGAN generates microphone images, it often leaves out the actual microphone.

The ImageNet category "football helmet" probably has pictures that are not of helmets. When BigGAN does football helmets, some of the humans are very clearly not wearing helmets. One appears to be wearing a baseball helmet? Which would be in line with: https://medium.com/bethgelab/neural-networks-seem-to-follow-a-puzzlingly-simple-strategy-to-classify-images-f4229317261f

ImageNet grabbed messy internet data. Some of that mess is alarming. @katecrawford, @trevorpaglen and others have documented ImageNet images that are pornographic and/or likely included without the subject's consent. https://www.excavating.ai/

@Abebab and @vinayprabhu documented problematic images in ImageNet, as well as some HIGHLY terrible categories in the 80 Million Tiny Images dataset, which was automatically harvested from internet usage and included super racist terms. https://www.theregister.com/2020/07/01/mit_dataset_removed/

That brings us back to machine learning's diversity problems (and the bias within the field that perpetuates it).

Here are a few tweets on where that comes from and things we can do about it, including following all the people I list at the end of the thread: https://twitter.com/JanelleCShane/status/1276637149723422720?s=20