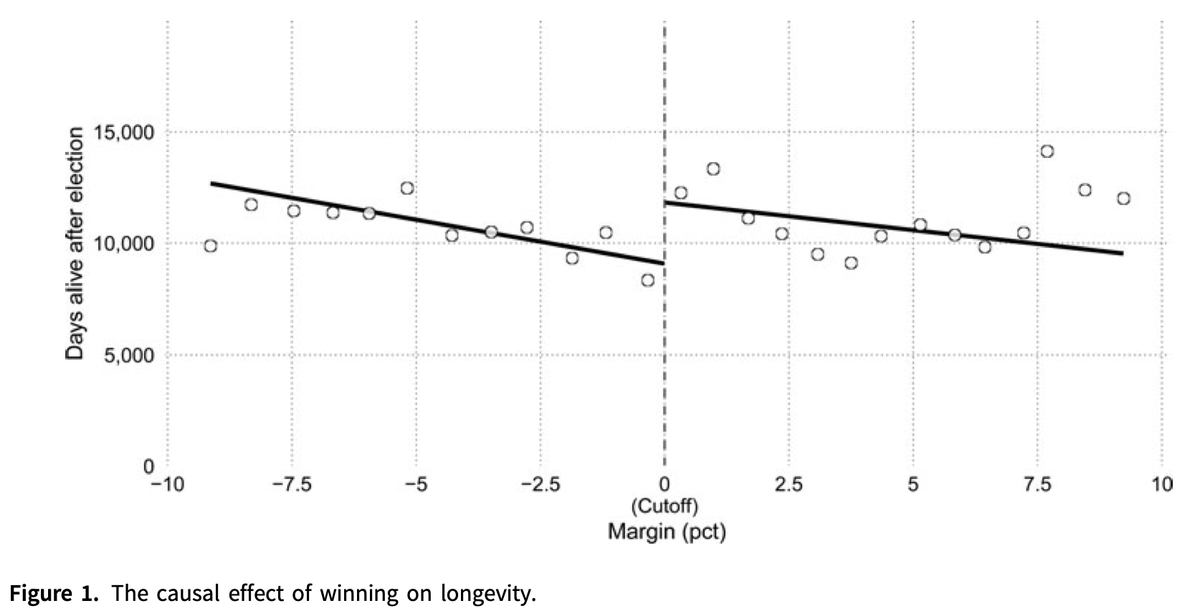

I want to open a discussion about this post by Gelman (@StatModeling) and various aspects of RDD inference. Gelman criticizes a paper that reports an effect of 2-10 years of narrowly winning an election on "days alive after election" (thread 1/n)

#EconTwitter

https://statmodeling.stat.columbia.edu/2020/07/02/no-i-dont-believe-that-claim-based-on-regression-discontinuity-analysis-that/

I think the more important of Gelman's points is that "causal identification + statistical significance = discovery" is the wrong paradigm for scientific inquiry. But here I want to discuss whether one could credibly say an RD effect is there, and whether our methods "fail" us (2/n)

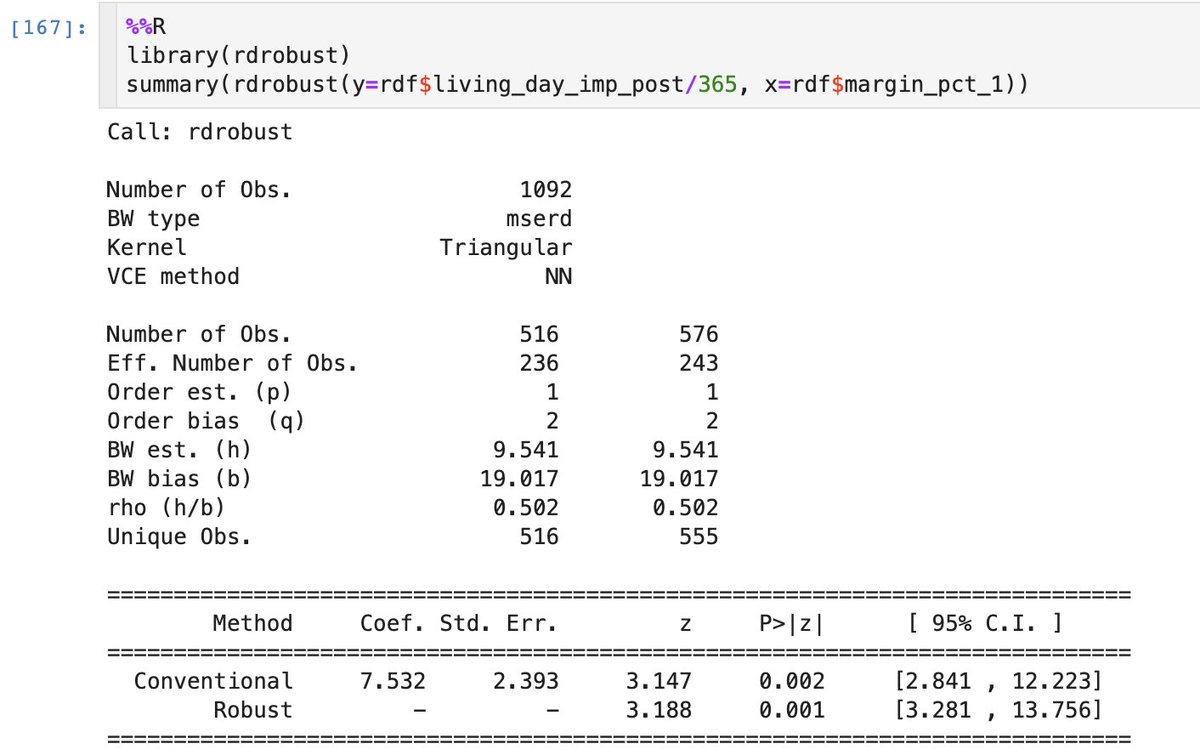

The authors did a standard, state-of-the-art analysis. They used Calonico et al.'s rdrobust, ran McCrary's density test, and plotted the estimated effect against the bandwidth as a robustness check. Lots of received wisdom in econ/finance isn't backed by this level of detail (3/n)

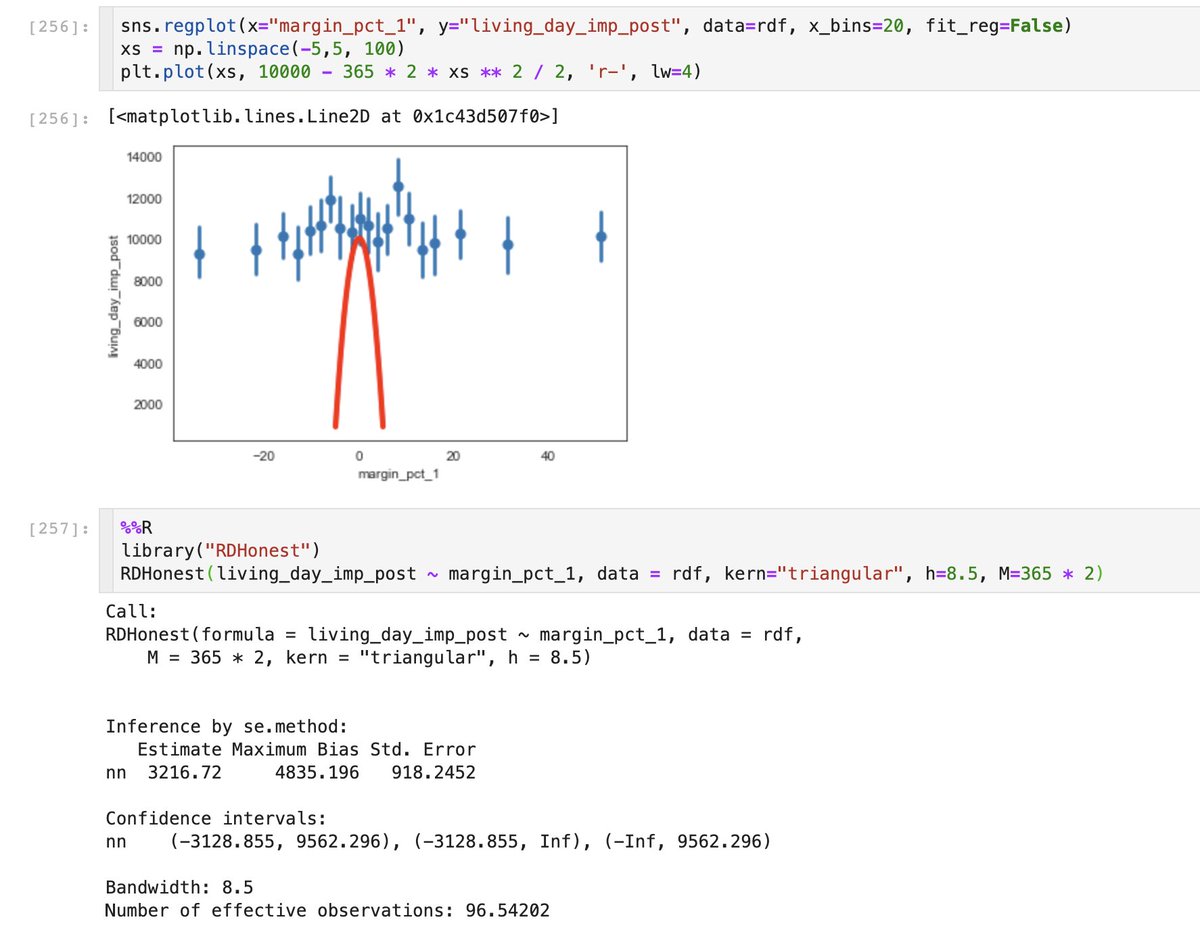

One of Gelman's critiques is that the raw data are very noisy. This is perhaps not entirely fair: what matters for the RD estimate is the uncertainty in E[y|x], not the scatter of individual outcomes. Using rdrobust::rdplot's default bin selection (binselect) with per-bin 95% confidence intervals (the ci option), we see something like this, which is not very visually compelling (4/n)
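
To make the "uncertainty in E[y|x] vs. raw noise" point concrete, here is a minimal plain-Python sketch of a binned scatter with a normal-approximation 95% CI for each bin mean. It is not rdplot's implementation. The number of bins is fixed by hand here, whereas rdplot chooses it through its binselect rule. The simulated data and the function name binned_means are hypothetical.

```python
import math
import random


def binned_means(xs, ys, n_bins, lo, hi):
    """Equal-width bins on [lo, hi); for each non-empty bin return
    (bin center, mean of y, lower 95% CI, upper 95% CI, count).

    The interval is a normal-approximation CI for the bin mean, i.e. it
    describes uncertainty about E[y | x in bin], not the spread of raw y.
    """
    width = (hi - lo) / n_bins
    buckets = [[] for _ in range(n_bins)]
    for x, y in zip(xs, ys):
        if lo <= x < hi:
            k = min(int((x - lo) / width), n_bins - 1)
            buckets[k].append(y)
    out = []
    for k, b in enumerate(buckets):
        n = len(b)
        if n < 2:
            continue  # a sample variance needs at least two points
        m = sum(b) / n
        var = sum((v - m) ** 2 for v in b) / (n - 1)
        half = 1.96 * math.sqrt(var / n)
        out.append((lo + (k + 0.5) * width, m, m - half, m + half, n))
    return out


# Hypothetical data: running variable in [-50, 50), jump of 2 at 0, raw noise SD 10.
rng = random.Random(0)
xs = [rng.uniform(-50, 50) for _ in range(5000)]
ys = [20 + (2 if x >= 0 else 0) + rng.gauss(0, 10) for x in xs]

bins = binned_means(xs, ys, n_bins=20, lo=-50, hi=50)
for center, mean, ci_lo, ci_hi, n in bins:
    print(f"{center:6.1f}  mean={mean:6.2f}  95% CI=({ci_lo:6.2f}, {ci_hi:6.2f})  n={n}")
```

Even though individual outcomes scatter with an SD of 10, each bin's CI is only a couple of units wide. The per-bin intervals, not the raw cloud, are what tell us how well E[y|x] is pinned down.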

But the effect looks much more compelling if we crank up the number of bins. Zoom in on [-10, 10], which is the chosen bandwidth, and it now looks better than some of the RDs you and I have run and convinced ourselves showed an effect (5/n)

So it looks like graph aesthetics affect our ability to do inference visually quite a bit. What about statistical methods? RD inference is difficult because nonparametric regression at the boundary is delicate: tuning parameters, slower-than-root-n convergence rates, bias, etc. (6/n)
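
The bias problem at the boundary can be seen in a tiny noiseless example. Below is a minimal sketch, assuming a sharp RD at cutoff 0 estimated by triangular-kernel local linear regression on each side. This is a hand-rolled illustration, not rdrobust's code, and the designs and function names are hypothetical. A straight line with a jump is recovered exactly. With no jump but curvature on one side, the estimate drifts away from zero, and the drift grows with the bandwidth h.

```python
def local_linear_intercept(xs, ys, h):
    """Triangular-kernel weighted least squares of y on (1, x); returns the fitted value at x = 0."""
    s0 = s1 = s2 = t0 = t1 = 0.0
    for x, y in zip(xs, ys):
        w = max(0.0, 1.0 - abs(x) / h)  # triangular kernel, zero for |x| >= h
        s0 += w
        s1 += w * x
        s2 += w * x * x
        t0 += w * y
        t1 += w * x * y
    return (s2 * t0 - s1 * t1) / (s0 * s2 - s1 * s1)


def rd_estimate(xs, ys, h):
    """Sharp RD at cutoff 0: fitted limit from the right minus fitted limit from the left."""
    right = [(x, y) for x, y in zip(xs, ys) if x >= 0]
    left = [(x, y) for x, y in zip(xs, ys) if x < 0]
    return (local_linear_intercept([x for x, _ in right], [y for _, y in right], h)
            - local_linear_intercept([x for x, _ in left], [y for _, y in left], h))


# Hypothetical noiseless designs on an evenly spaced grid over [-1, 1].
grid = [i / 1000 for i in range(-1000, 1001)]

# 1) Linear on each side with a true jump of 2: local linear recovers it exactly.
linear = [1 + 0.5 * x + (2 if x >= 0 else 0) for x in grid]
print(rd_estimate(grid, linear, h=1.0))  # ≈ 2

# 2) No jump at all, but curvature on the right (y = x^2 for x >= 0):
#    the estimate is pulled below 0, and the bias shrinks roughly like h^2.
curved = [x * x if x >= 0 else 0.0 for x in grid]
for h in (1.0, 0.5):
    print(h, rd_estimate(grid, curved, h))
```

In the curved case, the spurious "effect" is close to -h²/10: about -0.1 at h = 1 and -0.025 at h = 0.5. This is the bias-variance tension that bandwidth selection and bias correction try to manage.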

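Calonico et al.'s rdrobust procedure bias-corrects the local polynomial estimate computed at a plug-in (MSE-optimal) bandwidth and adjusts the standard error for the bias correction. With default settings (triangular kernel) it gives z = 3, and this is robust to the bandwidth choice. With a uniform kernel the effect is only z = 1.7. Since the triangular kernel puts the most weight on observations closest to the cutoff, this suggests the obs near the cutoff really matter (7/n)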
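
A quick way to see why the kernel choice matters: compute how much of the total kernel weight falls on the half of the bandwidth closest to the cutoff. This minimal sketch assumes observations are spread evenly over one side of the cutoff, which real election margins need not be. The function names are hypothetical.

```python
def triangular(u):
    """Triangular kernel on [-1, 1] (unnormalized)."""
    return max(0.0, 1.0 - abs(u))


def uniform(u):
    """Uniform kernel on [-1, 1] (unnormalized)."""
    return 1.0 if abs(u) <= 1.0 else 0.0


def inner_weight_share(kernel, n=10_000):
    """Share of total kernel weight on the half of the bandwidth nearest the cutoff,
    assuming observations spread evenly over one side (u = |x| / h in [0, 1))."""
    grid = [(i + 0.5) / n for i in range(n)]  # midpoints of n equal cells
    total = sum(kernel(u) for u in grid)
    inner = sum(kernel(u) for u in grid if u < 0.5)
    return inner / total


print(f"triangular: {inner_weight_share(triangular):.3f}")  # 0.750
print(f"uniform:    {inner_weight_share(uniform):.3f}")     # 0.500
```

Under this even-spacing assumption, the triangular kernel draws 75% of its weight from the inner half of the window, compared with 50% for the uniform kernel. If the discontinuity is only visible very close to the cutoff, switching kernels can therefore move the estimate noticeably.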

A recent alternative is Armstrong and Kolesar's RDHonest procedure. AK ask the user for a bound B on the second derivative of E[Y(w)|x], compute the largest bias the estimator could incur over all regression functions satisfying that bound, and then do inference that accounts for this worst-case bias (8/n)
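
The "account for the bias" step can be sketched as follows. If an approximately normal estimator has standard error se and bias of at most b in absolute value, then estimate ± c·se has the right coverage when c is the 1 - α quantile of |N(b/se, 1)|. This is a plain-Python sketch of that critical-value idea, not RDHonest's code. The worst-case bias b is taken as given, and the numbers in the example are hypothetical, not the paper's.

```python
import math


def normal_cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def coverage(c, t):
    """P(|Z + t| <= c) for Z ~ N(0, 1): coverage of estimate +/- c*se when bias/se = t."""
    return normal_cdf(c - t) - normal_cdf(-c - t)


def bias_aware_cv(t, alpha=0.05):
    """1 - alpha quantile of |N(t, 1)|, found by bisection (t = worst-case bias / se)."""
    t = abs(t)
    lo, hi = 0.0, t + 10.0
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if coverage(mid, t) < 1 - alpha:
            lo = mid
        else:
            hi = mid
    return hi


def bias_aware_ci(estimate, se, max_bias, alpha=0.05):
    """Interval that keeps 1 - alpha coverage for any bias up to max_bias in absolute value."""
    c = bias_aware_cv(max_bias / se, alpha)
    return estimate - c * se, estimate + c * se


print(bias_aware_cv(0.0))  # ~1.96: no bias, usual normal critical value
print(bias_aware_cv(5.0))  # ~6.64: roughly t + 1.645 once the bias dominates

# Hypothetical numbers (days): estimate 3000, se 800, worst-case bias 400.
print(bias_aware_ci(3000.0, 800.0, 400.0))
```

With zero bias this reduces to the familiar ±1.96·se. As the worst-case bias grows relative to se, the half-width grows roughly like bias + 1.645·se.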

This is an appealing procedure: the second derivative has to be bounded for us to be able to do anything, so the tuning parameter in this procedure is a necessary one. The bias is accounted for in a transparent way. So how does RDHonest do? (9/n)

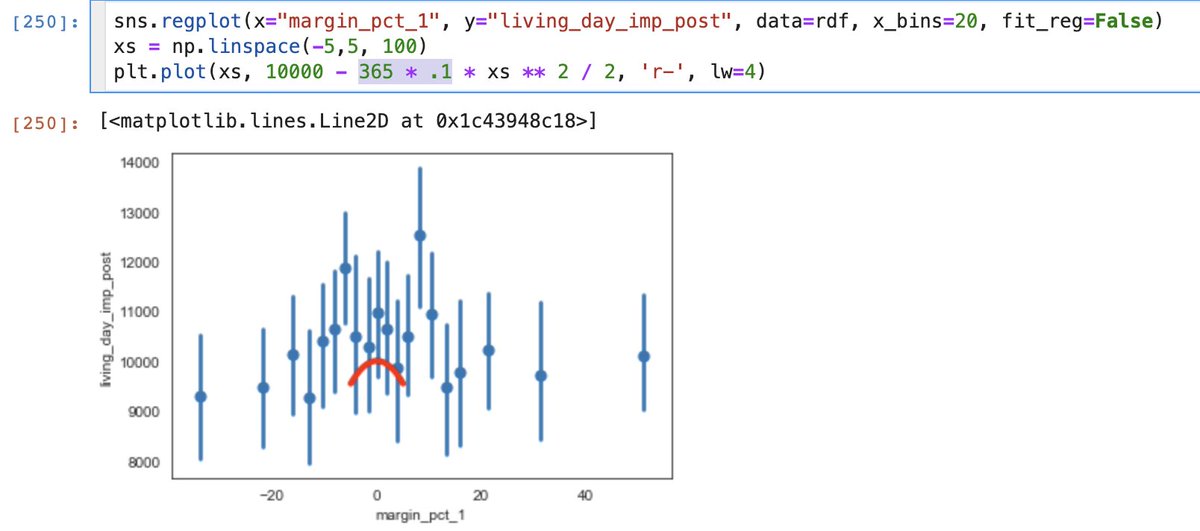

With an aggressive bound of |f''| < B = 0.1 years/margin^2 (the red line is B*x^2/2), the Imbens-Kalyanaraman optimal bandwidth, and a triangular kernel, RDHonest outputs something quite similar to rdrobust: a CI of 1300 to 5000 days. (10/n)
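
Where does a worst-case bias like this come from? Below is a minimal sketch using the simpler Taylor-type class. In that class, the gap between E[Y(w)|x] and its linear approximation at the cutoff stays within ±B·x²/2, which is the red-line envelope. Any function with |f''| ≤ B satisfies this, so the bound is conservative. RDHonest's own computation may use a different smoothness class and optimized weights, so this will not reproduce its numbers. The one-sided design, the bandwidth, and the function names are hypothetical. For the RD effect itself, the worst-case biases of the two one-sided fits add up.

```python
def local_linear_weights(xs, h):
    """Weights w_i such that sum(w_i * y_i) is the triangular-kernel local-linear fit
    at x = 0 from one side of the cutoff."""
    ks = [max(0.0, 1.0 - abs(x) / h) for x in xs]
    s0 = sum(ks)
    s1 = sum(k * x for k, x in zip(ks, xs))
    s2 = sum(k * x * x for k, x in zip(ks, xs))
    det = s0 * s2 - s1 * s1
    return [k * (s2 - s1 * x) / det for k, x in zip(ks, xs)]


def worst_case_bias(ws, xs, B):
    """Largest |sum(w_i * r(x_i))| over all remainders with |r(x)| <= B * x^2 / 2.

    The weights reproduce constants and lines exactly (sum w = 1, sum w*x = 0),
    so only the remainder r after a linear approximation at the cutoff creates bias.
    """
    return 0.5 * B * sum(abs(w) * x * x for w, x in zip(ws, xs))


# Hypothetical one-sided design: win margins 0.1, 0.2, ..., 10 with bandwidth h = 10.
xs = [i / 10 for i in range(1, 101)]
ws = local_linear_weights(xs, h=10.0)

for B in (0.1, 2.0):
    print(B, worst_case_bias(ws, xs, B))
```

The bound is linear in B, so a 20-fold larger B gives a 20-fold larger worst-case bias. Once that bias dominates the standard error, a bias-aware interval widens almost one-for-one with it.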

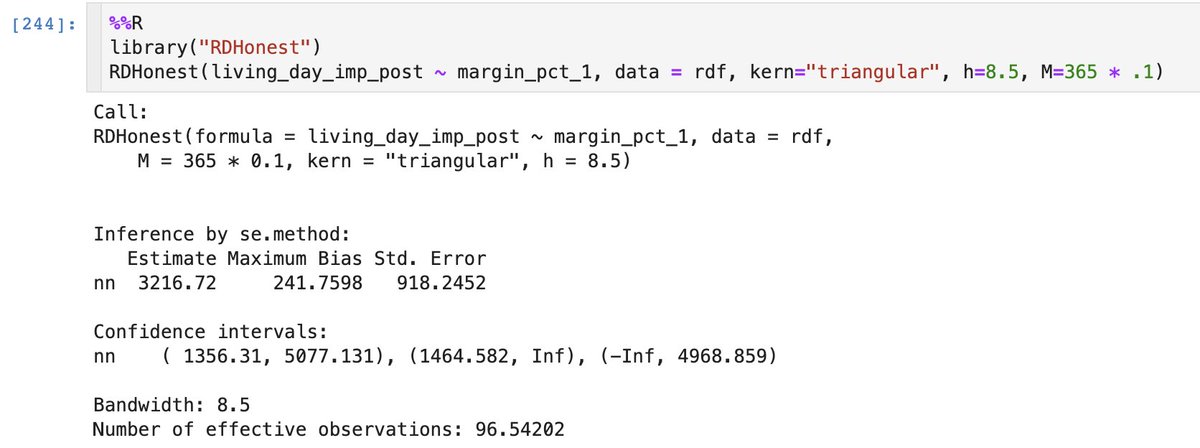

A much more conservative bound of B = 2 years/margin^2 widens the CI into something completely uninformative. (11/n)

As with rdrobust, the CI shifts toward zero if we use the uniform kernel (you really need to squint at the cutoff to see the discontinuity), but RDHonest is perhaps a little more sensitive to the bandwidth. (12/n)

So is there a longevity effect of 2-10 years? Do the best methods we currently have allow us to make credible inferences about RD effects? What is the state of regression discontinuity? (13/13)

![Figure for tweet 4/n: rdplot with default bin selection and per-bin 95% CIs](https://pbs.twimg.com/media/Eb9-HV3WsAYvPgd.jpg)

![Figure for tweet 5/n: rdplot with more bins](https://pbs.twimg.com/media/Eb9-oUDU0AIOLyu.jpg)

![Figure for tweet 5/n: rdplot with more bins](https://pbs.twimg.com/media/Eb9_9zSXsAYStFR.jpg)