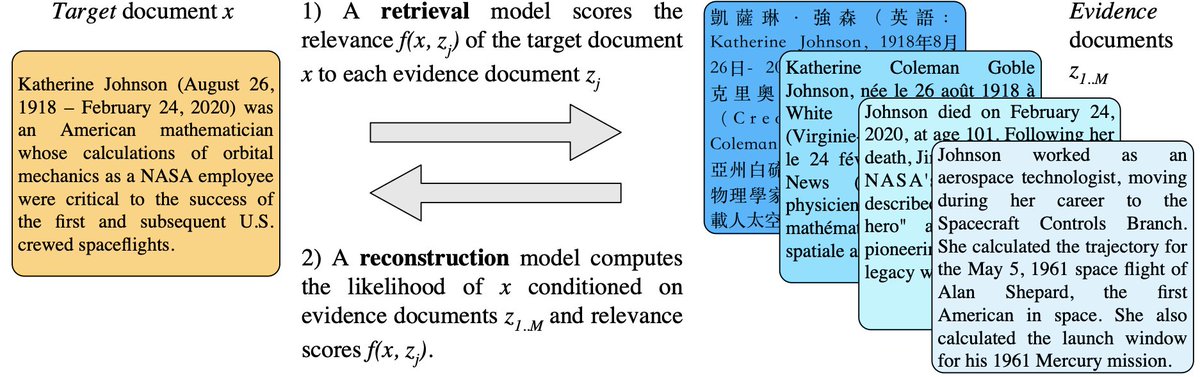

Happy to share MARGE, our new work on rethinking pre-training: given a document, we first retrieve related documents, and then paraphrase these to reconstruct the original. MARGE works well for generation and classification in many languages, sometimes without supervision. (1/6)
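The loop starts by finding related documents. Below is a minimal sketch of that retrieval step. It assumes each document has already been mapped to an embedding vector and that relatedness is measured by cosine similarity. The toy vectors and the `retrieve` helper are hypothetical illustrations, not MARGE's actual code.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def retrieve(target_idx, embeddings, k):
    """Return indices of the k documents most similar to the target, excluding the target itself."""
    target = embeddings[target_idx]
    scored = [
        (cosine(target, emb), i)
        for i, emb in enumerate(embeddings)
        if i != target_idx
    ]
    scored.sort(reverse=True)  # highest similarity first
    return [i for _, i in scored[:k]]

# Toy 3-d "document embeddings" (hypothetical values for illustration only).
docs = [
    [1.0, 0.0, 0.0],  # target document
    [0.9, 0.1, 0.0],  # close paraphrase of the target
    [0.0, 1.0, 0.0],  # unrelated
    [0.7, 0.0, 0.7],  # partially related
]
print(retrieve(0, docs, k=2))  # [1, 3]
```

The retrieved documents would then be the input from which the model tries to reconstruct the target.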

Unlike masked language models, this pre-training objective is closely related to several end tasks, such as summarization, retrieval and translation (e.g. BLEU scores of 35 with the raw pre-trained model). Let's build models that can do more tasks with less supervision! (2/6)

By retrieving relevant facts during pre-training, the model can focus on learning to paraphrase, rather than memorizing encyclopedic knowledge. This approach also seems to make MARGE somewhat less prone to hallucinating facts when generating. (3/6)

This auto-encoder framework simplifies the retrieval problem (compared to related recent models like REALM and RAG), allowing us to train the retrieval and reconstruction models jointly from a single objective and a random initialization. (4/6)
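A single reconstruction objective can also train the retriever if relevance scores bias the decoder's attention over the retrieved documents. Because of that bias, the reconstruction loss depends on the scores. The sketch below assumes an additive `beta * relevance` bias on attention logits. The names `biased_attention`, `mass_per_doc`, `beta`, and the toy numbers are hypothetical, not MARGE's implementation, and only the forward pass is shown.

```python
import math

def softmax(xs):
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def biased_attention(token_scores, token_doc, relevance, beta):
    """Attention weights over evidence tokens.

    token_scores: raw attention logits from one target position to each evidence token.
    token_doc: index of the evidence document each token came from.
    relevance: one relevance score per evidence document (hypothetical retriever output).
    beta: scale of the relevance bias (assumed hyperparameter).
    """
    logits = [s + beta * relevance[d] for s, d in zip(token_scores, token_doc)]
    return softmax(logits)

def mass_per_doc(weights, token_doc, n_docs):
    """Total attention weight assigned to each evidence document."""
    mass = [0.0] * n_docs
    for w, d in zip(weights, token_doc):
        mass[d] += w
    return mass

# Two evidence documents with two tokens each; raw token scores are all equal.
scores = [0.0, 0.0, 0.0, 0.0]
doc_of_token = [0, 0, 1, 1]
relevance = [1.0, 0.0]  # document 0 judged more relevant

w = biased_attention(scores, doc_of_token, relevance, beta=math.log(3))
print([round(x, 3) for x in mass_per_doc(w, doc_of_token, 2)])  # [0.75, 0.25]
```

With `beta = 0` the two documents get equal attention. A larger `beta` shifts attention toward the document scored as more relevant. In an autodiff framework, gradients from the reconstruction loss would flow back through `relevance` into whatever model produced it.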

To my knowledge, this is the first competitive alternative to variants of masked language modelling. I hope this work both encourages exploration of other alternatives to MLMs and leads to a better understanding of what pre-training is really learning. (5/6)

Paper is here: https://arxiv.org/abs/2006.15020. Joint work with amazing co-authors @gh_marjan, Gargi Ghosh, Armen Aghajanyan, Sida Wang, @LukeZettlemoyer (6/6)