Excited to share our work @GoogleAI on Object-centric Learning with Slot Attention!

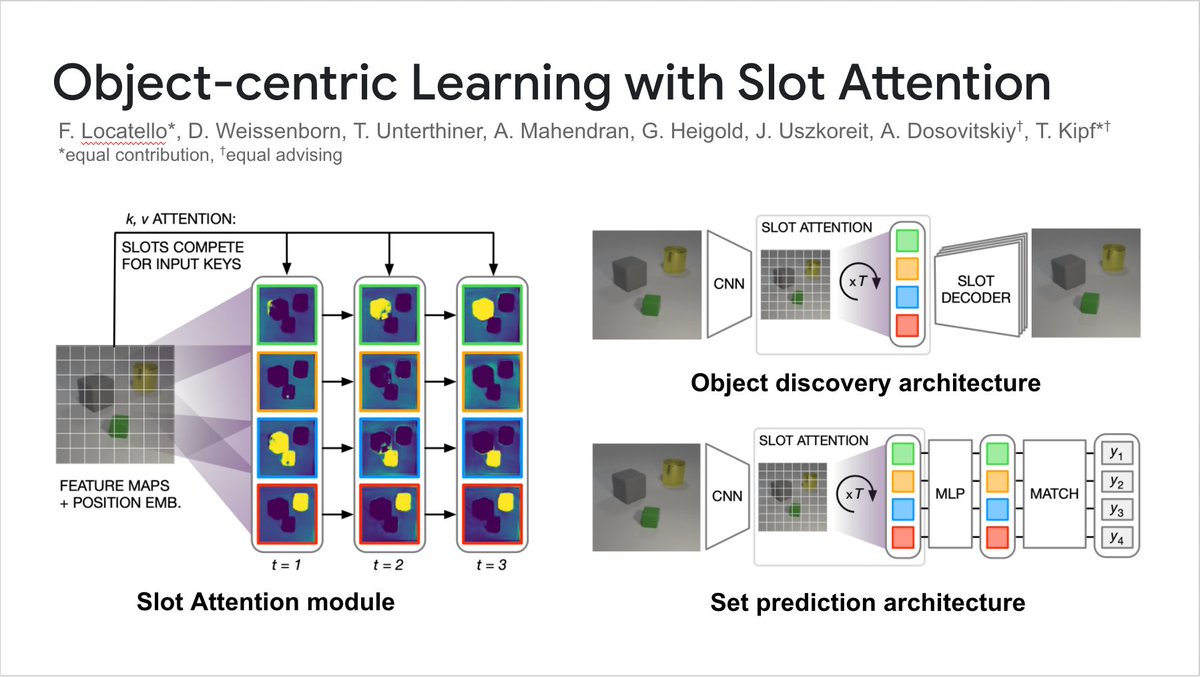

Slot Attention is a simple module for structure discovery and set prediction: it uses iterative attention to group perceptual inputs into a set of slots.

Paper: https://arxiv.org/abs/2006.15055

[1/7]

Slot Attention is related to self-attention, with some crucial differences that effectively turn it into a meta-learned clustering algorithm.

Slots are randomly initialized for each example and then iteratively refined. Everything is symmetric under permutation.

[2/7]
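
To make the clustering analogy concrete, here is a minimal NumPy sketch of the iterative grouping step. It is a simplification under stated assumptions, not the paper's implementation: the learned slot update (GRU, LayerNorm, MLP) is left out and each slot is simply overwritten by its attention-weighted mean, and the names `slot_attention`, `params`, `w_q`, `w_k` and `w_v` are hypothetical. The key difference from ordinary self-attention shown here is that the softmax runs over the slot axis, so slots compete for each input.

```python
import numpy as np

def softmax(x, axis):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def slot_attention(inputs, num_slots, params, num_iters=3, eps=1e-8, rng=None):
    """Simplified sketch. inputs: (N, D_in). Returns slots (K, D) and attention (N, K)."""
    rng = np.random.default_rng() if rng is None else rng
    w_q, w_k, w_v = params["w_q"], params["w_k"], params["w_v"]
    dim = w_q.shape[1]
    # Slots are randomly initialized for each example.
    slots = rng.normal(size=(num_slots, dim))
    k = inputs @ w_k  # keys,   (N, D)
    v = inputs @ w_v  # values, (N, D)
    for _ in range(num_iters):
        q = slots @ w_q                   # queries come from the slots, (K, D)
        logits = k @ q.T / np.sqrt(dim)   # (N, K)
        attn = softmax(logits, axis=1)    # normalize over slots: slots compete per input
        # Renormalize over inputs so each slot takes a weighted mean of the values.
        weights = attn / (attn.sum(axis=0, keepdims=True) + eps)
        # Assumption: plain overwrite instead of the learned recurrent update.
        slots = weights.T @ v             # (K, D)
    return slots, attn
```

With the learned update removed, each iteration looks much like a soft k-means step: assign inputs to slots, then recompute each slot as the mean of what it was assigned.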

Slot Attention can be used in a simple auto-encoder architecture that learns to decompose scenes into objects.

Compared to prior slot-based approaches (IODINE/MONet), no intermediate decoding is needed, which significantly improves efficiency.

[3/7]
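
A rough sketch of how the per-slot decoder outputs could be combined into one image, assuming (as described in the paper, to the best of my reading) that each slot is decoded once into an RGB image plus an unnormalized mask. The function name `composite` and the array layout are assumptions for illustration. Decoding happens only after the final attention iteration, which is where the efficiency gain over intermediate decoding comes from.

```python
import numpy as np

def composite(rgb, mask_logits):
    """Combine per-slot decodings into a single image.

    rgb:         (K, H, W, 3) per-slot RGB reconstructions
    mask_logits: (K, H, W, 1) per-slot unnormalized masks
    Returns the image (H, W, 3) and the normalized masks (K, H, W, 1).
    """
    # Softmax over the slot axis: at every pixel the masks sum to 1.
    shifted = mask_logits - mask_logits.max(axis=0, keepdims=True)
    masks = np.exp(shifted)
    masks = masks / masks.sum(axis=0, keepdims=True)
    # Mask-weighted sum of the per-slot images.
    image = (masks * rgb).sum(axis=0)
    return image, masks
```

The normalized masks double as a soft segmentation: the slot with the largest mask value at a pixel is the one that explains it.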

The number of slots in Slot Attention can be changed dynamically without re-training and the model generalizes well to more objects and more slots at test time.

Slot Attention learns to keep slots empty if they are not needed.

[4/7]
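
One way to see why the slot count is free to change: if all slots are sampled from a single shared Gaussian, as I understand the paper to do, then no learned parameter has a shape that depends on the number of slots. A small sketch, with hypothetical names `sample_slots`, `mu` and `log_sigma`:

```python
import numpy as np

def sample_slots(mu, log_sigma, num_slots, rng):
    """Draw num_slots initial slots from one shared Gaussian.

    mu, log_sigma: (D,) parameters shared by every slot (assumed learned).
    Returns an array of shape (num_slots, D).
    """
    noise = rng.normal(size=(num_slots, mu.shape[0]))
    return mu + np.exp(log_sigma) * noise

rng = np.random.default_rng(0)
mu, log_sigma = np.zeros(64), np.zeros(64)

# The same parameters serve any number of slots.
train_slots = sample_slots(mu, log_sigma, num_slots=7, rng=rng)
test_slots = sample_slots(mu, log_sigma, num_slots=11, rng=rng)
```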

Slot Attention can be used as a supervised set prediction model: simply put it on top of an encoder and use the predicted output as a set. Compares favorably with prior methods.

The attention mechanism learns to pick out objects despite being trained on properties only.

[5/7]
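
Because the predicted slots come out in no particular order, a set prediction loss has to match predictions to targets before comparing them. The sketch below illustrates that idea with a brute-force search over permutations and a squared-error cost. Both are stand-ins chosen to keep the example dependency-free: the paper uses Hungarian matching, and the function name `set_loss` is hypothetical.

```python
from itertools import permutations

def set_loss(pred, target):
    """Order-invariant loss between two equally sized sets of vectors.

    pred, target: lists of equal-length float sequences.
    Returns (loss, perm), where perm[i] is the index of the prediction
    matched to target i. Brute force, so only practical for small sets.
    """
    def sq_err(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    best_loss, best_perm = float("inf"), None
    for perm in permutations(range(len(pred))):
        loss = sum(sq_err(pred[p], t) for p, t in zip(perm, target))
        if loss < best_loss:
            best_loss, best_perm = loss, perm
    return best_loss, best_perm

# Same elements in a different order: the matched loss is zero.
pred = [[1.0, 0.0], [0.0, 1.0], [5.0, 5.0]]
target = [[5.0, 5.0], [1.0, 0.0], [0.0, 1.0]]
loss, perm = set_loss(pred, target)
```

For more than a handful of elements, a polynomial-time solver such as `scipy.optimize.linear_sum_assignment` applied to the pairwise cost matrix is the practical choice.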

Slot Attention is agnostic to the type of data and can in principle be used in other modalities, where grouping of representations can be beneficial, such as in point clouds or graphs, especially since Slot Attention respects permutation symmetry.

[6/7]
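
The permutation symmetry can be stated compactly. This is my paraphrase of the property in my own notation, with $\pi_i$ and $\pi_s$ denoting arbitrary permutations of the inputs and of the slots: the module is invariant to the order of the inputs and equivariant to the order of the slots.

```latex
\mathrm{SlotAttention}\big(\pi_i(\mathrm{inputs}),\ \pi_s(\mathrm{slots})\big)
  = \pi_s\big(\mathrm{SlotAttention}(\mathrm{inputs},\ \mathrm{slots})\big)
```

This is why unordered inputs such as point clouds or graph nodes fit naturally: shuffling them leaves the resulting slots unchanged.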

Slot Attention is joint work with amazing collaborators at Google Research in the Brain Team in Amsterdam/Berlin:

@FrancescoLocat8 (equal contrib.), @dirkweissenborn,

@TomUnterthiner, Aravindh Mahendran, Georg Heigold, @kyosu & Alexey Dosovitskiy (equal advising).

[7/7]

![Slot Attention is related to self-attention, with some crucial differences that effectively turn it into a meta-learned clustering algorithm. Slots are randomly initialized for each example and then iteratively refined. Everything is symmetric under permutation. [2/7]](https://pbs.twimg.com/media/EbrWSk3XsAAqM1-.jpg)

![Slot Attention can be used in a simple auto-encoder architecture that learns to decompose scenes into objects. Compared to prior slot-based approaches (IODINE/MONet), no intermediate decoding is needed, which significantly improves efficiency. [3/7]](https://pbs.twimg.com/media/EbrWW6rXkAAHye6.jpg)

![The number of slots in Slot Attention can be changed dynamically without re-training and the model generalizes well to more objects and more slots at test time. Slot Attention learns to keep slots empty if they are not needed. [4/7]](https://pbs.twimg.com/media/EbrWmetWoAElTvW.jpg)

![Slot Attention can be used as a supervised set prediction model: simply put it on top of an encoder and use the predicted output as a set. Compares favorably with prior methods. The attention mechanism learns to pick out objects despite being trained on properties only. [5/7]](https://pbs.twimg.com/media/EbrWziIWsAIF0xR.jpg)

![Slot Attention is agnostic to the type of data and can in principle be used in other modalities, where grouping of representations can be beneficial, such as in point clouds or graphs, especially since Slot Attention respects permutation symmetry. [6/7]](https://pbs.twimg.com/media/EbrXM0DWAAIHVkp.jpg)