My PhD student @samiraabnar has written a series of blog posts about her two latest papers. The first is ‘bertology’: figuring out how the Transformer arrives at its predictions by tracking attention across layers. Interpreting attention patterns is tricky... https://samiraabnar.github.io/articles/2020-04/attention_flow

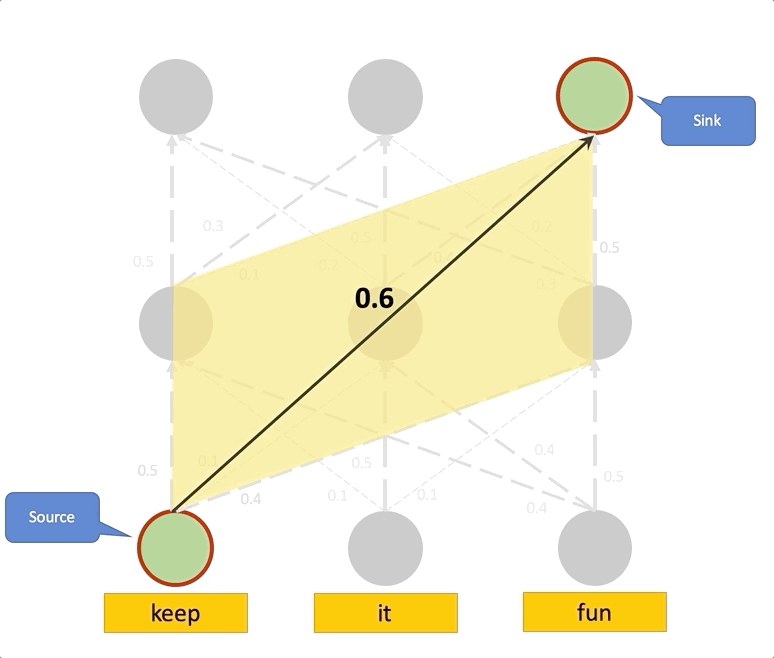

That's in part because information about a word can get scattered over many nodes. A node at position 7 might attend to a node at position 3 one layer below, which in turn attends to a node at position 5.

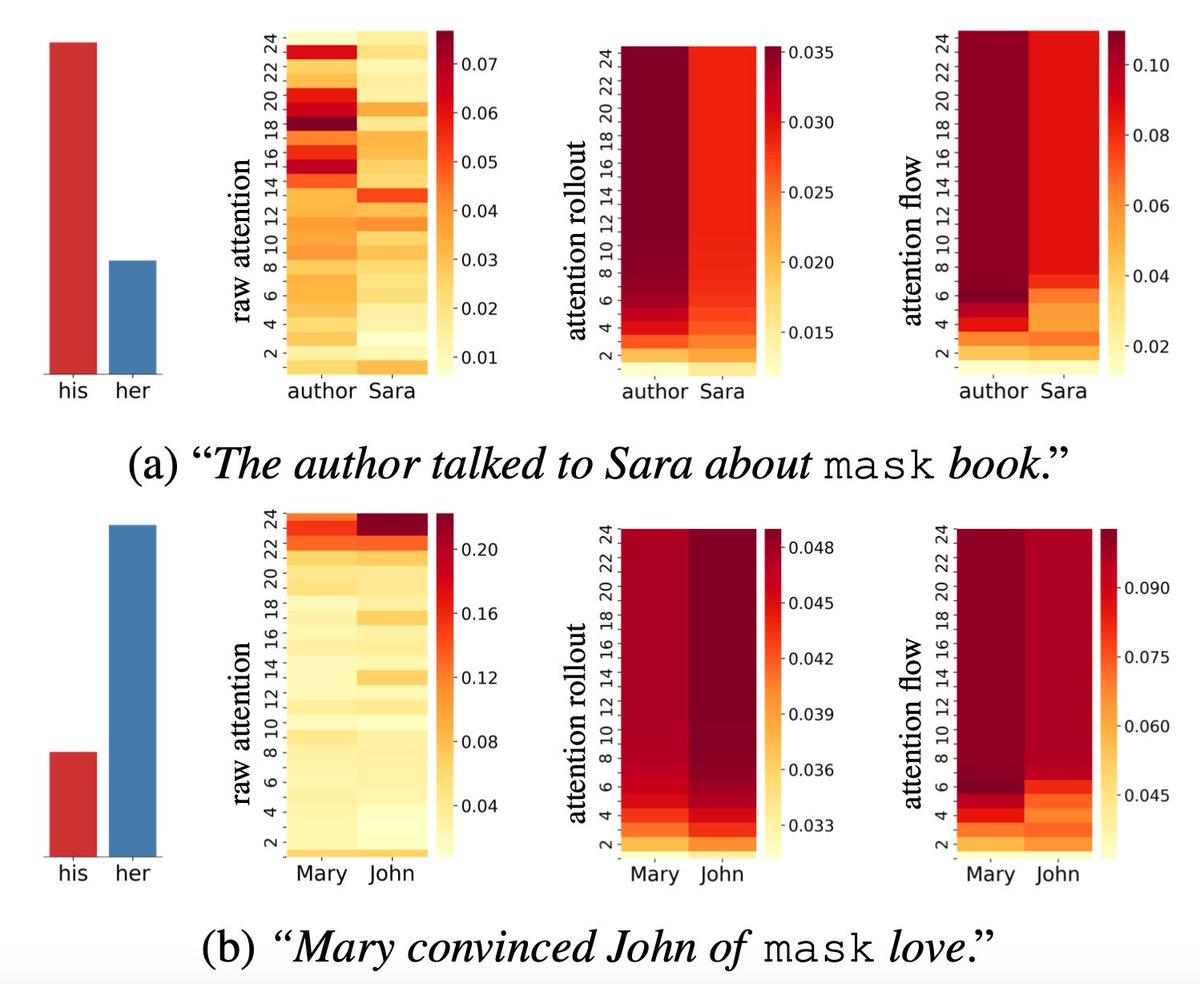

In sentence (a), the Transformer expects “his” at the mask, presumably because of ‘author’ and male bias. Across its 24 layers, the raw attention pattern is not at all consistent, but Samira’s new "attention rollout" & "attention flow" correctly lay the blame on ‘author’.
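A minimal NumPy sketch of the rollout idea, to show why composing attention across layers can recover the indirect 7 → 3 → 5 link that a single layer's raw attention misses. The blog post and paper are the authoritative descriptions; this sketch assumes attention weights arrive as one `(heads, seq, seq)` array per layer (lowest layer first), averages over heads, models the residual connection by mixing each layer's attention 50/50 with the identity, and uses the hypothetical function name `attention_rollout`. Attention flow, which treats the layers as a flow network, is not shown.

```python
import numpy as np

def attention_rollout(attentions):
    """attentions: list of per-layer arrays of shape (heads, seq, seq), lowest layer first.
    Row i of each array says how much position i attends to every position one layer below."""
    seq_len = attentions[0].shape[-1]
    rollout = np.eye(seq_len)
    for layer_att in attentions:
        att = layer_att.mean(axis=0)                  # average over heads (an assumption)
        att = 0.5 * att + 0.5 * np.eye(seq_len)       # account for the residual connection
        att = att / att.sum(axis=-1, keepdims=True)   # keep every row a distribution
        rollout = att @ rollout                       # compose with all layers below
    return rollout

# Toy version of the 7 -> 3 -> 5 chain (8 positions, 1 head; all other positions attend to themselves).
seq_len = 8
layer1 = np.eye(seq_len)
layer1[3] = 0.0
layer1[3, 5] = 1.0   # layer 1: position 3 attends entirely to position 5
layer2 = np.eye(seq_len)
layer2[7] = 0.0
layer2[7, 3] = 1.0   # layer 2: position 7 attends entirely to position 3

scores = attention_rollout([layer1[None], layer2[None]])
print(layer2[7, 5])   # 0.0: raw top-layer attention sees no link from 7 to 5
print(scores[7, 5])   # 0.25: rollout recovers the indirect link through position 3
```

Because every row of each mixed matrix sums to 1, the product does too, so each row of the rollout can be read as a distribution over input positions.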

The second blog post investigates why Recurrent Networks sometimes still outperform Transformers, building on findings of @ketran, @AriannaBisazza & @c_monz. Samira breaks down the 'recurrent inductive bias' & shows that every component contributes.

https://samiraabnar.github.io/articles/2020-05/recurrence

The 3rd & 4th posts are all about comparing models, mostly LSTMs and Transformers.

Samira first presents some very useful techniques to compare and visualize the similarities and differences between the representational spaces that models build up. https://samiraabnar.github.io/articles/2020-05/vizualization

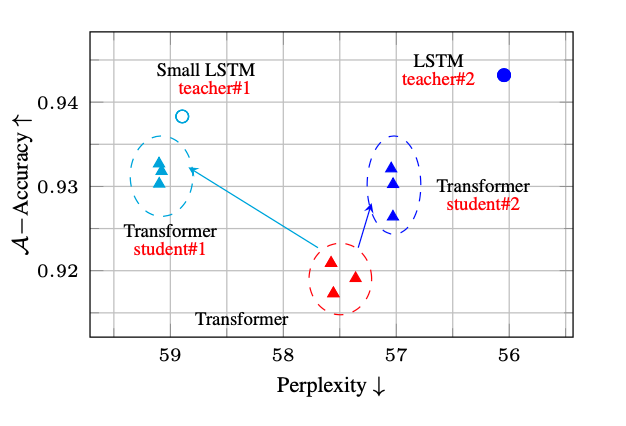
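One widely used way to compare two models' representational spaces is representational similarity analysis (RSA); the sketch below illustrates that general technique and is an assumption, not necessarily the exact method in the post. It computes, for each model, the cosine similarity between the hidden states of every pair of inputs, then correlates the two similarity structures. The function names and the random "model" states are hypothetical stand-ins for real LSTM or Transformer activations on the same inputs.

```python
import numpy as np

def similarity_matrix(reps):
    """Cosine similarity between every pair of rows (one row per input example)."""
    normed = reps / np.linalg.norm(reps, axis=1, keepdims=True)
    return normed @ normed.T

def rsa_score(reps_a, reps_b):
    """Pearson correlation between two models' similarity structures over the same inputs.
    Only (n_inputs x n_inputs) matrices are compared, so hidden sizes may differ."""
    sim_a = similarity_matrix(reps_a)
    sim_b = similarity_matrix(reps_b)
    iu = np.triu_indices(sim_a.shape[0], k=1)   # off-diagonal pairs only
    return np.corrcoef(sim_a[iu], sim_b[iu])[0, 1]

rng = np.random.default_rng(0)
model_a = rng.normal(size=(50, 64))                     # hypothetical: 50 inputs, 64-dim states
rotation, _ = np.linalg.qr(rng.normal(size=(64, 64)))   # random orthogonal matrix
model_b = model_a @ rotation                            # same geometry, different axes
model_c = rng.normal(size=(50, 32))                     # unrelated model with a different width

print(rsa_score(model_a, model_b))   # ~1.0: a rotation preserves every pairwise cosine
print(rsa_score(model_a, model_c))
```

Comparing similarity structures rather than raw vectors is what makes this usable across architectures: an LSTM and a Transformer need not share a coordinate system or even a hidden size.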

All of these concepts and techniques come together in her exciting work on "Transferring Inductive Biases Through Knowledge Distillation" (with @m__dehghani and me). The goal is to have the best of 2 worlds: the LSTM's inductive bias & the Transformer's flexibility. https://samiraabnar.github.io/articles/2020-05/indist

The exciting result is that this is indeed possible: by training a flexible "student" model (Transformer, MLP) not on the original training data but on the output of a more constrained "teacher" model (LSTM, CNN), you can let the student inherit the inductive bias of the teacher.

The student model starts to look very similar to its teacher, not only quantitatively (in performance) but also qualitatively (in its representational space). This is a beautiful use of 'distillation' (a technique otherwise mostly known for model compression).
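To make "training on the teacher's output" concrete, here is a minimal NumPy sketch of a standard temperature-scaled distillation loss in the style of Hinton et al. It is an illustration of the general technique, not the paper's exact objective: the temperature value, the `temperature ** 2` scaling, and the function names are assumptions, and the random logits are hypothetical stand-ins for real teacher (e.g. LSTM) and student (e.g. Transformer) outputs.

```python
import numpy as np

def log_softmax(logits, temperature=1.0):
    """Row-wise log-softmax of temperature-scaled logits, computed stably."""
    z = logits / temperature
    z = z - z.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """Cross-entropy from the teacher's softened distribution to the student's.

    No gold labels appear here: the teacher's soft outputs are the only target.
    Scaling by temperature**2 follows the common Hinton et al. convention."""
    p_teacher = np.exp(log_softmax(teacher_logits, temperature))
    log_p_student = log_softmax(student_logits, temperature)
    per_example = -(p_teacher * log_p_student).sum(axis=-1)
    return temperature ** 2 * per_example.mean()

rng = np.random.default_rng(0)
teacher_logits = rng.normal(size=(4, 10))   # hypothetical: batch of 4, 10 output classes
student_logits = rng.normal(size=(4, 10))

print(distillation_loss(student_logits, teacher_logits))
print(distillation_loss(teacher_logits, teacher_logits))   # lower: the student matches the teacher
```

The loss is smallest exactly when the student's softened distribution equals the teacher's, so minimizing it pushes the student to reproduce the teacher's full output distribution, including how it spreads probability over wrong answers, rather than just the top prediction.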

Finally, on a personal note: when Samira dug up Craven & Shavlik (1996), I realized that this project might, in some ways, be the tree grown from a small seed Jude Shavlik planted in my head in my first, mind-blowing Machine Learning course in '97. Thanks!

https://arxiv.org/abs/2006.00555

https://twitter.com/samiraabnar/status/1275785994768396291

Also see this thread, featuring some related work on knowledge distillation & grammatical structure, which I was unaware of when we wrote the papers above. https://twitter.com/wzuidema/status/1296209339074727947