The iron law of computing is GIGO: Garbage In, Garbage Out. Machine learning does not repeal it. Do statistical analysis of skewed data, get skewed conclusions. This is totally obvious to everyone except ML grifters whose hammers are perpetually in search of nails.

1/

Unfortunately for the human race, there is one perpetual, deep-pocketed customer who always needs as much empirical facewash as the industry can supply to help overlay their biased practices with a veneer of algorithmic neutrality:

Law enforcement.

2/

Here's where GIGO really shines. Say you're a police department that is routinely accused of racist policing practices, and the reason for that is that your officers are racist as fuck.

You can solve this problem by rooting out racist officers, but that's hard.

3/

Alternatively, you can find an empiricism-washer who will take the data about who you arrested and then make predictions about who will commit crime. Because you're feeding an inference engine with junk stats, it will produce junk conclusions.

4/

Give the algorithm racist policing data, and it will pat you on the back and congratulate you for fighting crime without bias. As @HRDAG writes: predictive policing doesn't predict crime, it predicts what the police will do.

https://hrdag.org/2016/10/10/predictive-policing-reinforces-police-bias/

5/
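To make the "predicts what the police will do" point concrete, here is a minimal toy sketch. It is not how any real predictive-policing product works: the two neighborhoods, the rates, the starting patrol split, and the function name `run_feedback_loop` are all invented for illustration. The only assumption it encodes is that recorded arrests scale with how many officers are present, and that next round's patrols follow this round's arrests.

```python
# Toy model, not a real predictive-policing product. All numbers are invented.

def run_feedback_loop(true_rate, patrol_share, rounds=5, patrols=100):
    """Each round, send patrols wherever past arrests were recorded."""
    share = dict(patrol_share)
    for _ in range(rounds):
        # Recorded arrests scale with how many officers are looking,
        # not only with how much crime there actually is.
        arrests = {hood: patrols * share[hood] * true_rate[hood] for hood in share}
        total = sum(arrests.values())
        # The "prediction": next round's patrols follow this round's arrests.
        share = {hood: arrests[hood] / total for hood in arrests}
    return share

# Two hypothetical neighborhoods with identical underlying crime rates...
true_rate = {"north": 0.10, "south": 0.10}
# ...but the department starts out sending 70% of its patrols to one of them.
biased_start = {"north": 0.70, "south": 0.30}

final_share = run_feedback_loop(true_rate, biased_start)
print(final_share)
```

In this sketch the underlying rates are equal, yet the lopsided patrol split never corrects itself: the arrest data only ever reflects where officers were sent, so the "forecast" hands the starting bias straight back.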

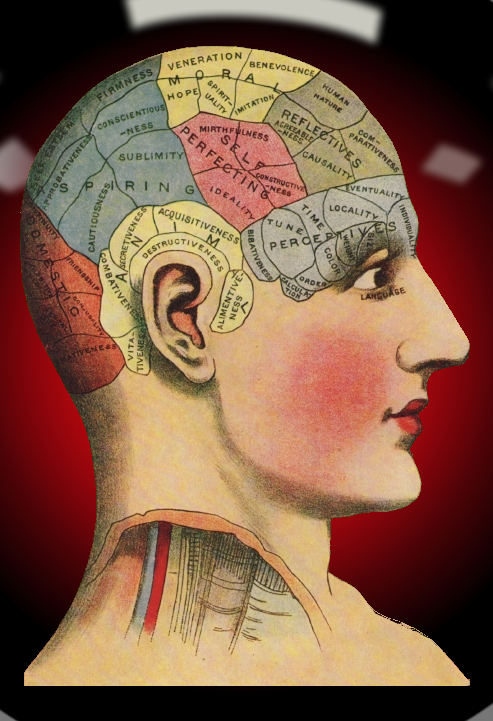

As odious as predictive policing technologies are, it gets much worse. Because if you want to really double down on empiricism-washing, there's the whole field of phrenology - AKA "race science" - waiting to be exploited.

6/

Here's how that works: you feed an ML system pictures of people who have been arrested by racist cops, and call it "training a model to predict criminality from pictures."

Then you ask the model to evaluate pictures of people and predict whether they will commit crimes.

7/

This system will assign a high probability of criminality to anyone who looks like people the cops have historically arrested. That is, brown people.

"Predictive policing doesn't predict crime, it predicts what the police will do."

8/
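The same thing can be sketched for a classifier trained on arrest labels. This is a deliberately crude stand-in, not the method of any paper: the "model" is just a frequency table, the groups `a` and `b` stand in for whatever visual features an image model would pick up on, and the counts are made up. Both groups offend at the same rate; only the arrest rate differs.

```python
from collections import Counter

def make_records():
    """Build hypothetical (group, offended, arrested) records with invented counts."""
    records = []
    # Both groups: 1000 people, 100 offenders. Group "a" offenders are
    # arrested far more often (80 vs 20) purely because of who gets policed.
    for group, arrested_offenders in (("a", 80), ("b", 20)):
        for i in range(1000):
            offended = i < 100
            arrested = i < arrested_offenders
            records.append((group, offended, arrested))
    return records

def train_on_arrests(records):
    """'Train' by estimating P(arrested | group). The model never sees `offended`."""
    totals, arrests = Counter(), Counter()
    for group, _offended, arrested in records:
        totals[group] += 1
        arrests[group] += arrested
    return {group: arrests[group] / totals[group] for group in totals}

records = make_records()
scores = train_on_arrests(records)
offense_rate = {
    g: sum(offended for grp, offended, _ in records if grp == g) / 1000
    for g in ("a", "b")
}
print("offense rate:", offense_rate)
print("model score: ", scores)
```

The offense rates come out identical and the scores do not, because the only signal in the labels is who got arrested. Swap the frequency table for a deep network and the pictures for the group label, and the arithmetic underneath is the same.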

It would be one (terrible) thing if this were merely the kind of thing you got in a glossy sales brochure. But it gets (much) worse: researchers who do this stupid thing then write computer science papers about it and get them accepted in top scholarly publications.

9/

For example: "Springer Nature — Research Book Series: Transactions on Computational Science and Computational Intelligence" is publishing a neophrenological paper called "A Deep Neural Network Model to Predict Criminality Using Image Processing."

10/

The title is both admirably clear and terribly obscure. You could subtitle it: "Keep arresting brown people."

A coalition of AI practitioners, tech ethicists, computer scientists, and activists has formed a group to push back against this, called @forcriticaltech.

11/

As its inaugural action, the Coalition for Critical Technology has published a petition calling on Springer to cancel publication of this junk science paper.

https://medium.com/@CoalitionForCriticalTechnology/abolish-the-techtoprisonpipeline-9b5b14366b16

12/

The petition also calls on other publishers to adopt a promise not to publish this kind of empiricism-washing in the future.

You can sign it too.

I did.

eof/
