If the idea of tech not being neutral is new to you, or if you think of tech as just a tool (that is equally likely to be used for good or bad), I want to share some resources & examples in this thread. Please feel free to suggest/add additional resources! 1/

A classic paper exploring this is "Do Artifacts Have Politics?" by Langdon Winner (written in 1980), on whether machines/structures/systems can be judged for how they embody specific types of power 2/

https://www.cc.gatech.edu/~beki/cs4001/Winner.pdf

Surveillance technologies are aligned with power. The panopticon is not going to be used to hold the warden accountable: 3/ https://twitter.com/math_rachel/status/1268296831403950080?s=20

Paper by @farbandish on how algorithms to externally assign/label a person’s gender are inherently anti-trans: 4/ https://twitter.com/math_rachel/status/1125225723847794688?s=20

Joe Redmon, one of the creators of the YOLO architecture for object detection, later stopped doing computer vision research because of the implications: 5/ https://twitter.com/pjreddie/status/1230524770350817280?s=20

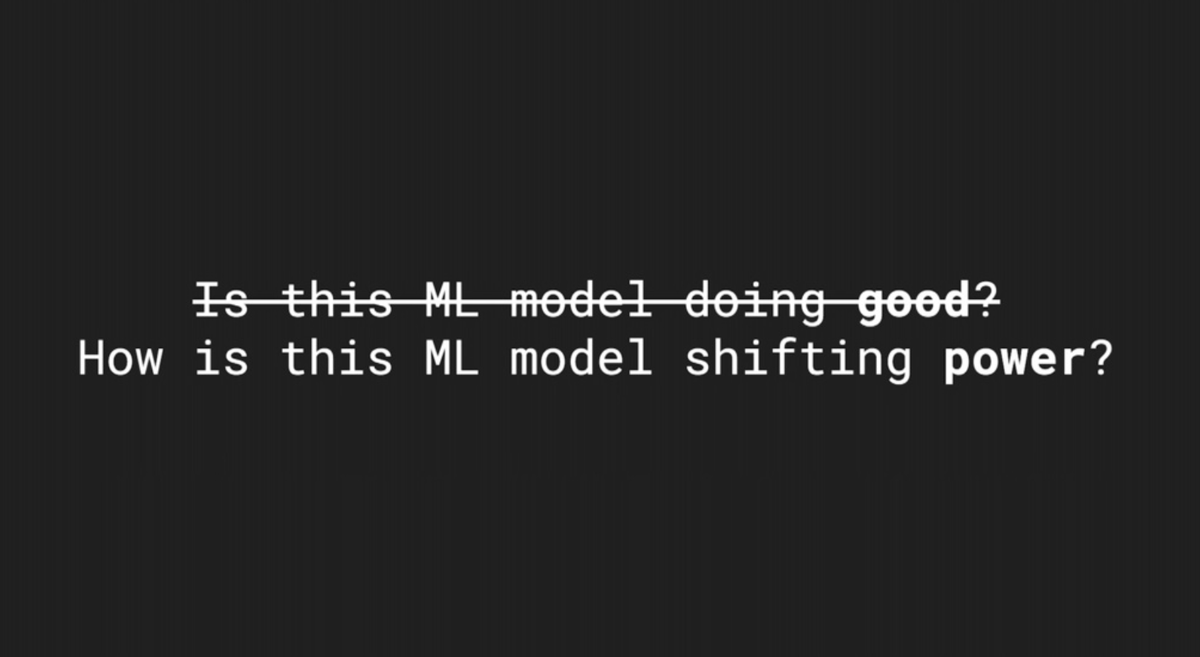

Great talk from @radical_ai_ asking us to consider how our machine learning models shift power, given at @QueerinAI #NeurIPS2019 6/

https://slideslive.com/38923453/the-values-of-machine-learning

It is a pattern throughout history that surveillance is used against those considered "less than", against the poor man, the person of color, the immigrant, the heretic. It is used to try to stop marginalized people from achieving power @alvarombedoya 7/ https://twitter.com/math_rachel/status/1159243937179029504?s=20

Papers by @morganklauss on how computers classify/categorize identity, race, & gender, and some of the implications & issues around this: 8/ https://twitter.com/morganklauss/status/1274913849871749121?s=20

A curriculum by @BlakeleyHPayne for middle-schoolers to explore how technology is not neutral & to start redesigning it: https://twitter.com/BlakeleyHPayne/status/1274914565608935424?s=20

Some people mistakenly think that more diverse datasets can solve all our problems. When I teach @harini824's framework of types of bias, I particularly emphasize *historical bias*, which can't be addressed with a different dataset or model: 10/ https://twitter.com/math_rachel/status/1191065892341239808?s=20

Yes, 💯 recommend the works in this thread: https://twitter.com/mlmillerphd/status/1275031215175798785?s=19
