Thread on AI racism!

We tend to think that AI is perfect and impartial, but it carries out the same biases humans have.

Every AI application requires data sets to learn. Data must be selected by humans. Software engineers are overwhelmingly white, so you get stuff like this. https://twitter.com/Chicken3gg/status/1274314622447820801

AI isn't a utopian tool that is neutral to people's differences. It is oblivious to our inequalities, and thus fails to fight them.

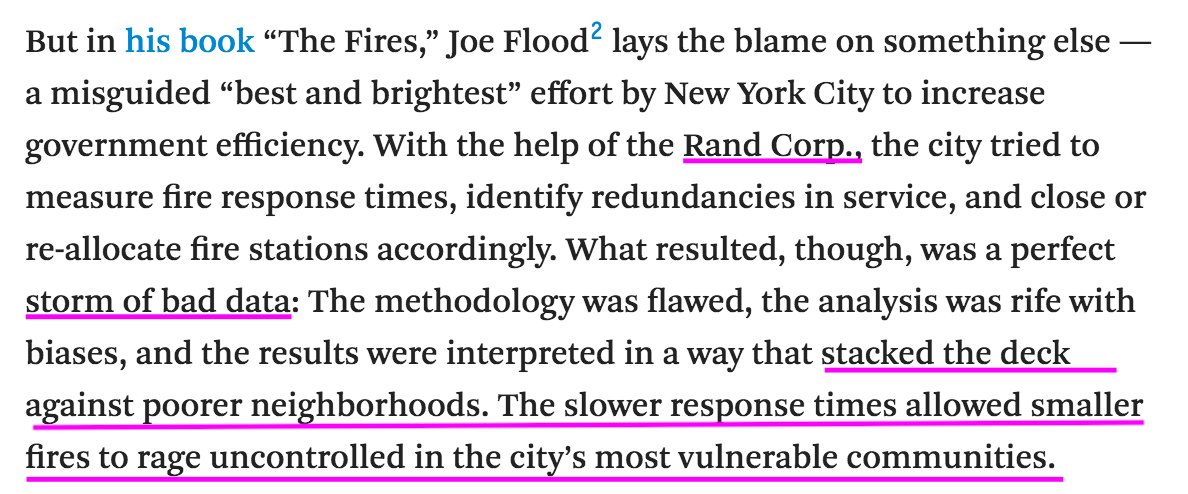

Take the RAND Fire project algorithm in the late 70s. It advised the closure of FDNY locations, mostly in poor areas. The Bronx was decimated.

Here's an article on the disastrous effects of the RAND Fire project algorithm. It includes footage of the South Bronx in the early 80s, with many buildings charred or even burned to the ground. https://fivethirtyeight.com/features/why-the-bronx-really-burned/

AI carries out biases as ruthlessly as humans do, and in the exact same way: if it is never explicitly taught to look at certain factors, it will ignore them.

Here's another example, where an algorithm prescribed more healthcare for white people than for POC: https://www.nature.com/articles/d41586-019-03228-6

This is why it is not enough to "not be racist"; you must be anti-racist. Otherwise, the same biases remain.

Today, we still see AI applications for facial recognition, self-driving cars, and even soap dispensers that fail to recognize Black skin. https://gizmodo.com/why-cant-this-soap-dispenser-identify-dark-skin-1797931773

AI also learns from the ways we behave toward each other. Here's a Microsoft chatbot that wrote messages based on what it learned from Twitter.

It started saying incredibly racist things within the first few days. https://gizmodo.com/here-are-the-microsoft-twitter-bot-s-craziest-racist-ra-1766820160

In the few instances where AI developers try to be considerate of Black people, it's not that great. The tech world is famous for lacking transparency and not obtaining proper consent. https://fortune.com/2019/10/08/why-did-google-offer-black-people-5-to-harvest-their-faces-eye-on-a-i/

So basically, #AI is racist just like everything else. Don't trust algorithms to avoid prejudice. AI literally operates under the idea of "I don't see colour"... and as we know, that doesn't work out well. #BlackLivesMatter #BLM #technology #BlackTechTwitter #thread

Shoutout to @walmartyr @RyersonU @RTARyerson for teaching this stuff. It's one of the most useful courses I've ever taken.

Someone asked in the replies: How can this happen, even at companies that hire plenty of East Asian, South Asian, and Middle Eastern programmers?

This is why: https://twitter.com/heyromanyk/status/1275146073187778562?s=20

Here are some statistics about the lack of diversity in tech companies. Specifically, there has been very little progress in recruiting, hiring, and retaining Black, Indigenous, and Latinx employees. https://www.wired.com/story/five-years-tech-diversity-reports-little-progress/