no, spellchecker, please don't auto-correct "ebcdic" to "bodice"

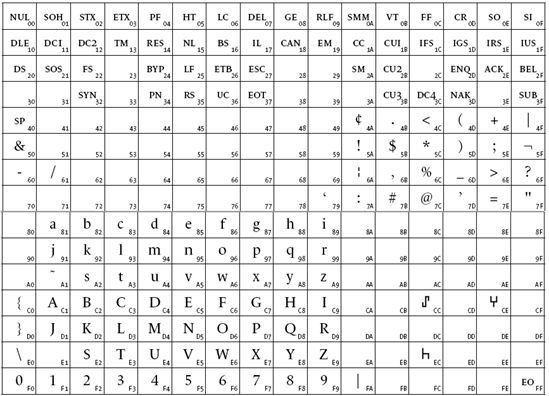

EBCDIC was devised by IBM in the early 60s. It's a family of character encodings used on their System/360 mainframes and descendants. It's based on earlier punched-card encodings and is notable for being one of the only encodings to not have a contiguous alphabet

because having ABC at 0xC1-0xC3 is fine, but to go from I to J, you have to jump from C9 to D1.
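
To see the gaps for yourself, here's a minimal sketch using Python's built-in `cp037` codec. That's just one EBCDIC code page, picked here as an assumption (the family has many variants). The helper names `ebcdic_codes` and `gaps` are made up for this example.

```python
import string

def ebcdic_codes(text: str) -> list[int]:
    """Return the cp037 (one EBCDIC variant) byte value of each character."""
    return list(text.encode("cp037"))

def gaps(text: str) -> list[tuple[str, str, int]]:
    """List adjacent character pairs whose codes are not consecutive."""
    codes = ebcdic_codes(text)
    return [
        (text[i], text[i + 1], codes[i + 1] - codes[i])
        for i in range(len(text) - 1)
        if codes[i + 1] - codes[i] != 1
    ]

# Walk the uppercase alphabet and report every place the codes jump.
for left, right, step in gaps(string.ascii_uppercase):
    print(f"{left} -> {right}: jump of {step}")
```

In this code page the alphabet breaks in two places: between I and J, and again between R and S.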

The interesting thing about the timing of EBCDIC was that it was designed at the same time as ASCII.

EBCDIC didn't come out until 1963, but work on ASCII started in 1960, and IBM had four staff on the 21-member committee that designed ASCII.

but ASCII wasn't finalized until 1963, and IBM didn't have time to release new hardware in 1963 built on a standard that was being written at the same time.

So they built their own, separate encoding.

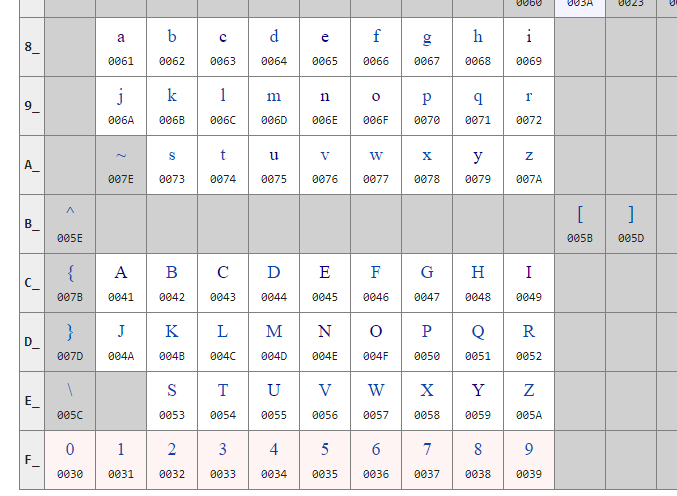

using the insanity that is EBCDIC didn't seem to stop the System/360 from being one of the most successful mainframe systems of all time, being heavily used by minor government projects like NASA's Apollo program.

Yes, we landed people on the moon using a character encoding that didn't even understand how to lay out the alphabet.

in fairness to EBCDIC the amazing success of the System/360 is probably directly responsible for the still-going-strong-after-57-years hacker hate for EBCDIC. That system was so popular and so long lasting that we'll never be free of EBCDIC, even in a world that has moved on

after all, IBM is still selling servers TODAY: you can buy one, load an old System/360 program onto it, and it'll still work.

It'll probably be running inside like 4 layers of VMs, but it'll run.

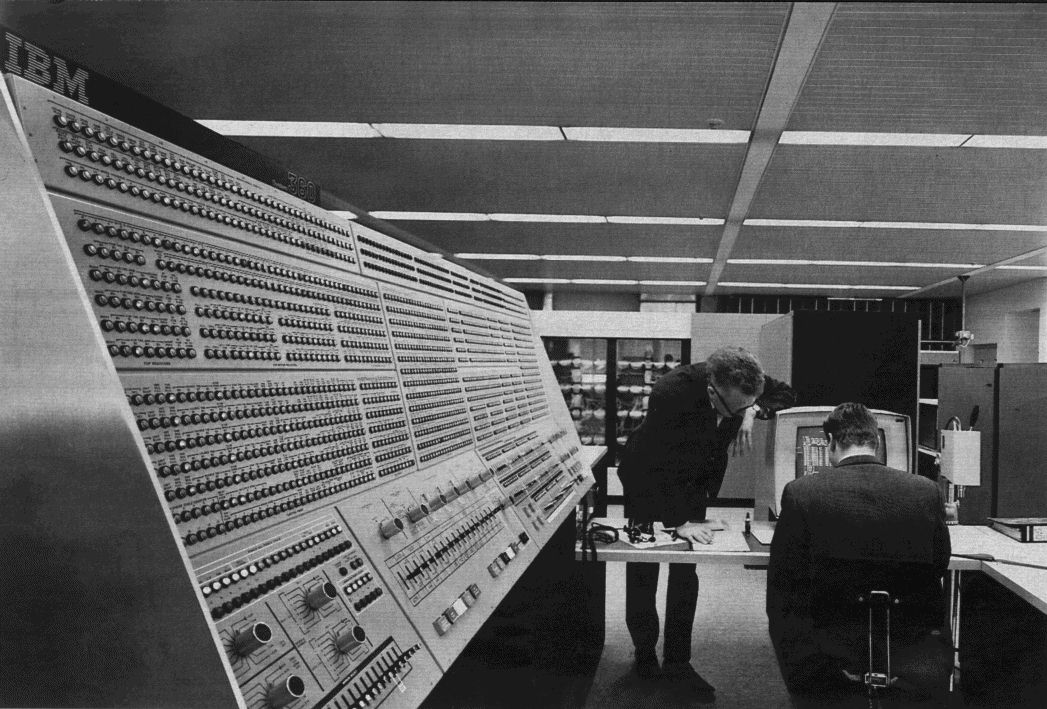

and if you, like everyone, are wondering "why the heck is it laid out like that?" the answer has to do with the "BCD" part of "EBCDIC": Extended Binary Coded Decimal Interchange Code

so Binary Coded Decimals are a way to represent digits in computers that doesn't just use the traditional binary method: instead, it puts a single decimal digit into each 4-bit nibble.

the big difference is when you go above 9.

Instead of just using the binary for 10 (1010), you go to two BCD digits:

0001 and 0000.

which would be "16" if you treated it as an 8-bit binary number, but since you're treating it as separate 4-bit nibbles, it's a 1 and a 0, so it looks like "10"
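
Here's a rough sketch of that packing, one decimal digit per nibble. The function names `to_bcd` and `from_bcd` are just illustrative, not from any particular library.

```python
def to_bcd(n: int) -> int:
    """Pack a non-negative integer into BCD, one decimal digit per 4-bit nibble."""
    if n < 0:
        raise ValueError("n must be non-negative")
    result, shift = 0, 0
    while True:
        n, digit = divmod(n, 10)   # peel off the lowest decimal digit
        result |= digit << shift   # drop it into the next nibble up
        shift += 4
        if n == 0:
            return result

def from_bcd(b: int) -> int:
    """Unpack a BCD value back into an ordinary integer."""
    result, place = 0, 1
    while b:
        digit = b & 0xF
        if digit > 9:
            raise ValueError("nibble is not a valid BCD digit")
        result += digit * place
        place *= 10
        b >>= 4
    return result

print(format(to_bcd(10), "08b"))  # nibbles 0001 and 0000
print(to_bcd(10))                 # the same bits read as plain binary
```

The second print shows the "16" mentioned above: same bits, different reading.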

basically BCD was used (and is still used, somewhat) for numbers that have to interact with humans: it's very easy to convert decimal numbers to BCD, and vice versa.

like high scores in video games are a common use for BCD, which is why you get them maxing out at 999 instead of 255.

So, BCD is a sort of middle-ground between how computers think of numbers (in binary) and how humans think of numbers (in decimal): so naturally BCD is going to show up in the input/output parts of computers... as that's where the computer/human interface exists.

So, for example, the IBM 704, from 1954... You programmed these with punch cards, but naturally you have to encode text and numbers into those punch cards so the computer knows what the heck you mean.

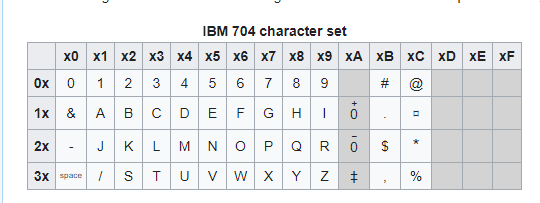

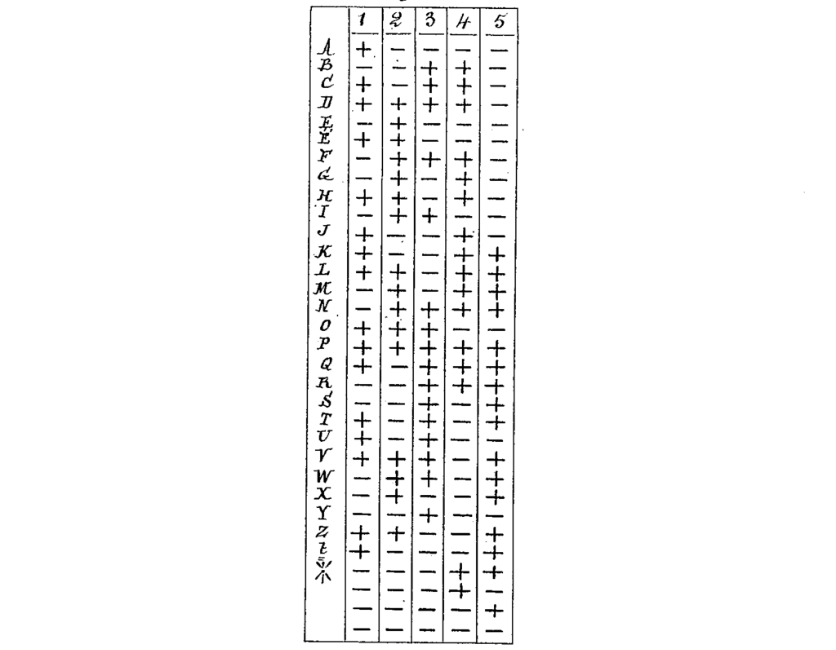

well, the IBM 704 used a character set laid out like this.

So the BCD 0-9 are just 00 through 09, then to do letters, you have A as 11, B as 12, C as 13, etc...

the weirdness of course is when you get to 19. in hex the next number would be "1A", but instead it jumps to 21.

20 is skipped because of... reasons. zero is kinda weird in a punch card so they wanted to avoid it, but it also can make sense if you think of these letters as being laid out on a 3x9 grid.

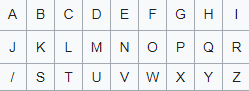

imagine you have this grid before you.

A is row 1, column 1: 11. B is row 1, column 2: 12.

J is row 2, column 1: 21.

and so on.

basically this encoding makes sense if you ever have to punch it out manually, and they didn't want to expect everyone who does that to understand binary (at least not more than 4-bit binary) or 0-based counting and things like that.
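
The row-and-column reading can be sketched like this. It's a toy model, not the real 704 table: it only covers the first two rows of letters, since those are the ones spelled out above, and `ROWS` and `grid_code` are names invented for the example.

```python
# Hypothetical model of the grid: only the first two rows of letters,
# numbered from 1 the way a person punching cards would count them.
ROWS = ["ABCDEFGHI", "JKLMNOPQR"]

def grid_code(letter: str) -> str:
    """Return the two-digit code: row number, then column number."""
    for row_number, row in enumerate(ROWS, start=1):
        column = row.find(letter)
        if column != -1:
            return f"{row_number}{column + 1}"
    raise ValueError(f"{letter!r} is not in this toy grid")

for letter in "ABIJR":
    print(letter, grid_code(letter))
```

No binary and no zero anywhere: you count rows, you count columns, you punch two digits.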

so since the late 20s, IBM had been using BCD-encoded schemes like this.

(This one is from the 50s, and there's good reasons I started there...)

EBCDIC just took those existing encodings they'd been using for decades at that point, and embedded them inside a more modern 8-bit character encoding.

So while it seems deeply weird and unusual, it's rather sensible for the time. It has a lot of history behind it.

It just happens that it was designed at the exact moment when ASCII was designed, and basically everything since then jumped on the ASCII train

so it's a weird remnant of how things were designed in the pre-ASCII era, and it hung around unexpectedly long while pretty much everything else instead built on ASCII and its descendants (like 8-bit codepages, shift-jis, and unicode)

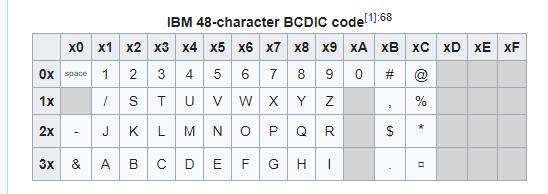

But now that I've explained the more sensible IBM 704 encoding, let's go back to the 48-character BCDIC.

This grew out of a 37-character version from 1928, just adding new characters.

But you'll notice a fun thing about the layout: IT'S BACKWARDS BY ROWS!

even EBCDIC isn't so mad that the code for A (31) comes AFTER the code for R (29)
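
What that does to sorting can be sketched like so. Only the A (31) and R (29) values come from above. The rest of the `BCDIC` table is an assumption, filled in from the usual zone-plus-digit pattern (read as hex), so treat it as illustrative rather than a verified copy of the chart.

```python
# Assumed letter codes: A and R match the values quoted above; the other
# entries are reconstructed from the zone + digit pattern, not copied
# from an official table.
BCDIC: dict[str, int] = {}
for zone, letters, first_digit in (
    (0x30, "ABCDEFGHI", 1),
    (0x20, "JKLMNOPQR", 1),
    (0x10, "STUVWXYZ", 2),
):
    for offset, letter in enumerate(letters):
        BCDIC[letter] = zone + first_digit + offset

def sort_by_code(text: str) -> str:
    """Sort characters by their (assumed) BCDIC code instead of alphabetically."""
    return "".join(sorted(text, key=BCDIC.__getitem__))

print(sort_by_code("ABJRS"))
```

Sorting by raw code value puts the end of the alphabet first and the A-I block last, which is the "backwards by rows" effect.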

anyway the moral of the story is that if you ever get mad at unicode for not being consistently encoded (UTF-8? UTF-16? which UTF-16? is it really UCS-2? Which one?), just remember, it could always be worse

UTF-8: it could always be worse (UCS-2)

UCS-2: it could always be worse (8-bit codepages)

8-bit codepages: it could always be worse (EBCDIC)

EBCDIC: it could always be worse (48-character BCDIC)

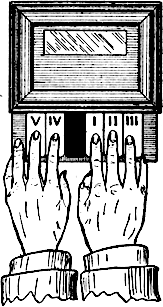

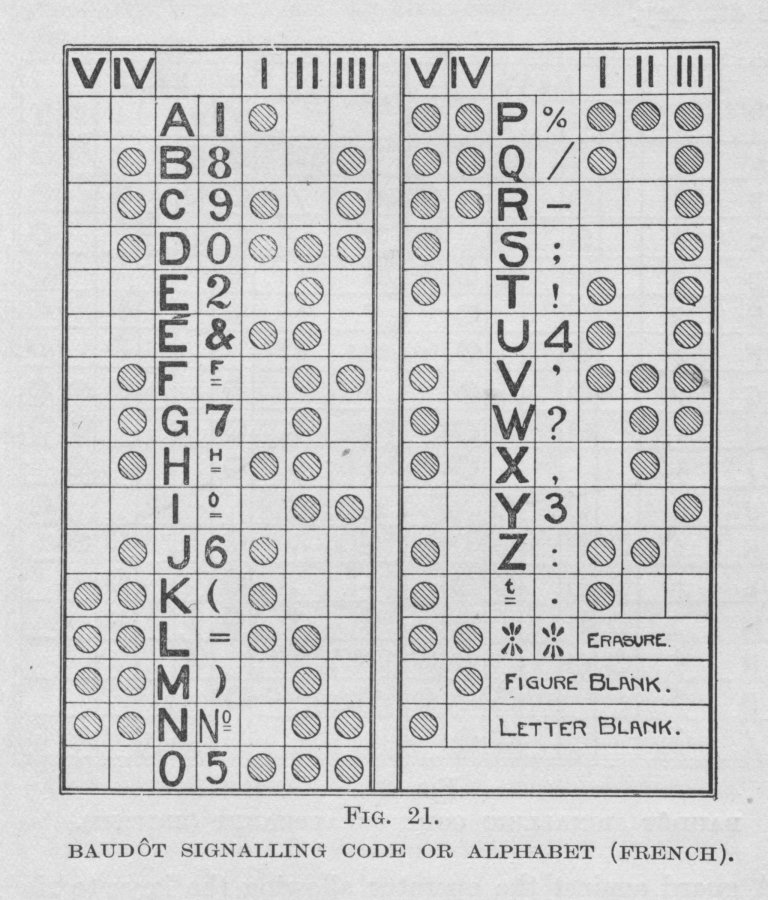

and I guess that can always be worse, because the original baudot codes (plural, because there are multiple mutually incompatible variants!) were not laid out in any kind of binary order at all: they were typed out directly by hand, and the designers wanted to minimize hand fatigue

and it's just gonna be used by trained operators with special keyboards, so WHY NOT make them memorize all these arbitrary bit-patterns?

And you know what we call that?

I mean, besides being unnecessarily obtuse and complicated?

JOB SECURITY!

BTW, just to prove that this was fractally insane? those 5 baudot keys have numbers, 1-5.

They're laid out like this:

which is why a real baudot signalling guide would look like this, with 1-3 on the right, and 4-5 on the left.

That 1-5 version above is way harder to read and translate into actual key presses.

Also note that it's modal, like shift-jis: A given symbol (01000) doesn't mean anything unless you know if you're in figure mode or letter mode.

With figure mode, it's a 2. With letter mode, it's an E.
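
A tiny sketch of what "modal" means for a decoder. The only real table entry here is 01000 (E or 2, as above). The `"LTRS"` and `"FIGS"` tokens are placeholders standing in for the actual shift codes, and starting in letter mode is an assumption.

```python
# Only the 01000 entries come from the text above. "LTRS" and "FIGS" are
# placeholder tokens, not real bit patterns.
LETTERS = {"01000": "E"}
FIGURES = {"01000": "2"}

def decode(symbols: list[str]) -> str:
    """Decode a symbol stream, tracking which shift mode we are in."""
    table = LETTERS  # assumption: the stream starts in letter mode
    out = []
    for symbol in symbols:
        if symbol == "LTRS":
            table = LETTERS
        elif symbol == "FIGS":
            table = FIGURES
        else:
            out.append(table.get(symbol, "?"))  # "?" for anything not in the toy table
    return "".join(out)

print(decode(["01000", "FIGS", "01000", "LTRS", "01000"]))
```

Same five bits three times, three results that depend entirely on what came before them. Miss one shift symbol and everything after it is garbage.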

I really need to take apart a cheap kid's electronic piano and build my own baudot keyboard.

It's not really any sillier than my binary keyboard or my unary keyboard or my analog dial keyboard

BTW, the unit of transmission speed, the baud? it's named after this same guy, Émile Baudot.

anyway I'm gonna get out of here and try not to think about character encoding for a minute so that my head will stop hurting.

BTW, a fun thing about BCD encoding (for just numbers, not character sets) is that several CPUs include instructions for it.

The 6502 (used in the Apple II, C64, NES, Atari 8-bits, etc) and the whole x86 line, for example. (Although on x86 they got turned off for 64-bit mode)

the weird thing is that there's a bunch of NES games that use BCD. You'd think that'd be perfectly normal, since the NES uses a 6502... except it doesn't, actually.

The NES runs on a Ricoh 2A03/2A07, which is a variation of a 6502 that makes some minor changes:

1. It adds sound support, DMA, and controller polling

2. It removes BCD support (the 6502's decimal mode does nothing on this chip)

So they still used BCD, even though they had to emulate it using other instructions. Kinda weird, but I guess it made sense.
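
Here's one way doing BCD by hand could look, as a sketch: keep the score as one decimal digit per slot and ripple the carry yourself. This is an illustration in Python, not how any particular NES game does it, and `add_score` is a made-up name.

```python
def add_score(score: list[int], points: list[int]) -> list[int]:
    """Add two equal-length digit lists (most significant digit first)."""
    if len(score) != len(points):
        raise ValueError("digit lists must be the same length")
    result = [0] * len(score)
    carry = 0
    # Work from the ones digit upward, carrying whenever a digit passes 9.
    for i in range(len(score) - 1, -1, -1):
        total = score[i] + points[i] + carry
        carry = 1 if total > 9 else 0
        result[i] = total - 10 if carry else total
    return result  # a carry out of the top digit is simply dropped

print(add_score([0, 9, 9], [0, 0, 1]))
```

The payoff is on the display side: every slot is already a digit you can draw, with no division by 10 needed to print the score.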

BTW, the difference between the two versions is that the RP2A03 was used in NTSC territories and the RP2A07 was used in PAL.

The NTSC version runs at 1.79 MHz, and the PAL version runs at 1.66 MHz, in order to keep sync with the different refresh rates.

As for the x86, it's weird.

It actually supports two versions of BCD, and therefore has to include two separate instructions for each.

The two modes are 4-bit and 8-bit.

4-bit mode is like described above, 8-bit mode is basically the same except you use a whole byte for each digit
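
The two layouts side by side, as a sketch. "Packed" and "unpacked" are the usual names for what's called 4-bit and 8-bit here. The function names are invented for the example.

```python
def packed_bcd(n: int) -> bytes:
    """4-bit style: two decimal digits per byte, high nibble first."""
    if n < 0:
        raise ValueError("n must be non-negative")
    digits = str(n)
    if len(digits) % 2:
        digits = "0" + digits  # pad to a whole number of bytes
    return bytes(
        (int(digits[i]) << 4) | int(digits[i + 1])
        for i in range(0, len(digits), 2)
    )

def unpacked_bcd(n: int) -> bytes:
    """8-bit style: one decimal digit per byte, upper nibble left at zero."""
    if n < 0:
        raise ValueError("n must be non-negative")
    return bytes(int(d) for d in str(n))

print(packed_bcd(1234).hex())    # two bytes
print(unpacked_bcd(1234).hex())  # four bytes
```

The unpacked form wastes half of every byte, but OR-ing 0x30 onto each digit gives you its ASCII character directly.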

it also doesn't have a BCD "mode" or actual BCD mathematical instructions. You can't go "all math is now in BCD!" or say "subtract a BCD number from this other BCD number"

instead, they're based on the idea of ADJUSTING INCORRECT RESULTS

so like you'd load a register with 8, then you want to add 4 to it.

Instead of doing BCD-ADD AL, 4 you instead do a regular "add AL,4".

The result is 0x0C, which is not BCD!

so then you do DAA which is "Decimal Adjust AL after Addition" which sees that AL is 0x0C and changes it to 0x12, which IS the correct BCD value.
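
A rough Python model of that add-then-adjust dance, following my reading of the documented DAA behaviour. It's a sketch for intuition, not a cycle-accurate emulator, and `add8` and `daa` are just names for this example.

```python
def add8(al: int, value: int) -> tuple[int, bool, bool]:
    """Plain 8-bit binary add; returns (AL, carry flag, auxiliary carry flag)."""
    total = al + value
    af = (al & 0x0F) + (value & 0x0F) > 0x0F  # carry out of the low nibble
    return total & 0xFF, total > 0xFF, af

def daa(al: int, cf: bool, af: bool) -> tuple[int, bool, bool]:
    """Fix up AL after a binary add so it holds a valid packed-BCD result."""
    old_al, old_cf = al, cf
    if (al & 0x0F) > 9 or af:
        al = (al + 0x06) & 0xFF  # low digit overflowed past 9: skip A-F
        af = True
    else:
        af = False
    if old_al > 0x99 or old_cf:
        al = (al + 0x60) & 0xFF  # same fix for the high digit
        cf = True
    else:
        cf = False
    return al, cf, af

al, cf, af = add8(0x08, 0x04)   # the wrong-for-BCD binary result
print(hex(al))
al, cf, af = daa(al, cf, af)    # adjusted back into BCD
print(hex(al))
```

The trick is just adding 6 to any nibble that wandered into A-F territory (or overflowed), which pushes the excess into the next digit up.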

Here's the fun part: So this stuff is supported for addition, subtraction, multiplication, division.

There's two modes, 4-bit and 8-bit, right?

So that's simple, 8 instructions? NOPE! there's only 6.

So it turns out that for addition and subtraction, there's two: the 4-bit and the 8-bit modes.

For multiplication, they skipped the 4 bit mode. OK, so they just do the 8 bit mode, whatever... but division manages to be even weirder.

for addition there's DECIMAL ADJUST AFTER ADDITION and ASCII ADJUST AFTER ADDITION (decimal and ascii are what it calls 4-bit and 8-bit modes)

For subtraction, there's DECIMAL ADJUST AFTER SUBTRACTION and ASCII ADJUST AFTER SUBTRACTION

finally multiplication has ASCII ADJUST FOR MULTIPLICATION, which again is an adjust-after thing, just only in the 8-bit ASCII mode.

Division, however?

Division gets AAD: ASCII ADJUST BEFORE DIVISION

It's before! All the others are after, division is before!

I'm sure there's some kind of mathematical/silicon reason why it's this way but it's just weird.
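
One plausible reading of the before/after split, sketched in Python with the default base of 10. Multiplying two single digits gives a binary product that you split into digits afterwards. Dividing a two-digit number means you first have to glue the digits into one binary value the divider can use. `aam` and `aad` here are simplified models, not full emulations (flags are ignored).

```python
def aam(al: int) -> tuple[int, int]:
    """After multiply: split a binary product into two unpacked digits (AH, AL)."""
    return divmod(al, 10)

def aad(ah: int, al: int) -> tuple[int, int]:
    """Before divide: merge two unpacked digits into one binary value in AL."""
    return 0, (ah * 10 + al) & 0xFF

# Multiplication: do the binary multiply first, then adjust.
product = 7 * 8               # binary 56 sitting in AL
print(aam(product))           # digits 5 and 6

# Division: adjust first, then do the binary divide.
ah, al = aad(5, 6)            # digits 5 and 6 become binary 56
print(al // 8, al % 8)        # quotient and remainder
```

So the asymmetry falls out of which side of the operation the digits are on: after a multiply they need splitting, before a divide they need joining.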

Also, all of them are hardcoded to use AL or AX: they're not flexible at all.

Which is kinda weird given that the x86 is one of the least RISC designs ever

it's probably because unlike all the other instructions, this was designed at the beginning and then NEVER TOUCHED AGAIN

ahhhhhhhhhhhhhhhhhhhhhhhhh https://twitter.com/whitequark/status/1274501838876876802
