Hello, new tech feature, let's do a speculative harm analysis to consider ways that terrible people might use you (and maybe what could be done about it?): a thread. https://twitter.com/Twitter/status/1273306563994845185

(1) It took less than an hour for a comment to appear in response to the above tweet with a voice tweet that's just audio from porn. And at least one response like 'I just played this in front of my dad!'

(1a) Porn audio tweet is still there... does that suggest it's not a TOS/rule violation? Why isn't it marked as "sensitive content"? Falls clearly under the "adult content policy." Will rules be updated for things you can do in audio but not text? https://help.twitter.com/en/rules-and-policies/media-policy

(2) 3... 2.... 1... until people start posting audio tweets in response to, well, everything, that's just them yelling racist/sexist/homophobic/etc. slurs at the top of their lungs.

(2a) Possible harm mitigation: Auto-transcriptions. Shouldn't someone know whether the audio this tweet contains is just someone yelling racist slurs before clicking on it to play it? Or if it's untranscribable that it might be, oh, porn?
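To make the transcription idea concrete, here is a minimal sketch of a pre-play label. Everything in it is an assumption for illustration: `preplay_label` and `FLAGGED_TERMS` are hypothetical names, the transcript is assumed to come from some speech-to-text step that returns `None` when it fails, and the placeholder term list stands in for a real, maintained lexicon or classifier. It is not a description of anything Twitter does.

```python
from typing import Optional

# Hypothetical placeholder terms. Literal word matching is only a stand-in
# for a maintained, context-aware lexicon or classifier.
FLAGGED_TERMS = {"slur_a", "slur_b"}


def preplay_label(transcript: Optional[str]) -> str:
    """Return the label to show next to a voice tweet before it is played."""
    # No usable transcript: warn that the audio could be anything.
    if transcript is None or not transcript.strip():
        return "No transcript available: audio may contain sensitive content"

    # Normalize each word: drop surrounding punctuation and lowercase it.
    words = {w.strip(".,!?\"'").lower() for w in transcript.split()}

    if words & FLAGGED_TERMS:
        return "Transcript contains flagged language"
    return "Transcript available"
```

With this sketch, an untranscribable clip gets the "No transcript available" warning, so the listener at least knows they are clicking on something unknown.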

(3) Some variety of non-consensual recording. It's not like you can force someone to type something, but recording a convo (or, say, sex) and posting it to Twitter is likely. Can that content be taken down? Is it against the rules? Can you prove it?

(4) What is the audio version of "graphic violence"? I'm sure people are going to figure it out pretty quickly.

(5) LITERAL SCREAMING and/or audio manipulation to create high frequencies that will be painful to human ears. What current content rules might that fall under?

Quote from our paper about moderation for voice, led by @aaroniidx: "The introduction of new technologies to social interactions often results in unexpected challenges in behavioral regulation–particularly as bad actors find new ways to break rules." https://medium.com/acm-cscw/voice-based-communities-and-why-they-are-so-hard-to-moderate-b3339c1f0f6a

Here are some things that people do in Discord over chat, some of which might translate to Twitter:

- play porn very loudly (check already!)

- yell racist/sexist/etc. things

- make very loud noises intended to hurt people's ears

- "raids" where a lot of people do these at once

One of the takeaways from the Discord study is that it's really hard to moderate real-time voice chat because you can't "delete" rule-violating content, you can only delete people. At least that isn't a problem here. (Though social VR is going to be fun to moderate!)

The problem in the immediate is going to be all of these "new" ways that people find to break rules that don't exist yet. I don't know what's happening behind the scenes - maybe Twitter already thought of all of these things and is working on (probably ML) solutions.

But based on past precedent (*ahem* YouTube) I suspect that the first thing that rolls out is some kind of content ID system designed to protect copyright holders, because audio matching is much easier than racism detection.

A line from the conclusion of the @aaroniidx-led paper on voice moderation: "It can be difficult to predict how people will abuse new technology, or how rules or enforcement practices may need to change to prevent such abuse." https://medium.com/acm-cscw/voice-based-communities-and-why-they-are-so-hard-to-moderate-b3339c1f0f6a

This problem (how will we possibly know how bad people will use this tech?!) is why we need speculative ethical consideration BEFORE a new technology is rolled out. I just came up with 5 things bad actors will definitely do off the top of my head. Then you can design to mitigate.

If you work at a tech company that wants to think about HOW to do this (scaffolding ethical speculation in the design process) I am literally writing a grant proposal about this right now and would love to talk to you. Please DM me. :)

People are commenting on this thread and suggesting even more ways that this could go horribly wrong, so I'm going to keep going. (1) Voice deepfakes. We know from video that people are easily fooled by this kind of thing. https://twitter.com/htenenbaum/status/1273612371810975745?s=20

(2) Adversarial audio. Even if we do get auto-transcription (which I think is a first step in mitigating harms), how easy might it be to get around that? https://twitter.com/nprandchill/status/1273615062054383617?s=20

I was actually wondering if this feature was rolled out largely for accessibility reasons (i.e., for the visually impaired) but considering that it's totally inaccessible for the hearing impaired that seems unlikely. https://twitter.com/dj_diabeatic/status/1273383018418376705?s=20

Another important user persona: stalkers and/or abusive ex-partners. What are the privacy implications of audio? Can ambient background noise be used to identify where someone might be physically located?

As I said in a very similar thread about Zoombombing, speculating about harms is a big reason to have diversity on a design team. Who are probably the best people to think of ways tech might be used to harass people? Marginalized people who get harassed a lot. https://twitter.com/cfiesler/status/1247914696503480321?s=20

To be clear: I am not arguing that you should shut down innovation because of speculative harms. I mean, there are some cool use cases for this tech, musicians seem to like it. https://www.billboard.com/articles/news/9404649/twitter-voice-tweets-john-legend-cardi-b

HOWEVER: While innovating, you also have to think hard about all possible harms so that you're also innovating around how to mitigate these harms. It is NOT sufficient to say "once we see what harms there are to people and/or society we will figure out how to fix them."

Also: @LifeofNoods and I are framing this issue as "ethical debt" and have really been wanting to write for the popular press about it. If anyone has suggestions (better yet, contacts!) for where to pitch this, please let me know. :) (DMs are open.)

Finally (I think this is the end of this thread?) my intention isn't to call out Twitter or suggest this design team did a bad job. For all I know they've already deeply thought about these things! But I do think this is a very good example of why it's important. Let's do good. ✌️

Yes, this too! Ethical speculation should also be part of computing education. I'm actively working on this; as just one example, see: https://howwegettonext.com/the-black-mirror-writers-room-teaching-technology-ethics-through-speculation-f1a9e2deccf4 https://twitter.com/deb_lavoy/status/1273649749980319748?s=20

More ideas in replies about what could go wrong (apologies I'm not citing, a lot of replies so lost track of who said what): (1) you play an audio tweet and it's some reasonable version of "Alexa please order 100 pizzas"

(2) Even when used as intended, possible downstream effects re: the fact that, e.g., women's voices are generally perceived as less authoritative; this might just impact use patterns, perceived benefits, etc. (I don't think there's really anything to be done about this.)

(3) An unintended consequence of MY suggestion to help mitigate harm with transcriptions: audio transcription is notoriously bad with accents, which will mean disproportionate errors for non-native English speakers, etc. (Not to mention... other languages?)

This attitude = ethical debt. Also this is why it's so great for teams to include multiple dimensions of diversity. And yes, all of this is really really hard! Which is why it's important to talk about it - lessons for next time. https://twitter.com/lilririah/status/1273404316049707008?s=20

I apologize this thread is never-ending but the convo keeps giving me ideas!

What about transparency of process? Wouldn't it be great to know as part of a product launch "here are some ways we've thought about possible harms"? At the very least it might help with PR...

Also: "We don't have solutions for how to mitigate this kind of possible harm yet, but here are ways that you the user might protect yourself from X, Y, and Z."

e.g., "be aware that audio tweets might contain graphic content; you may not want to click on one near your grandma"

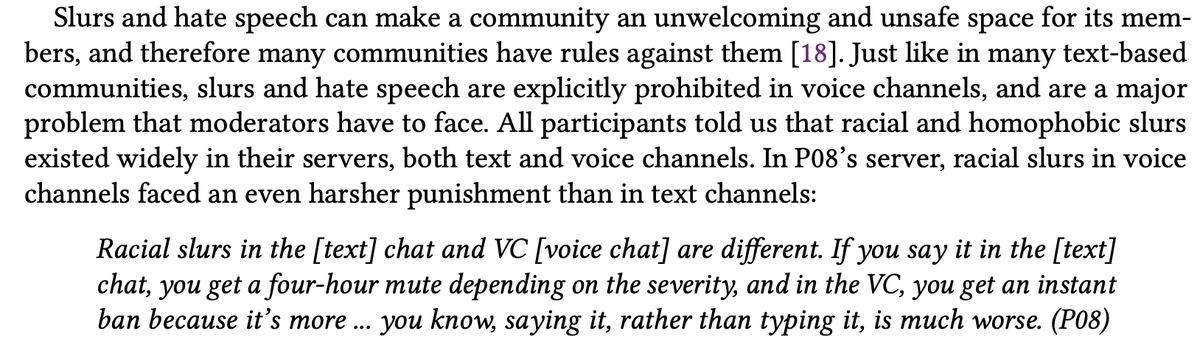

One more negative impact of voice tweets: racist speech and similar can just be psychologically worse to hear said than to see in text. This was a finding in our study about Discord moderation. https://aaronjiang.me/assets/bibliography/pdf/cscw2019-discord.pdf

So I'm not just picking on Twitter, Snapchat's "smile to break the chains!" filter is a reminder that, at the very least, a "what terrible headline could be written about this" speculative analysis is helpful for PR even if you don't care about ethics. https://nypost.com/2020/06/19/snapchat-removes-juneteenth-filter-after-criticism/

