Ok, I'm inspired by @MicrobiomDigest to do a bit of actual critical appraisal on this new paper by Raoult et al

There are significant issues that in my opinion make it basically worthless as an estimate of anything 1/n https://twitter.com/GidMK/status/1270163967634096128

2/n You can find the paper here: https://www.sciencedirect.com/science/article/pii/S2052297520300615?via%3Dihub

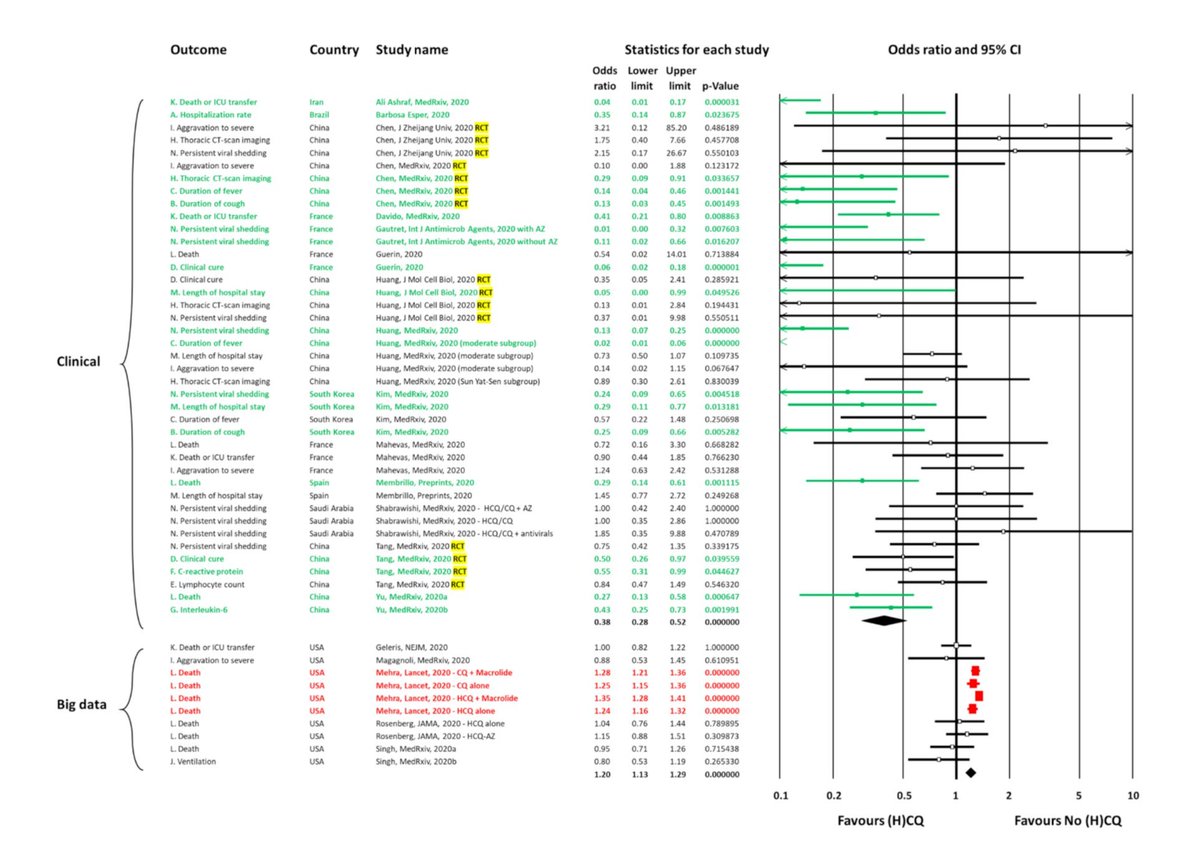

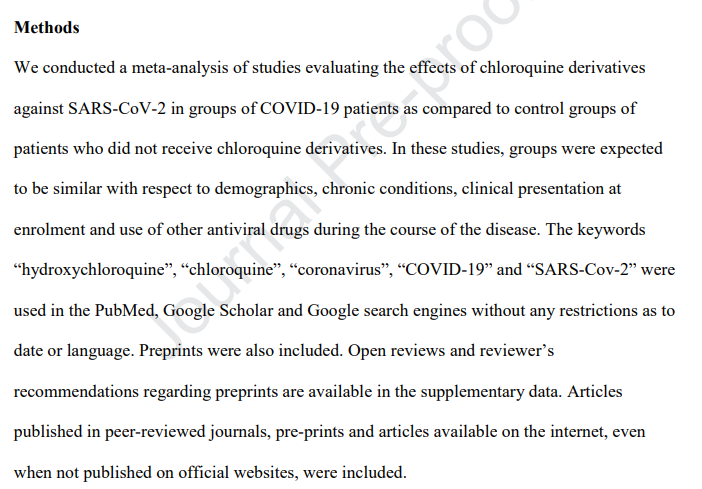

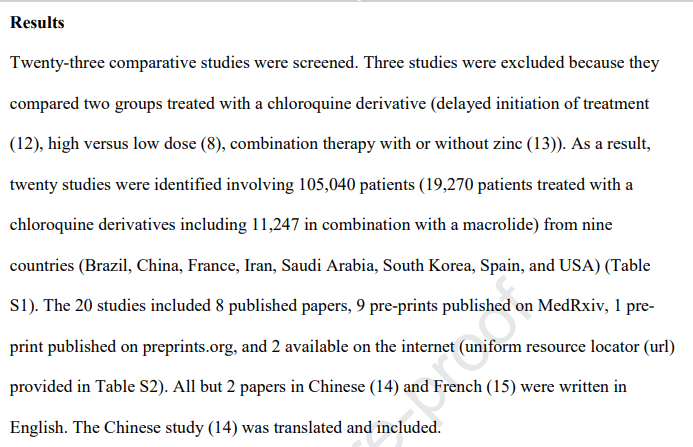

3/n The study is a fairly simple review and meta-analysis looking at hydroxychloroquine/chloroquine for COVID-19

This means the authors looked through the literature, and aggregated a bunch of different estimates of the impact of HCQ/CQ on health from different studies

4/n The authors (who have previously maintained that HCQ works for the disease) perhaps unsurprisingly found that, when you lumped all the evidence together, HCQ/CQ was beneficial for COVID-19

5/n Now, the authors have said publicly that meta-analyses are the most robust form of evidence, and thus that this is the final answer on the question

That's not precisely true - meta-analyses are only as good as the included research!

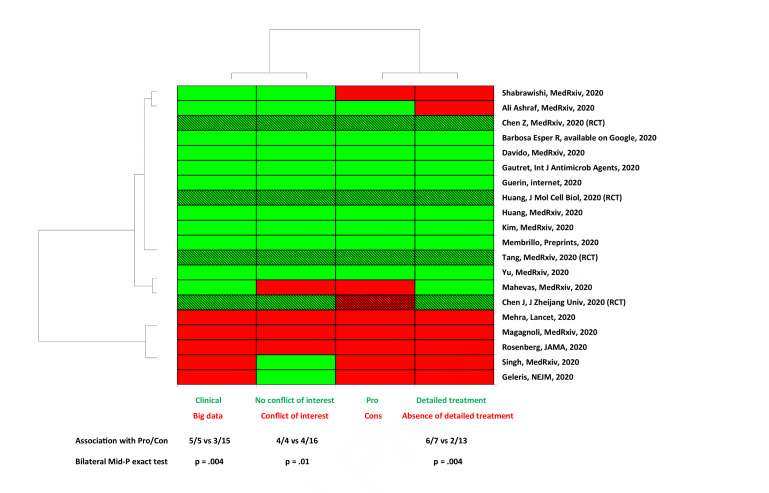

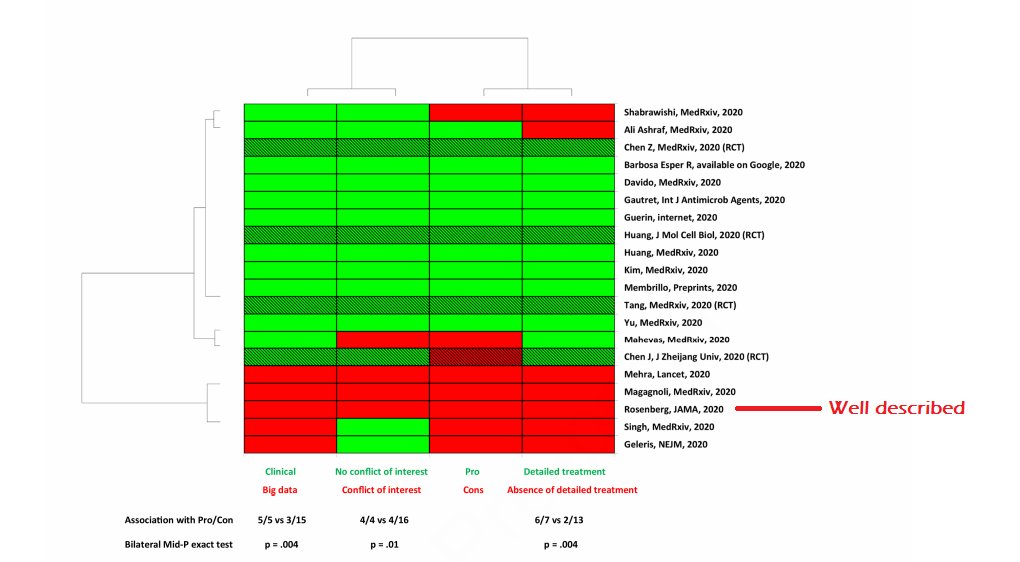

6/n So, we want to know how good the included studies are

Fortunately, the authors have created a bias rating system for us. Excellent

7/n On closer inspection, however, this bias rating system makes absolutely no sense and is clearly not applied systematically even within the document

8/n For example, studies were rated down if they were "Big Data", which in this case appears to have almost entirely been a judgement call based on how much clinical work each study's authors do

9/n So, for example, a retrospective analysis of routinely collected data was rated down because it was led by a public health physician, but another almost identical piece of work was given a green square because a pulmonologist was in charge 🤔

10/n The "detailed treatment" rating was similarly biased - many of the green studies in this chart had far worse descriptions of the treatment regimen than the JAMA study, which is red

11/n There is also a column detailing which studies have rated HCQ/CQ as beneficial or not, which definitely shouldn't be included in a risk of bias segment

This is INCREDIBLY misleading

12/n Moreover, there is basically no discussion of how the final measure - conflict of interest - was actually collated. It wasn't from the disclosures on the studies (I checked)

13/n So, all in all, the rating of the studies makes very little sense and is at best entirely inconsistent. It's hard to know what to make of this except that it doesn't help us understand the included research at all

Moving on

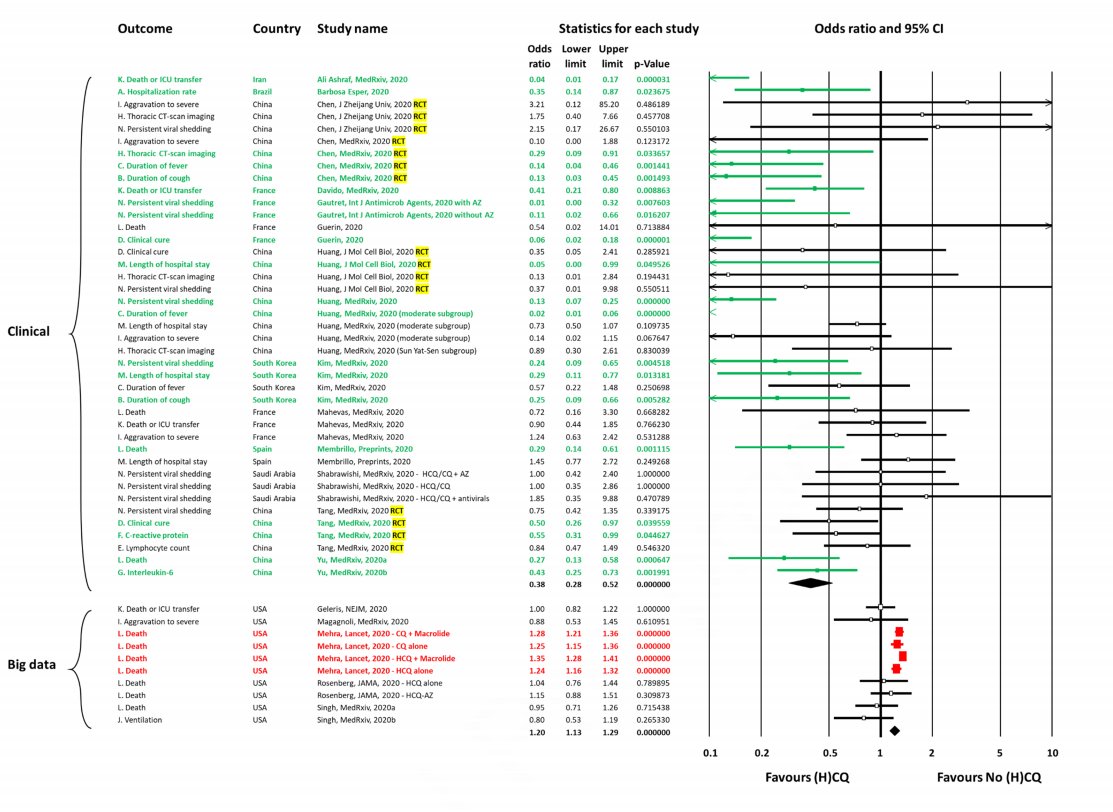

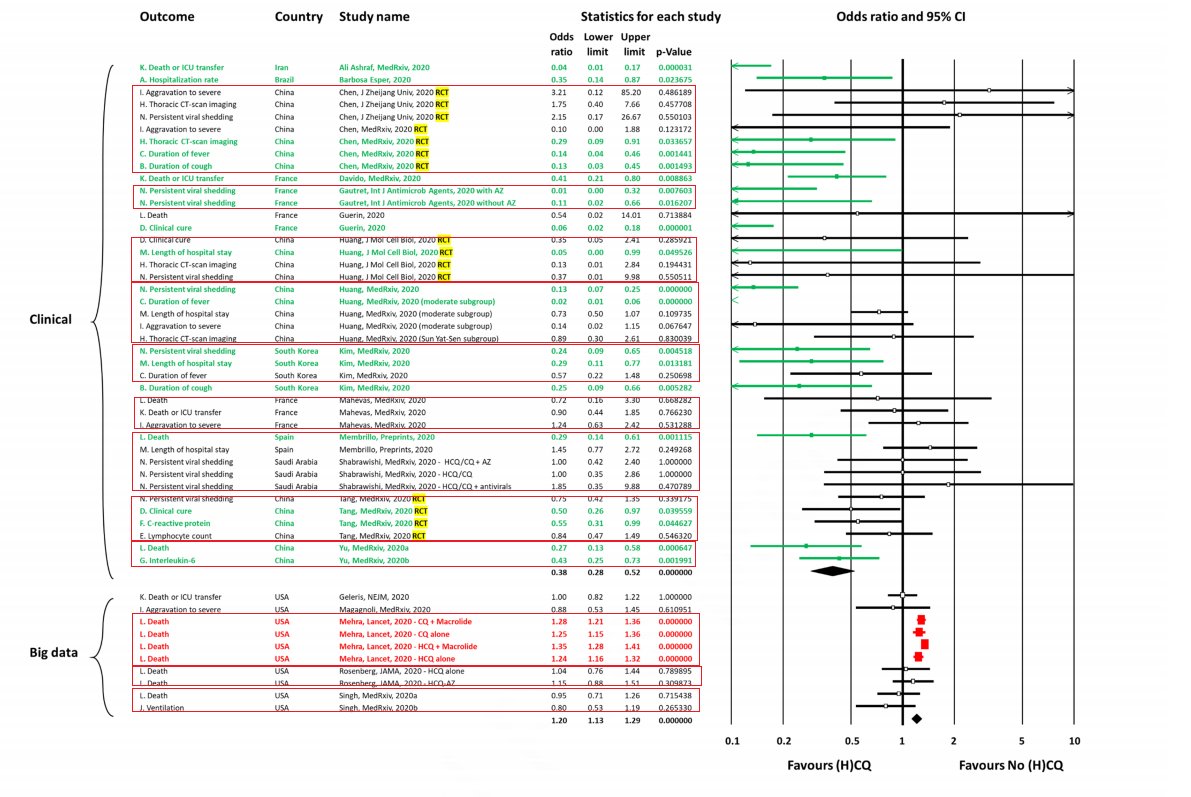

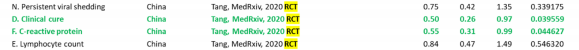

15/n Firstly, different results from THE SAME studies on THE SAME patients have been aggregated together

This is guaranteed to bias the results, and makes the aggregated estimate largely worthless

(red box = repeated)

16/n (N.B. some of these estimates are not the SAME patients, they are different groups from the same study. This is also bad practice, and likely to bias the estimate, but not quite as problematic)
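
To see why counting the same patients twice matters, here is a minimal sketch of fixed-effect (inverse-variance) pooling on the log odds ratio scale. The two "studies" and their standard errors are made-up numbers for illustration only, not figures from the paper, and the paper's own pooling model may well differ.

```python
import math

def pool_fixed_effect(estimates):
    """Inverse-variance fixed-effect pooling of (log_or, standard_error) pairs."""
    weights = [1.0 / se ** 2 for _, se in estimates]
    pooled = sum(w * y for w, (y, _) in zip(weights, estimates)) / sum(weights)
    pooled_se = math.sqrt(1.0 / sum(weights))
    return pooled, pooled_se

# Hypothetical inputs (NOT from the paper): (log odds ratio, standard error)
study_a = (math.log(0.5), 0.4)   # favours treatment
study_b = (math.log(1.1), 0.3)   # roughly null

pooled_once, se_once = pool_fixed_effect([study_a, study_b])
# Same patients entered twice, as if they were an independent study
pooled_dup, se_dup = pool_fixed_effect([study_a, study_a, study_b])

print(f"each study once: OR = {math.exp(pooled_once):.2f}, SE(log OR) = {se_once:.3f}")
print(f"study A doubled: OR = {math.exp(pooled_dup):.2f}, SE(log OR) = {se_dup:.3f}")
```

Entering study A twice drags the pooled estimate towards study A and shrinks the standard error, even though no new patients were added. That is the sense in which repeated estimates bias the result and overstate its precision.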

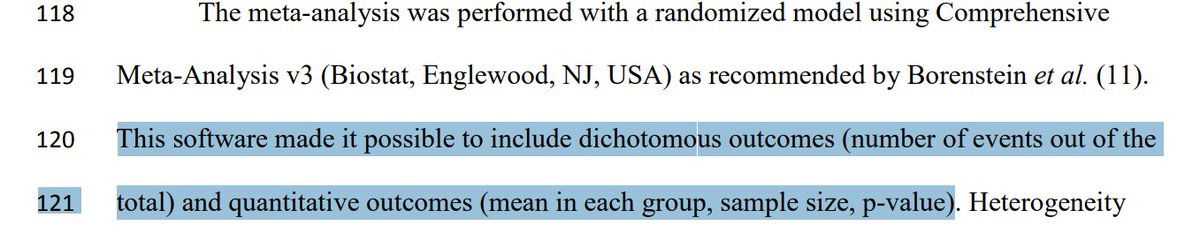

18/n Now, the authors report very opaquely on how they derived the odds ratios that are used in this table (essentially "we plugged it into the software"), but some of these figures are enormously divergent from the actual reported outcomes

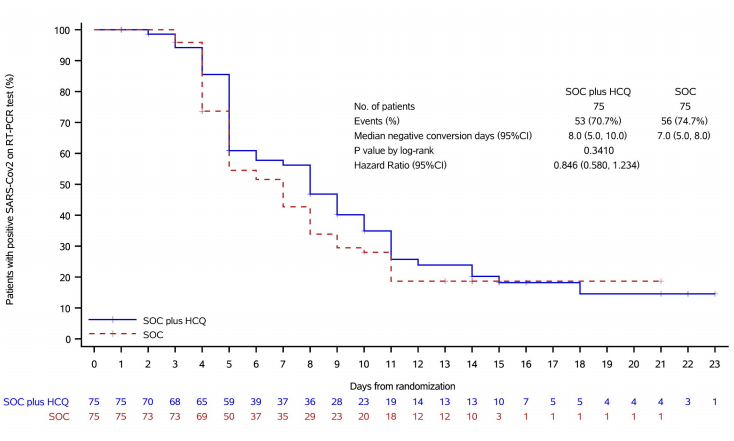

19/n For example, let's look at this preprint that was included in the research

The odds ratio for "Persistent viral shedding" is 0.75 (0.42-1.35), with lower values favoring HCQ/CQ

I.e. 25% LOWER odds of persistent viral shedding for HCQ

20/n But if we look at the paper, this doesn't make sense

Of the HCQ group, 22/75 had a persistent viral load

Of the control, it was 19/75

If you work the odds ratio out, you get 1.22, i.e. 22% HIGHER FOR HCQ
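
If you want to check that arithmetic yourself, this is a quick sketch of the crude odds ratio from the two counts above (22/75 vs 19/75). The confidence interval is my own addition using the standard Woolf (log-scale) method, and the function name is just illustrative; it is not how the meta-analysis authors say they computed anything.

```python
import math

def crude_odds_ratio(events_a, total_a, events_b, total_b, z=1.96):
    """Crude odds ratio of group A vs group B with a Woolf (log-scale) interval."""
    a, b = events_a, total_a - events_a   # group A: events, non-events
    c, d = events_b, total_b - events_b   # group B: events, non-events
    odds_ratio = (a * d) / (b * c)
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper

# Persistent viral load: 22/75 in the HCQ group, 19/75 in the control group
or_hcq, ci_low, ci_high = crude_odds_ratio(22, 75, 19, 75)
print(f"OR = {or_hcq:.2f} (95% CI {ci_low:.2f}-{ci_high:.2f})")
```

The point estimate rounds to 1.22, i.e. above 1, which is the opposite side of 1 from the 0.75 in the meta-analysis table.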

21/n In other words, not only is the figure in the meta-analysis wrong, it seems to be in entirely the opposite direction to the true result

That's very worrying, and hard to explain

22/n (One thing that gives you an answer more similar to the number they've got is calculating odds based on the %s given in the KM curve rather than the crude figures, but that has its own issues)

23/n There's also no reasoning given for including multiple different outcomes from the SAME PATIENTS in a meta-analysis

This is very concerning

24/n For example, they've got some studies where they included numbers on the likelihood of a "clinical cure" AND "death" from the same patients

These are obviously related, so aggregating both in the same model is...problematic

25/n It's also worth noting that several of these retrospective clinical audits were from the same places at overlapping times, and so probably included some of the same patients anyway

26/n There are, somehow, even more issues to examine here

For one thing, the review protocol was poorly described and hard to follow

27/n Remember: this is the pre-proof version of the study

In other words, the final, slightly unedited, publishable version

In that context, this methodology is FAR too opaque

28/n The results show you what I'm talking about

There is NO WAY that searching with those search terms gets you only 23 studies

Even just plugging in the search terms to PubMed gives you >100 studies

29/n It took me less than 5 minutes to find a study that matches the inclusion criteria but was not included

That's...worrying

30/n On top of that, in some cases the authors have included older versions of the included studies

That's less than ideal (newer versions CORRECT mistakes!)

31/n There is so much more I could look at here, but honestly you have to stop somewhere

This study is riddled with flaws and almost certainly does not present an accurate estimate of the effect of HCQ or CQ

32/n If you want a decent summation of the evidence for/against HCQ, a good source is CEBM:

"Current data do not support the use of hydroxychloroquine for prophylaxis or treatment of COVID-19" https://www.cebm.net/covid-19/hydroxychloroquine-for-covid-19-what-do-the-clinical-trials-tell-us/

33/n I forgot to mention, the paper was received, revised, and accepted within a month

While not unheard of, that's very quick for academic publishing!

34/n In summation, the paper:

- inadequately rates risk of bias

- inappropriately combines estimates...

- ...that may have been miscalculated

It is hard to know what to make of this, except to say that the paper itself is not very useful in any way
