Given last week's escalated tension between platforms and Trump, @laur_hf and I analyzed Twitter’s public interest exception and Facebook’s newsworthiness policy. Below is a thread about these policies, and the differences between policy and enforcement.

Both policies try to resolve cases where content breaks platform rules but may be valuable for the public to see. The rationale is rooted in free expression concerns about open access to information, especially about political leaders and matters of public interest.

But these concerns aren't absolute. Each platform weighs free expression against the risk of harm. This is largely discussed in terms of offline violence, but can also capture concerns like doxxing or election interference.

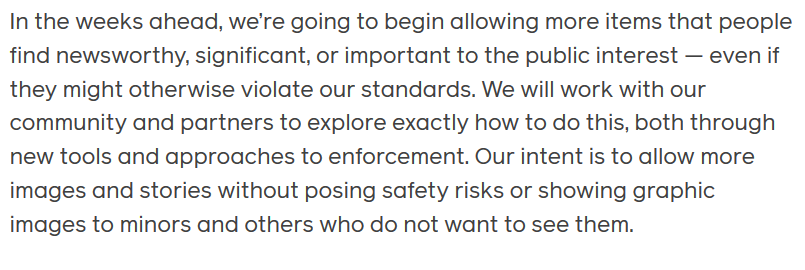

Most of the time, the choice is to remove content or leave it up. But Twitter’s policy proposes a compelling middle path: sometimes, it will add a warning label saying that a tweet breaks its rules but is being left up because it’s in the public interest. https://blog.twitter.com/en_us/topics/company/2019/publicinterest.html

Under either approach, the result is that public figures get more leeway in terms of what they can say, and what can be said about them. This requires case-specific value judgements. But it also reflects an overall calculation that accounts for the potential blow-back.

Platforms largely feel comfortable removing nudity & "terrorist" content, regardless of public interest. But content has remained on the platform even where the risk of incitement is high (Myanmar), or where people have been relentlessly harassed (Leslie Jones).

While these calculations have been made for years, it wasn’t until somewhat recently that these value judgments were codified in policy terms. Facebook didn’t say newsworthiness impacts takedown decisions until 2016; Twitter defined its public interest approach in 2019.

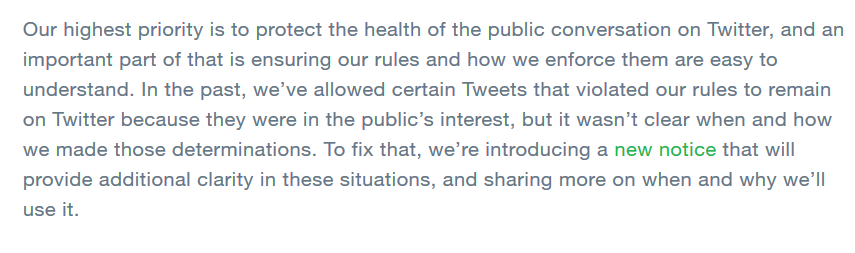

Twitter’s policy explains who is covered, what actions it will take, and who makes the decisions. Facebook says that newsworthiness impacts its removal decisions, but you have to parse blog posts and an unaffiliated legal essay to assemble an understanding: https://knightcolumbia.org/content/facebook-v-sullivan

For example: who's covered? On Twitter, it's public officials or candidates who have >100K followers and verified accounts. Facebook doesn’t say; you’ll have to read @klonick’s essay to get this articulation:

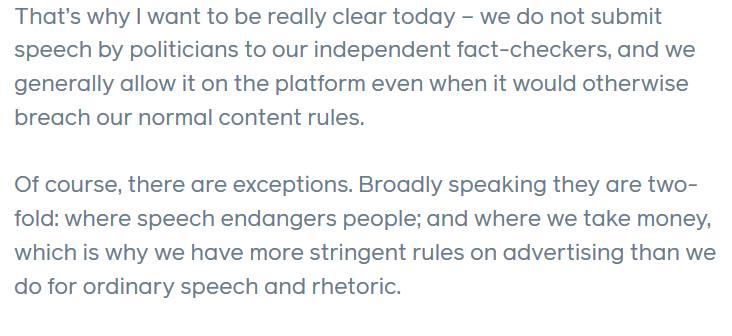

Ads are a little different. As of late 2019, Twitter prohibits political ads. Facebook allows them and says it won’t fact-check ads from politicians, but they must comply with Community Standards (somewhat). It’s unclear how much this position from Sep 2019 still holds.

Before last week’s warning labels, the biggest push-back to Trump was Facebook’s decision to remove his racist caravan ad. It’s unclear whether a similar ad would come down today, but Facebook insists that it would. https://www.cnbc.com/2018/11/05/facebook-rejects-sensational-trump-caravan-election-ad.html

Despite Trump’s cries of censorship, his accounts have largely benefited from platform benevolence. The instances where Facebook and Twitter took action are hardly the only times he’s broken their rules. So how often are these policies applied?

It’s hard to tell. Facebook's approach is to err on the side of leaving content up, but it won’t usually say when its newsworthiness policy was applied. Twitter said it only applied its policy 5 times in 2018, and as of Jan 2020, it hadn’t applied its warning label to any tweets.

Things have started shifting during the pandemic. Twitter removed covid-misinformation tweets from Presidents Bolsonaro and Maduro instead of applying warning labels. This perhaps reflected its stated "zero tolerance" in terms of health misinfo. https://www.theverge.com/2020/3/30/21199845/twitter-tweets-brazil-venezuela-presidents-covid-19-coronavirus-jair-bolsonaro-maduro

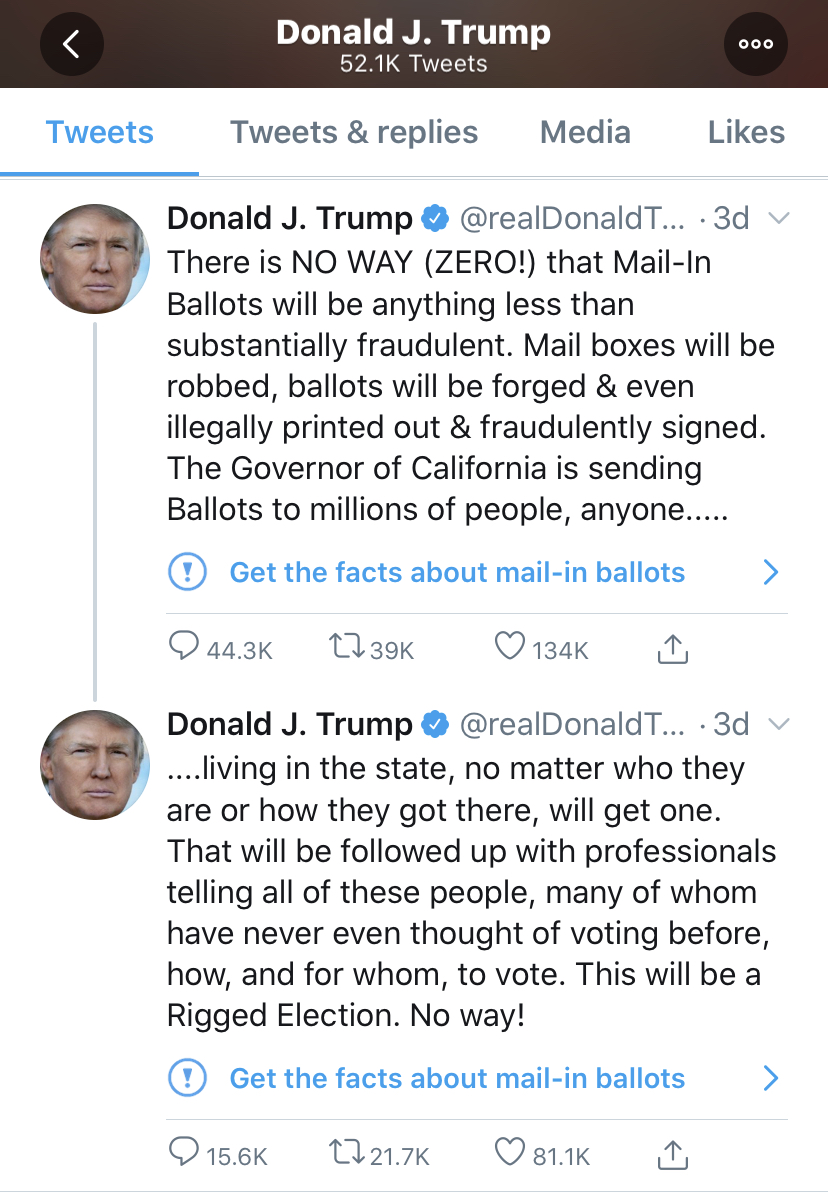

Pushing back against Trump is a different story. Even when Twitter finally applied warnings, the first two were ad hoc labels that didn’t follow its public interest policy. Instead, they just presented a link for users to “get the facts” with context from media outlets.

The warnings didn’t place the tweets behind an interstitial or mention that they broke Twitter’s rules. Notably, Twitter’s policy actually lists voting as a fundamental right and indicates that election interference is more likely to result in removal than a warning.

It wasn’t until Trump called for a violent response to the unrest in Minneapolis that Twitter responded with a more thorough application of its policy. This tweet was put behind a warning screen, and users’ ability to like, reply, and share it was restricted.

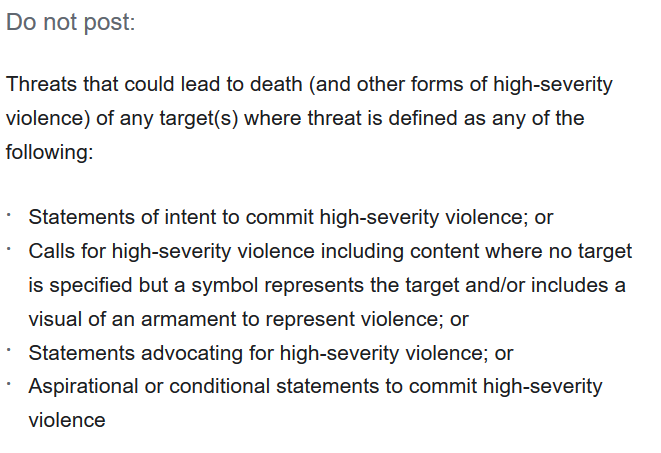

All of Trump’s comments were cross-posted to Facebook. FB's voter misinformation policy is pretty narrowly focused on the time, place, and qualifications for voting. However, Trump’s MN comments appear to be a more clear-cut violation of Facebook's violence & incitement policy.

FB says its policy has an exception for discussion around state action. In other words: a newsworthiness decision. But FB also said that where a post incites violence, it will be removed even if posted by a politician. Where the line is remains hazy. https://www.facebook.com/zuck/posts/10111961824369871

Who gets the benefit of the doubt? And for how long? The reality is that those decisions ultimately lie with the heads of each platform. While @jack appears increasingly willing to moderate Trump’s account, Zuckerberg remains hesitant.

Trump’s EO is an open retaliation for even the smallest fact-check. While much of the order is more grievance than policy, it continues a long line of threatening regulation as a means of ensuring special protection for himself and his supporters. https://www.justsecurity.org/70477/trumps-executive-order-targets-twitter-capitalizing-on-right-wing-grievance/

It looks like Trump's bullying remains effective. Zuckerberg rushed to appear on Fox News and assure its audience that Facebook stands for free speech. He also indicated that he reached out to the White House in an attempt to de-escalate. https://www.foxnews.com/media/facebook-mark-zuckerberg-twitter-fact-checking-trump
