Language models are few-shot learners! We find that larger models can often (but not always) perform NLP tasks given only a natural language prompt and a few examples in the context. No fine-tuning.

Paper: http://arxiv.org/abs/2005.14165

Illustrated summary ⬇️ (1/12)

This "in-context learning" happens entirely within the forward pass on a single sequence. We study this in the zero-, one-, and few-shot settings.

(2/N)
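
To make the three settings concrete, here is a minimal sketch of how a k-shot prompt could be assembled as one text sequence. The function name `build_prompt`, the `=>` separator, and the translation examples are assumptions for illustration, not the exact format used in the paper. The only difference between zero-, one-, and few-shot is how many solved examples sit in the context before the query.

```python
from typing import List, Tuple


def build_prompt(
    task_description: str,
    examples: List[Tuple[str, str]],
    query: str,
    k: int,
) -> str:
    """Build a k-shot prompt: task description, k solved examples, then the query.

    Hypothetical helper for illustration. k=0 gives the zero-shot setting,
    k=1 one-shot, and k>1 few-shot. No model weights are updated; the
    examples only appear as text in the context.
    """
    if k < 0 or k > len(examples):
        raise ValueError("k must be between 0 and the number of available examples")
    lines = [task_description, ""]
    # Each demonstration is an input/output pair on its own line.
    for source, target in examples[:k]:
        lines.append(f"{source} => {target}")
    # The query is left unfinished so the model completes it.
    lines.append(f"{query} =>")
    return "\n".join(lines)


# Illustrative demonstrations (assumed data).
demos = [("sea otter", "loutre de mer"), ("cheese", "fromage")]

for k in (0, 1, 2):
    print(f"--- {k}-shot ---")
    print(build_prompt("Translate English to French:", demos, "cat", k))
```

The resulting string would be passed to the model as a single sequence, and the completion after the final `=>` is read off as the answer.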

One way to think about this: In-context learning is the inner loop of meta-learning, and unsupervised pre-training is the outer loop. To do well at pre-training, a language model needs to learn to quickly recognize patterns within the context of a given sequence.

(3/12)

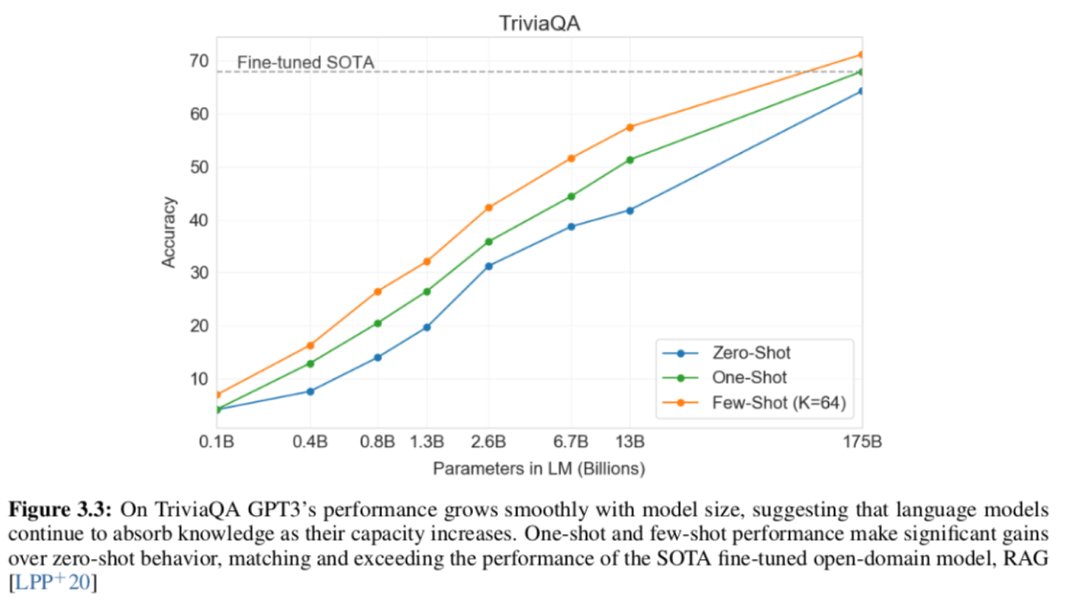

GPT-3 seems to be quite strong at question answering and trivia. We find that it can be competitive with the fine-tuned SOTA despite being shown only a few examples of the task and performing no gradient updates after pre-training. Here's TriviaQA as one instance.

For SuperGLUE, we find that GPT-3 with 32 examples per task performs slightly better than a fine-tuned BERT-large (which is far smaller than GPT-3). So in this case, massively scaling up the model massively reduced the data requirements.

(6/12)

Special shout out to joint first authors @8enmann, Nick Ryder and Melanie Subbiah, to @AlecRad for research guidance and to @Dario_Amodei for leading the project.

(11/12)

I encourage y’all to read (or at least skim) the paper. I’m really proud to have had a part in creating this work over the last 18 months and am glad to get to share it with you.

Paper: http://arxiv.org/abs/2005.14165

Samples & Data: http://github.com/openai/gpt-3

(12/12)
