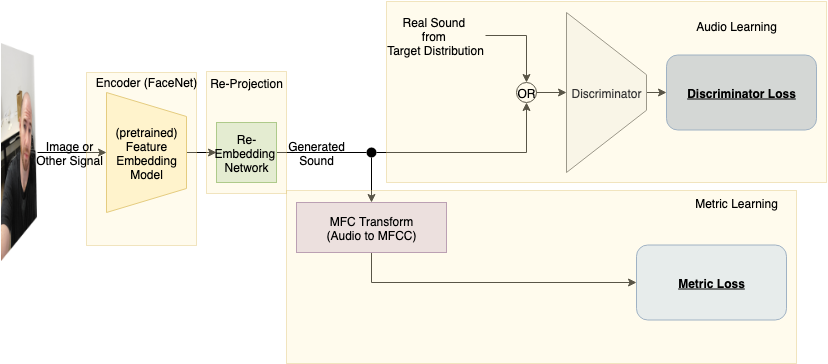

#Earballs: Neural transmodal translation https://arxiv.org/abs/2005.13291 "The goal of this work is to propose a mechanism for providing an information preserving mapping that users can learn to use to see (or perceive other information) using their auditory system"

Unlike The vOICe visual-to-auditory sensory substitution, "our system does not seek to encode or preserve the pixel information, but instead the higher-level information extracted by a learned feature embedding"

About The vOICe: "Their audio generation mechanism is based on direct mapping of pixel data from the image to audio frequency, panning, and loudness data (vertical position to frequency, left-right position to scan time (encoded in stereo), and brightness to sound loudness)."
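
To make the quoted mapping concrete, here is a minimal sketch of a pixel-to-sound scan of that kind. It is not The vOICe's actual implementation: the function name `image_to_sound`, the 500 to 5000 Hz range, the one-second scan, the 16 kHz sample rate, and the linear stereo panning are all assumptions chosen for illustration.

```python
import numpy as np

def image_to_sound(image, duration=1.0, sample_rate=16000,
                   f_min=500.0, f_max=5000.0):
    """Turn a grayscale image (2D array, values in [0, 1], row 0 = top)
    into a stereo signal of shape (n_samples, 2).

    Hypothetical parameters, not taken from The vOICe itself.
    """
    image = np.asarray(image, dtype=float)
    rows, cols = image.shape

    # Vertical position -> frequency: top rows get the highest pitch.
    freqs = np.geomspace(f_max, f_min, rows)

    # Left-right position -> scan time: each column gets an equal time slice.
    samples_per_col = int(duration * sample_rate / cols)
    t = np.arange(samples_per_col * cols) / sample_rate

    chunks = []
    for c in range(cols):
        tc = t[c * samples_per_col:(c + 1) * samples_per_col]
        # Brightness -> loudness: each row's sinusoid is scaled by its pixel.
        tones = np.sin(2 * np.pi * freqs[:, None] * tc[None, :])
        mono = (image[:, c, None] * tones).sum(axis=0)
        # Stereo encoding of the scan: pan from left (0.0) to right (1.0).
        pan = c / (cols - 1) if cols > 1 else 0.5
        chunks.append(np.stack([(1.0 - pan) * mono, pan * mono], axis=1))

    audio = np.concatenate(chunks, axis=0)
    peak = np.abs(audio).max()
    return audio / peak if peak > 0 else audio

# A single bright pixel in the top-left corner: a high tone, left channel
# only, heard during the first quarter of the scan.
demo = np.zeros((4, 4))
demo[0, 0] = 1.0
audio = image_to_sound(demo)
```

Every pixel contributes directly to the output here, which is the property the Earballs authors contrast with their learned feature embedding.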

About Earballs: "The GAN successfully found a metric preserving mapping, and in human subject tests, users were able to successfully classify images of faces using only the audio output by our model."
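
One way to read "metric preserving" is that items that are far apart in the source feature space stay far apart in the audio space, and close items stay close. The sketch below checks that property by correlating pairwise distances before and after a mapping. It is only an illustration under that assumption: the quoted text does not say how the paper measures this, and the names `pairwise_distances` and `metric_preservation_score` are hypothetical.

```python
import numpy as np

def pairwise_distances(x):
    """Euclidean distances between all distinct pairs of rows of x."""
    x = np.asarray(x, dtype=float)
    diff = x[:, None, :] - x[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    upper = np.triu_indices(len(x), k=1)  # each pair once, no self-pairs
    return dist[upper]

def metric_preservation_score(source, target):
    """Pearson correlation between source and target pairwise distances.

    A value near 1.0 means the mapping keeps the distance structure up to
    a linear rescaling. Assumed measure, not the paper's own.
    """
    ds = pairwise_distances(source)
    dt = pairwise_distances(target)
    return float(np.corrcoef(ds, dt)[0, 1])

# Toy check: rotating and rescaling the points keeps every distance ratio,
# so the correlation is (numerically) 1.
source = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 2.0], [3.0, 3.0]])
rotate_90 = np.array([[0.0, -1.0], [1.0, 0.0]])
target = 2.0 * source @ rotate_90.T
score = metric_preservation_score(source, target)
```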
