So here’s a bit of a thread for #WebArchiveWednesday on what I’ve been working on for the last month or so…

tl;dr – #webarchives are fun (and very useful for research)

Thanks to @NetPreserve & @anjacks0n, I’ve been working on a set of Jupyter notebooks using data from #webarchives.

But what sort of data can you get from web archives?

Web archives are HUGE, and the original archived web resources often can’t be accessed in bulk because of things like copyright.

But there’s lots of easily accessible data about the contents of web archives that you can explore without downloading terabytes of data.

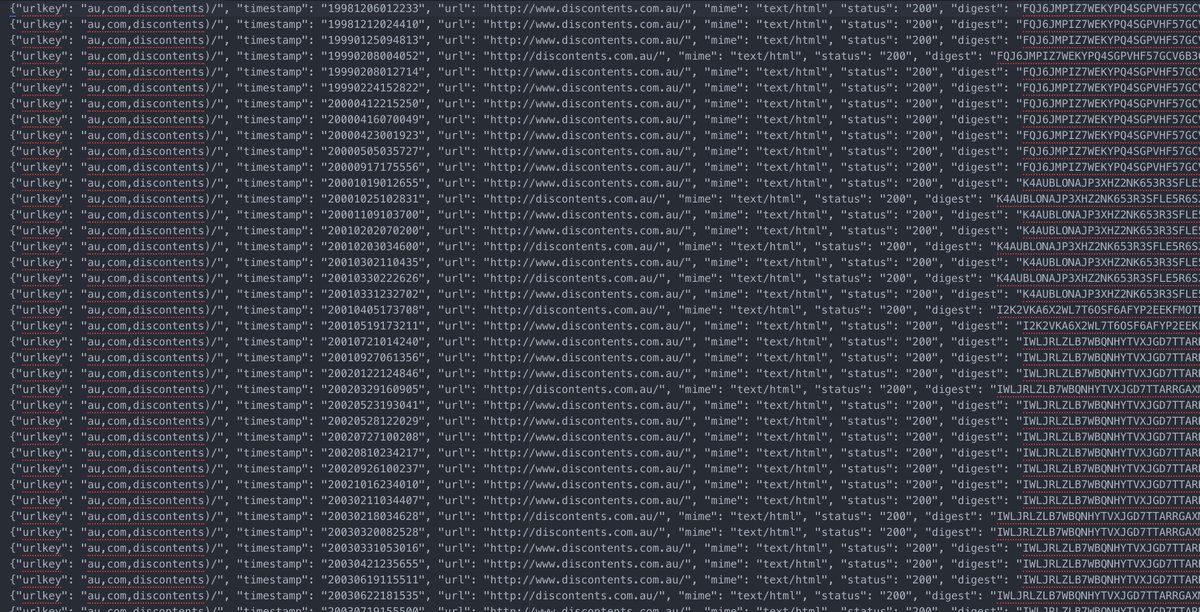

For example, if you’d like to get a list of all the times @NLAPandora has archived my blog, it’s as easy as this: https://web.archive.org.au/awa/timemap/json/discontents.com.au

This url will retrieve a machine-readable list of captures, which includes the capture dates, the file mime type, http status, & more.

This sort of list is called a Timemap, and it’s part of the Memento protocol for getting capture data from web archives. You can do the same thing with @UKWA, @NLNZ, @internetarchive, & any other Memento-compliant system.
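Here is a minimal sketch, using only the Python standard library, for fetching and parsing a JSON Timemap like the one above. It assumes the response body uses one of two layouts. The first is newline-delimited JSON, with one object per capture. The second is a single JSON array whose first row holds the field names. Implementations differ, so check what your target archive actually returns. Field names such as those for mime type and status may also vary between systems. The function names are illustrative, not from the GLAM Workbench code.

```python
import json
from urllib.request import urlopen


def parse_json_timemap(text):
    """Turn the body of a JSON Timemap into a list of dicts, one per capture.

    Assumes one of two layouts (check your archive):
    - newline-delimited JSON, one object per line
    - a JSON array of arrays, where the first row holds the field names
    """
    text = text.strip()
    if not text:
        return []
    if text.startswith("["):
        rows = json.loads(text)
        if not rows:
            return []
        header, *data = rows
        return [dict(zip(header, row)) for row in data]
    return [json.loads(line) for line in text.splitlines() if line.strip()]


def get_timemap(timemap_url):
    """Download a JSON Timemap and parse it (needs network access)."""
    with urlopen(timemap_url) as response:
        return parse_json_timemap(response.read().decode("utf-8"))
```

For example, `get_timemap("https://web.archive.org.au/awa/timemap/json/discontents.com.au")` should return one dict per capture of the blog, if the archive responds in one of the assumed layouts.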

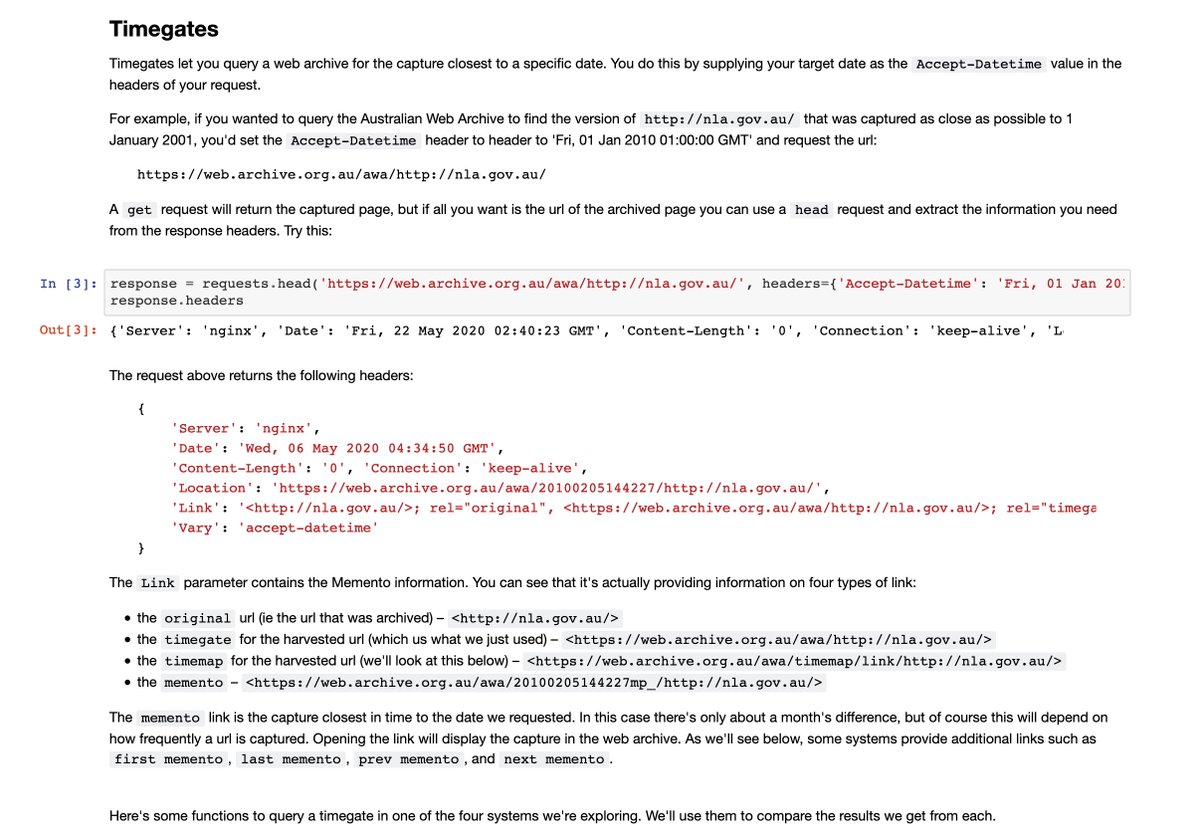

There are also Timegates in Memento systems! Query a Timegate with a date and a url and it will send you back details of the archive’s capture closest to your target date.
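In the Memento protocol, the target date goes in an `Accept-Datetime` HTTP header, formatted as an HTTP date. The Timegate normally redirects to the closest capture (the Memento), which reports its own capture date in a `Memento-Datetime` header. The sketch below follows that pattern with the standard library. The Timegate prefix is an assumption you need to supply for each archive. For the Internet Archive it is believed to be `https://web.archive.org/web/`, but the prefixes for other systems aren't given here.

```python
from datetime import datetime, timezone
from email.utils import format_datetime
from urllib.request import Request, urlopen


def accept_datetime(dt):
    """Format dt as an HTTP date, e.g. 'Thu, 01 Jan 2015 00:00:00 GMT'.

    Naive datetimes are treated as UTC.
    """
    if dt.tzinfo is None:
        dt = dt.replace(tzinfo=timezone.utc)
    return format_datetime(dt.astimezone(timezone.utc), usegmt=True)


def query_timegate(timegate_prefix, target_url, dt):
    """Ask a Memento Timegate for the capture of target_url closest to dt.

    urlopen follows the Timegate's redirect, so response.url is the URL of the
    Memento it chose; Memento-Datetime (if the archive sends it) is its date.
    """
    request = Request(
        timegate_prefix + target_url,
        headers={"Accept-Datetime": accept_datetime(dt)},
    )
    with urlopen(request) as response:
        return response.url, response.headers.get("Memento-Datetime")
```

A possible call is `query_timegate("https://web.archive.org/web/", "http://discontents.com.au/", datetime(2010, 1, 1))`. Exact redirect behaviour varies between archives, which is why the differences are worth documenting.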

Timemaps, Timegates, & Mementos are cool, but there are a few little differences in the way they’re implemented across #webarchives systems. I documented the differences I found in a notebook to save you having to puzzle through it yourself: https://nbviewer.jupyter.org/github/GLAM-Workbench/web-archives/blob/master/memento.ipynb

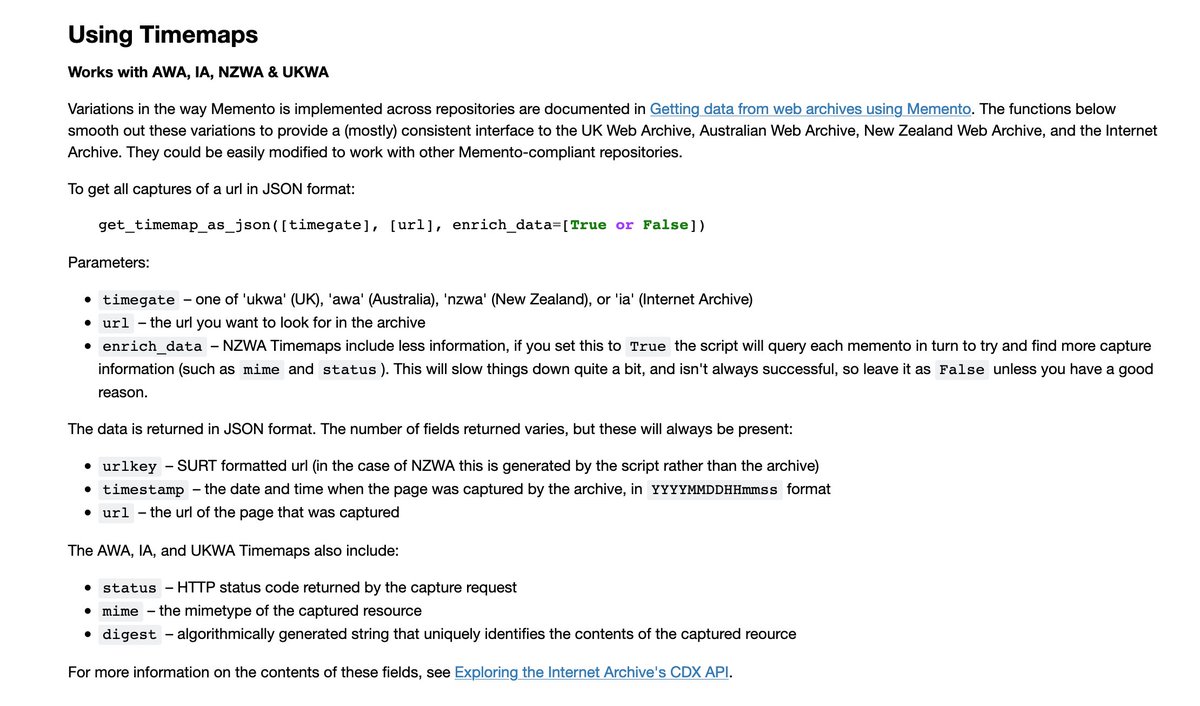

Based on what I found, I wrote some code to smooth out the differences between @NLAPandora, @ukwa, @nlnz, & @internetarchive so you can easily grab data from any of these systems for your own research projects. There’s a notebook for querying Timegates: https://nbviewer.jupyter.org/github/GLAM-Workbench/web-archives/blob/master/get_a_memento.ipynb

And another notebook to get all captures of a particular url using Timemaps (& also CDX APIs, which I’ll mention later): https://nbviewer.jupyter.org/github/GLAM-Workbench/web-archives/blob/master/find_all_captures.ipynb

But what could you do with the data from a Timemap? I’m glad you asked...

What about creating a series of annual screenshots, showing how a web page changed over time? There’s a notebook that shows you how: https://nbviewer.jupyter.org/github/GLAM-Workbench/web-archives/blob/master/screenshots_over_time_using_timemaps.ipynb
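A key step in making annual screenshots is choosing one capture per year from a Timemap. Here is a minimal sketch that keeps the earliest capture in each year. It then builds a Wayback-style capture URL that could be handed to a headless browser for the screenshot, which is not shown. The selection rule, the `timestamp` key and the `<prefix><timestamp>/<url>` pattern are assumptions. The notebook may choose captures differently.

```python
def one_capture_per_year(captures):
    """Keep the earliest capture from each year, in date order.

    Each capture is a dict with a 14-digit 'timestamp' (YYYYMMDDhhmmss).
    Fixed-width digit strings sort chronologically when sorted as strings.
    """
    first_by_year = {}
    for capture in sorted(captures, key=lambda c: c["timestamp"]):
        first_by_year.setdefault(capture["timestamp"][:4], capture)
    return [first_by_year[year] for year in sorted(first_by_year)]


def capture_url(archive_prefix, capture, original_url):
    """Build a Wayback-style capture URL: <prefix><timestamp>/<original url>."""
    return f"{archive_prefix}{capture['timestamp']}/{original_url}"
```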

Oops, short intermission for school pick-up...

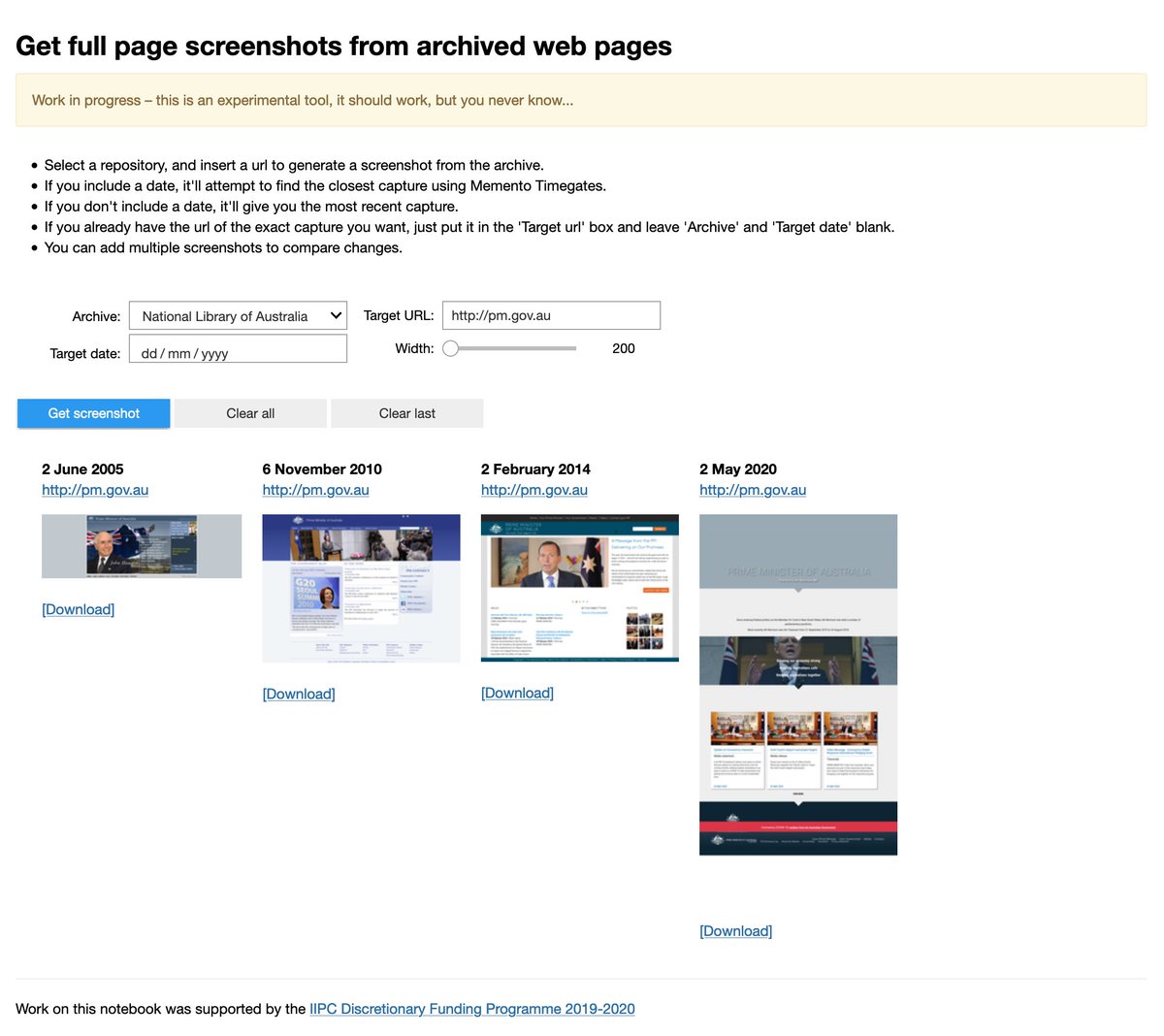

If you want screenshots, I also made an app (a Jupyter notebook running in appmode) that lets you compare screenshots from different dates, or different archives. It uses Timegates to find the captures, so it works across a number of systems: https://glam-workbench.github.io/web-archives/#create-and-compare-full-page-screenshots-from-archived-web-pages

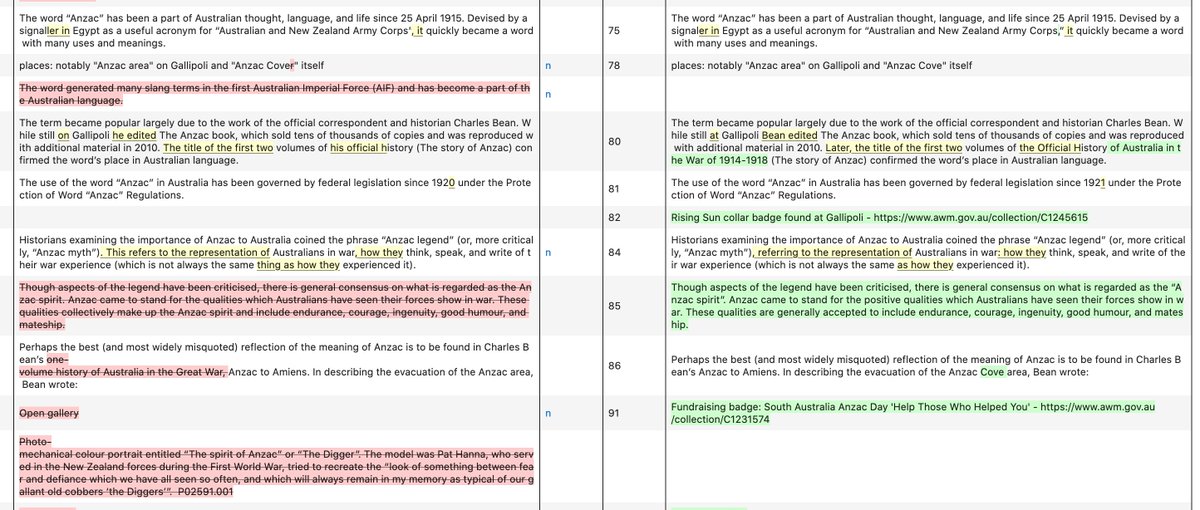

There are lots of ways we can use Timemaps to explore change in a page. This notebook grabs each web capture in turn from a Timemap and looks at how the text has changed between captures – displaying deletions, additions, & changes over time: https://glam-workbench.github.io/web-archives/#display-changes-in-the-text-of-an-archived-web-page-over-time
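To compare consecutive captures, one option is Python's built-in `difflib`. The sketch below works through a list of `(timestamp, text)` pairs in date order and reports the lines added and deleted between each pair of neighbours. It is an illustration using `difflib.ndiff`, not necessarily how the notebook does it. Fetching the text for each capture is left out.

```python
import difflib


def text_changes(old_text, new_text):
    """Return (added, deleted) lines between two versions of a page's text."""
    added, deleted = [], []
    for line in difflib.ndiff(old_text.splitlines(), new_text.splitlines()):
        if line.startswith("+ "):
            added.append(line[2:])
        elif line.startswith("- "):
            deleted.append(line[2:])
        # lines starting with "  " are unchanged; "? " lines are hints, ignored
    return added, deleted


def changes_over_time(versions):
    """versions: (timestamp, text) pairs in date order.

    Yields (earlier, later, added, deleted) for each consecutive pair.
    """
    for (t1, a), (t2, b) in zip(versions, versions[1:]):
        added, deleted = text_changes(a, b)
        yield t1, t2, added, deleted
```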

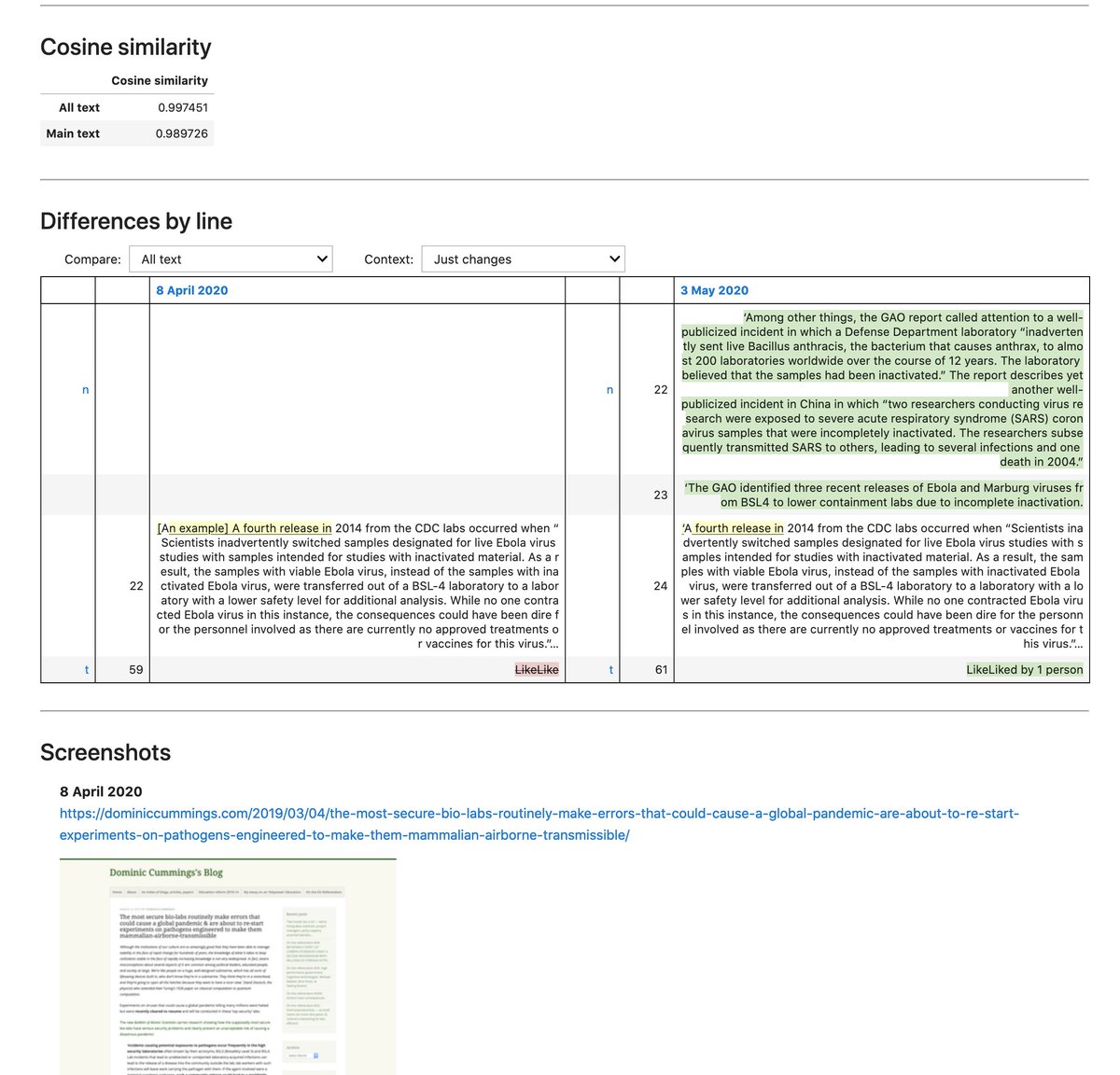

That last notebook also runs as an app, and automatically generates a url you can share to load a particular page for analysis. So if you wanted to explore the history of that now-notorious bio labs / pandemic blog post by Dominic Cummings, just go here: https://mybinder.org/v2/gh/GLAM-Workbench/web-archives/master?urlpath=/apps/display-text-changes-from-timemap.ipynb%3Furl%3D%22https%3A//dominiccummings.com/2019/03/04/the-most-secure-bio-labs-routinely-make-errors-that-could-cause-a-global-pandemic-are-about-to-re-start-experiments-on-pathogens-engineered-to-make-them-mammalian-airborne-transmissible/%22%26archive%3D%22ia%22
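The structure of that shareable link can be read off the example. A Binder prefix is followed by a `urlpath` pointing at the app. The app's own query string is percent-encoded, with `url` and `archive` values in double quotes and `/` left unescaped. The helper below rebuilds links of that shape for other pages. It is inferred from this one example, so treat it as a sketch. Only the `"ia"` archive value is confirmed by the example.

```python
from urllib.parse import quote

BINDER_PREFIX = "https://mybinder.org/v2/gh/GLAM-Workbench/web-archives/master?urlpath="
APP_PATH = "/apps/display-text-changes-from-timemap.ipynb"


def share_url(page_url, archive="ia"):
    """Build a Binder link that opens the text-changes app for page_url.

    The app's query string (quoted url and archive values) is percent-encoded
    inside urlpath, keeping '/' unescaped, to match the example link's pattern.
    """
    app_query = f'{APP_PATH}?url="{page_url}"&archive="{archive}"'
    return BINDER_PREFIX + quote(app_query, safe="/")
```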

And yeah, thanks to @mybinderteam for being awesome.

If you just want to compare two different captures of a page, there’s another app-ified notebook that gets the captures (via Timegates) and then compares them in a number of different ways – metadata, stats, links, cosine similarity, text/code changes: https://glam-workbench.github.io/web-archives/#compare-two-versions-of-an-archived-web-page
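Cosine similarity treats each text as a vector of word counts and measures the angle between the two vectors. A score of 1 means the same words in the same proportions, and 0 means no words in common. Here is a minimal, dependency-free version. The notebook may tokenise or weight words differently, for example with TF-IDF. This sketch uses raw counts of lowercased `\w+` tokens.

```python
import math
import re
from collections import Counter


def cosine_similarity(text_a, text_b):
    """Cosine similarity between the word-count vectors of two texts."""
    a = Counter(re.findall(r"\w+", text_a.lower()))
    b = Counter(re.findall(r"\w+", text_b.lower()))
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0  # empty text: treat as no similarity
```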

Have you ever wanted to find out when a particular word or phrase first appeared in (or disappeared from) a web page? Timemaps to the rescue! This notebook/app searches through all the captures until your phrase appears (or disappears): https://glam-workbench.github.io/web-archives/#find-when-a-piece-of-text-appears-in-an-archived-web-page
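The search itself is a loop over captures in date order that stops at the first match. In the sketch below, `get_text` is a hypothetical helper you supply. It returns the text of a capture, perhaps by fetching its raw `id_` url. "Disappears" here means the first capture missing the phrase after it has been seen at least once. Matching is a simple case-insensitive substring test, which may be cruder than what the notebook does.

```python
def find_change(captures, phrase, get_text, look_for="appears"):
    """Return the first capture (in date order) where phrase appears, or with
    look_for="disappears", the first capture where it is missing after having
    been present. Returns None if there is no such capture.
    """
    needle = phrase.lower()
    seen = False
    for capture in sorted(captures, key=lambda c: c["timestamp"]):
        present = needle in get_text(capture).lower()
        if look_for == "appears" and present:
            return capture
        if look_for == "disappears":
            if present:
                seen = True
            elif seen:
                return capture
    return None
```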

In that last notebook you can also choose to see all occurrences of a word or phrase, in which case it works through the entire Timemap and creates a little chart showing its frequency over time.
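Counting every occurrence works the same way, except that the loop runs through the whole Timemap instead of stopping early. This sketch returns `(timestamp, count)` pairs, ready to plot with your charting library of choice. As above, `get_text` is a hypothetical helper that returns a capture's text. Counts are case-insensitive and non-overlapping.

```python
import re


def occurrences_over_time(captures, phrase, get_text):
    """Count how often phrase occurs in each capture, in date order."""
    pattern = re.compile(re.escape(phrase), re.IGNORECASE)
    return [
        (capture["timestamp"], len(pattern.findall(get_text(capture))))
        for capture in sorted(captures, key=lambda c: c["timestamp"])
    ]
```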

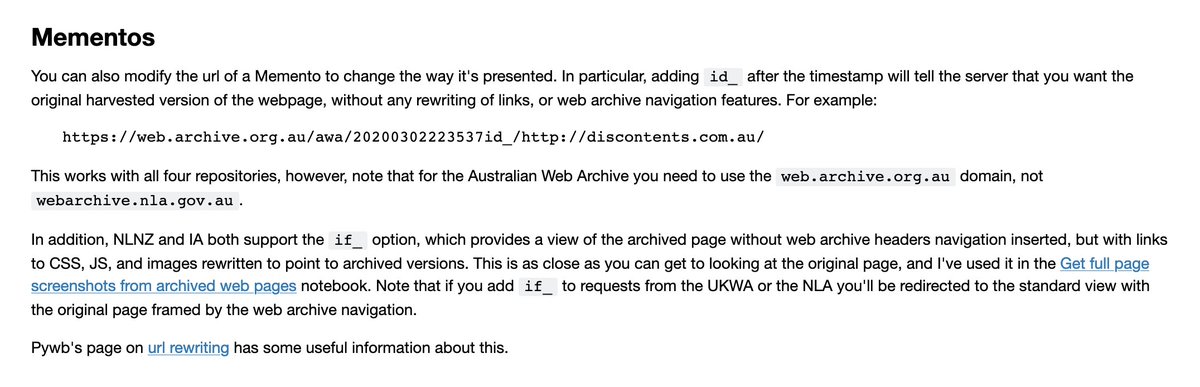

You might be wondering how I get the actual content of the archived pages without the navigation etc. that web archives add in their UI. There’s a little trick. Just get a link to a capture from the UI and add 'id_' immediately after the 14-digit timestamp.

In the case of @NLAPandora you also need to change the domain from 'webarchive.nla.gov.au' to 'web.archive.org.au'.
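Both steps of that trick can be automated with a regular expression. The sketch below swaps the NLA UI domain if it is present. It then inserts `id_` after the first `/`-delimited 14-digit timestamp. It assumes the capture link follows the usual `/<timestamp>/<original url>` pattern and doesn't already carry a modifier after the timestamp.

```python
import re


def raw_capture_url(capture_url):
    """Turn a capture link from an archive's UI into a link to the raw content."""
    # NLA: the raw captures are served from a different domain
    url = capture_url.replace("://webarchive.nla.gov.au/", "://web.archive.org.au/")
    # add 'id_' straight after the first 14-digit timestamp in the path
    return re.sub(r"/(\d{14})/", r"/\1id_/", url, count=1)
```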

As well as Timemaps & Timegates, some web archives make capture data available through CDX APIs. These are big indexes to all captures in the archive and can deliver LOTS of data. But you can easily filter the results.

I documented the @internetarchive’s CDX API here: https://nbviewer.jupyter.org/github/GLAM-Workbench/web-archives/blob/master/exploring_cdx_api.ipynb

I also examined how this differed from other CDX APIs: https://nbviewer.jupyter.org/github/GLAM-Workbench/web-archives/blob/master/comparing_cdx_apis.ipynb
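Here is a minimal sketch of querying the Internet Archive's CDX API with the standard library. The parameter names used here (`url`, `output`, `from`, `to`, `filter`, `limit`) come from the IA CDX server documentation. `filter` takes `field:regex` and can be repeated. Other archives' CDX APIs differ, so this is IA-specific. With `output=json`, the response is an array of rows whose first row holds the field names.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

CDX_ENDPOINT = "https://web.archive.org/cdx/search/cdx"


def cdx_query_url(url, params=None):
    """Build an IA CDX API query URL that asks for JSON output.

    params is a dict of extra parameters, e.g. {"from": "2010", "to": "2015"};
    use a list as the value to repeat a parameter such as "filter".
    """
    query = [("url", url), ("output", "json")]
    for key, value in (params or {}).items():
        values = value if isinstance(value, (list, tuple)) else [value]
        query.extend((key, v) for v in values)
    return f"{CDX_ENDPOINT}?{urlencode(query)}"


def cdx_rows_to_dicts(rows):
    """The first JSON row holds the field names; zip them onto the rest."""
    if not rows:
        return []
    header, *data = rows
    return [dict(zip(header, row)) for row in data]


def query_cdx(url, params=None):
    """Run a CDX query and return a list of dicts (needs network access)."""
    with urlopen(cdx_query_url(url, params)) as response:
        body = response.read().decode("utf-8")
    return cdx_rows_to_dicts(json.loads(body)) if body.strip() else []
```

For example: `query_cdx("discontents.com.au", {"from": "2010", "to": "2015", "filter": ["statuscode:200", "mimetype:text/html"], "limit": 100})`.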

CDX APIs deliver much the same data as Timemaps, except that you can broaden your query from a single page to a whole domain. You can also filter by date, status, mimetype & more. Here’s a notebook that describes harvesting domain-level data: https://nbviewer.jupyter.org/github/GLAM-Workbench/web-archives/blob/master/harvesting_domain_data.ipynb
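For a domain-level query, the IA CDX API's `matchType=domain` parameter widens the match to include subdomains. Once you have the rows, summarising them is a couple of `Counter`s. The parameters below are an illustration with an example domain. The summary function assumes IA CDX field names (`timestamp`, `mimetype`). Large domains may need pagination, which is not shown here.

```python
from collections import Counter
from urllib.parse import urlencode

# Illustrative domain-level query: matchType=domain includes subdomains,
# from/to restrict the years, filter keeps only successful captures.
DOMAIN_PARAMS = {
    "url": "discontents.com.au",
    "matchType": "domain",
    "output": "json",
    "from": "2010",
    "to": "2019",
    "filter": "statuscode:200",
}
DOMAIN_QUERY_URL = "https://web.archive.org/cdx/search/cdx?" + urlencode(DOMAIN_PARAMS)


def summarise_captures(captures):
    """Count captures per year and per mime type.

    captures are dicts keyed by IA CDX field names, e.g. JSON CDX rows
    zipped onto their header row.
    """
    by_year = Counter(c["timestamp"][:4] for c in captures)
    by_mimetype = Counter(c["mimetype"] for c in captures)
    return by_year, by_mimetype
```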

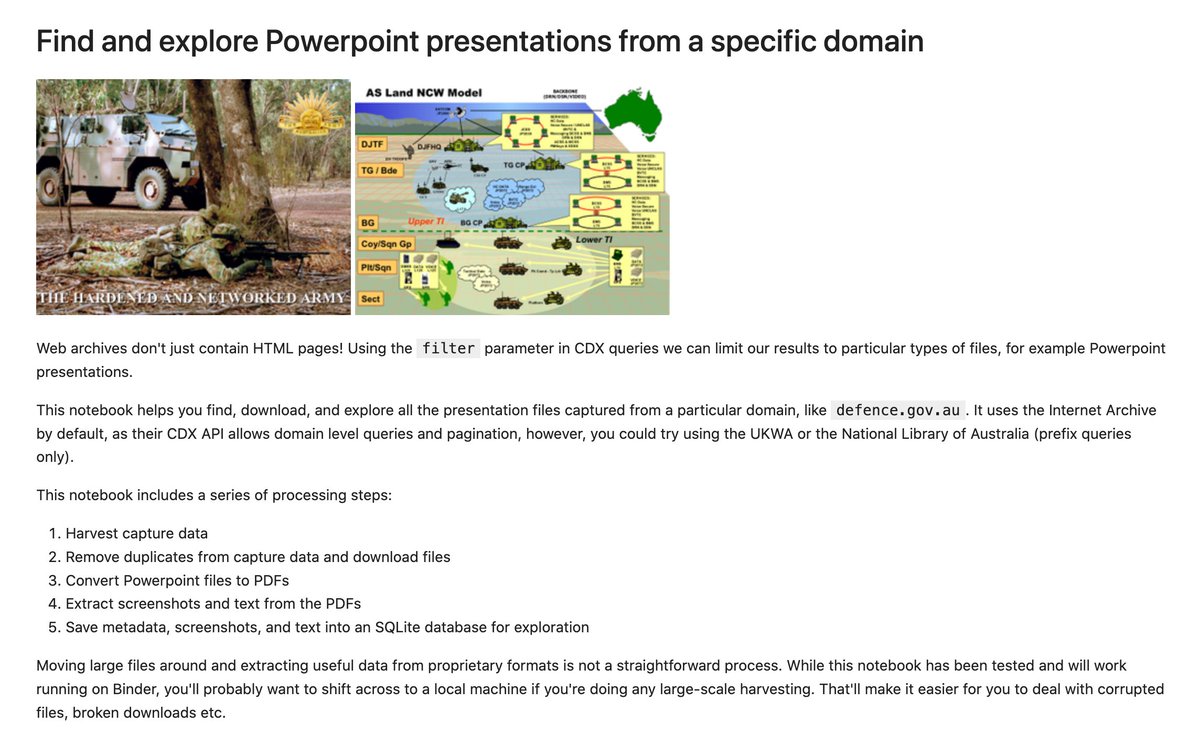

Using the CDX API you can also do interesting things like finding all the archived PowerPoint files in a particular domain! Here’s a notebook that not only finds PowerPoint files, but downloads them, converts them to PDFs, creates screenshots & extracts text! https://nbviewer.jupyter.org/github/GLAM-Workbench/web-archives/blob/master/explore_presentations.ipynb
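Finding the PowerPoint files can start with a CDX filter on mime type. Because IA CDX filters are regular expressions, one filter can cover both the older `application/vnd.ms-powerpoint` and the newer `...presentationml.presentation` types. Archived mime types aren't always reliable, so treat the filter as a first pass. The sketch also keeps one capture per `digest`, so identical files aren't downloaded twice. It builds raw download links with the `id_` trick. Conversion to PDF, screenshots and text extraction are not shown.

```python
import re
from urllib.parse import urlencode

POWERPOINT_MIME = re.compile(r"powerpoint|presentationml")


def powerpoint_query_url(domain):
    """IA CDX query for successful captures of PowerPoint files in a domain."""
    params = [
        ("url", domain),
        ("matchType", "domain"),
        ("output", "json"),
        ("filter", "mimetype:.*(powerpoint|presentationml).*"),
        ("filter", "statuscode:200"),
    ]
    return "https://web.archive.org/cdx/search/cdx?" + urlencode(params)


def unique_presentations(captures):
    """Keep the first capture of each distinct file (by content digest)."""
    seen, unique = set(), []
    for capture in captures:
        if POWERPOINT_MIME.search(capture["mimetype"]) and capture["digest"] not in seen:
            seen.add(capture["digest"])
            unique.append(capture)
    return unique


def download_url(capture):
    """Raw (id_) link to the archived file itself."""
    return f"https://web.archive.org/web/{capture['timestamp']}id_/{capture['original']}"
```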

That last notebook also saves all the metadata, screenshots, and text into an SQLite database, and fires up Datasette to explore it. I put a demo on Glitch with all the archived PowerPoint files (many no longer accessible) from defence.gov.au: https://defencegovau-powerpoints.glitch.me/
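Saving results to SQLite needs nothing beyond Python's `sqlite3` module. The table name and columns here (`timestamp`, `url`, `title`, `text`) are a hypothetical schema, not the one the notebook uses. The resulting file can then be opened with Datasette from the command line, e.g. `datasette presentations.db`.

```python
import sqlite3


def save_presentations(db_path, records):
    """Store presentation metadata and text in an SQLite table.

    records are dicts with the hypothetical keys timestamp, url, title, text.
    Re-running replaces rows with the same (timestamp, url).
    Returns the open connection; close it when you're done.
    """
    conn = sqlite3.connect(db_path)
    with conn:  # commits the transaction if no exception is raised
        conn.execute(
            """CREATE TABLE IF NOT EXISTS presentations (
                   timestamp TEXT, url TEXT, title TEXT, text TEXT,
                   PRIMARY KEY (timestamp, url))"""
        )
        conn.executemany(
            "INSERT OR REPLACE INTO presentations VALUES (:timestamp, :url, :title, :text)",
            records,
        )
    return conn
```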

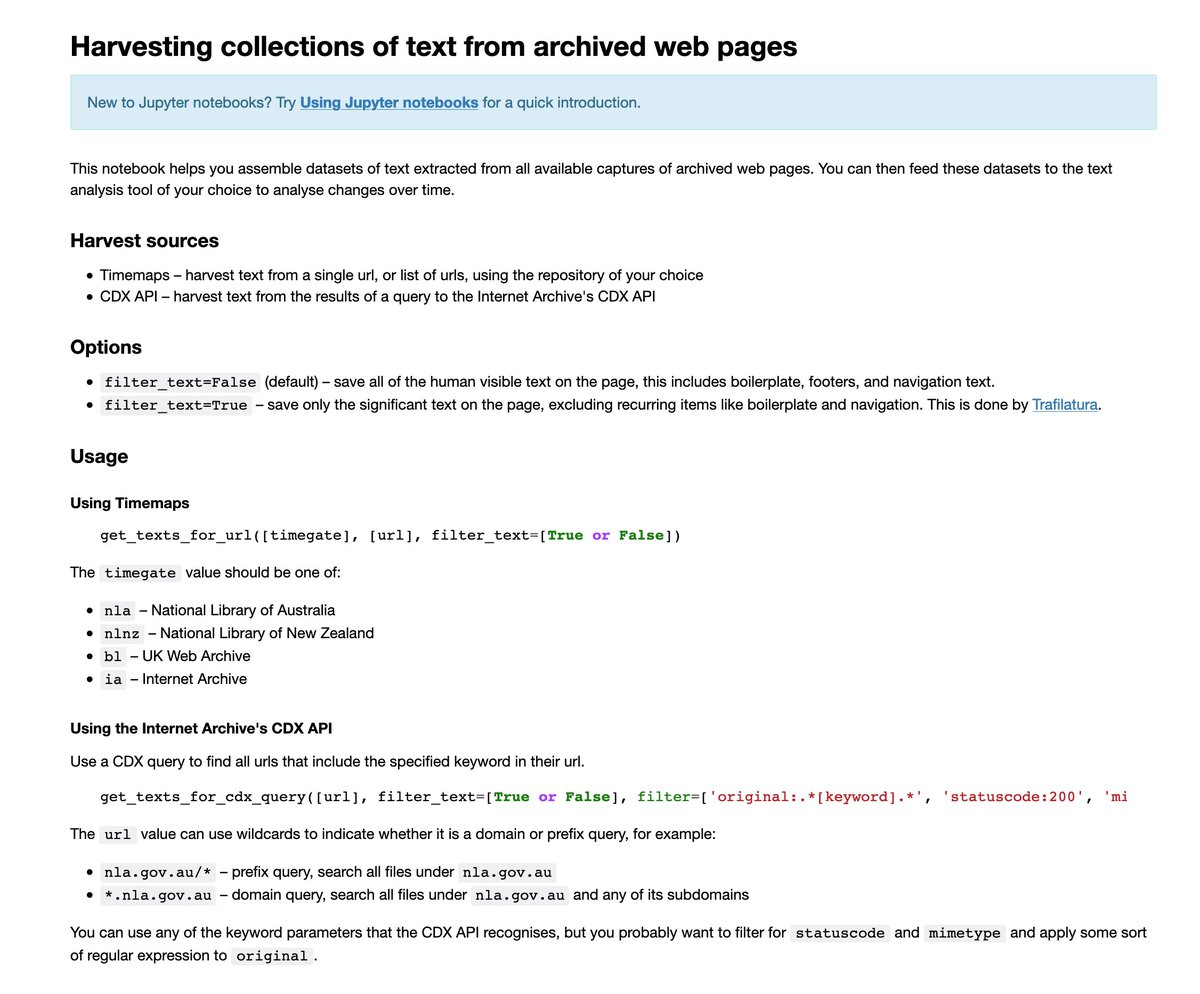

You can also use the CDX API to harvest all the text from a selection of web pages. The API accepts regular expressions, so you can look for words in urls. Here’s a notebook for generating text datasets from web archives: https://nbviewer.jupyter.org/github/GLAM-Workbench/web-archives/blob/master/getting_text_from_web_pages.ipynb
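A query for pages whose urls contain a particular word can combine several IA CDX filters. One is a regex on the `original` url field, another keeps only HTML, and another keeps only successful captures. `collapse=digest` drops adjacent captures with identical content. The function name and arguments are illustrative, and the regex is matched against the whole field.

```python
from urllib.parse import urlencode


def text_harvest_query(domain, url_pattern, year_from, year_to):
    """IA CDX query for HTML pages in a domain whose urls match a regex."""
    params = [
        ("url", domain),
        ("matchType", "domain"),
        ("output", "json"),
        ("from", year_from),
        ("to", year_to),
        ("filter", f"original:{url_pattern}"),  # regex on the captured url
        ("filter", "mimetype:text/html"),
        ("filter", "statuscode:200"),
        ("collapse", "digest"),  # skip adjacent captures with identical content
    ]
    return "https://web.archive.org/cdx/search/cdx?" + urlencode(params)
```

For example, `text_harvest_query("gov.au", ".*climate.*", "2015", "2019")` builds a query for captured gov.au pages with "climate" in their urls.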

So you could, of course, harvest lots of text across time from a selection of pages/sites and then feed it into your text analysis program of choice. You can choose to get *all* the text (including menus & navigation) or just what looks to be the 'main' text.
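The "all the text" option can be approximated with the standard library's `HTMLParser`. The sketch collects every text node except those inside `script`, `style` and `noscript`, so menus and navigation are kept. Pulling out just the "main" text usually needs a dedicated boilerplate-removal library, which is not shown here.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible text, skipping script/style/noscript content."""

    SKIP = {"script", "style", "noscript"}

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.parts.append(data.strip())


def html_to_text(html):
    """Return all the visible text of an HTML page, one text node per line."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.parts)
```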

Most of these examples work with a small subset of the masses of data available from web archives. You can start small and work up as required, rather than grappling with terabytes of data.

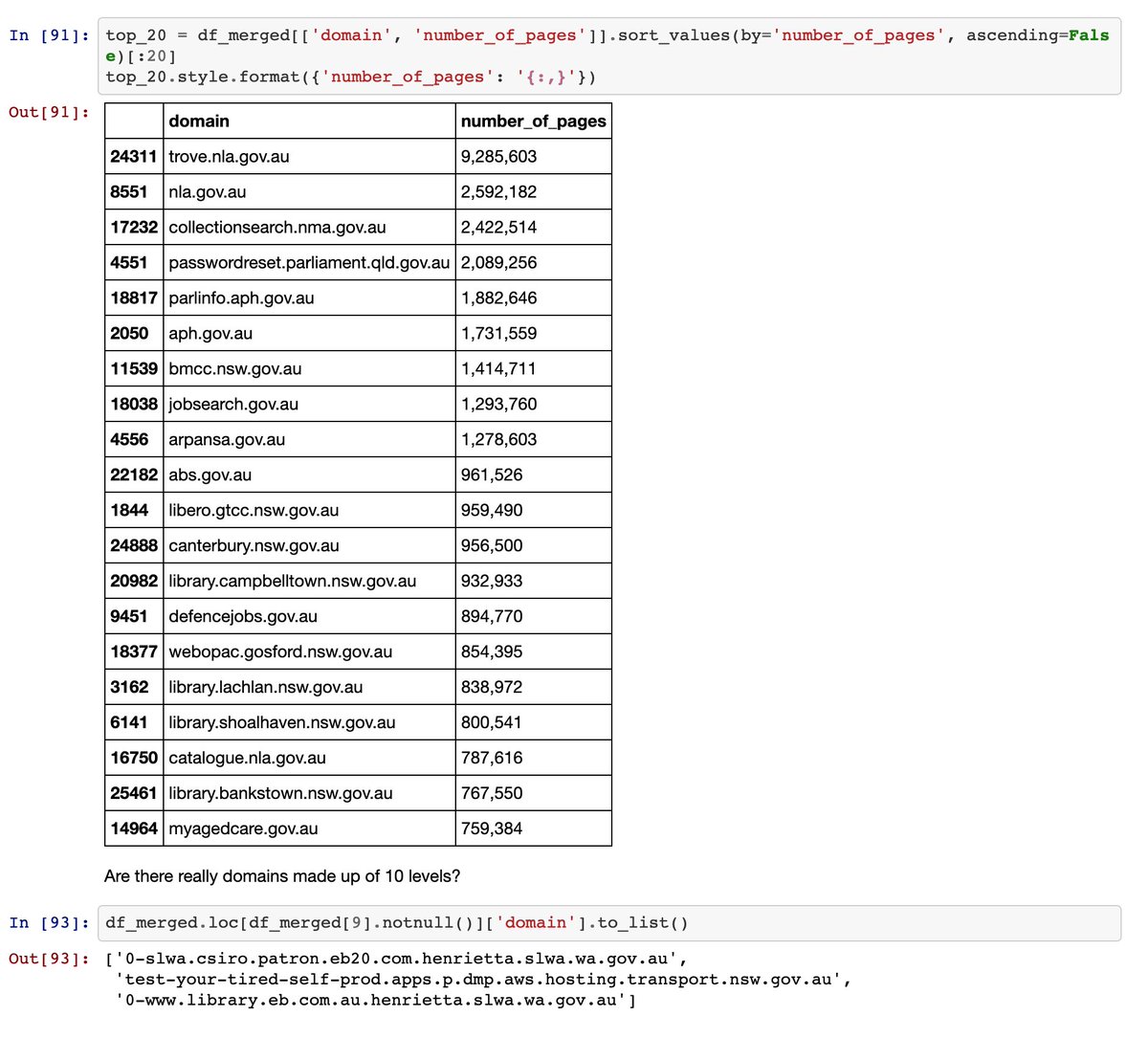

But I thought I’d also try something a little more ambitious, so using the IA CDX API I made an attempt to find all of the subdomains that have been captured from the gov.au domain...

it took a while...

time passing...

... eventually I ended up with about 60 GB of data, details of more than 189 million captures.

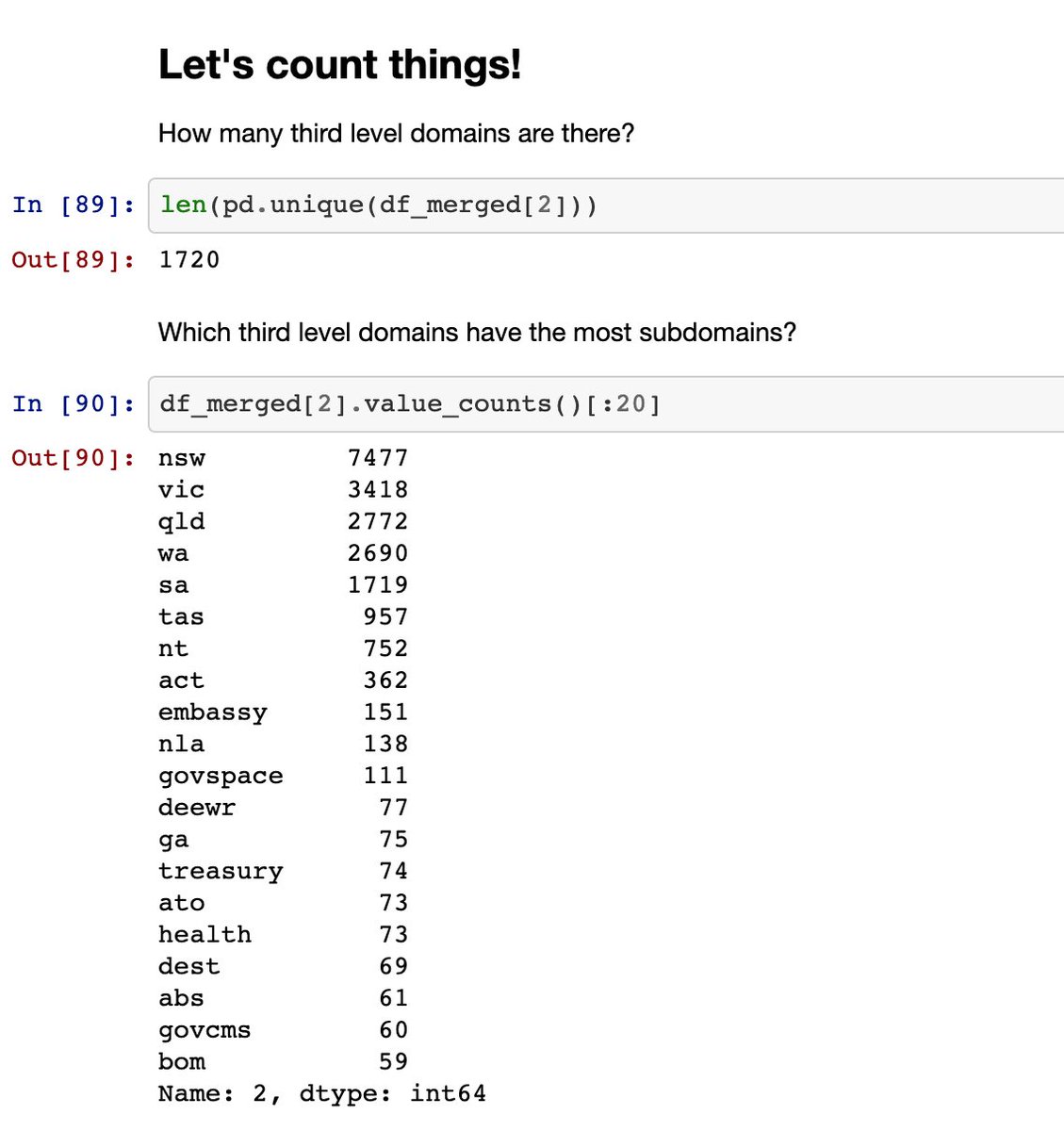

After a bit of manipulation I could find the unique subdomains – there are 26,233 of them. See the notebook: https://glam-workbench.github.io/web-archives/#exploring-subdomains-in-the-whole-of-govau
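One way to get unique subdomains from CDX results is to take the hostname of each captured url. The sketch below reads the `original` field, not the SURT-style `urlkey`. It keeps hosts equal to or ending in the target domain. `urlsplit(...).hostname` lowercases the host and drops any port. Whether `www.` variants count as separate subdomains is a choice you'd need to make. This sketch keeps them separate, and the notebook may have handled this differently.

```python
from urllib.parse import urlsplit


def unique_subdomains(original_urls, domain="gov.au"):
    """Sorted list of distinct hostnames under domain in a list of captured urls."""
    hosts = set()
    for url in original_urls:
        if "://" not in url:
            url = "http://" + url  # urlsplit only finds a hostname after a scheme
        host = urlsplit(url).hostname
        if host and (host == domain or host.endswith("." + domain)):
            hosts.add(host)
    return sorted(hosts)
```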

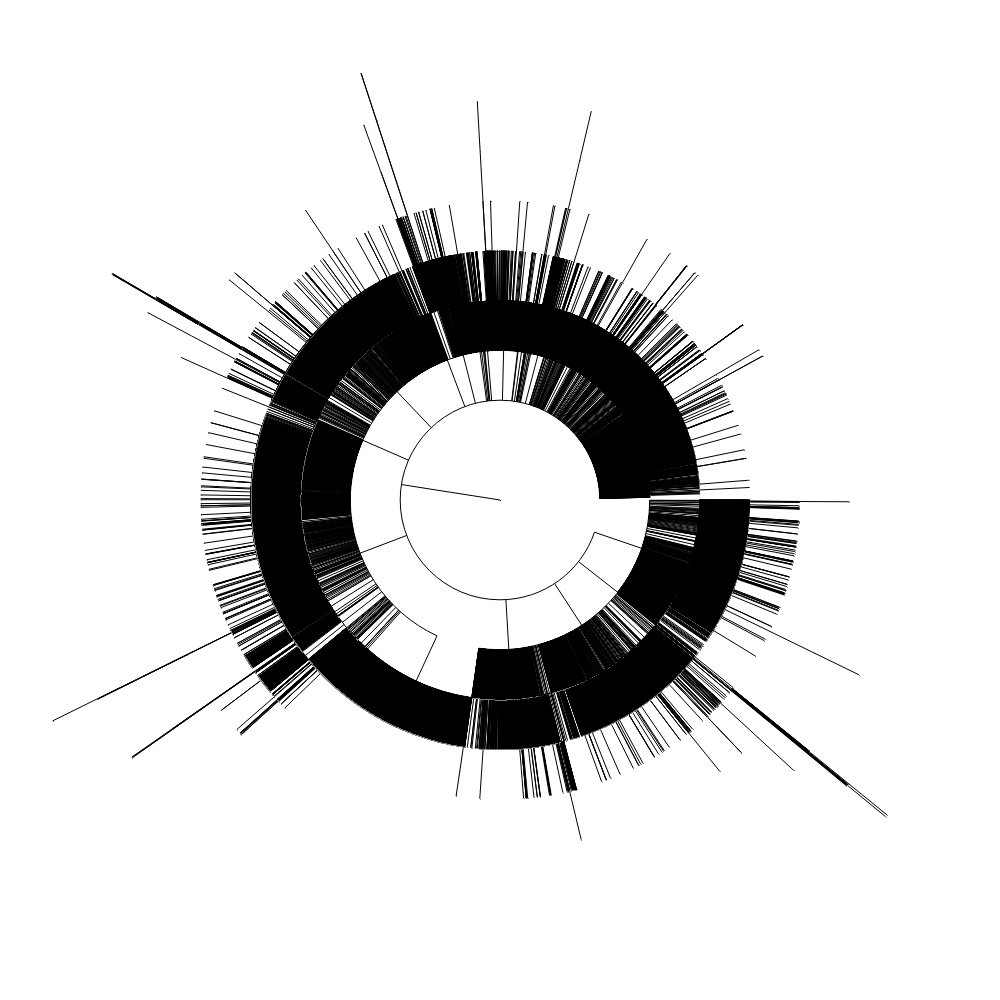

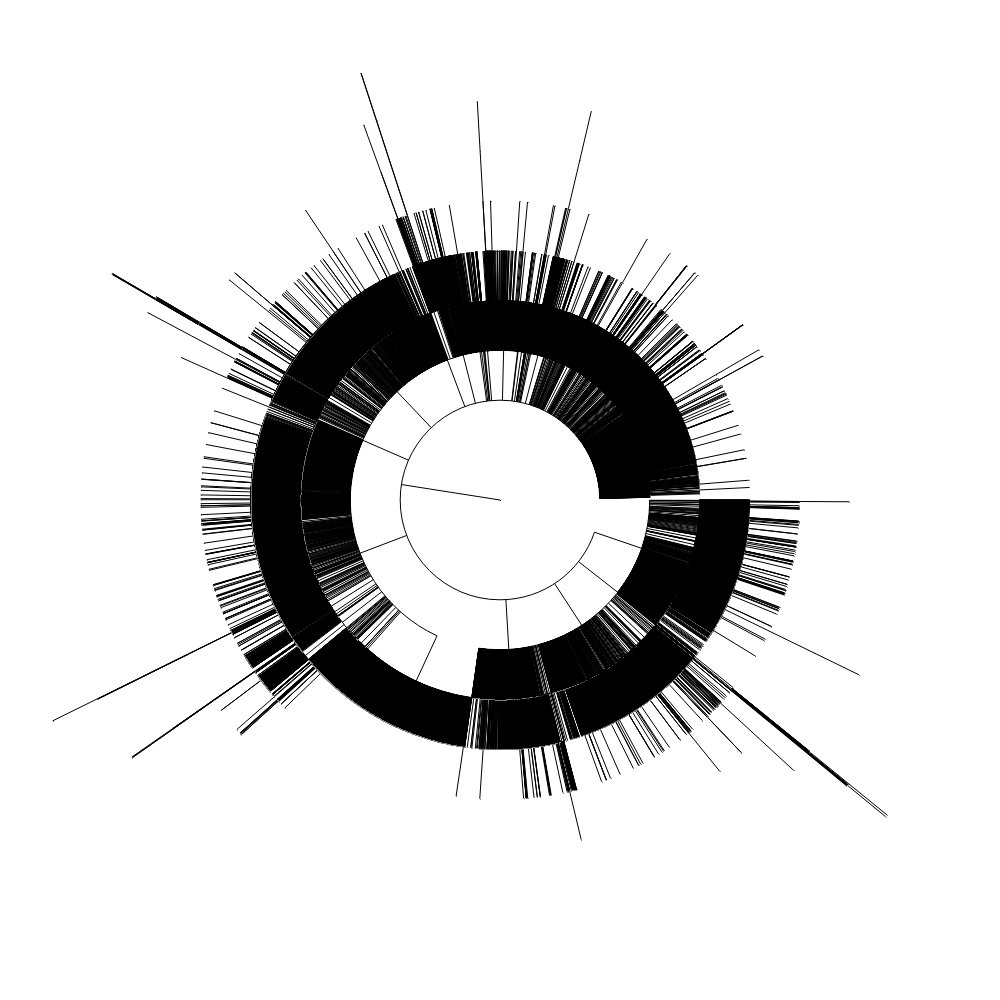

I wanted to have a go at visualising all those subdomains as a circular dendrogram. The scale means none of the text is readable, but hey, it looks cool. So here it is: subdomains in the gov.au domain as captured by the Internet Archive.
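A dendrogram needs the flat list of hostnames turned into a tree. One approach is to read each hostname's labels from right to left, so `www.abs.gov.au` becomes `gov.au → abs → www`. The sketch produces nested `{"name", "children"}` dicts. That shape suits tree-layout tools that read a `children` property, such as D3's `d3.hierarchy`. The output format is an assumption about how you might draw it, not necessarily what was used here.

```python
def subdomain_tree(hosts, root="gov.au"):
    """Nest hostnames under root by label, right to left, as name/children dicts."""
    root_labels = root.split(".")
    tree = {"name": root, "children": {}}
    for host in hosts:
        labels = host.split(".")
        if labels[-len(root_labels):] != root_labels:
            continue  # not under root
        node = tree
        for label in reversed(labels[:-len(root_labels)]):
            node = node["children"].setdefault(label, {"name": label, "children": {}})

    def to_lists(node):
        # convert the children dicts (used for de-duplication) into lists
        return {"name": node["name"], "children": [to_lists(c) for c in node["children"].values()]}

    return to_lists(tree)
```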

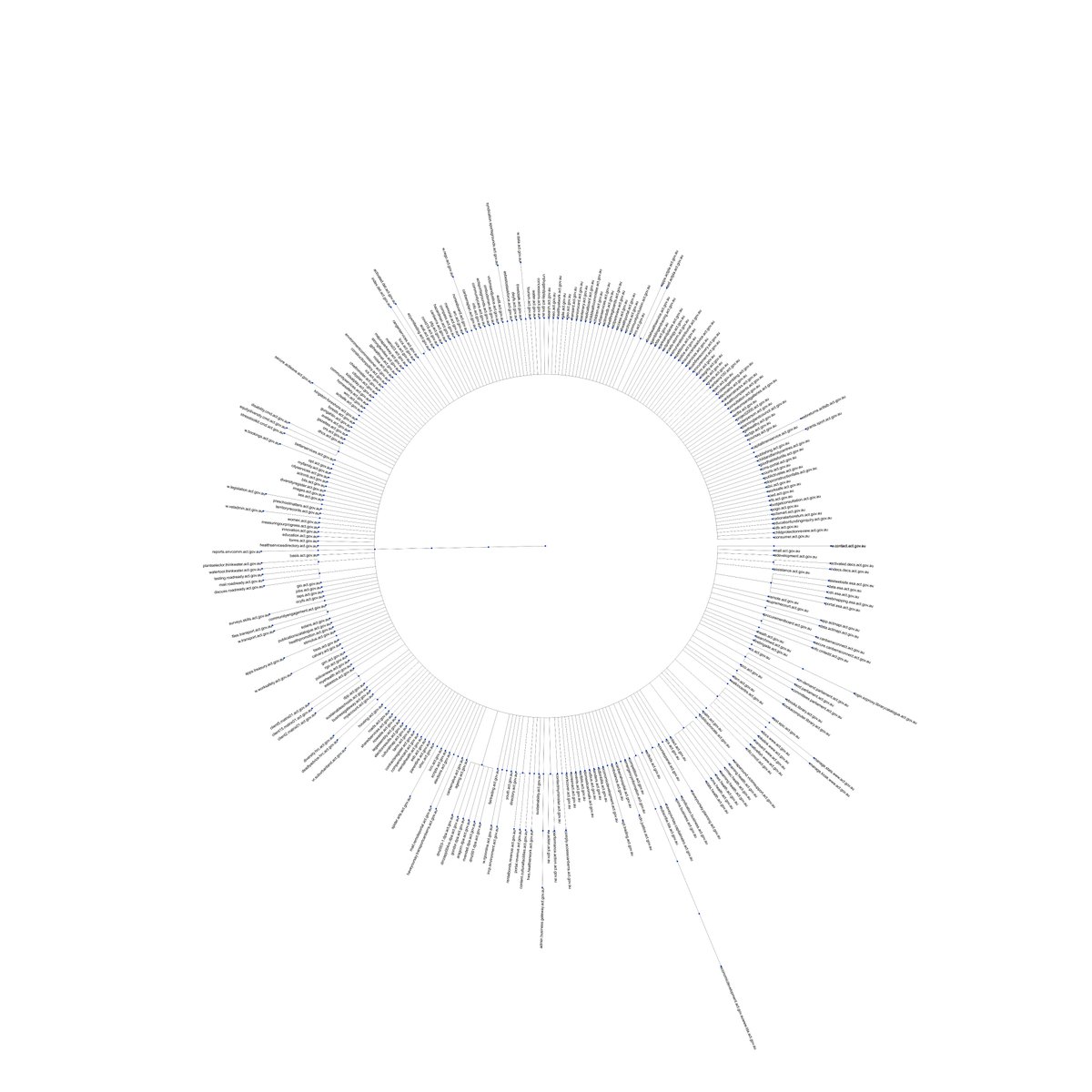

And shifting up to third-level gov.au subdomains, here are all of the Australian states and territories.

So I hope this thread has got you thinking about ways you might start to use web archives in your own research. I’ve learnt a huge amount in the last few weeks and plan to do more exploration in the future.
