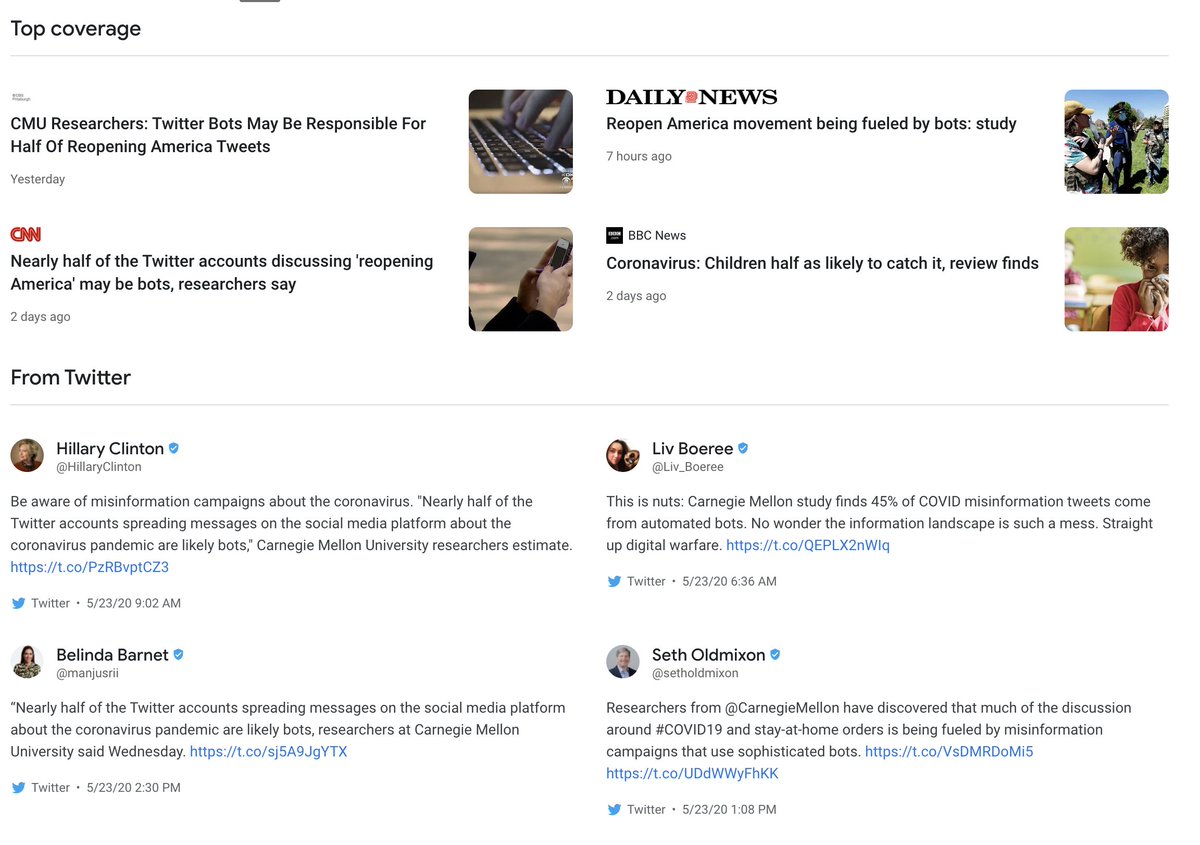

The media needs to stop amplifying extraordinary claims ("Of the top 50 influential retweeters, 82% are bots") without extraordinary (or in this case any) evidence.

There was some value to automated bot detection by researchers in 2016, but much less now. Why? https://twitter.com/ngleicher/status/1264614994475315200

Because since 2016 the major social platforms have invested heavily in automated bot detection, and they have way more data than we can get on the outside (IPs, device IDs, browser fingerprints, signs of human interaction from apps and JS).

It's possible that the companies have been conservative in using this data to shut down accounts (the classic precision/recall tradeoff of all ML content moderation), but researchers should not get clicks by arbitraging their lower standards against the duty of care of platforms.

At @stanfordio, we avoid automated judgments of authenticity and focus on clustering behaviors and accounts and tying the work to actors whenever possible. It is extremely hard to beat Twitter/Google/FB in a race to feed data into ML, but humans can bring specialized skills.

There isn't a paper or even a blog post to judge here, and without evidence or methodologies that others can double-check, these claims do not deserve this level of influence.

Disinformation about disinformation is still disinformation, and is harmful to the overall fight.

From one of the best in this space: https://twitter.com/katestarbird/status/1264597524393758720