Information-theoretic generalization bounds go brrrrr....

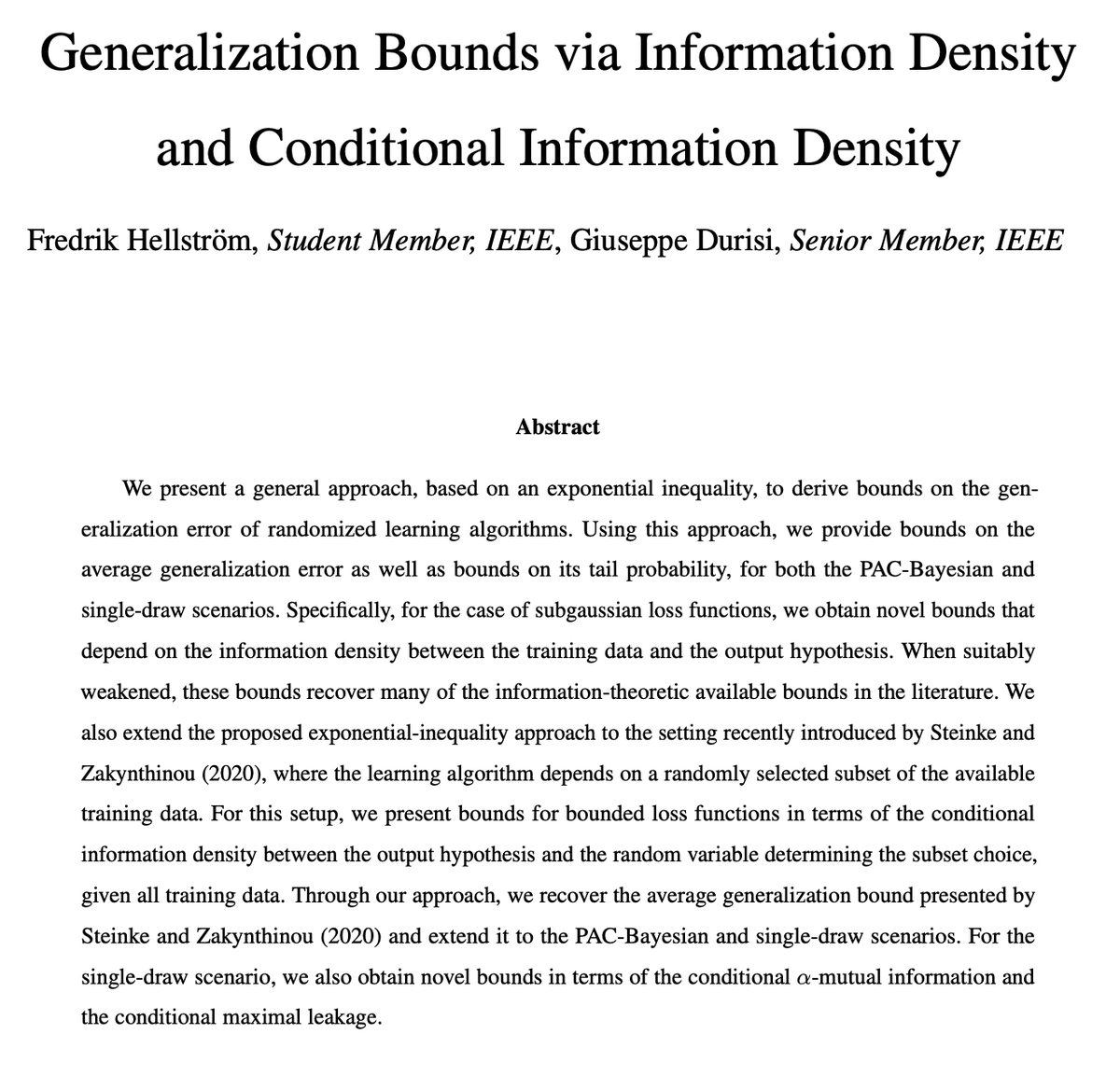

https://arxiv.org/abs/2005.08044

Nice ideas in here combining Catoni's single-draw setting and the @shortstein @zakynthinou framework. I appreciate the multiple cites to my group's recent work.

Our most recent paper extending Steinke and Zakynthinou is the most relevant: https://arxiv.org/abs/2004.12983

Yes, we assume bounded loss, but the extension to sub-Gaussian (subG) losses is trivial.

They also refer to our NeurIPS paper on data-dependent estimates:

https://arxiv.org/abs/1911.02151

Until our new CMI-style bounds, these were the tightest bounds for Langevin dynamics by a large factor.

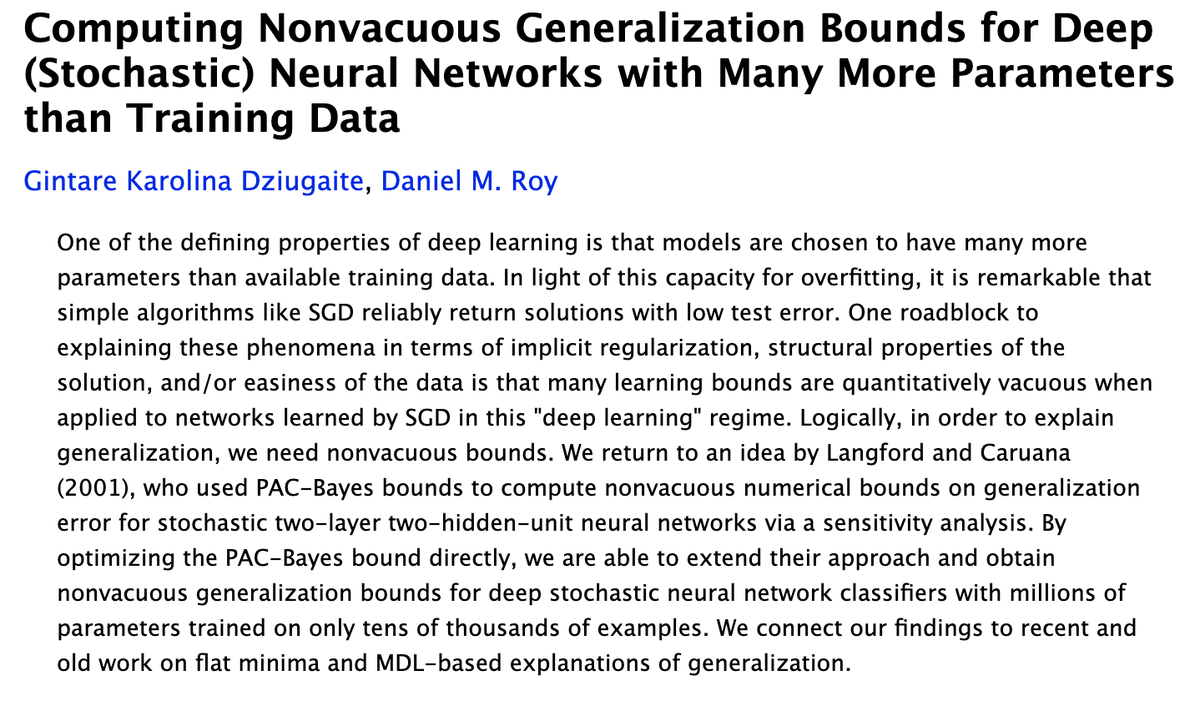

Finally, they cite the work that started @KDziugaite and me down this road, i.e., our UAI 2017 paper on nonvacuous bounds. We've learned a lot since then, but the key messages are still valid: we don't know why our bounds are so loose.

https://arxiv.org/abs/1703.11008

One thing I've learned, though: if you make your title sound as if it summarizes the entire content of the paper, people will happily forgo reading your paper and think they know what it's about. If you're in that category, go read the paper.

I thought I recognized these authors. Indeed, they had very recent work introducing this unifying perspective (but without the conditional information density perspective).

https://arxiv.org/abs/2004.09148

Hello, @giuseppe_durisi. Is the student on Twitter?

I think the boundedness assumption we make is not so important to highlight. It's trivial to extend this. The thing to highlight is the generality of your perspective, as it includes single draw, PAC-Bayes, etc. I suspect our advances over the SZ bounds are complementary to yours.

@shortstein I believe this answers your recent question. They call the log Radon-Nikodym (RN) derivative the information density, so its evaluation at a point drawn from the measure being dominated should probably be called something similar... instantaneous information density?
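
To make the terminology concrete, here is a toy sketch under assumptions of my own: W and Z are binary with a made-up joint distribution, so the RN derivative dP_{WZ}/d(P_W x P_Z) is just a ratio of probability mass functions. Evaluating its log at a single draw (W, Z) ~ P_{WZ} gives the single-draw quantity the tweet suggests calling the instantaneous information density, and averaging it over P_{WZ} recovers the mutual information I(W; Z). The names `joint`, `information_density`, and `mutual_information` are hypothetical and not taken from either paper.

```python
import math
import random

# Hypothetical joint distribution P_{WZ} over two binary variables (toy numbers).
joint = {
    (0, 0): 0.4, (0, 1): 0.1,
    (1, 0): 0.1, (1, 1): 0.4,
}

def marginals(p):
    """Return the marginal pmfs P_W and P_Z of a joint pmf given as a dict."""
    pw, pz = {}, {}
    for (w, z), prob in p.items():
        pw[w] = pw.get(w, 0.0) + prob
        pz[z] = pz.get(z, 0.0) + prob
    return pw, pz

def information_density(p, w, z):
    """log dP_{WZ} / d(P_W x P_Z), evaluated at the point (w, z)."""
    pw, pz = marginals(p)
    return math.log(p[(w, z)] / (pw[w] * pz[z]))

def mutual_information(p):
    """I(W; Z) as the expectation of the information density under P_{WZ} (in nats)."""
    return sum(prob * information_density(p, w, z)
               for (w, z), prob in p.items() if prob > 0)

# Draw one point from the dominated measure P_{WZ} and evaluate the density there.
rng = random.Random(0)
w, z = rng.choices(list(joint), weights=list(joint.values()))[0]
print("draw:", (w, z), "density:", information_density(joint, w, z))
print("mutual information:", mutual_information(joint))
```

Note that a single evaluation can be negative (here at (0, 1)) even though its mean, the mutual information, is nonnegative.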