The most common feature-flagging pain I hear of is "feature flag debt" - stale flags clogging up your codebase with conditionals and dead code.
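To make "flag debt" concrete, here's a minimal Python sketch. Every name in it is made up for illustration; none of it comes from Uber's code or from Piranha. The first function still carries a flag that has been fully on for ages, and the second is the same code once the flag check and its dead branch are deleted.

```python
# Hypothetical flag state: "new_pricing" was rolled out to everyone long ago.
FLAG_STATE = {"new_pricing": True}


def total_with_stale_flag(prices: list[float]) -> float:
    if FLAG_STATE.get("new_pricing", False):
        # The only path that runs in practice.
        return round(sum(prices) * 1.08, 2)
    # Dead code: never runs while the flag stays on.
    return round(sum(prices) * 1.05, 2)


def total_after_cleanup(prices: list[float]) -> float:
    # Flag check and dead branch removed; behaviour with the flag on is unchanged.
    return round(sum(prices) * 1.08, 2)
```

Multiply that by thousands of flags and you get the conditionals-and-dead-code clutter described above.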

Uber just open-sourced Piranha, an internal tool for detecting and cleaning up stale feature flags.

Let's talk about it a bit...

Piranha is a tool specifically for managing feature flag debt in Uber's mobile apps: https://eng.uber.com/piranha/

They also have a really interesting academic paper describing it, along with lots of interesting details on feature flagging @ Uber in general: https://manu.sridharan.net/files/ICSE20-SEIP-Piranha.pdf

Besides being a neat approach to a very common problem, their discussion of Piranha also provides some great insights into an organization that's *heavily* invested in feature flagging...

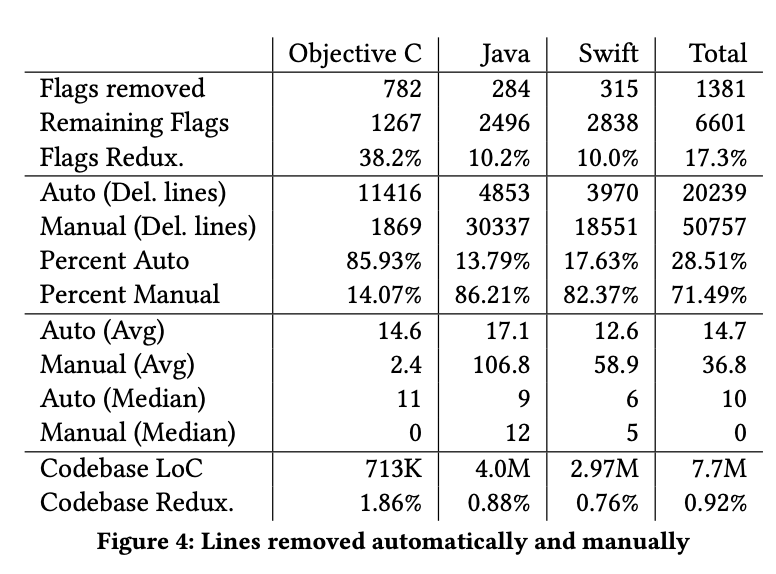

And when I say heavily invested, I mean it. The paper describes 6601 feature flags, just in their mobile codebases. Now, apparently that's across 7.7 *million* lines of code, but regardless it's still a lot of feature flags.

As one would expect given that count, Uber use flags for a lot of purposes. Experimentation, controlled rollout, safety, and sometimes for long-term operational things like kill switches and testing in prod (more on that in a moment).

They seem to be big fans of managing features by geo/market - turning on a feature for a specific market before rolling it out more broadly.

I've seen this approach at other orgs that are naturally segmented by market. Makes it easier to understand the scope of a rollout.
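Here's a rough sketch of what market-scoped rollout can look like. The `MarketFlag` class, its fields and the market names are all hypothetical; this shows the general idea, not how Uber's flagging system is actually built.

```python
from dataclasses import dataclass, field


@dataclass
class MarketFlag:
    """Hypothetical flag that is switched on market by market."""
    name: str
    enabled_markets: set[str] = field(default_factory=set)
    enabled_everywhere: bool = False

    def is_enabled(self, market: str) -> bool:
        # On if the rollout is complete, or if this market has been opted in.
        return self.enabled_everywhere or market in self.enabled_markets


# Start with a single market, then widen once it looks healthy.
new_checkout = MarketFlag("new_checkout", enabled_markets={"amsterdam"})
```

The scope of the rollout is readable straight off the flag definition, which is a big part of the appeal.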

Uber's flagging system has the ability for flags to be more than just on or off - a flag state can be an integer or a double, for example. However, these "parameter flags" comprise only a "very small set of flags" - the rest are simple on/off booleans.
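For anyone who hasn't used non-boolean flags, here's a small sketch of the distinction. The flag names, values and accessor functions are assumptions for illustration only, not Uber's API.

```python
from typing import Union

FlagValue = Union[bool, int, float]

# Hypothetical flag values: one plain on/off flag and two "parameter flags".
FLAG_VALUES: dict[str, FlagValue] = {
    "show_new_map": True,
    "max_retry_count": 3,
    "search_radius_km": 2.5,
}


def _typed(name: str, expected: type, default: FlagValue) -> FlagValue:
    value = FLAG_VALUES.get(name, default)
    # bool is a subclass of int in Python, so reject it unless bool was requested.
    if isinstance(value, bool) and expected is not bool:
        raise TypeError(f"flag {name!r} is a bool, expected {expected.__name__}")
    if not isinstance(value, expected):
        raise TypeError(f"flag {name!r} is not a {expected.__name__}")
    return value


def bool_flag(name: str, default: bool = False) -> bool:
    return _typed(name, bool, default)


def int_flag(name: str, default: int) -> int:
    return _typed(name, int, default)


def float_flag(name: str, default: float) -> float:
    return _typed(name, float, default)
```

A boolean flag picks between code paths; a parameter flag tunes a value inside one path, so removing it later means inlining a constant rather than deleting a branch.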

All these flags come with a cost. They make Uber's code harder to understand, and slow down build+test.

They also introduce the risk of accidentally flipping a stale flag in prod, with untested and scary consequences (cf. the Knight Capital Knightmare): https://www.bugsnag.com/blog/bug-day-460m-loss

Interestingly, the paper *doesn't* mention a cost that I often hear people concerned about - the combinatorial challenge of testing a large number of flags. I suspect this is because Uber have been feature flagging long enough to have developed good practices to mitigate this.

So, too many flags are bad - Uber should remove stale flags. However, identifying inactive flags is a surprisingly tricky problem. Some are "kill switches" which are almost never used, but need to be left available in prod. Others are in place for debugging or testing in prod.

As an aside, I think that a flag categorization scheme would help here, making it easier to distinguish between long-lived feature flags like these and short-lived Release or Experiment Toggles.

See https://www.martinfowler.com/articles/feature-toggles.html#CategoriesOfToggles for more discussion of this idea.
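A minimal sketch of how categorization could feed a cleanup report. The category names follow the linked article; treating only Release and Experiment toggles as short-lived is my simplifying assumption, and the flag names are made up.

```python
from enum import Enum


class ToggleCategory(Enum):
    RELEASE = "release"
    EXPERIMENT = "experiment"
    OPS = "ops"
    PERMISSIONING = "permissioning"


# Assumption for this sketch: only these categories are expected to be short-lived.
SHORT_LIVED = {ToggleCategory.RELEASE, ToggleCategory.EXPERIMENT}


def cleanup_candidates(flags: dict[str, ToggleCategory]) -> list[str]:
    """Return the flags a stale-flag sweep should even consider, sorted by name."""
    return sorted(name for name, category in flags.items() if category in SHORT_LIVED)


flags = {
    "kill_switch_payments": ToggleCategory.OPS,
    "new_onboarding": ToggleCategory.RELEASE,
    "button_colour_test": ToggleCategory.EXPERIMENT,
}
```

With this in place the kill switch never shows up as "stale", however long it goes untouched.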

The paper also discusses the idea of requiring an expiration date when a flag is initially defined. This is another idea I've advocated for, and seen a few teams have some success with. Not a panacea though.
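One way the expiration idea can be enforced, sketched with hypothetical names: make the date a required field of the flag definition, then have a periodic check list whatever has passed its date.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class FlagDefinition:
    """Hypothetical flag record; expires_on has no default, so it must be supplied."""
    name: str
    owner: str
    expires_on: date


def expired_flags(flags: list[FlagDefinition], today: date) -> list[str]:
    # A flag counts as expired once its expiration date is strictly in the past.
    return [flag.name for flag in flags if flag.expires_on < today]


definitions = [
    FlagDefinition("new_onboarding", owner="growth-team", expires_on=date(2020, 3, 1)),
    FlagDefinition("map_redesign", owner="maps-team", expires_on=date(2020, 9, 1)),
]
```

What happens to an expired flag (a nag, a failing build, an auto-generated cleanup diff) is a separate decision, and that's where the "not a panacea" part tends to bite.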

Lack of flag ownership looks to be a considerable problem at Uber. I'd think in part due to the nature of their org - hyper-growth, lots of churn and movement of folks. They also focus on ownership of flags by an individual dev, rather than a team. That seems questionable to me.

They also discuss a lack of aligned incentives when it comes to cleaning up feature flag debt - working on new features is rewarded more. I was very interested to hear that iOS devs have a more concrete incentive to remove stale flags - a limit from Apple on app download size.

Uber have used focused debt paydown efforts (e.g. "fixit weeks") to try and keep flag debt in check. I've heard of other orgs doing this too. It's been described to me as "the best tool we've found, but not a great tool" (paraphrasing a little here).

The stats in the paper about cleaned-up flags are interesting. It's not uncommon for a flag to be associated with over 100 lines of code, but flag-associated code tends to be restricted to a small number of files - 80% of flag-removal diffs involve 5 files or fewer.

This runs counter to the concerns I've heard from some folks getting started with feature flags that each new feature flag could lead to a bunch of conditionals strewn around a codebase.

Uber have added custom extensions to their automated testing frameworks to make it easier to declaratively manage flag state in tests which are sensitive to that state - something I often see at orgs that are using flags heavily, but not something I've seen discussed much. 🤔
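To give a flavour of what "declarative" flag state in a test can mean, here's a small sketch. The `with_flags` decorator and the in-memory flag store are hypothetical; Uber's actual test extensions aren't shown in this thread, so treat this as one possible shape rather than theirs.

```python
import functools

# Hypothetical in-memory flag store used by the code under test.
TEST_FLAGS = {"new_checkout": False}


def is_enabled(name: str) -> bool:
    return TEST_FLAGS.get(name, False)


def with_flags(**overrides: bool):
    """Declare the flag state a test needs; the previous state is restored afterwards."""
    def decorator(test_fn):
        @functools.wraps(test_fn)
        def wrapper(*args, **kwargs):
            saved = dict(TEST_FLAGS)
            TEST_FLAGS.update(overrides)
            try:
                return test_fn(*args, **kwargs)
            finally:
                # Restore even if the test fails, so flag state never leaks between tests.
                TEST_FLAGS.clear()
                TEST_FLAGS.update(saved)
        return wrapper
    return decorator


@with_flags(new_checkout=True)
def test_new_checkout_path():
    assert is_enabled("new_checkout")
```

The test states the flag state it depends on right next to its name, and nothing leaks into the next test.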

That wraps up what I found most interesting in this paper. Kudos to Uber for discussing this stuff so openly! 🙌

What other nuggets did *you* get from the paper?

Anyone have any other interesting reports from orgs talking about their usage of feature flagging in the wild?
