ooooooh 👀 https://github.com/opendifferentialprivacy/whitenoise-core

OK, diving into the various repos under https://github.com/opendifferentialprivacy…

(Current status: trying to make one of the notebook examples work. A clear list of instructions would be nice; currently I'm kind of left guessing which dependencies are missing 😶)

Aha! The "python" repository tells you to `pip3 install opendp-whitenoise-core` but you actually want to `pip3 install opendp-whitenoise` to run the samples.

The "SQL queries" notebook fails because of a misconfiguration, though 🤔

OK, I don't have time to dive into the code to figure out what to do here; let's try the histogram notebook.

It seems to work! I do get the shiny histograms advertised on the tin (with a somewhat gory x-axis for the "sex" column).

Some documentation notes are a bit weird, though.

Rounding to the nearest integer is a good first step, but it's not enough to completely remove floating-point vulnerabilities: you have to do some clamping & rounding to a power of 2 that is parameter-dependent.
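The parameter-dependent rounding mentioned here is the idea behind Mironov's "snapping mechanism" (CCS 2012): clamp the input, add noise, round the result to a multiple of the smallest power of 2 that is at least the noise scale, then clamp again. Below is a minimal Python sketch of that structure only. The helper names, the `bound` and `scale` parameters, and the use of Python's `random` module for sampling are assumptions for illustration; the actual guarantee depends on a carefully specified sampler and a slightly adjusted ε, so this is not a safe implementation.

```python
import math
import random


def smallest_power_of_two_at_least(x: float) -> float:
    """Smallest power of 2 (the exponent may be negative) that is >= x."""
    return 2.0 ** math.ceil(math.log2(x))


def clamp(x: float, bound: float) -> float:
    """Clamp x to the interval [-bound, bound]."""
    return max(-bound, min(bound, x))


def snapped_laplace(value: float, scale: float, bound: float, rng: random.Random) -> float:
    """Illustrative snapping-style Laplace mechanism (hypothetical helper, not vetted)."""
    # 1. Clamp the true value so the output range is bounded.
    clamped = clamp(value, bound)
    # 2. Add Laplace(scale) noise: the difference of two exponential draws with
    #    mean `scale` is Laplace-distributed (the paper specifies a more careful sampler).
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    noisy = clamped + noise
    # 3. Round to the nearest multiple of the smallest power of 2 >= scale,
    #    so the rounding granularity depends on the noise parameter.
    granularity = smallest_power_of_two_at_least(scale)
    snapped = granularity * round(noisy / granularity)
    # 4. Clamp again so that extreme outputs do not leak information either.
    return clamp(snapped, bound)


rng = random.Random(42)
print(snapped_laplace(3.7, scale=1.5, bound=10.0, rng=rng))
```

With `scale=1.5` the granularity is 2, so every output is an even number in [-10, 10].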

More importantly, if you know that naive Laplace noise is vulnerable to floating-point attacks, why support it in the first place?

Laplace noise in WhiteNoise simply calls the Rust standard library, which predictably uses the problematic implementation 😟
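For context, the textbook way to sample Laplace noise is to draw a uniform value and apply the Laplace inverse CDF, with scale sensitivity/ε. The Python sketch below is a generic illustration of that pattern, not WhiteNoise's Rust code, and the function names are hypothetical. This is the kind of sampler the floating-point attacks target: doubles are unevenly spaced, so the set of outputs `true_value + noise` can actually take depends on `true_value`, and observing an "impossible" output can reveal the input.

```python
import math
import random


def laplace_inverse_cdf(u: float, scale: float) -> float:
    """Inverse CDF of Laplace(0, scale), for u uniform in (-0.5, 0.5)."""
    return -scale * math.copysign(1.0, u) * math.log(1.0 - 2.0 * abs(u))


def naive_laplace_mechanism(true_value: float, sensitivity: float, epsilon: float,
                            rng: random.Random) -> float:
    """Textbook, floating-point-naive mechanism: true_value + Laplace(sensitivity / epsilon)."""
    scale = sensitivity / epsilon
    # rng.random() is in [0, 1), so u is in [-0.5, 0.5).
    u = rng.random() - 0.5
    while u == -0.5:  # log(0) is undefined; resample this (astronomically rare) case
        u = rng.random() - 0.5
    # Weakness: the floating-point results of log() and of this addition are not
    # evenly spread, so which outputs are reachable depends on true_value.
    return true_value + laplace_inverse_cdf(u, scale)


rng = random.Random(1)
print(naive_laplace_mechanism(100.0, sensitivity=1.0, epsilon=1.0, rng=rng))
```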

Aaaah! I stepped away for a bit and came back to a long discussion thread.

Just to make sure people get the right idea: I'm jumping straight to "let's find holes in this", but it is *amazing* and super positive to get DP code open-sourced like this! I'm super excited & thankful!

And as Ira points out, you need *some* Laplace mechanism. It might not be the best option for histograms, but it totally makes sense to have it in the library! https://twitter.com/Ira_Sass/status/1263134575267581952

Alright, let's try to debug that SQL error. I really want to see what their version of differentially private SQL does.

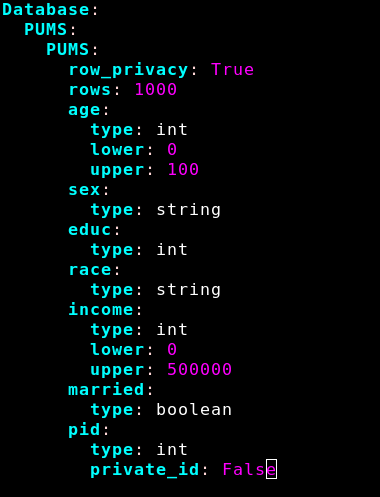

The error says "row privacy is set, but metadata specifies a privacy ID". This looks like a mismatch between privacy units. https://twitter.com/TedOnPrivacy/status/1263101678993043458

"Row privacy" suggests that the privacy unit is a single row: the "neighboring databases" in DP differ by at most one row.

Tagging a column "Privacy ID", on the other hand, suggests that the privacy unit is "all rows with the same ID".
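A toy Python illustration of why the privacy unit matters: it determines how far a single change between neighboring databases can move a result. The dataset and helper names are hypothetical. With rows as the unit, a COUNT moves by at most 1; with user IDs as the unit, it can move by as many rows as the heaviest user owns, which is why user-level privacy needs per-user contribution bounding.

```python
from collections import Counter

# Toy dataset of (user_id, married) rows; user 1 appears three times.
rows = [(1, True), (1, True), (1, True), (2, False), (3, True)]


def max_count_change_row_unit(rows) -> int:
    # Row privacy: neighboring databases differ by one row,
    # so a COUNT changes by at most 1.
    return 1 if rows else 0


def max_count_change_user_unit(rows) -> int:
    # User-level privacy: neighbors differ by all rows of one user ID,
    # so a COUNT can change by as many rows as the heaviest user has.
    per_user = Counter(user_id for user_id, _ in rows)
    return max(per_user.values(), default=0)


print(max_count_change_row_unit(rows))   # 1
print(max_count_change_user_unit(rows))  # 3
```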

Let's try to change row_privacy to false…

It works!

Now, the next question is: to make a GROUP BY query like this differentially private, you don't just need to add noise to counts; you also need to protect the GROUP BY keys that are released. I'll check how it works later.

OK, so let's change all instances of "married" to "1" in the CSV file (second-to-last column), and run this again.

The count is still noisy… but we only get one row as an answer. I can try it 10 times: it always returns a single row, for "True".

Now, if I switch *one* user's "married" value to "0" (or "False"), it *also* only returns one row. That's good news: some thresholding is applied to remove low counts (like in https://desfontain.es/privacy/almost-differential-privacy.html).

Experimentally, the count needs to be larger than ~34 to be released.
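The ~34 figure is empirical, and the parameters behind it aren't stated. As a rough sketch of how a threshold like this can be derived, assume Laplace noise of scale 1/ε on each count and at most one group per user. A group containing a single user then crosses a threshold τ with probability ½·exp(-(τ - 1)·ε), so τ = 1 + ln(1/(2δ))/ε keeps that probability at δ. The Python below is hypothetical, not WhiteNoise's code:

```python
import math
import random


def laplace_threshold(epsilon: float, delta: float) -> float:
    """Threshold tau such that a group with true count 1 is released with probability delta.

    Assumes Laplace noise with scale 1/epsilon and one group per user (hypothetical setup).
    """
    scale = 1.0 / epsilon
    return 1.0 + scale * math.log(1.0 / (2.0 * delta))


def release_groups(true_counts: dict, epsilon: float, delta: float, rng: random.Random) -> dict:
    """Add Laplace noise to each group's count and drop groups at or below the threshold."""
    scale = 1.0 / epsilon
    tau = laplace_threshold(epsilon, delta)
    released = {}
    for key, count in true_counts.items():
        noisy = count + rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
        if noisy > tau:  # small groups are suppressed, hiding rare GROUP BY keys
            released[key] = noisy
    return released


rng = random.Random(7)
print(laplace_threshold(epsilon=1.0, delta=1e-5))
print(release_groups({"True": 999, "False": 1}, epsilon=1.0, delta=1e-5, rng=rng))
```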

Even better: if I switch the "married" column to "2" for a single user, or make it empty, or set it to some nonsense like "foo", it still returns one row without complaining.

This is good: it means you can't, e.g., trigger an error when one user has a special value, and detect it (breaking DP).

We can also try to duplicate a single value 100 times for the same user (for example, everyone is "married" except user n°1, whose record appears 100 times).

Things work as intended: if the privacy unit is "user ID", you can't detect this (it still returns only the "True" row).

I want to check using "row" as a privacy unit, to see the difference, but it doesn't seem to work (even though row_privacy *is* set). I must be doing something wrong, but I can't find the documentation for this YAML config, ah well.

OK, how far can we push this SQL thing? Let's go back to the original dataset, but try to *cheat* on the query ✨

Uh-uh! See what I did there? Can you guess whether user n°1 is married? 😅

Trying to do silly things like dumping the entire database does… something weird. Not sure what's happening here. Only one row is returned, so there must be default aggregations?

But which ones? And why do the aggregations of age vs. income return such different values?

Let's try something a bit more complicated: averaging attacks. I'll ask the same thing many times over and average the results.

Doing this naively doesn't work: the results are cached (by the privacy engine or the SQL engine), so you just get the same answer multiple times.

Let's try and cheat again, pretending we're not computing the same thing when we actually are 😈

This seems to bypass caching. Below, we average the count 10 times, and get different results. Unclear how much noise is added (real values are 451 and 549).

When we average over many more measurements… we get a much more precise idea of what the true number is.

I tried it a few times and I'm always within ±2 of the true values. So I strongly suspect the privacy budget is not split between aggregations 😬
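A toy Python simulation of the averaging attack: if each query gets fresh, independent noise and the total budget isn't tracked, the mean of n answers has a standard deviation shrinking like 1/√n, while the real privacy cost grows like n·ε under basic sequential composition. The true count 451 comes from the experiment above; the Laplace noise and ε = 1 per query are assumptions, since the notebook's actual noise parameters aren't known here.

```python
import random
import statistics

TRUE_COUNT = 451       # real value from the experiment above
EPSILON = 1.0          # assumed per-query epsilon (not from the source)
SCALE = 1.0 / EPSILON  # Laplace scale for a count with sensitivity 1


def noisy_count(rng: random.Random) -> float:
    # Fresh Laplace noise on every call: no caching, no budget accounting.
    return TRUE_COUNT + rng.expovariate(1.0 / SCALE) - rng.expovariate(1.0 / SCALE)


def averaging_attack(n_queries: int, rng: random.Random) -> float:
    # Average many independent answers to the "same" query.
    return statistics.fmean(noisy_count(rng) for _ in range(n_queries))


rng = random.Random(0)
for n in (1, 10, 1000):
    estimate = averaging_attack(n, rng)
    # Under sequential composition, n queries at EPSILON each cost n * EPSILON in total.
    print(f"n={n:5d}  estimate={estimate:8.2f}  total epsilon spent={n * EPSILON}")
```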

Note: I'm actively trying to attack this, but the problems above are serious even in the "trusted" use case.

Changing aggregation values based on some other (private) info or doing multiple aggregations per query is natural & useful! Well-meaning analysts will do that!

You know who else will do that? The library authors themselves 😃

To compute AVG, the SQL rewriter simply divides SUM by COUNT… which makes sense, but *only if you split the privacy budget*! Otherwise, you're underestimating ε by a factor of 2.
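A minimal sketch of the budget-splitting version in Python: spend ε/2 on a noisy SUM and ε/2 on a noisy COUNT, so that by sequential composition the ratio costs ε in total. It assumes values clamped to [0, upper] and one row per user, so the SUM has sensitivity `upper` and the COUNT has sensitivity 1. The helper names and the choice of Laplace noise are illustrative, not how WhiteNoise's rewriter is written.

```python
import random


def laplace(scale: float, rng: random.Random) -> float:
    # The difference of two exponentials with mean `scale` is Laplace(0, scale).
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_average(values, upper: float, epsilon: float, rng: random.Random) -> float:
    """AVG = noisy SUM / noisy COUNT, splitting epsilon in half between the two."""
    clamped = [min(max(v, 0.0), upper) for v in values]
    eps_sum = eps_count = epsilon / 2.0  # the split: total cost is eps_sum + eps_count
    noisy_sum = sum(clamped) + laplace(upper / eps_sum, rng)      # sensitivity: upper
    noisy_count = len(clamped) + laplace(1.0 / eps_count, rng)    # sensitivity: 1
    # Guard against a tiny or negative noisy denominator (a simple, lossy choice).
    return noisy_sum / max(noisy_count, 1.0)


rng = random.Random(5)
ages = [rng.randint(18, 90) for _ in range(1000)]
print(dp_average(ages, upper=100.0, epsilon=1.0, rng=rng))
```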

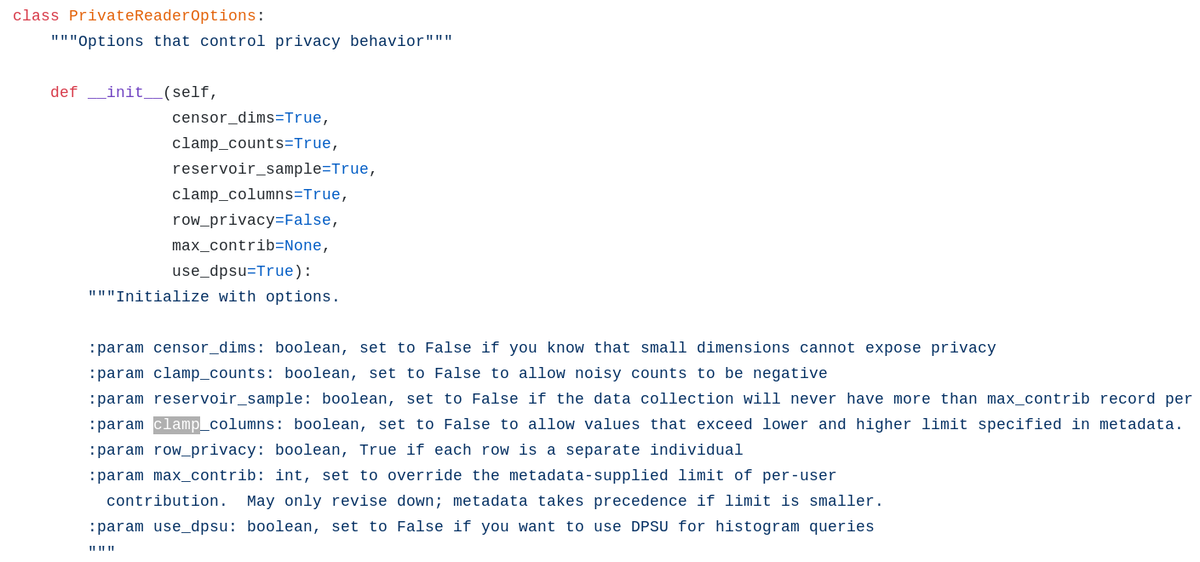

Digging into the code (to understand what led to the bugs above) does not spark joy.

Look at this list of options. I count 3 that basically throw privacy guarantees out of the window if set, and one that duplicates what's in the dataset metadata config.

"censors_dims" is False? A user with a rare value for one of their attributes can get it leaked.

"reservoir_sample" is False? A user with many contributions isn't protected.

"clamp_columns" is False? You might leak the data of all users with outlier values.
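A rough Python sketch of what two of these protections are meant to do, assuming their behavior matches their names (the helper names are hypothetical, not WhiteNoise's): `reservoir_sample` bounds each user's contributions by keeping a uniform random sample of at most `max_rows` of their rows, and `clamp_columns` clamps values to a fixed range. The `censors_dims` protection presumably corresponds to the thresholding of rare GROUP BY keys seen earlier.

```python
import random
from collections import defaultdict


def reservoir_sample(items, k: int, rng: random.Random) -> list:
    """Uniformly sample at most k items from a stream (Algorithm R)."""
    reservoir = []
    for i, item in enumerate(items):
        if i < k:
            reservoir.append(item)
        else:
            j = rng.randint(0, i)  # inclusive on both ends
            if j < k:
                reservoir[j] = item
    return reservoir


def bound_contributions(rows, max_rows: int, rng: random.Random) -> list:
    """Keep at most max_rows rows per user ID."""
    by_user = defaultdict(list)
    for user_id, value in rows:
        by_user[user_id].append((user_id, value))
    bounded = []
    for user_rows in by_user.values():
        bounded.extend(reservoir_sample(user_rows, max_rows, rng))
    return bounded


def clamp_values(rows, lower: float, upper: float) -> list:
    """Clamp each value to [lower, upper] so outliers cannot dominate a SUM."""
    return [(user_id, min(max(value, lower), upper)) for user_id, value in rows]


rng = random.Random(0)
# User 1 has 100 duplicated rows with an outlier income; user 2 has one row.
rows = [(1, 10_000_000.0)] * 100 + [(2, 42_000.0)]
safe = clamp_values(bound_contributions(rows, max_rows=1, rng=rng), 0.0, 200_000.0)
print(safe)  # [(1, 200000.0), (2, 42000.0)]
```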

Strongly held opinion: a privacy library should not have a "no privacy" option.

If you really need one, it should be named something super explicit like "danger_breaks_privacy_do_not_use__clamp_columns" instead of just "clamp_columns".

But you probably really don't need one.

Argh, I can't look at this code for more than a few minutes without finding more scary things in it.

Some of it is hard to describe from the outside, like the fact that the formula to compute the threshold is inconsistent with the type of noise used.
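To illustrate why the threshold must match the noise: the probability that a single-user group crosses the threshold is a tail probability of the noise distribution, and Laplace and Gaussian tails differ a lot. This Python sketch assumes a group with true count 1, one group per user, sensitivity 1, and the classical Gaussian calibration σ = √(2 ln(1.25/δ))/ε (proven only for ε < 1). None of it is taken from WhiteNoise's code, and the function names are hypothetical.

```python
import math
from statistics import NormalDist


def laplace_tail_threshold(scale: float, delta: float) -> float:
    # Solve P(1 + Laplace(scale) > tau) = 0.5 * exp(-(tau - 1) / scale) = delta.
    return 1.0 + scale * math.log(1.0 / (2.0 * delta))


def gaussian_tail_threshold(sigma: float, delta: float) -> float:
    # Solve P(1 + N(0, sigma^2) > tau) = delta, i.e. tau = 1 + sigma * Phi^{-1}(1 - delta).
    return 1.0 + sigma * NormalDist().inv_cdf(1.0 - delta)


def classical_gaussian_sigma(epsilon: float, delta: float) -> float:
    # Classical Gaussian mechanism calibration for L2 sensitivity 1 (valid for epsilon < 1).
    return math.sqrt(2.0 * math.log(1.25 / delta)) / epsilon


def release_probability_laplace(tau: float, scale: float) -> float:
    # Probability that a count of 1 plus Laplace noise exceeds tau (for tau >= 1).
    return 0.5 * math.exp(-(tau - 1.0) / scale)


def release_probability_gaussian(tau: float, sigma: float) -> float:
    # Probability that a count of 1 plus Gaussian noise exceeds tau.
    return 1.0 - NormalDist(mu=1.0, sigma=sigma).cdf(tau)


epsilon, delta = 0.5, 1e-5
sigma = classical_gaussian_sigma(epsilon, delta)
tau_laplace = laplace_tail_threshold(1.0 / epsilon, delta)
tau_gauss = gaussian_tail_threshold(sigma, delta)
print(f"Laplace-derived tau={tau_laplace:.2f}, Gaussian-derived tau={tau_gauss:.2f}")
print(f"P(release) with Gaussian noise at the Laplace tau: "
      f"{release_probability_gaussian(tau_laplace, sigma):.2e}")
```

With these parameters, a Laplace-derived threshold applied to Gaussian noise lets single-user groups through far more often than δ.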

Another puzzling thing: OpenDP is supposed to provide fundamental building blocks from which you build complex tools.

But the SQL rewriter only uses the plain Gaussian mechanism from these building blocks. All the rest of the privacy logic is re-implemented.

Just to make sure you don't miss it: plz also read this thread on the benefits of open-sourcing things early, and on why it's understandable that problems exist in code like this. https://twitter.com/TedOnPrivacy/status/1265545408446631940
