2/ Nvidia announced A100 this morning and you can read about it from any number of outlets, e.g. https://www.nextplatform.com/2020/05/14/nvidia-unifies-ai-compute-with-ampere-gpu/

3/ Nvidia, as usual, have themselves published a great set of detailed blogs about the architecture and specs, really diving into the approaches they've taken and how they might impact workloads. https://devblogs.nvidia.com/nvidia-ampere-architecture-in-depth/

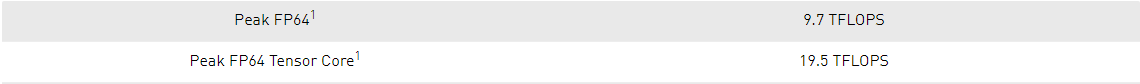

4/ There's a couple of specific lines in the overview that I think are worth talking about as an #HPC community. Here they are:

5/ A100's peak DP performance of 9.7 TF is a 30% uplift from V100's 7.5 TF, however this is coming at a socket-level power uplift of 33%. This is a 7nm chip versus V100's 12nm. So where did all the new capability go?

Specialization.
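
As a quick sanity check on the numbers in 5/, here is a minimal Python sketch of the arithmetic. It uses only the figures quoted above (9.7 TF, 7.5 TF, and a 33% power uplift taken as stated); the variable names are just for illustration.

```python
# Figures quoted in the thread; the 33% socket power uplift is taken as stated.
a100_dp_tf = 9.7
v100_dp_tf = 7.5
power_ratio = 1.33  # A100 socket power relative to V100

# Ratio of peak (non-Tensor-Core) FP64 throughput, A100 over V100.
dp_ratio = a100_dp_tf / v100_dp_tf

# Ratio of peak FP64 throughput per watt, A100 over V100.
perf_per_watt_ratio = dp_ratio / power_ratio

print(f"peak DP uplift:   {dp_ratio - 1:+.1%}")
print(f"peak DP per watt: {perf_per_watt_ratio - 1:+.1%}")
```

On these numbers, standard peak DP per watt comes out roughly flat (slightly negative) across the generation, which is what prompts the question of where the new capability went.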


6/ A100 obviously has a ton of new interesting features (e.g. acceleration for structured sparsity, TF32) that are focused on delivering boosts to common data and compute patterns. The one I want to call out here is the expansion of the "Tensor Core" all the way to FP64.

7/ The new DP Tensor Core uses a "DMMA" operation to multiply two 2x4 FP64 matrix panels in a single instruction. Using this enhanced throughput, they produce a second performance number: 19.5 TF

Nvidia's math libraries will make use of this heavily. https://blogs.nvidia.com/blog/2020/05/14/double-precision-tensor-cores/


8/ Those familiar with microarchitecture will understand that this is a far more efficient way to do a matrix calculation than individual FMAs - you skip all the control flow of instruction issue while also avoiding the need for register writes/reads of all the intermediate sums.
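
To put a rough number on the instruction-issue argument in 8/, here is a small Python sketch that counts operations for a dense matrix product done with scalar FMAs versus tile-level multiply-accumulates. The tile shape `(8, 8, 4)` is an illustrative assumption chosen for the example, not a statement about how the hardware issues DMMA, and both function names are hypothetical.

```python
def scalar_fma_count(M, N, K):
    """Scalar fused multiply-adds needed for C[M,N] += A[M,K] @ B[K,N]."""
    return M * N * K


def tile_mma_count(M, N, K, tile=(8, 8, 4)):
    """Tile-level multiply-accumulate operations for the same product.

    `tile` = (m, n, k) is an illustrative assumption, not a hardware spec.
    Partial tiles at the edges are rounded up to whole tiles.
    """
    m, n, k = tile

    def ceil_div(a, b):
        return -(-a // b)

    return ceil_div(M, m) * ceil_div(N, n) * ceil_div(K, k)


M = N = K = 1024
fmas = scalar_fma_count(M, N, K)
mmas = tile_mma_count(M, N, K)
print(f"scalar FMAs: {fmas}")
print(f"tile MMAs:   {mmas}")
print(f"scalar FMAs replaced per tile op: {fmas // mmas}")
```

Each tile operation stands in for m*n*k scalar FMAs, and the partial sums inside a tile never have to be written back to and re-read from registers between them. That is the saving the tweet describes, independent of the exact tile shape.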

9/ What matters more for things like #Top500 is that this DMMA acceleration works for HPL, but it (further) narrows the application space that can take advantage of that level of FLOPS. That narrowing is not something the #Top500 metrics can currently capture.

10/ This is the nature of "late-Moore" specialization: resources are applied to subsets of workloads (in this case, dense matrix math) to accelerate them, while having limited value to others.
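
One way to see the "limited value to others" point is Amdahl's law. The sketch below is a minimal Python illustration: it assumes the dense-matrix part of an application gets the full 19.5 / 9.7 peak ratio quoted earlier and everything else gets nothing. The `dense_fraction` values are hypothetical, not measurements of any real code.

```python
def overall_speedup(dense_fraction, kernel_speedup):
    """Amdahl's law: only `dense_fraction` of the runtime is sped up."""
    return 1.0 / ((1.0 - dense_fraction) + dense_fraction / kernel_speedup)


# DMMA peak over standard FP64 peak, using the figures quoted in the thread.
kernel_speedup = 19.5 / 9.7

# Hypothetical shares of runtime spent in dense FP64 matrix math.
for dense_fraction in (0.0, 0.1, 0.5, 0.9, 1.0):
    s = overall_speedup(dense_fraction, kernel_speedup)
    print(f"{dense_fraction:.0%} dense matrix math -> {s:.2f}x overall")
```

An HPL-like workload sits near the 100% end and sees close to the full 2x; an application that spends little time in dense matrix math sees almost none of it, even though the headline FLOPS number is the same for both.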

11/ It is important for #HPC - as a community - to be more detail-oriented and to keep track of what is being accelerated and what is being left behind as we move into more of this specialization. We really need a broader set of metrics and benchmarks to do that.

12/ P.S. a lot of people have leapt to thinking about "whole-chip" specialization in this era. A100 (and Intel x86 extensions, and SVE...) shows there's still a ton of room for applying specialization within existing cores and architectures in a way that makes adoption easy.
