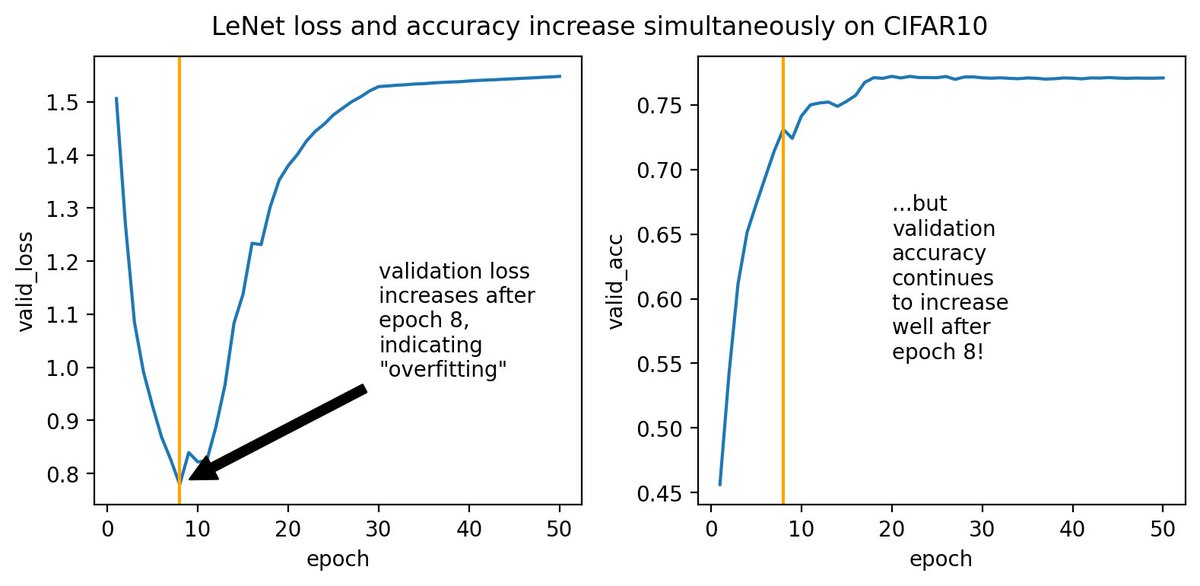

1/4 WTF guys I think I broke ML: loss & acc 🡅 together! reproduced here https://github.com/thegregyang/LossUpAccUp. Somehow good accuracy is achieved *in spite of* classic generalizn theory (wrt the loss) - What's goin on? @roydanroy @prfsanjeevarora @ShamKakade6 @BachFrancis @SebastienBubeck

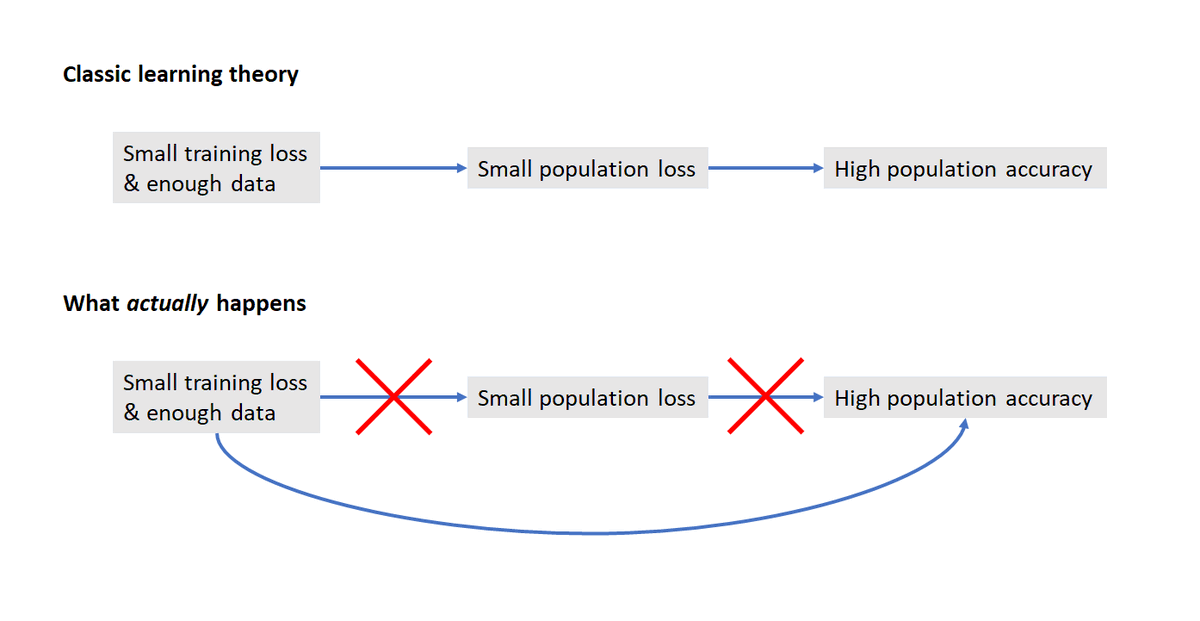

2/4 More precisely, classic theory goes like this "when we train using xent loss, we get good pop loss by early stopping b4 valid loss 🡅. B/c xent is a good proxy for 0-1 loss, we expect good pop accuracy from this procedure." But here we got good acc w/o getting good pop loss
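
A toy sketch of how xent loss and acc can 🡅 together. The probabilities below are made up for illustration (not taken from the linked repo), and `mean_xent` / `accuracy` are hypothetical helper names: each number is the probability a binary classifier puts on the true class of one validation example.

```python
import math

def mean_xent(p_true):
    """Mean cross-entropy, given the predicted probability of the true class per example."""
    return sum(-math.log(p) for p in p_true) / len(p_true)

def accuracy(p_true):
    """Binary case: an example counts as correct when the true class gets probability > 0.5."""
    return sum(p > 0.5 for p in p_true) / len(p_true)

# Made-up validation predictions at two checkpoints (assumption, for illustration only).
early = [0.6, 0.6, 0.4, 0.4]    # unsure everywhere, half right
late = [0.9, 0.9, 0.9, 0.01]    # confident and mostly right, but one very confident mistake

for name, p in [("early", early), ("late", late)]:
    print(f"{name}: xent={mean_xent(p):.3f} acc={accuracy(p):.2f}")
```

Here xent goes from about 0.71 to about 1.23 while acc goes from 0.50 to 0.75: one very confident wrong prediction dominates the mean loss, but 0-1 error only counts it once.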

3/4 Practically, this is no biggie if we can track some quality metric like accuracy. But what about e.g. language modeling that only tracks loss/ppl? How do we know the NN doesn't learn great language long after val loss blows up? @srush_nlp @ilyasut @nlpnoah @colinraffel @kchonyc

4/4 Disclaimer: others have talked about this phenon b4 me (e.g. https://stackoverflow.com/questions/40910857/how-to-interpret-increase-in-both-loss-and-accuracy https://arxiv.org/abs/1706.04599 ) but I thought to make the few points above I didn't see made elsewhere. Tweet at me or file a pull request to https://github.com/thegregyang/LossUpAccUp if you have thought about them!
