I just came across this! Unfortunately, the mechanism proposed is not incentive compatible. Proof to follow. 1/N https://twitter.com/TroublesomeF/status/1213014750658883585

@ATabarrok proposes that "the NYTimes should bet a portion of Silver’s salary, at the odds implied by Silver’s model, randomly choosing which side of the bet to take, only revealing to Silver the bet and its outcome after the election is over". 2/N

He writes, "A blind trust bet creates incentives for Silver to be disinterested in the outcome but very interested in the accuracy of the forecast." Is this true? 3/N

If Silver believes that an event happens with probability p, a fair bet wins (1-p)/p if the event happens and loses 1 otherwise. Suppose we ask Silver to report his belief, offer the bet at the odds implied by his report, and randomize which side of the bet he takes. 4/N
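The fair-bet arithmetic is easy to check with exact fractions. This is a minimal sketch: `bet_payoff` and `expected_payoff` are hypothetical helper names, the stake is normalized to 1, and the "no" side is assumed to be the exact counterparty of the "yes" side.

```python
from fractions import Fraction


def bet_payoff(x, event_happens, takes_yes_side):
    """Net payoff of a unit bet at the odds implied by a reported probability x.

    The "yes" side wins (1 - x) / x if the event happens and loses 1 otherwise;
    the "no" side is the counterparty and receives the negative of that.
    """
    yes_payoff = (1 - x) / x if event_happens else -1
    return yes_payoff if takes_yes_side else -yes_payoff


def expected_payoff(x, p, takes_yes_side):
    """Expected net payoff when the event happens with probability p."""
    return (p * bet_payoff(x, True, takes_yes_side)
            + (1 - p) * bet_payoff(x, False, takes_yes_side))


p = Fraction(3, 10)  # true probability of the event
# At the fair odds (report x = p) both sides have expected value zero.
print(expected_payoff(p, p, True), expected_payoff(p, p, False))  # 0 0
```

Because the two sides are mirror images, a coin flip between them leaves Silver with zero expected payoff for any report x, so a risk-neutral Silver would be indifferent among all reports.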

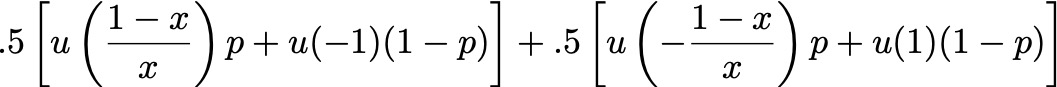

Silver then faces the following objective function, where x is his report, p is his true belief, and u(.) is his utility function. Ideally, we would like this to be maximized by reporting x = p. 5/N
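The objective can also be evaluated numerically. The sketch below assumes u is applied to the bet's net payoff (ignoring the rest of the salary) and that a fair coin picks the side; `objective` and the CARA utility `cara` are illustrative choices, not taken from the thread. With this risk-averse u, the truthful report is not the maximizer.

```python
import math


def objective(x, p, u):
    """Expected utility of reporting x when the true belief is p.

    A fair coin picks the side. The "yes" side wins (1 - x) / x if the event
    happens and loses 1 otherwise; the "no" side gets the negatives.
    Assumes u is applied to the bet's net payoff only.
    """
    r = (1 - x) / x
    yes_side = p * u(r) + (1 - p) * u(-1)
    no_side = p * u(-r) + (1 - p) * u(1)
    return 0.5 * yes_side + 0.5 * no_side


def cara(z):
    # Hypothetical risk-averse (CARA) utility; defined for losses as well as gains.
    return -math.exp(-0.5 * z)


p = 0.3  # Silver's true belief
grid = [i / 100 for i in range(5, 101)]  # candidate reports x in [0.05, 1]
best = max(grid, key=lambda x: objective(x, p, cara))
print(best)  # 1.0, not the truthful 0.3
```

Here the risk-averse Silver pushes his report to x = 1, which drives the event-contingent payoff (1 - x)/x to zero on both sides.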

Dropping terms that don't depend on x, this is equivalent to maximizing this objective function. As you can see, this doesn't depend on p at all! 6/N
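A sketch of the derivation, assuming u is applied to the bet's net payoff and a fair coin picks Silver's side (V and W are our labels, and the form may differ from the thread's own equations):

$$
\begin{aligned}
V(x;p) &= \tfrac{1}{2}\Big[p\,u\big(\tfrac{1-x}{x}\big) + (1-p)\,u(-1)\Big]
        + \tfrac{1}{2}\Big[p\,u\big(-\tfrac{1-x}{x}\big) + (1-p)\,u(1)\Big] \\
       &= \tfrac{p}{2}\,W(x) + \tfrac{1-p}{2}\big[u(-1) + u(1)\big],
\qquad W(x) = u\big(\tfrac{1-x}{x}\big) + u\big(-\tfrac{1-x}{x}\big).
\end{aligned}
$$

For p > 0 the second term does not depend on x and p/2 is a positive factor, so maximizing V over x is the same as maximizing W, which contains no p.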

Proposition: If x is an optimal report for some belief p > 0, then x is an optimal report for all beliefs. Alas, regardless of his utility function u, Silver has no incentive to submit accurate forecasts in the proposed mechanism. 7/N
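A quick numerical check of the proposition, assuming u is applied to the bet's net payoff and a fair coin picks Silver's side. The three utility functions are hypothetical examples; for each one, the best report on a grid is the same for every belief p.

```python
import math


def objective(x, p, u):
    # Expected utility of report x under belief p; a fair coin picks the side.
    r = (1 - x) / x
    return 0.5 * (p * u(r) + (1 - p) * u(-1)) + 0.5 * (p * u(-r) + (1 - p) * u(1))


# Hypothetical utility functions over the bet's net payoff.
utilities = {
    "risk-averse (CARA)": lambda z: -math.exp(-0.5 * z),
    "risk-loving": lambda z: math.exp(0.5 * z),
    "linear gains, quadratic losses": lambda z: z if z >= 0 else -z * z,
}

grid = [i / 100 for i in range(5, 101)]  # candidate reports x in [0.05, 1]
beliefs = [0.1, 0.3, 0.5, 0.7, 0.9]

optimal = {}
for name, u in utilities.items():
    # Best report on the grid for each belief p.
    optimal[name] = {p: max(grid, key=lambda x: objective(x, p, u)) for p in beliefs}
    # One distinct optimum per utility: the belief p never changes the report.
    print(name, sorted(set(optimal[name].values())))
```

A risk-neutral (linear) u is left out because every report ties for it, which only reinforces the point.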

Incentivizing accurate reports of beliefs is tricky! Experimental economists have developed incentive-compatible methods to elicit beliefs. @pauljhealy has a great summary here: https://healy.econ.ohio-state.edu/papers/Healy-ExplainingBDM_Note.pdf 8/8
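One such method, sketched here as an illustration rather than as the mechanism in Healy's note, is a binarized quadratic scoring rule: the report only changes the probability of winning a fixed prize, so any expected-utility maximizer who prefers the prize to nothing wants to maximize that probability, which happens at x = p. The function names are hypothetical.

```python
def win_probability(x, event_happens):
    """Chance of winning a fixed prize under a binarized quadratic scoring rule."""
    outcome = 1.0 if event_happens else 0.0
    return 1.0 - (outcome - x) ** 2


def expected_win_probability(x, p):
    # Overall chance of the prize when the event happens with probability p.
    return p * win_probability(x, True) + (1 - p) * win_probability(x, False)


p = 0.3  # true belief
grid = [i / 100 for i in range(0, 101)]  # candidate reports x in [0, 1]
best = max(grid, key=lambda x: expected_win_probability(x, p))
print(best)  # 0.3: the truthful report wins
```

The expected win probability simplifies to 1 - p + 2px - x², which peaks exactly at x = p whatever the shape of u.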
