A few of us at the @mrc_ieu have a preprint discussing collider bias with a focus on coronavirus research. We're of the opinion that collider bias is a big banana skin that a lot of studies may be stepping on. A brief thread (1/20)...

https://www.medrxiv.org/content/10.1101/2020.05.04.20090506v1 #COVID19 #epitwitter

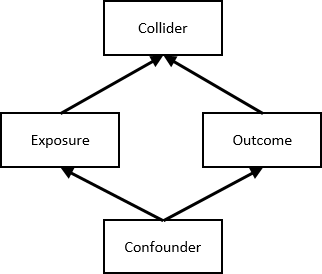

1st, collider bias is unintuitive. A collider is a variable that is influenced by two other variables of interest. Kinda the opposite of a confounder. While we want to adjust for a confounder (to break the induced association between variables), we don't want to adjust for a collider, because adjusting for it induces an association rather than removing one.

On the face of it this seems easy to deal with: don't condition on a collider in your model = problem solved.

Sadly, it's not as easy as this. The act of using a dataset can induce collider bias if the variables of interest influence being in the dataset. Confused? An example...

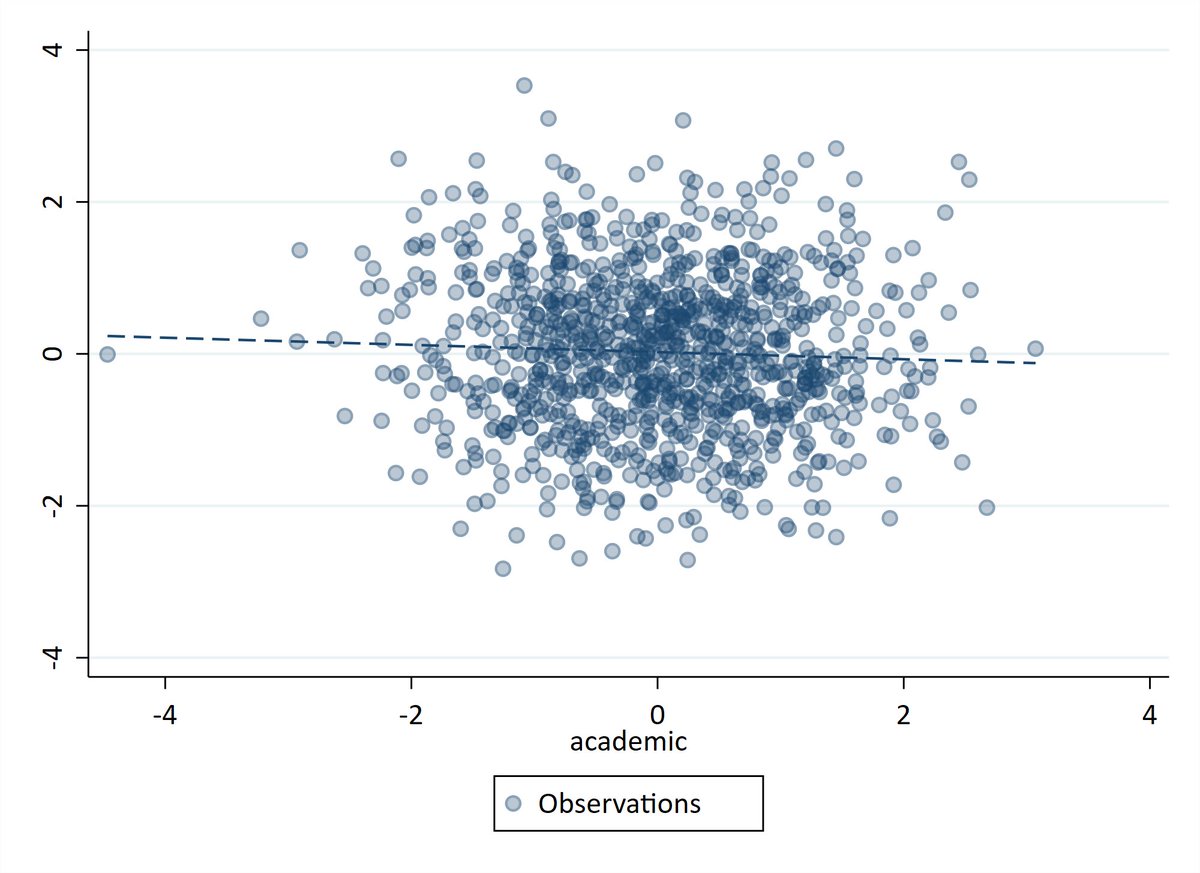

Suppose that sporting ability and academic ability are both normally distributed and independent in the population. That is, sporting ability has no bearing whatsoever on academic ability, and vice versa.

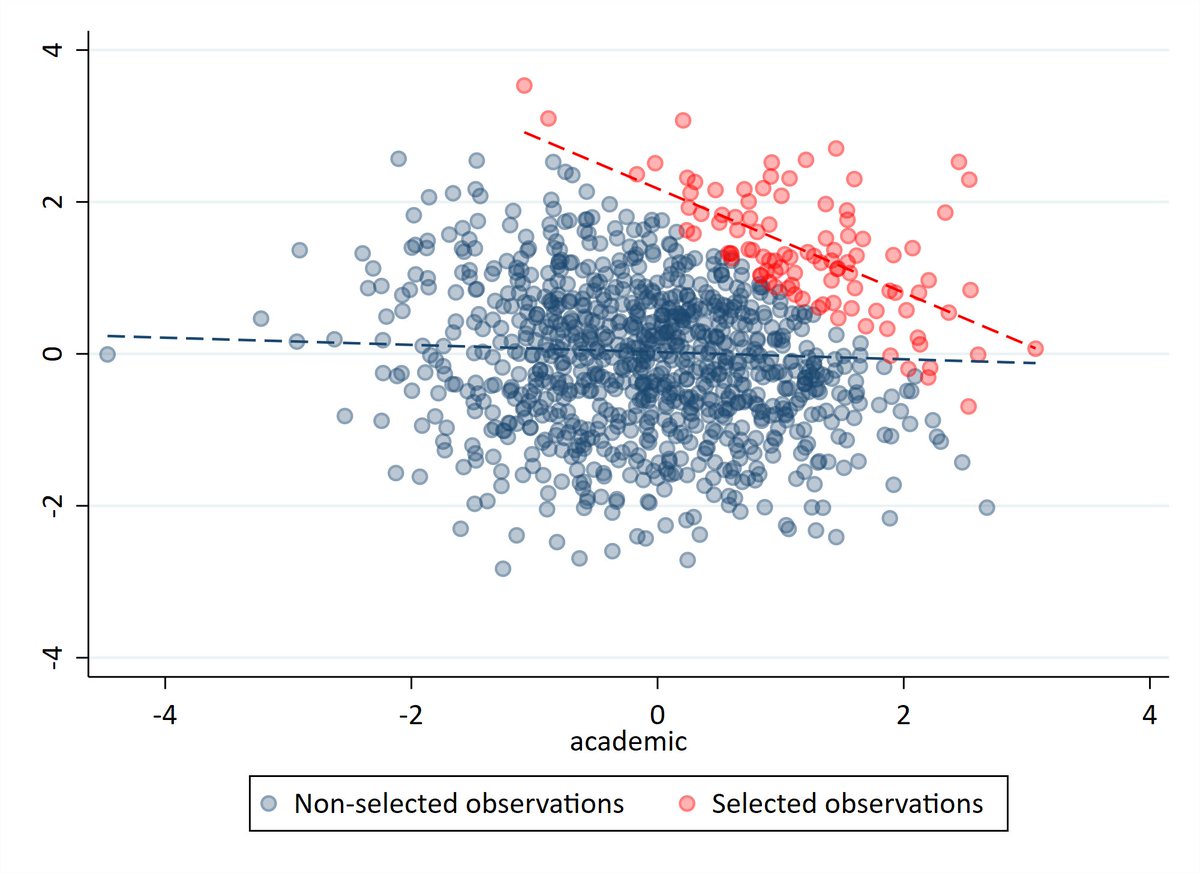

Let's now suppose that a prestigious, highly selective school chooses to enrol children who have high sporting or academic ability. For ease, let's just say that it has sufficient capacity to enrol the top 10% of pupils (combined sporting and academic scores) from the population.

Because sporting and academic ability are independent in the population and we have selected on the top of these distributions, enrolled pupils are likely to be EITHER sporting OR academic. This induces a negative correlation in our school despite none existing in the population.

That is, if we analyse sporting and academic ability in the school, we will conclude that the two are inversely related. If you see a pupil in the school's uniform swing to kick a football, miss and fall on their arse, then you can reliably conclude that they must be really smart.

The point is that we have conditioned on non-random selection into the sample. That is, collider bias is induced by selection into the dataset. This is an extreme example, but it nicely demonstrates how observed associations in-sample may not hold more broadly/out of sample.
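The school example is easy to check with a quick simulation. This is only a minimal sketch, not code from the preprint: it assumes standard normal abilities, a population of 100,000, and enrolment of the top 10% on the simple sum of the two scores. The function name `simulate_school` and all parameter values are made up for illustration.

```python
import numpy as np


def simulate_school(n=100_000, top_fraction=0.10, seed=0):
    """Correlation of two independent abilities, before and after selection."""
    rng = np.random.default_rng(seed)

    # Independent abilities in the population (assumed standard normal).
    sport = rng.standard_normal(n)
    academic = rng.standard_normal(n)

    # The school enrols the top fraction on the combined score.
    combined = sport + academic
    cutoff = np.quantile(combined, 1 - top_fraction)
    enrolled = combined >= cutoff

    r_population = np.corrcoef(sport, academic)[0, 1]
    r_school = np.corrcoef(sport[enrolled], academic[enrolled])[0, 1]
    return r_population, r_school


r_population, r_school = simulate_school()
print(f"population correlation: {r_population:.2f}")
print(f"in-school correlation:  {r_school:.2f}")
```

Under these assumptions the population correlation sits close to zero, while the in-school correlation comes out strongly negative (around -0.7), even though nothing links the two abilities.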

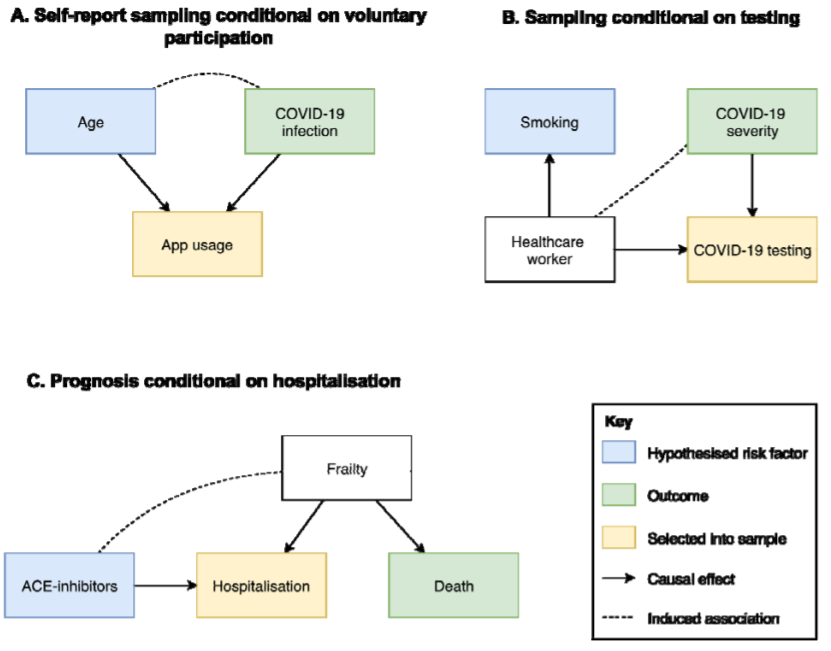

How does this relate to coronavirus? Well, many of the current COVID-19 datasets rely on non-random participation with strong selection pressures. We present a few different examples in the preprint to highlight this (there are many more!).

These selection pressures can throw up some weird associations and may be partly responsible for some of those already observed such as smoking appearing protective (https://www.medrxiv.org/content/10.1101/2020.05.06.20092999v1; https://www.qeios.com/read/WPP19W.3) and ACE-inhibitors appearing harmful (https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3583469).

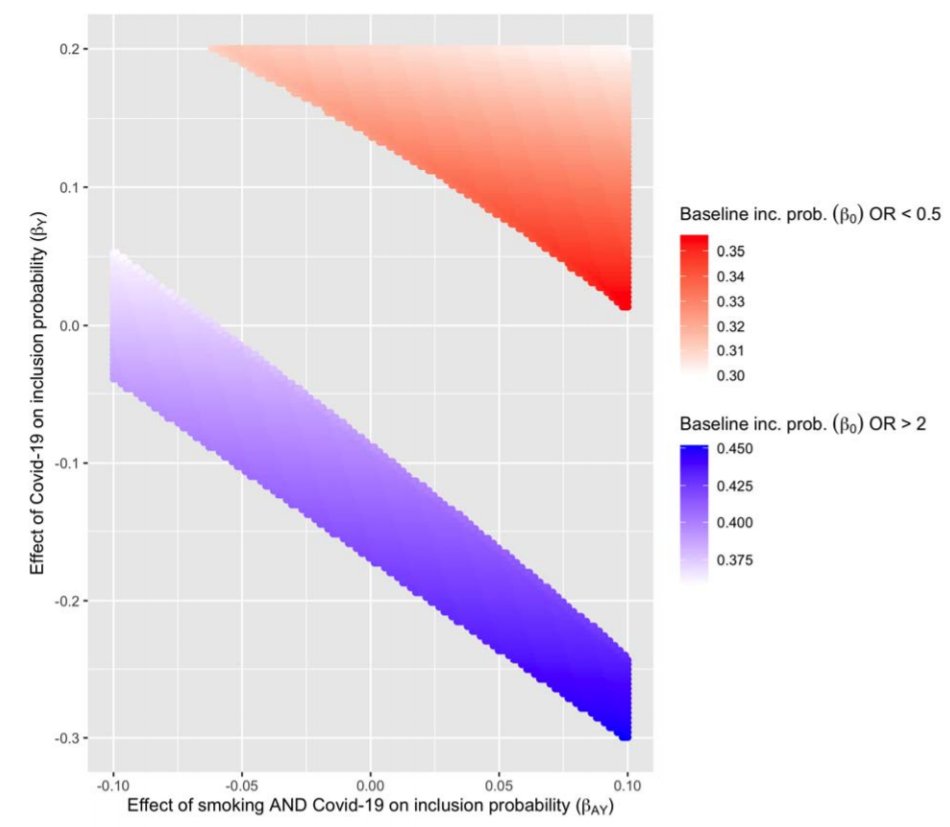

We ran some simulations under a similar scenario to one of the smoking papers demonstrating how 2-fold protective/risk effects of smoking on COVID-19 infection could appear due to selection (no true underlying causal relationship).

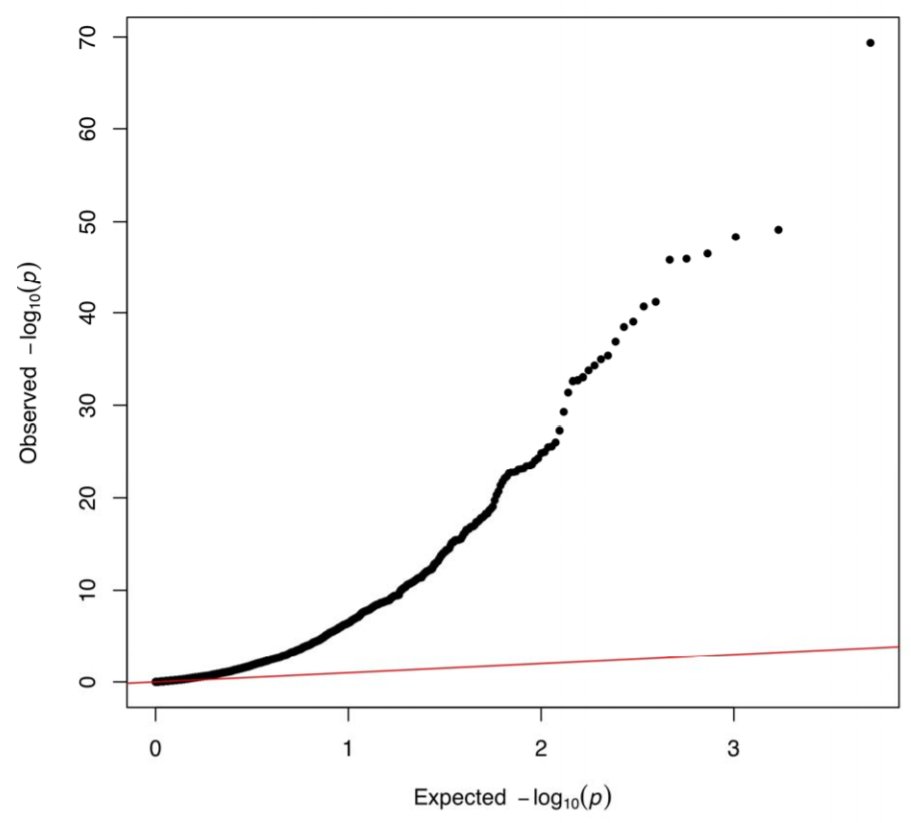
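To give a flavour of how selection alone can manufacture an apparent effect, here is a toy simulation. It is a sketch under made-up assumptions, not the simulation from the preprint: smoking and infection are generated independently, and both raise the chance of being tested through a hypothetical logistic selection model. The prevalences (25% smokers, 10% infected) and the coefficients (-3.0, 1.5, 2.0) are illustrative only.

```python
import numpy as np


def odds_ratio(exposure, outcome):
    """Odds ratio from two boolean arrays via the 2x2 table."""
    a = np.sum(exposure & outcome)
    b = np.sum(exposure & ~outcome)
    c = np.sum(~exposure & outcome)
    d = np.sum(~exposure & ~outcome)
    return (a * d) / (b * c)


def simulate_testing(n=1_000_000, seed=1):
    rng = np.random.default_rng(seed)

    # No causal link: smoking and infection are drawn independently.
    smoker = rng.random(n) < 0.25
    infected = rng.random(n) < 0.10

    # Hypothetical selection model: both variables make testing more likely.
    log_odds = -3.0 + 1.5 * smoker + 2.0 * infected
    p_tested = 1 / (1 + np.exp(-log_odds))
    tested = rng.random(n) < p_tested

    or_population = odds_ratio(smoker, infected)
    or_tested = odds_ratio(smoker[tested], infected[tested])
    return or_population, or_tested


or_population, or_tested = simulate_testing()
print(f"odds ratio, whole population: {or_population:.2f}")
print(f"odds ratio, tested only:      {or_tested:.2f}")
```

In the whole population the odds ratio stays near 1, as it should. Among the tested it falls well below 1, so smoking looks protective purely because of who ended up in the sample. Different selection parameters can push the apparent effect in either direction.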

We also looked at how 2,556 traits in @uk_biobank associate with being tested for COVID-19 (note this is being tested, NOT a positive test result). 32% of the traits were associated with testing (false discovery rate <0.05). The QQ plot here shows the enrichment for associations.

So in the UK Biobank, lots of things strongly associate with having received a COVID-19 test, and can therefore be expected to associate with each other within this sub-sample even where they do not in the wider cohort or the wider UK population (note the double selection here).

Are these observed associations true or the result of collider bias? Well, it's difficult to tell from the data, but there are some sensitivity analyses that can be run. These largely depend on the data available on non-participants though.
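One commonly used adjustment of this kind (not named in this thread, so treat it as an illustrative assumption) is inverse probability weighting: each sampled person is weighted by 1 / (their probability of being in the sample). The sketch below uses a toy simulation in which the selection probabilities are known by construction. In real data they would have to be estimated, which is exactly where information on non-participants comes in. All names and parameter values are hypothetical.

```python
import numpy as np


def weighted_odds_ratio(exposure, outcome, weights):
    """Odds ratio from a 2x2 table of summed weights."""
    a = weights[exposure & outcome].sum()
    b = weights[exposure & ~outcome].sum()
    c = weights[~exposure & outcome].sum()
    d = weights[~exposure & ~outcome].sum()
    return (a * d) / (b * c)


def simulate_ipw(n=1_000_000, seed=2):
    rng = np.random.default_rng(seed)

    # Independent exposure and outcome (no causal effect).
    smoker = rng.random(n) < 0.25
    infected = rng.random(n) < 0.10

    # Hypothetical selection model; known here only because we simulated it.
    p_tested = 1 / (1 + np.exp(-(-3.0 + 1.5 * smoker + 2.0 * infected)))
    tested = rng.random(n) < p_tested

    s, y, p = smoker[tested], infected[tested], p_tested[tested]
    or_naive = weighted_odds_ratio(s, y, np.ones(p.size))  # unweighted
    or_ipw = weighted_odds_ratio(s, y, 1 / p)              # reweighted
    return or_naive, or_ipw


or_naive, or_ipw = simulate_ipw()
print(f"naive odds ratio in tested sample: {or_naive:.2f}")
print(f"inverse-probability-weighted:      {or_ipw:.2f}")
```

With the true selection probabilities, the weighted odds ratio moves back towards 1. If the selection model is misspecified, or nothing is known about who was not sampled, the weights cannot be trusted and neither can the correction.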

The only way to be completely confident that collider bias doesn't underlie observed associations is to use representative population samples with random participation and attrition patterns. This is obviously unlikely, so it is really important to use sampling strategies that minimise the problems of collider bias and to do everything we can to ensure that samples are as representative and as free from strong selection pressures as possible. Beyond this, we must do our best to hold in mind the likely selection pressures when interpreting (COVID-19) data.

@Garethjgriffith also created a brilliant Shiny app (available at http://apps.mrcieu.ac.uk/ascrtain/) where you can play around with various selection parameters to look at collider bias.

So in summary, there is potential for collider bias when using highly-selected samples to investigate risk factors for COVID-19 infection/severity. It is of vital importance that associations between variables of interest are interpreted in light of this.

Results from samples that are likely not representative of the target population should be treated with caution by scientists and policy makers, as naive interpretation of results could lead to public health decisions that fail or even cause unintentional harm.

Finally, I do not claim ownership of this. It was the brainchild of @explodecomputer and a massive team effort from @Garethjgriffith, @tudballstats, @AnnieSHerbert, Giulia Mancano, @Lindsey_Pike, @ammegandchips, Tom Palmer, @mendel_random, Kate Tilling, @Luisa_Zu, @nm_davies and myself.

Any comments, criticism or suggestions would be gratefully received and extremely useful.

@ammegandchips @Lindsey_Pike and I have also tried to summarise the paper in an accessible way here:

https://ieureka.blogs.bristol.ac.uk/2020/05/10/collider-bias-why-its-difficult-to-find-risk-factors-or-effective-medications-for-covid-19-infection-and-severity/
