SSRN: https://bit.ly/2xeTFvq

Dropbox: https://bit.ly/3eTSoLe

Thread:

This paper is motivated by a problem I started thinking about as a founding member of the algorithmic pricing team at @AirbnbData: total average treatment effect (TATE) estimates from randomized experiments in online marketplaces are likely biased due to interference.

There are many reasons this may be the case: items might substitute for (or complement) one another, sellers may observe each other's behavior and mimic it, etc.

Previous studies (by @AndreyFradkin and others) have found that naive estimates overstate treatment effects by up to 2x.
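
A minimal toy market shows the substitution mechanism. This is an illustrative assumption, not the paper's model: demand is fixed, every searcher books the cheapest listing, and the treatment is a price cut. In a 50/50 Bernoulli test the treated listings capture the control listings' bookings, so the naive difference in means is large. When every listing is treated, however, total bookings do not change at all. The function names and parameters are hypothetical.

```python
import random

def simulate_bookings(prices, n_searchers, rng):
    """Each searcher books one listing, chosen uniformly among the cheapest.

    Hypothetical toy model: demand is fixed, so a price cut can only
    reallocate bookings between listings, never create new ones.
    """
    bookings = [0] * len(prices)
    lowest = min(prices)
    cheapest = [i for i, p in enumerate(prices) if p == lowest]
    for _ in range(n_searchers):
        bookings[rng.choice(cheapest)] += 1
    return bookings

def naive_vs_true(n_listings=100, n_searchers=1000, base_price=100.0,
                  discount=0.9, seed=0):
    rng = random.Random(seed)
    # Bernoulli(0.5) assignment of the price-cut treatment to listings.
    treated = [rng.random() < 0.5 for _ in range(n_listings)]
    prices = [base_price * discount if t else base_price for t in treated]
    bookings = simulate_bookings(prices, n_searchers, rng)
    t_vals = [b for b, t in zip(bookings, treated) if t]
    c_vals = [b for b, t in zip(bookings, treated) if not t]
    naive = sum(t_vals) / len(t_vals) - sum(c_vals) / len(c_vals)

    # True TATE: everyone treated vs. everyone in control (per listing).
    all_t = simulate_bookings([base_price * discount] * n_listings, n_searchers, rng)
    all_c = simulate_bookings([base_price] * n_listings, n_searchers, rng)
    true_tate = (sum(all_t) - sum(all_c)) / n_listings
    return naive, true_tate

print(naive_vs_true())
```

In this extreme toy case the true TATE is exactly zero, while the naive estimate is strictly positive. Real marketplaces sit somewhere in between, because a price cut can also create new bookings.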

There are existing solutions to this problem, but they rely on somewhat non-generalizable techniques (like randomizing at the level of well-defined product categories) or doing a lot of structural modeling (which many firms may be hesitant to do).

This paper thinks about whether or not we can generate a "network of items," where edges exist when two items substitute or complement one another. If so, we can then use network experiment designs and estimators to reduce bias in online marketplace experiments.
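
One way to operationalize a "network of items" is sketched below. The edge rule is a hypothetical assumption chosen for illustration: two listings are linked if at least `min_cooccur` searchers considered both, which serves as a rough proxy for substitutability. Clusters are then taken to be connected components. In practice, a proper graph clustering algorithm would likely replace that last step.

```python
from collections import defaultdict
from itertools import combinations

def build_item_network(consideration_sets, min_cooccur=2):
    """Link listings that co-appear in at least `min_cooccur` consideration sets."""
    counts = defaultdict(int)
    for items in consideration_sets:
        for a, b in combinations(sorted(set(items)), 2):
            counts[(a, b)] += 1
    adj = defaultdict(set)
    for (a, b), c in counts.items():
        if c >= min_cooccur:
            adj[a].add(b)
            adj[b].add(a)
    # Make sure listings with no qualifying edges still appear as nodes.
    for items in consideration_sets:
        for i in items:
            adj.setdefault(i, set())
    return dict(adj)

def connected_components(adj):
    """Map each node to a cluster id given by its connected component."""
    cluster = {}
    next_id = 0
    for start in adj:
        if start in cluster:
            continue
        stack = [start]
        cluster[start] = next_id
        while stack:
            node = stack.pop()
            for nb in adj[node]:
                if nb not in cluster:
                    cluster[nb] = next_id
                    stack.append(nb)
        next_id += 1
    return cluster
```

For example, `connected_components(build_item_network([["a", "b"], ["a", "b"], ["c"]]))` puts `a` and `b` in one cluster and `c` in another.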

We use scraped Airbnb data (courtesy of Tom Slee) to create a simulation of searchers with heterogeneous preferences visiting Airbnb and trying to make bookings for one calendar night.

We then use this simulation to measure the true TATE of different pricing-related treatments.
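
A stripped-down version of this kind of simulation is sketched below. Every modeling choice here is a hypothetical stand-in for the paper's setup. Each listing has a quality and a price, and each searcher has their own price sensitivity. Each searcher books at most one listing for the night via a multinomial logit choice that includes an outside option (not booking). The true TATE is the difference in average bookings per listing between a world where every listing gets a hypothetical 10% price cut and one where none does.

```python
import math
import random

def expected_bookings(prices, qualities, sensitivities):
    """Expected bookings per listing under a multinomial logit with an outside option.

    Searcher i gets utility quality_j - sensitivity_i * log(price_j) from
    listing j and utility 0 from not booking. Capacity limits are ignored.
    """
    totals = [0.0] * len(prices)
    for beta in sensitivities:
        weights = [math.exp(q - beta * math.log(p)) for q, p in zip(qualities, prices)]
        denom = 1.0 + sum(weights)  # the 1.0 term is the outside option
        for j, w in enumerate(weights):
            totals[j] += w / denom
    return totals

def true_tate(prices, qualities, sensitivities, discount=0.9):
    """Global-treatment minus global-control average bookings per listing."""
    treated = expected_bookings([p * discount for p in prices], qualities, sensitivities)
    control = expected_bookings(prices, qualities, sensitivities)
    return (sum(treated) - sum(control)) / len(prices)

# Hypothetical market: 50 listings and 2,000 searchers with heterogeneous preferences.
rng = random.Random(42)
prices = [rng.uniform(50, 300) for _ in range(50)]
qualities = [rng.gauss(3.0, 1.0) for _ in range(50)]
sensitivities = [rng.uniform(0.5, 2.0) for _ in range(2000)]
print(true_tate(prices, qualities, sensitivities))
```

Using expected choice probabilities rather than sampled bookings keeps the "true" TATE free of simulation noise, which makes it a stable benchmark for the experiment estimates.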

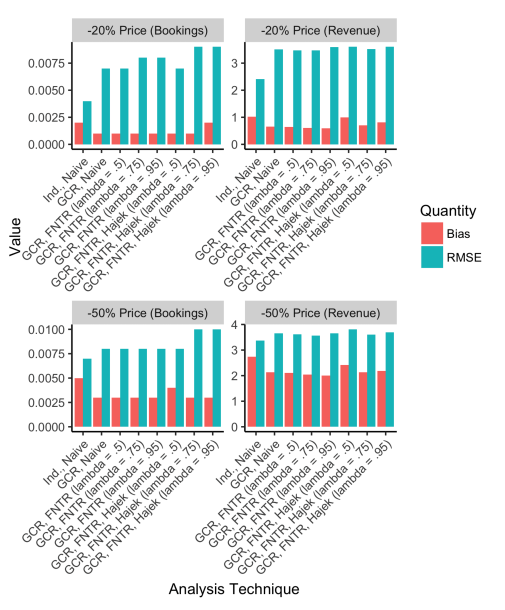

Once we have estimates of the "true" TATE, we can create a network of listings and simulate experiments to measure the efficacy of a few different network experimentation methods:

1) Graph cluster randomization (GCR)

2) Exposure modeling

3) The Hajek estimator

relative to a baseline Bernoulli experiment.
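
The three ingredients can be sketched together as a generic illustration of these techniques. This is not the paper's code. It assumes an undirected item network `adj` and a cluster assignment `cluster`, both hypothetical inputs.

- Under GCR, each whole cluster is treated with probability `p`.
- Under exposure modeling, a listing counts as exposed to full treatment (or full control) only if it and all of its neighbors share that assignment.
- Each exposure-condition mean is then estimated with inverse-probability weights, either Horvitz-Thompson style or normalized (the Hajek estimator).

Under GCR, the probability that a listing's whole neighborhood is treated is `p` raised to the number of distinct clusters that neighborhood touches.

```python
import random

def gcr_assign(cluster, p, rng: random.Random):
    """Graph cluster randomization: treat whole clusters with probability p."""
    cluster_treated = {c: rng.random() < p for c in set(cluster.values())}
    return {node: cluster_treated[c] for node, c in cluster.items()}

def exposure_estimates(adj, cluster, z, y, p):
    """Horvitz-Thompson and Hajek TATE estimates under full-neighborhood exposure.

    adj: node -> set of neighbors; cluster: node -> cluster id;
    z: node -> bool treatment; y: node -> observed outcome.
    """
    ht_t = ht_c = 0.0   # inverse-probability-weighted outcome sums
    w_t = w_c = 0.0     # sums of weights, used for Hajek normalization
    n = len(adj)
    for node, nbrs in adj.items():
        hood = {node} | nbrs
        k = len({cluster[v] for v in hood})  # distinct clusters in the neighborhood
        if all(z[v] for v in hood):          # fully treated exposure
            weight = 1.0 / p ** k
            ht_t += weight * y[node]
            w_t += weight
        elif not any(z[v] for v in hood):    # fully control exposure
            weight = 1.0 / (1 - p) ** k
            ht_c += weight * y[node]
            w_c += weight
    ht = (ht_t - ht_c) / n
    hajek = (ht_t / w_t if w_t else float("nan")) - (ht_c / w_c if w_c else float("nan"))
    return ht, hajek
```

If each listing is its own cluster, `gcr_assign` reduces to Bernoulli randomization. The weights grow like `1 / p**k`, so listings whose neighborhoods span many clusters receive very large weights. This is one generic source of variance for these estimators.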

Overall, we find that graph cluster randomization is effective at reducing bias. After implementing GCR, exposure modeling (and exposure modeling + the Hajek estimator) may help reduce bias further, but not by much.

More importantly, these methods INCREASE RMSE BY A LOT!

This raises an interesting question for practitioners: as academics, we think a lot about bias, but a practitioner might prefer a slightly biased experiment if it has much more power.
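
The trade-off follows from the decomposition RMSE² = bias² + variance. The minimal sketch below uses made-up numbers, purely hypothetical and not results from the paper. It shows that an estimator with a small bias and a small spread can have a much lower RMSE than an unbiased estimator with a large spread.

```python
import math
import statistics

def bias_and_rmse(estimates, truth):
    """Empirical bias, standard deviation, and RMSE of repeated estimates."""
    bias = statistics.fmean(estimates) - truth
    sd = statistics.pstdev(estimates)  # population SD, so rmse**2 == bias**2 + sd**2
    rmse = math.sqrt(statistics.fmean([(e - truth) ** 2 for e in estimates]))
    return bias, sd, rmse

# Hypothetical estimates around a true TATE of 1.0 (illustrative numbers only).
biased = [1.1, 1.2, 1.1, 1.2]      # small bias, small spread
unbiased = [0.4, 1.6, 0.4, 1.6]    # no bias, large spread
print(bias_and_rmse(biased, 1.0))
print(bias_and_rmse(unbiased, 1.0))
```

Here the first set has a bias of about 0.15 and an RMSE of about 0.16. The second is unbiased, but its RMSE is about 0.6.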

This is especially true for large firms who often ex ante expect tiny effects.

Despite power concerns, we think this is an idea worth thinking about more, and there are many areas for potential iteration (e.g., how should clusters be defined? How can one augment this approach to reduce bias, but also keep RMSE under control?).

@sinanaral and I also have some interesting follow-up work on this topic coming soon, which takes these ideas and tests them in the field.

I've been sharing this work for a while, and I am grateful for comments from participants at a number of conferences, including @CODEConference, WISE, and WEBEIS.

This paper also started as a final project for @deaneckles's PhD seminar, and has benefited greatly from his feedback!
