Because many are curious about antibody tests and seroprevalence, I figured I'd cover the http://covidtestingproject.org results. This project systematically compared SARSCoV2 (COVID19) antibody kits. If we are going to do antibody tests, then we should use good ones... (1/n)

The covidtestingproject has done a great service by comparing 10 tests using the same samples. To me, the important metric is specificity: how often truly negative sera are measured as negative by the test. For COVID19, samples from 2018 should be reliable negative standards. (2/n)

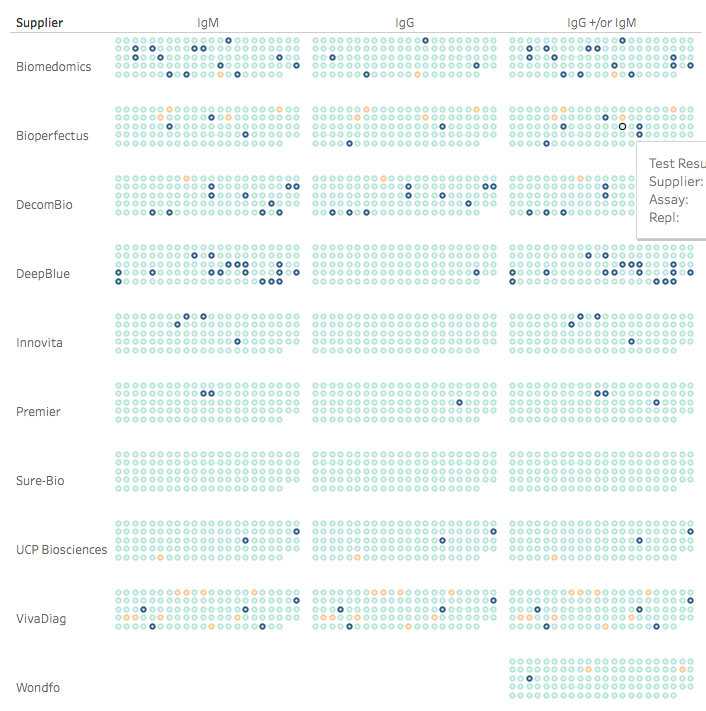

The project asked how many false positives each test found in 108 negative sera. 3 tests were clearly better than the rest: Sure-Bio (0 false positives), Premier (3), & UCP (2). Below, dark circles are the false positives; just look at the 3rd column which combines IgG+IgM. (3/n)

Interestingly Premier was the test used by the Stanford and USC studies (the Stanford group shared the data regarding test performance). They had found 2 false positives out of 371. So the covidtestingproject is giving a false pos rate of 3/108=2.8% vs Stanford 2/371=0.5%. (4/n)
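A rough sketch of how much uncertainty sits behind those two false pos rates, using only the counts quoted above. The Wilson score interval is my choice for illustration; neither study is said here to have used it, and the function name is made up.

```python
import math

def wilson_interval(k, n, z=1.96):
    """Wilson score interval for a binomial proportion k/n.

    z=1.96 gives an approximate 95% interval. The choice of the Wilson
    method is an assumption made for this sketch.
    """
    p = k / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return max(0.0, center - half), min(1.0, center + half)

# False positives / negative sera, as quoted in the thread.
measurements = [("covidtestingproject", 3, 108), ("Stanford", 2, 371)]

for label, k, n in measurements:
    low, high = wilson_interval(k, n)
    print(f"{label}: {k}/{n} = {k / n:.1%}, approx. 95% interval {low:.1%} to {high:.1%}")
```

With so few false positives in either sample, both intervals come out wide relative to the point estimates.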

You may recall 1 of the criticisms of the Stanford study was that the measured positive rate of 1.5% in the population was not significantly different from the false pos rate of 0.5% for the Premier test measured by the Stanford group themselves. (5/n)

And now we have a second measurement of the false positive rate for the Premier test as 2.8%, which again suggests that the actual seroprevalence in the Stanford study could have been as low as 0% and all 1.5% measured positives could have been false positives. (6/n)
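To make the "as low as 0%" point concrete, here is a minimal sketch that corrects the measured positive rate for test error with the standard Rogan-Gladen formula. The sensitivity value is a placeholder I picked for illustration; it does not come from either study. The function name is also hypothetical.

```python
def corrected_prevalence(apparent, specificity, sensitivity):
    """Rogan-Gladen estimate of true prevalence, clamped to [0, 1]."""
    denom = sensitivity + specificity - 1
    if denom <= 0:
        raise ValueError("sensitivity + specificity must exceed 1")
    estimate = (apparent + specificity - 1) / denom
    return min(1.0, max(0.0, estimate))

APPARENT = 0.015     # measured positive rate quoted in the thread
SENSITIVITY = 0.80   # ASSUMPTION: hypothetical value, not from the thread

# False pos rates for the Premier test from the two sources quoted above.
for label, fpr in [("Stanford 2/371", 2 / 371), ("covidtestingproject 3/108", 3 / 108)]:
    prevalence = corrected_prevalence(APPARENT, 1 - fpr, SENSITIVITY)
    print(f"{label}: false pos rate {fpr:.1%} -> corrected prevalence {prevalence:.1%}")
```

Whenever the false pos rate is at or above the measured positive rate, the raw estimate is zero or negative, so the clamped result is 0%.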

One thing to consider is whether the negative sera, which in both studies came from a pre-COVID time, might have been affected by long-term storage. Freezing, storage, and thawing may have denatured some proteins. Either an increase or a decrease in false pos rates is conceivable. (7/n)

The one disappointment in the http://covidtestingproject.org work is that they could only get 108 negative sera. You'd think between UCSF and MGH they could scrounge up more than 108 negative samples. In fact there are almost as many authors (71) on the study as negative samples 😉 (8/n)

It would be nice if the http://covidtestingproject.org could get ≥1000 neg sera to measure specificity with better confidence. Take-home lesson is, to measure the rate of something occurring n% of the time, you need a test with a false pos rate an order of magnitude lower. (9/n)
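One way to see the order-of-magnitude rule of thumb is to ask what fraction of positive results are real. A small sketch, assuming perfect sensitivity for simplicity (an assumption of mine, not a claim about any kit) and using a hypothetical helper name:

```python
def positive_predictive_value(prevalence, false_pos_rate, sensitivity=1.0):
    """Fraction of positive test results that are true positives.

    sensitivity defaults to 1.0, which is a simplifying ASSUMPTION.
    """
    true_pos = prevalence * sensitivity
    false_pos = (1 - prevalence) * false_pos_rate
    if true_pos + false_pos == 0:
        raise ValueError("no positives expected at all")
    return true_pos / (true_pos + false_pos)

PREVALENCE = 0.015  # illustrative n%, matching the 1.5% figure in the thread

# Compare a false pos rate equal to the prevalence with one 10x lower.
for fpr in (PREVALENCE, PREVALENCE / 10):
    ppv = positive_predictive_value(PREVALENCE, fpr)
    print(f"false pos rate {fpr:.2%}: {ppv:.0%} of positives are real")
```

When the false pos rate equals the prevalence, roughly half of all positives are false; at a tenth of the prevalence, about nine in ten positives are real.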

Finally, I think the attention paid to antibody tests is premature. I'm not sure why this is such a hot topic; maybe it's from the press and academics trying to get a jump on the next big thing. Until we are at 50% infection and 1M deaths, it won't help us get back to work. (n/n)

And now U Miami is stepping on the same low-specificity landmine 💥😭 They just claimed 6% of Miami-Dade county has COVID19 antibodies (https://www.miamiherald.com/news/coronavirus/article242260406.html). Sorry to break this to you but the Biomedomics test you used (https://cbsloc.al/3aO40gl#.XqPNveHnyfw.twitter) has 13% (!!!) false positives.
