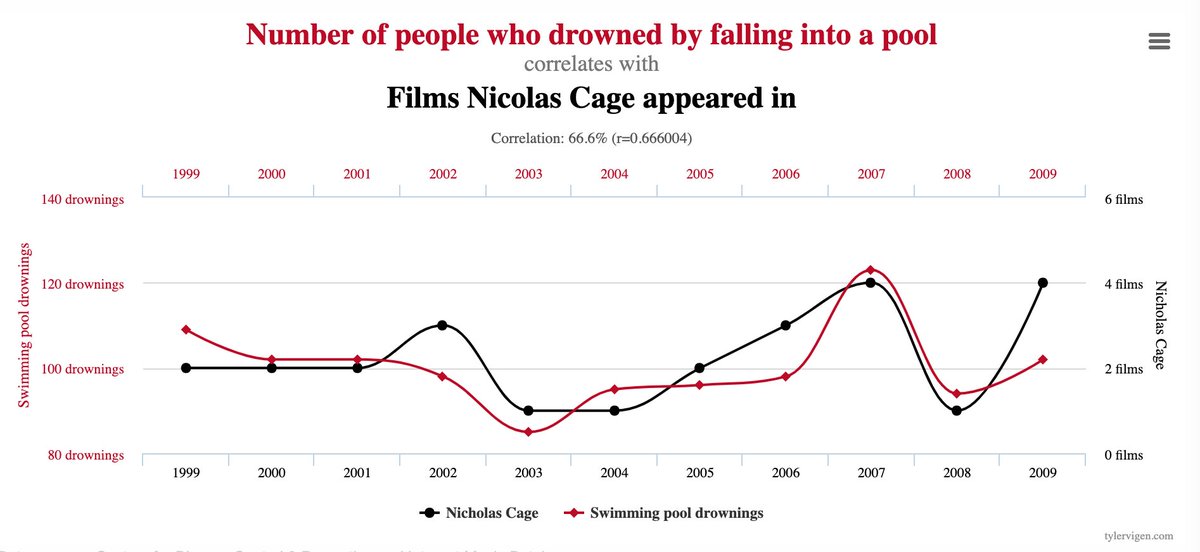

COVID-19 is causing an outbreak of pseudo-science. I thought I'd spend some time talking about what science actually is. It can be summarized in the following graph:

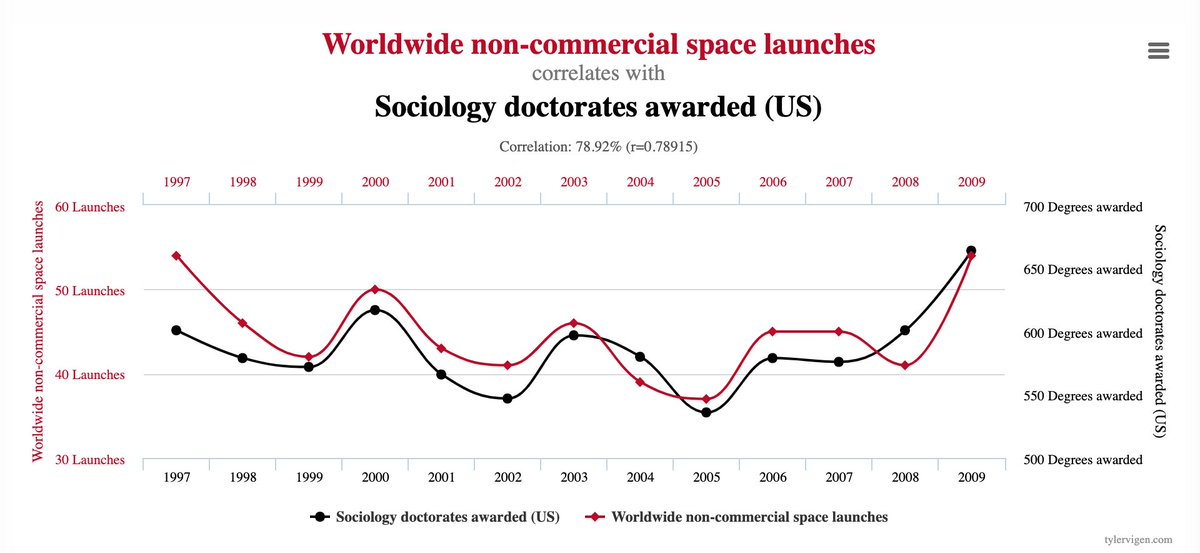

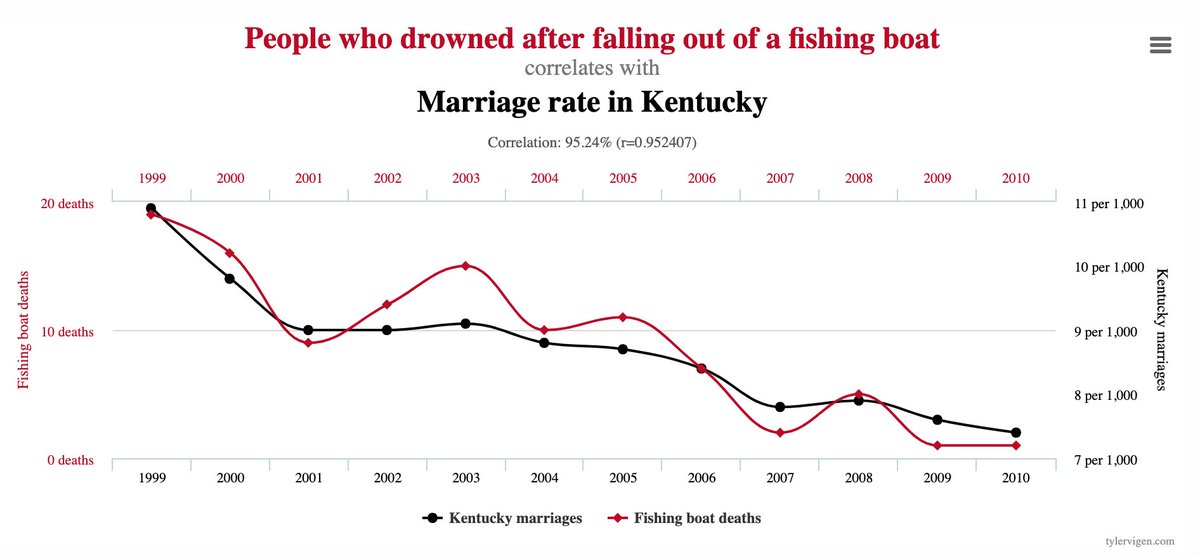

This is from a site called "Spurious Correlations" that sifts through a zillion data sets looking for things that happen to correlate with each other, pretending there is a real relationship when there is none.
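To see how easily this kind of dredging produces impressive-looking pairs, here is a minimal Python sketch. It makes no claim about how the site actually works: it generates a few dozen independent random walks, compares every pair, and reports the strongest correlation it finds. The names `random_walk`, `pearson`, and `best_spurious_pair` are hypothetical, chosen for illustration.

```python
import math
import random


def random_walk(n, rng):
    """Return a random walk of length n: each step is +1 or -1 with equal probability."""
    x, walk = 0, []
    for _ in range(n):
        x += rng.choice((-1, 1))
        walk.append(x)
    return walk


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length, non-constant sequences."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def best_spurious_pair(num_series=50, length=20, seed=1):
    """Generate independent random walks and return (r, i, j) for the pair with the largest |r|."""
    rng = random.Random(seed)
    series = [random_walk(length, rng) for _ in range(num_series)]
    best = (0.0, None, None)
    for i in range(num_series):
        for j in range(i + 1, num_series):
            r = pearson(series[i], series[j])
            if abs(r) > abs(best[0]):
                best = (r, i, j)
    return best


r, i, j = best_spurious_pair()
print(f"series {i} vs series {j}: r = {r:.2f}")
```

The walks are independent by construction, so any strong correlation the search turns up is pure coincidence. It only looks meaningful because 1,225 pairs were examined and only the best one is reported.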

The drug hydroxychloroquine may work, and robust studies in the future will tell us more definitively. But all the studies we have now fail to show that it works. One of their consistent flaws is this kind of spurious correlation.

One of the most cited pieces of "evidence" that hydroxychloroquine works is this study. However, if you read the paper, you find that the correlation is indeed spurious. https://www.medrxiv.org/content/10.1101/2020.03.22.20040758v3

What the study did was measure a lot of different things, then go hunting for things that might correlate with efficacy. This is called "p-hacking" or "data dredging": you keep changing the criteria until you find something that looks statistically significant.
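The arithmetic behind this is short. A minimal sketch, assuming each outcome is tested independently at the conventional 5% significance threshold (real outcomes in a trial are usually correlated, so treat the numbers as illustrative): if the drug does nothing, the chance that at least one of k outcomes still looks "significant" is 1 - (1 - 0.05)^k. The function name `chance_of_false_hit` is hypothetical.

```python
def chance_of_false_hit(num_outcomes, alpha=0.05):
    """Probability that at least one of num_outcomes independent tests
    comes out 'significant' at level alpha when there is no real effect."""
    return 1 - (1 - alpha) ** num_outcomes


for k in (1, 5, 10, 20):
    print(f"{k:>2} outcomes measured: {chance_of_false_hit(k):.0%} chance of a spurious 'significant' result")
```

Under these assumptions it prints roughly 5%, 23%, 40%, and 64%: measure twenty things and a spurious "hit" becomes more likely than not.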

https://en.wikipedia.org/wiki/Data_dredging


This is why we see a bunch of studies, all showing that the drug "works", but with different definitions of how it helps. Some define it as getting rid of the virus. Others define it as easing symptoms. Others as faster recovery.

In my own field of cybersecurity, this is essentially a form of what's known as the "Birthday Paradox": you can create statistically implausible results by working both ends towards the middle.
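For readers who haven't met the Birthday Paradox, here is a minimal Python sketch of the classic calculation, assuming 365 equally likely birthdays. The number of pairs grows as n(n-1)/2, so a match turns up far sooner than intuition suggests; comparing many data sets against each other works the same way. The function name `shared_birthday_probability` is hypothetical.

```python
import math


def shared_birthday_probability(people, days=365):
    """Probability that at least two of `people` share a birthday,
    assuming every birthday is equally likely."""
    if people > days:
        return 1.0  # pigeonhole principle: a match is guaranteed
    p_all_distinct = 1.0
    for k in range(people):
        # the (k+1)-th person must avoid the k birthdays already taken
        p_all_distinct *= (days - k) / days
    return 1.0 - p_all_distinct


for n in (10, 23, 50):
    pairs = math.comb(n, 2)
    print(f"{n} people, {pairs} pairs: {shared_birthday_probability(n):.1%} chance of a match")
```

With 23 people there are 253 pairs, and the chance of a shared birthday is already just over 50%.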

To guard against this, medical studies are supposed to register their success criteria BEFORE they start taking measurements. That's how we know the study above is unbelievable: they did register their study, but then completely changed the criteria after they took the measurements.
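A minimal simulation of why pre-registration matters, under loudly stated assumptions: the drug has no effect at all, each study measures 20 independent outcomes, and under that null hypothesis each outcome's p-value is uniformly distributed between 0 and 1. Outcome 0 plays the role of a hypothetical pre-registered endpoint; the function name `simulate_trials` is illustrative.

```python
import random


def simulate_trials(num_studies=10_000, num_outcomes=20, alpha=0.05, seed=0):
    """Simulate studies of a drug with NO real effect.

    Returns (pre-registered success rate, dredged success rate).
    """
    rng = random.Random(seed)
    preregistered_hits = 0  # only the endpoint named in advance counts
    dredged_hits = 0        # any endpoint that crosses alpha gets reported
    for _ in range(num_studies):
        # under the null hypothesis, p-values are uniform on [0, 1)
        p_values = [rng.random() for _ in range(num_outcomes)]
        if p_values[0] < alpha:
            preregistered_hits += 1
        if min(p_values) < alpha:
            dredged_hits += 1
    return preregistered_hits / num_studies, dredged_hits / num_studies


pre, dredged = simulate_trials()
print(f"pre-registered endpoint 'works' in {pre:.1%} of studies")
print(f"best-of-20 endpoints 'works' in {dredged:.1%} of studies")
```

The pre-registered rate stays near the nominal 5%, while "report whichever outcome looks best" lands near 64%. That is how a drug that does nothing can appear to work.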

In other words, they failed to find the thing they were looking for and kept massaging the data until they found an outlier, and published that instead. This is just nonsense -- they created a result out of thin air the same way Spurious Correlations does.

Another example of this effect is the Stanford study that found much higher rates of symptomless infections, meaning the death rate is dramatically lower than assumed. https://www.mercurynews.com/2020/04/20/feud-over-stanford-coronavirus-study-the-authors-owe-us-all-an-apology/

The actual paper is here, and it's full of similar crap. It's hard to tell exactly what the results are, because it sure looks like a lot more spurious correlation. https://www.medrxiv.org/content/10.1101/2020.04.14.20062463v1

Here's what the methodology and results actually are. They put an advertisement on Facebook looking for subjects in Santa Clara, then tested them for antibodies. They found that 1.5% of the subjects had antibodies. The test has around a 0.5% false-positive rate.

By spurious statistical manipulation, they get a headline result that as much as 5% of the population may have already been infected with the disease and not know it, when a more responsible view of the numbers could be as low as 0.2%.
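Correcting a raw positive rate for false positives is simple arithmetic, and it shows why the headline is fragile. A minimal sketch using the standard Rogan-Gladen estimator, not the paper's own method (which also reweighted the sample by demographics): the 1.5% raw positive rate comes from the thread, while the 80% sensitivity is a hypothetical value chosen for illustration. The point is how far the answer moves as the false-positive rate changes.

```python
def corrected_prevalence(apparent, false_positive_rate, sensitivity):
    """Rogan-Gladen estimate of true prevalence from a raw positive rate.

    Solves apparent = sensitivity * true + false_positive_rate * (1 - true)
    for `true`, then clamps to [0, 1] because sampling noise can push the
    raw estimate outside that range.
    """
    estimate = (apparent - false_positive_rate) / (sensitivity - false_positive_rate)
    return min(max(estimate, 0.0), 1.0)


RAW_POSITIVE_RATE = 0.015   # 1.5% of subjects tested positive (from the thread)
ASSUMED_SENSITIVITY = 0.80  # hypothetical: not stated in the thread

for fpr in (0.005, 0.010, 0.015):
    est = corrected_prevalence(RAW_POSITIVE_RATE, fpr, ASSUMED_SENSITIVITY)
    print(f"false-positive rate {fpr:.1%}: estimated prevalence {est:.2%}")
```

With these assumptions, a 0.5% false-positive rate gives about 1.26%, a 1.0% rate roughly halves that to about 0.63%, and at 1.5% every positive in the sample could be a false positive.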

Again, we have the same problems in my field of cybersecurity with false-positive and false-negative rates (specificity and sensitivity) and Bayesian inference from those results.
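The same Bayesian reasoning applies to interpreting a single positive result, whether it's an antibody test or an intrusion alert. A minimal sketch of Bayes' theorem: the 0.5% false-positive rate is from the thread, while the 80% sensitivity and the two prevalence values are hypothetical inputs for illustration (0.2% echoes the low-end figure above). The function name is hypothetical.

```python
def probability_infected_given_positive(prevalence, sensitivity, false_positive_rate):
    """Bayes' theorem: P(infected | positive) = P(positive | infected) P(infected) / P(positive)."""
    true_positives = sensitivity * prevalence
    false_positives = false_positive_rate * (1 - prevalence)
    return true_positives / (true_positives + false_positives)


for prevalence in (0.01, 0.002):
    p = probability_infected_given_positive(prevalence, sensitivity=0.80, false_positive_rate=0.005)
    print(f"prevalence {prevalence:.1%}: a positive test means infection with probability {p:.0%}")
```

At 1% prevalence about 62% of positives are real; at 0.2% only about 24% are, so most positive results would be false alarms. The rarer the thing you're looking for, the more the false-positive rate dominates.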

Anyway, what I want to get to here is the difference between science and pseudo-science. Science is when you make all the data available to your detractors and help them as much as possible to disprove your results, and when they fail, your results are proven.

Pseudo-science is when you claim spectacular results, but hide the data and methods behind obfuscation such that your detractors can tell it's crap, but have difficulty pointing out your precise errors because you've hidden them.

The two papers I've cited here may actually turn out to be robust, and if they made all their methods, code, and results available, we'd be able to see that. But they've chosen to hide the specifics.

Again, there's a parallel in my own field of cybersecurity. Naive practitioners believe in trying to keep all the details secret. "Trust us, our product is secure, but no, we can't reveal the details to prove this because it would help hackers".

The opposite stance is full transparency, "Trust us, our product is secure, here is the full source code and design documents, and when (not if) you find a security bug, we'll give you this large bounty to report it to us so we can fix it".
