#tweeprint time for our new work out on arXiv! 📖 We've been trying to understand how recurrent neural networks (RNNs) work, by reverse engineering them using tools from dynamical systems analysis, with @SussilloDavid. https://arxiv.org/abs/2004.08013

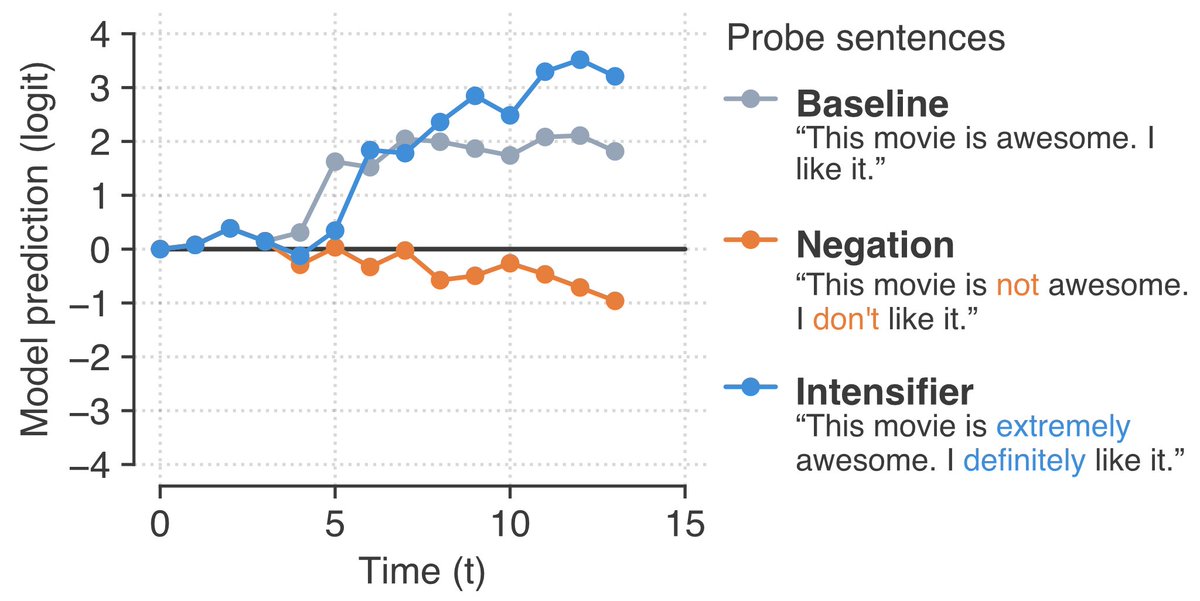

We wanted to understand how neural networks process contextual information, such as phrases like “This movie is not awesome.” 👎🏾 vs “This movie is extremely awesome.” 👍🏾 Here, the words “not” and “extremely” act as modifier words.

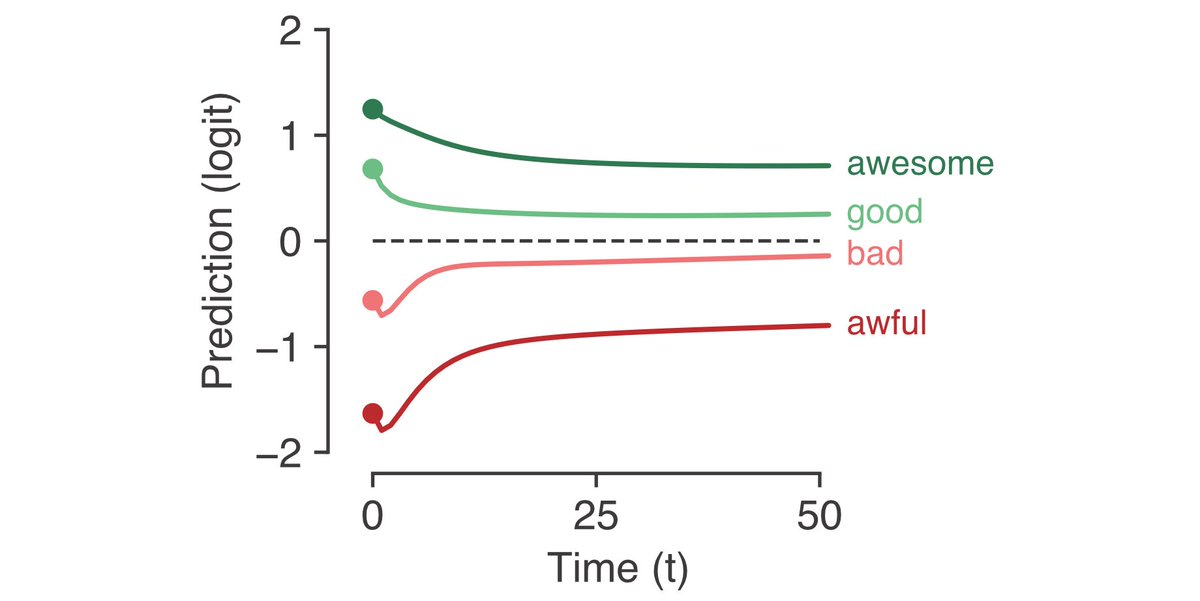

At last year's NeurIPS, we presented work that showed that RNNs trained on sentiment classification use a line attractor to integrate positive/negative valence from words in a review (with Alex Williams, Matt Golub, & Surya Ganguli). http://papers.nips.cc/paper/9700-reverse-engineering-recurrent-networks-for-sentiment-classification-reveals-line-attractor-dynamics

However, line attractor dynamics cannot explain how modifier words (e.g. “not”) change the meaning of valence words (e.g. “good”), so we were left with a big mystery… but now, we’ve solved it! 🎉🎊

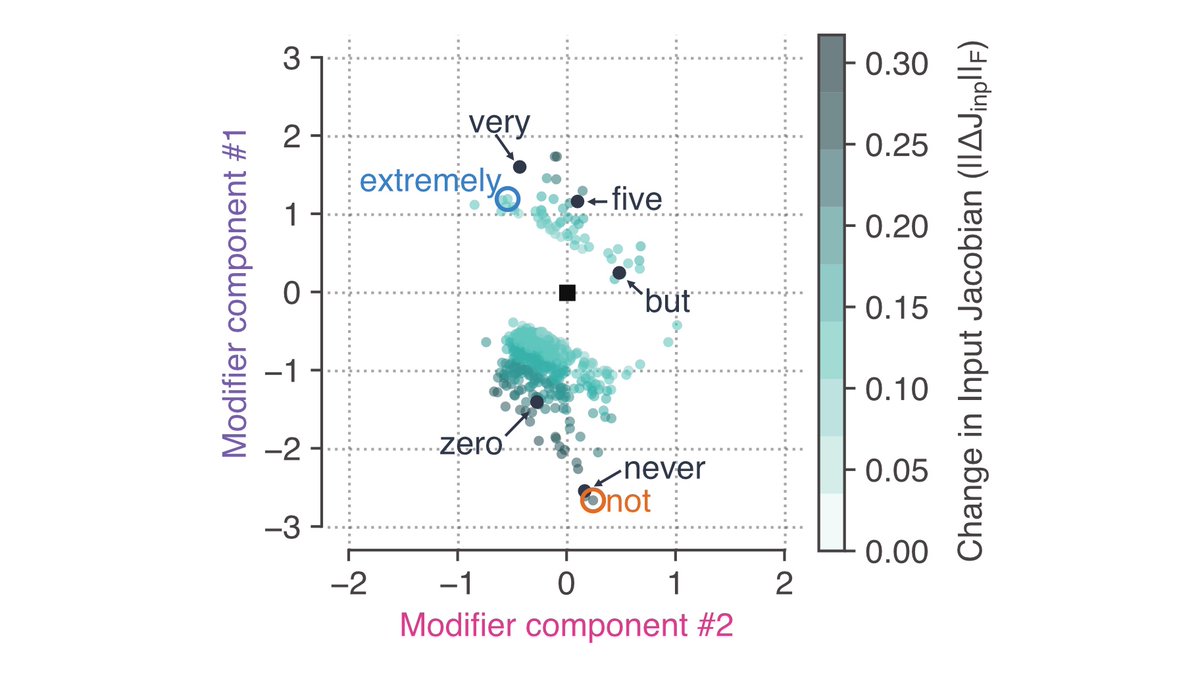

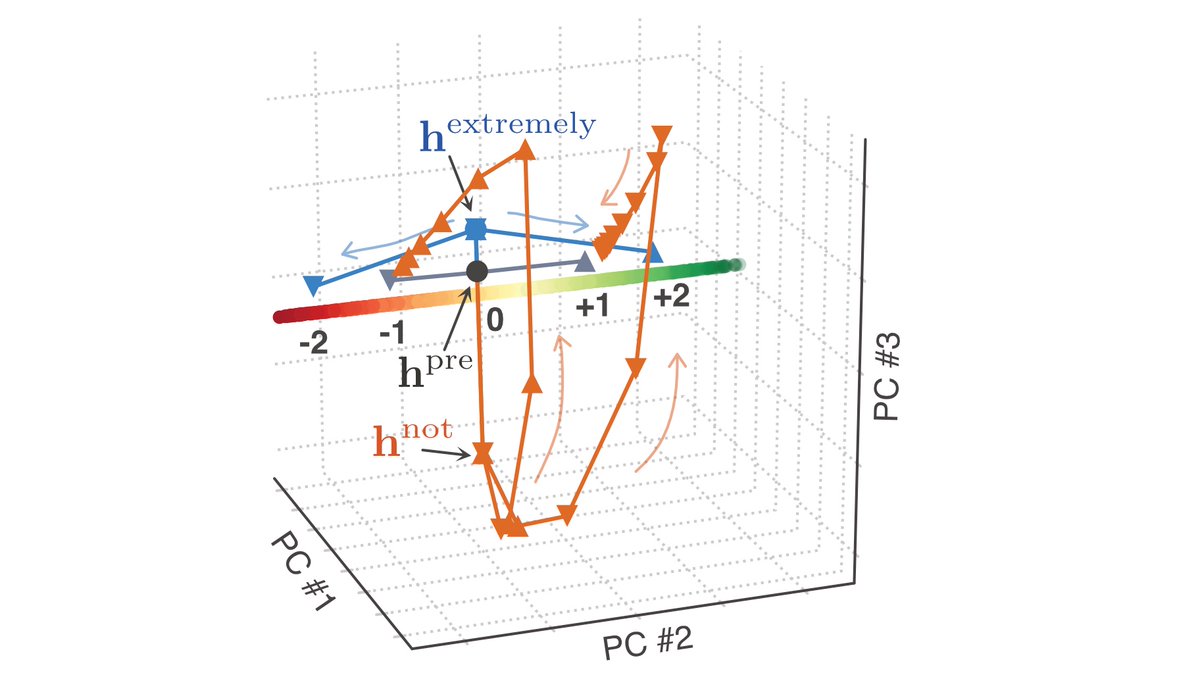

We show that modifier words place the RNN state in a low-d “modifier subspace”. In this modifier subspace, the valence of words changes dramatically, e.g. potentially flipping sign (“not”) or being strongly accentuated (“extremely”).

There are transient dynamics in this modifier subspace, which let us quantify the strength and timescale of modifier effects. Moreover, preventing the RNN from entering the modifier subspace completely abolishes the network’s ability to understand modifier words.
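One way to picture "preventing the RNN from entering the modifier subspace" is to remove, at every step, the part of the hidden state that lies along the subspace. The sketch below is only an illustration under assumptions: `project_out`, the 3-d state, and the single basis vector are hypothetical, and the basis is assumed to be orthonormal. It is not the analysis code from the paper.

```python
def project_out(state, basis):
    """Remove the components of `state` along each vector in `basis`.

    Assumes `basis` is a list of orthonormal vectors spanning the
    (hypothetical) modifier subspace; `state` is a plain list of floats.
    """
    result = list(state)
    for u in basis:
        # Coefficient of the state along this basis direction.
        coeff = sum(r * b for r, b in zip(result, u))
        # Subtract that component, leaving the rest of the state untouched.
        result = [r - coeff * b for r, b in zip(result, u)]
    return result


# Hypothetical 3-d hidden state whose second coordinate is the modifier direction.
modifier_basis = [[0.0, 1.0, 0.0]]
print(project_out([3.0, 4.0, 5.0], modifier_basis))
```

Applying such a projection after each RNN update would keep the state out of the assumed subspace while leaving the other directions, such as the line attractor, unchanged.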

This work on understanding the modifier subspace also led us to understand new types of contextual effects that we had no idea were lurking inside the RNNs:

First, we figured out that RNNs emphasize words at the beginning of reviews. This is implemented by making the trained initial condition project into the modifier subspace. The initial condition (t=0) itself is an intensification modifier, like “extremely”!

Second, we figured out that RNNs accentuate the end of reviews, by projecting fast decaying modes onto the readout. Thus, if the review ends before these modes decay, the transient valence is counted. We could understand all of this through analyses of linear approximations.
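To make the role of fast decaying modes concrete, here is a minimal sketch assuming a linear approximation in which each mode evolves as h_{t+1} = eigenvalue * h_t. The eigenvalues (1.0 for a slow, attractor-like mode and 0.5 for a fast mode) and the readout weights are made-up illustrative numbers, not values from the paper.

```python
import math


def timescale(eigenvalue):
    """Number of steps for a mode to decay by a factor of 1/e.

    Only meaningful for 0 < |eigenvalue| < 1 (a decaying mode).
    """
    return -1.0 / math.log(abs(eigenvalue))


def readout_contribution(steps_since_word, slow=1.0, fast=0.5,
                         w_slow=1.0, w_fast=1.0):
    """Readout from a word that excited both modes `steps_since_word` steps ago.

    `slow`/`fast` are assumed mode eigenvalues; `w_slow`/`w_fast` are assumed
    readout weights on each mode.
    """
    return w_slow * slow ** steps_since_word + w_fast * fast ** steps_since_word


print(timescale(0.5))            # timescale of the fast mode, in words
print(readout_contribution(0))   # word at the very end of the review
print(readout_contribution(10))  # same word, ten steps before the end
```

In this toy setting a word right at the end of the review contributes more to the readout than the same word earlier on, because the fast mode has not yet decayed.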

As Feynman wrote, “What I cannot create, I do not understand.” We augmented Bag-of-Words baseline models with modifier effects based on our analyses, and found that we could recover nearly all of the difference in performance between the Bag-of-Words model and the best RNN. ⚙️
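As a rough illustration of what augmenting a Bag-of-Words model with modifier effects could look like, here is a hand-built toy. The word lists, the gain rule `1 + modifier`, the decay constant, and the `initial_modifier` parameter are all assumptions made for this sketch. The exact form of the augmented model is in the paper.

```python
# Hypothetical per-word valences and modifier strengths (not learned values).
VALENCE = {"awesome": 1.0, "good": 0.5, "bad": -0.5, "awful": -1.0}
MODIFIER = {"not": -2.0, "extremely": 1.0}
DECAY = 0.5  # assumed per-word decay of the modifier effect


def bag_of_words_score(words):
    """Plain Bag-of-Words: sum the valences, ignoring word order."""
    return sum(VALENCE.get(w, 0.0) for w in words)


def augmented_score(words, initial_modifier=0.0):
    """Bag-of-Words plus a transient modifier that rescales later valences.

    `initial_modifier` > 0 mimics an intensifying initial condition that
    emphasizes words at the start of a review.
    """
    score = 0.0
    modifier = initial_modifier
    for w in words:
        # The current modifier value scales this word's valence.
        score += (1.0 + modifier) * VALENCE.get(w, 0.0)
        # The modifier decays, and modifier words kick it to a new value.
        modifier = DECAY * modifier + MODIFIER.get(w, 0.0)
    return score


negated = "this movie is not awesome".split()
intensified = "this movie is extremely awesome".split()
print(bag_of_words_score(negated), augmented_score(negated))
print(bag_of_words_score(intensified), augmented_score(intensified))
```

The plain model scores both phrases identically, while the augmented one flips the sign after “not” and boosts the score after “extremely”.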

We think of this work as building new tools for reverse engineering neural networks to really understand their learned mechanisms and how to perturb/amplify/isolate their effects.

For more information, check out the paper! 😍 https://arxiv.org/abs/2004.08013