OK, I threatened y'all with a thread about this and now there is no escape. It is technical in places; I mostly understand it but not completely. https://twitter.com/IneffectiveMath/status/1252222727785775104

There are (at least) two relevant issues:

1) a technical point about what sorts of penalty terms should be used in a ridge regression

2) an output point about distributions of impact, broken down by position

Turns out, the two things are linked together, in a strange way.

The first point is the kind of thing that specialists argue about at distressing length. Just for an example: last year, my model included a so-called "individual prior," while the Evolving Wild twins' model used a "population prior" instead, as my own model had done the year before.

The difference between the two is, in implementation, extremely small. Individual priors involve biasing each player towards some known value; each player has their own value. Population priors do the same thing, only you use the same value for every player.
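To make the "extremely small" implementation difference concrete, here is a minimal Python/NumPy sketch, assuming the usual ridge set-up where each row of `X` is a shift, each column marks whether a player was on the ice, and the penalty is lam * ||b - mu||^2. The helper name `ridge_with_prior`, the data and the prior values are all hypothetical; the only thing that changes between the two kinds of prior is the vector `mu`.

```python
import numpy as np


def ridge_with_prior(X, y, lam, mu):
    """Minimise ||y - X b||^2 + lam * ||b - mu||^2 (hypothetical helper).

    Setting the gradient to zero gives (X^T X + lam I) b = X^T y + lam mu.
    """
    n_players = X.shape[1]
    lhs = X.T @ X + lam * np.eye(n_players)
    rhs = X.T @ y + lam * mu
    return np.linalg.solve(lhs, rhs)


rng = np.random.default_rng(0)
# Hypothetical data: 200 shifts, 5 players, 1 = on the ice during that shift.
X = rng.integers(0, 2, size=(200, 5)).astype(float)
y = X @ np.array([0.3, -0.1, 0.2, 0.0, 0.1]) + rng.normal(0.0, 1.0, 200)

# Individual prior: every player gets his own target (e.g. last season's estimate).
mu_individual = np.array([0.25, -0.05, 0.1, 0.05, 0.0])
# Population prior: one shared target for everybody (here 0, "an average NHLer").
mu_population = np.full(5, 0.0)

b_ind = ridge_with_prior(X, y, lam=10.0, mu=mu_individual)
b_pop = ridge_with_prior(X, y, lam=10.0, mu=mu_population)
print(b_ind)
print(b_pop)
```

With one shared value in `mu` this is ordinary ridge regression pulled towards that value; with per-player values it is the individual-prior version. As `lam` grows, both fits collapse onto their own `mu`.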

The motivations for the two are slightly different. For individual priors: if you know something about an individual, like a hockey player who played games before the period your data covers, you might want to use that to inform your regression.

For a population prior, you might have suspicions about group tendencies that players have /because they are in that group/, not as the result of some calculation where you assemble knowledge about this guy and that one and this thing over there.

Population priors are the older and more common kind in sports; they usually just go by "ridge regression," and they were a useful technical trick for avoiding overfitting long before anybody knew they were actually the same thing as specifying prior information.
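The "same thing as specifying prior information" claim is the standard Bayesian reading of ridge regression. A sketch of the derivation, assuming Gaussian noise with variance $\sigma^2$ and a Gaussian prior with variance $\tau^2$ on each player's impact (those distributional choices are my assumption, not something stated in the thread):

$$
\begin{aligned}
y \mid \beta &\sim \mathcal{N}(X\beta,\ \sigma^2 I), \qquad \beta \sim \mathcal{N}(\mu,\ \tau^2 I) \\
-\log p(\beta \mid y) &= \frac{1}{2\sigma^2}\lVert y - X\beta \rVert^2 + \frac{1}{2\tau^2}\lVert \beta - \mu \rVert^2 + \text{const} \\
\hat\beta &= \arg\min_\beta \; \lVert y - X\beta \rVert^2 + \lambda \lVert \beta - \mu \rVert^2, \qquad \lambda = \sigma^2/\tau^2 \\
&= (X^\top X + \lambda I)^{-1}\,(X^\top y + \lambda \mu)
\end{aligned}
$$

A population prior is the case $\mu = m\mathbf{1}$ (one value $m$ for everybody; textbook ridge takes $m = 0$), while an individual prior lets each $\mu_j$ differ.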

The reason they work well for tamping down overfitting is that, roughly, they draw a big circle on the ground and say "that's what NHL players look like," so your regression gets a little zap whenever it tries to give you results too far outside the circle.

"this schmuck played twenty shifts and his team took forty shots, he's the next coming of zeus"

*bzzt, not what NHL players are like*

so you drag the estimates back and fit the data worse but the phenomena better
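For a single player the zap has a closed form: minimising sum_i (y_i - b)^2 + lam * (b - m)^2 over his shifts gives b = (sum_i y_i + lam * m) / (n + lam). A toy version of the schmuck-vs-Zeus example, where the prior rate of 0.5 team shots per shift, the strength `lam = 80`, and the 2000-shift veteran are all made-up numbers for illustration:

```python
def shrunk_rate(total_shots, n_shifts, prior_rate, lam):
    """One-player ridge estimate of team shots per shift (hypothetical helper).

    Minimises sum_i (y_i - b)^2 + lam * (b - prior_rate)^2, whose solution is
    (sum_i y_i + lam * prior_rate) / (n_shifts + lam).
    """
    return (total_shots + lam * prior_rate) / (n_shifts + lam)


# Hypothetical prior: an "average NHLer" rate and how hard we pull towards it.
PRIOR_RATE, LAM = 0.5, 80.0

zeus_raw = 40 / 20                                         # unpenalised estimate
zeus_shrunk = shrunk_rate(40, 20, PRIOR_RATE, LAM)         # (40 + 40) / (20 + 80)
veteran_raw = 1200 / 2000                                  # hypothetical big sample
veteran_shrunk = shrunk_rate(1200, 2000, PRIOR_RATE, LAM)
print(zeus_raw, zeus_shrunk, veteran_raw, veteran_shrunk)
```

Twenty shifts barely outweigh the prior, so that estimate gets dragged most of the way back inside the circle; two thousand shifts overwhelm it, so the veteran's estimate barely moves.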

When I moved from Magnus 1 (off-season of 2018) to Magnus 2 (off-season of 2019) I thought "by replacing population priors with individual priors, I'll get all of the benefits, but with more precision," and I was wrong wrong wrong.

If you did /ordinary/ least squares fitting, you'd get some really outlandish overfit results; back in my undergrad days we'd have dismissed them as "non-physical" with a wave of the hand. Using individual priors /only/ amounts to a kind of slow-motion overfitting, spread over many years.
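A small illustration of the OLS problem, with hypothetical numbers: two linemates who share almost every shift. Ordinary least squares can pin down their combined impact but has almost no information about how to split it, so the split swings with the noise; a ridge penalty (a population prior at zero) shrinks exactly that poorly measured direction. The shift log, the true impacts and `lam = 20` are all invented for this sketch.

```python
import numpy as np

rng = np.random.default_rng(2)

# Hypothetical shift log: players A and B are on the ice together for 200 shifts
# and apart for only 2 shifts each. Columns are [A on ice, B on ice].
X = np.vstack([
    np.tile([1.0, 1.0], (200, 1)),
    np.tile([1.0, 0.0], (2, 1)),
    np.tile([0.0, 1.0], (2, 1)),
])
true_b = np.array([0.1, 0.1])  # hypothetical: identical true impacts
y = X @ true_b + rng.normal(0.0, 1.0, X.shape[0])

# Ordinary least squares: no penalty at all.
b_ols, *_ = np.linalg.lstsq(X, y, rcond=None)

# Ridge with a population prior centred at 0.
lam = 20.0
b_ridge = np.linalg.solve(X.T @ X + lam * np.eye(2), X.T @ y)

print("OLS:  ", b_ols)    # the A-vs-B split is poorly determined
print("ridge:", b_ridge)  # the A-vs-B split is shrunk hard
```

Here X^T X has eigenvalues 402 (the "together" direction) and 2 (the "A minus B" direction), so the ridge fit shrinks the A-minus-B split by a factor 2 / (2 + lam) while only lightly touching the combined impact.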

Every season, one set of players overperforms their true abilities and another set underperforms. If you set each player's mark for the next season at wherever he landed the previous year, without any kind of movement back towards "group normal," you slowly bake unrealistic expectations in.
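A toy simulation of that effect, assuming made-up spreads for talent and single-season luck (`tau`, `sigma`) and a league whose "group normal" is 0. It compares the extreme "mark = where he landed last year" rule with shrinking last season towards the group by the classic factor tau^2 / (tau^2 + sigma^2). It is a caricature of the individual-prior-only setup, not the actual model.

```python
import numpy as np

rng = np.random.default_rng(1)
n_players = 2000
tau, sigma = 1.0, 1.5  # hypothetical spread of true talent and of one season's luck

talent = rng.normal(0.0, tau, n_players)
season1 = talent + rng.normal(0.0, sigma, n_players)
season2 = talent + rng.normal(0.0, sigma, n_players)

# No movement back towards the group: next year's mark is last year's result.
carry_forward = season1
# Pull last year's result back towards the group normal (0 here).
k = tau**2 / (tau**2 + sigma**2)
shrunk = k * season1

mse_carry = np.mean((carry_forward - season2) ** 2)
mse_shrunk = np.mean((shrunk - season2) ** 2)

# Season-1 overperformers: the top decile of observed results.
top = season1 >= np.quantile(season1, 0.9)
print(mse_carry, mse_shrunk)
print(season1[top].mean(), season2[top].mean())
```

Under these assumptions the season-1 top decile lands much closer to average in season 2, and the shrunk marks predict season 2 better: in expectation the mean squared error is 2 * sigma^2 = 4.5 for carrying forward versus roughly 2.94 for shrinking.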

I loathe and despise comparisons between old models and new ones, just like I hate wearing old clothes that don't fit once I've already bought the new clothes, but today I make one small exception.

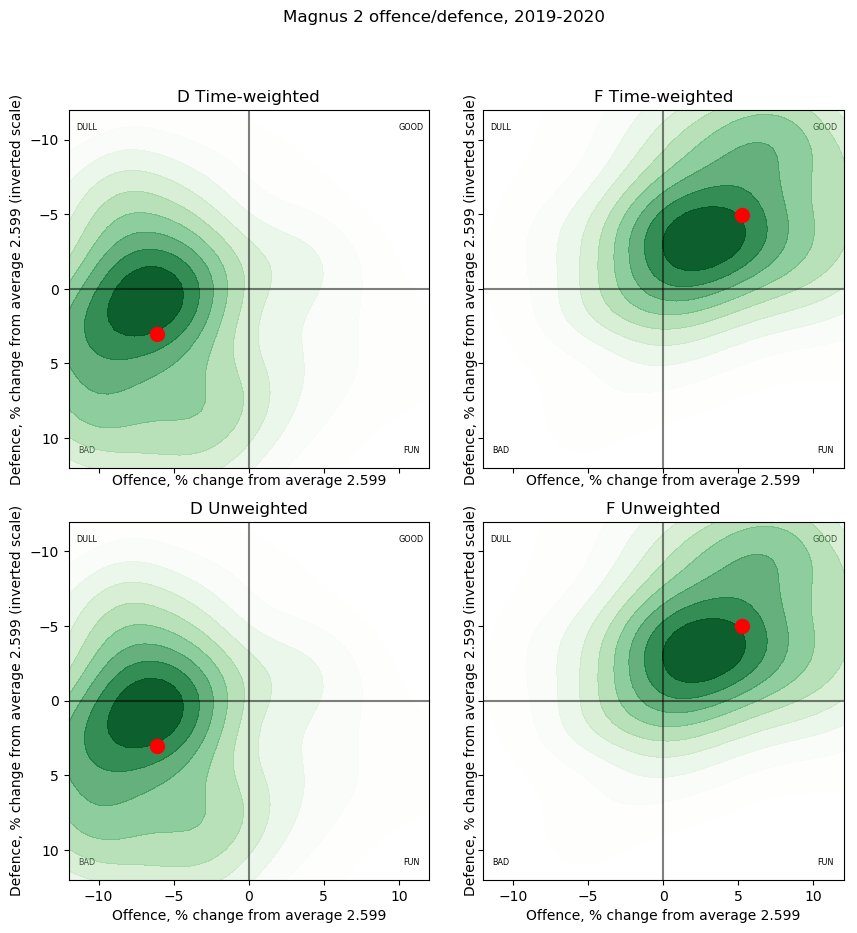

In the old model (it's already dead, it won't be coming back, don't get any ideas), the discrepancy between the estimates for an average forward and an average defender, as of right now, was about 14%, which strains credulity.

On its face I could have held my nose and tolerated that; after all, letting myself be taught by my work is part of being a disciplined analyst. But when I noticed that the discrepancy grew steadily, by about 1% each year, that was the big red flag that there was a technical fault instead.

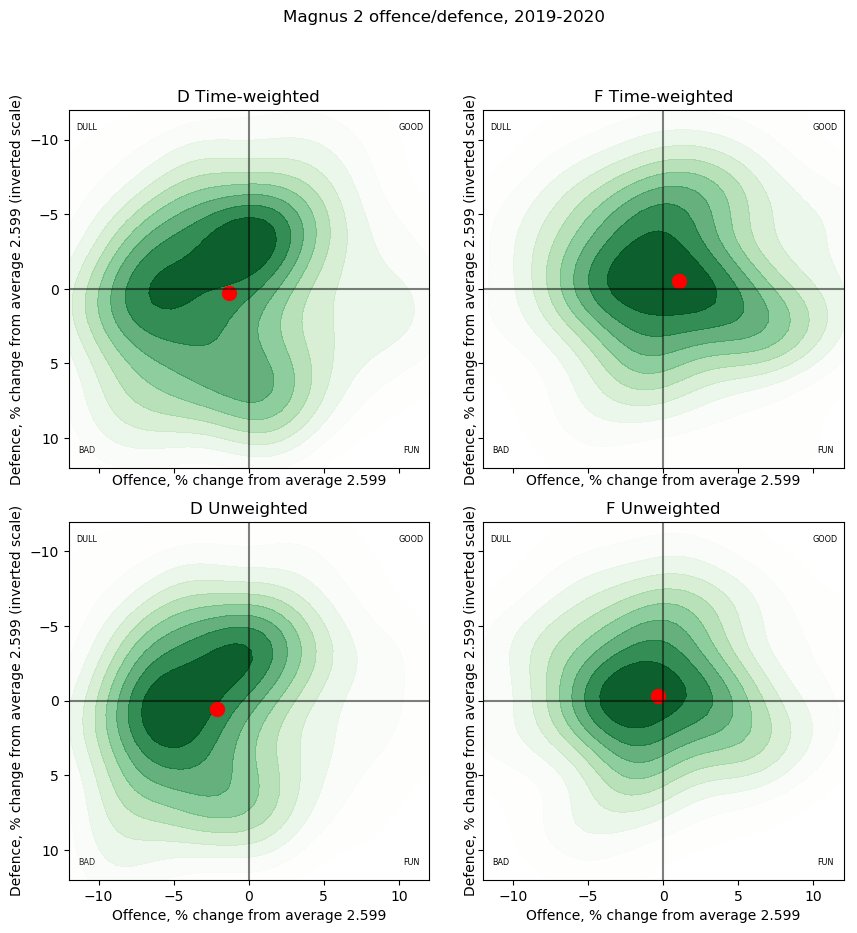

The (a?) solution was to include /both/ an individual prior and a population prior. After all, even at the individual level, the two carry different meanings: "This guy is an NHLer" and "I saw him do this thing" are related but different pieces of information.
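Formally, two quadratic penalties add up to one: lam_I * ||b - mu_I||^2 + lam_P * ||b - mu_P||^2 equals (lam_I + lam_P) * ||b - mu_star||^2 plus a constant, with mu_star = (lam_I * mu_I + lam_P * mu_P) / (lam_I + lam_P). A sketch that checks this numerically; the data, the prior strengths (15 and 5) and the function names are all hypothetical.

```python
import numpy as np


def ridge_two_priors(X, y, mu_ind, lam_ind, mu_pop, lam_pop):
    """Minimise ||y - X b||^2 + lam_ind ||b - mu_ind||^2 + lam_pop ||b - mu_pop||^2."""
    p = X.shape[1]
    lhs = X.T @ X + (lam_ind + lam_pop) * np.eye(p)
    rhs = X.T @ y + lam_ind * mu_ind + lam_pop * mu_pop
    return np.linalg.solve(lhs, rhs)


def equivalent_single_prior(mu_ind, lam_ind, mu_pop, lam_pop):
    """Centre and strength of the single penalty the two penalties add up to."""
    lam = lam_ind + lam_pop
    return (lam_ind * mu_ind + lam_pop * mu_pop) / lam, lam


rng = np.random.default_rng(3)
X = rng.integers(0, 2, size=(300, 6)).astype(float)  # hypothetical on-ice indicators
y = rng.normal(0.0, 1.0, 300)                          # hypothetical outcomes
mu_ind = rng.normal(0.0, 0.3, 6)  # "I saw him do this thing": hypothetical past estimates
mu_pop = np.zeros(6)              # "this guy is an NHLer": league average, set to 0

b_both = ridge_two_priors(X, y, mu_ind, 15.0, mu_pop, 5.0)
mu_eq, lam_eq = equivalent_single_prior(mu_ind, 15.0, mu_pop, 5.0)
b_single = np.linalg.solve(X.T @ X + lam_eq * np.eye(6), X.T @ y + lam_eq * mu_eq)
print(np.allclose(b_both, b_single))
```

One way to read it: the combined prior centres each player part-way between his own history and the group, which supplies the pull back towards "group normal" that individual priors alone lack. How much weight each prior gets is a modelling choice; these numbers are illustrative only.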

With both in place, the discrepancy between the two positions is much smaller, about 2% or so, depending on whether you weight by icetime or not.
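Whether you weight by icetime can move the positional gap all by itself. A minimal sketch with five invented players; the function name and every number are hypothetical, and it reports a raw difference in means rather than a percentage, since how the percentages above are normalised isn't spelled out here.

```python
import numpy as np


def position_gap(impact, position, toi=None):
    """Average forward impact minus average defender impact (hypothetical helper).

    If toi is given, each player's impact is weighted by his ice time.
    """
    impact = np.asarray(impact, dtype=float)
    position = np.asarray(position)
    weights = np.ones_like(impact) if toi is None else np.asarray(toi, dtype=float)
    fwd = position == "F"
    dmen = position == "D"
    mean_f = np.average(impact[fwd], weights=weights[fwd])
    mean_d = np.average(impact[dmen], weights=weights[dmen])
    return mean_f - mean_d


impact = [0.3, 0.1, -0.1, 0.2, 0.0]     # hypothetical per-player impacts
position = ["F", "F", "F", "D", "D"]
toi = [1000, 500, 200, 1500, 300]       # hypothetical minutes played
print(position_gap(impact, position), position_gap(impact, position, toi))
```

In this made-up example the unweighted gap is zero, while weighting by icetime opens a small one in the forwards' favour, purely because of who plays the heavy minutes.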

So the kernel of truth appears to be as before: forwards, on average, really are slightly more impactful players. That's not enough to produce the "slow-motion overfitting" I saw, though (I don't think), and here is the part I only dimly understand.

If the increasing D-F discrepancy were caused /solely/ by overfitting, the overperformances and underperformances in a given season would also have to be biased, on average, along F/D lines: overperformances falling mostly to forwards, underperformances mostly to defenders.

So, why might the lucky (lucky in the sense that they did not continue) seasons accrue mostly to forwards? Here I have nothing but guesses and no practical way of testing any of my guesses. Way out over my skis in any case now.

So all up:

if you are completely sure about what the discrepancy between positions "has to" be, I regard you, as previously, with great skepticism;

different sorts of priors mean different things even when formally similar and you may need to apply several to your model.

And on a more going-about-my-day-job-going-forward note, if you complain about my model "favouring" forwards, I will think nasty thoughts about you when I look at your Twitter handle.
