Misleading Stanford Covid diagnostic study (https://www.medrxiv.org/content/10.1101/2020.04.14.20062463v1.full.pdf) is all over the news. Flaws w/ this study (which the authors acknowledge) could trick you into thinking that getting shot in the head has a low chance of killing you. I invest in diagnostics. I see this often. A dead body...

…is lying in the street of a small town. There’s a bullet hole in the person’s head. Someone declares “Oh no, any one of us could be next!”. But the Mayor steps forward & replies reassuringly, “Hold on, we don’t know that getting shot in the head is dangerous.” The townsfolk…

…like this news and ask, “you mean we don’t have to hide in our homes from this killer?” The Mayor says, “Let’s run a study. Let’s first see how many people have been shot in the head at some point in their lives, maybe when they didn’t notice, and then…

…we can see what the lethality of a bullet really is. As a diagnostic for whether someone has ever been shot in the head, we’ll look for signs that a bullet hit their skin. It should leave a scar.” Now this is a large town of 20,000 people and the Mayor didn’t have all day…

…so he asked for volunteers. And 500 people came forward to have their heads inspected. The Mayor found that 10 of them, 2%, had round scars on their heads. So he declared, “Since 2% of these 500 folks have scars on their heads, then we can assume 2% of the town has scars.”

“So there must be 400 people in this town who have bullet scars, and therefore we have 401 people who were shot in the head but, as you can see, only one was killed. That means only 0.25% of those who get shot in the head die. Bullets are clearly not very lethal. Relax everyone.”

And so the town relaxed. The next day, the killer among them shot 3 more people dead. Is this a silly story? Yes. But it has the basic elements to allow anyone to see how we have to be careful about interpreting such “surveillance” data. What did the Mayor get wrong?

There are actually two major flaws in his logic. One is that his test has false positives; the other is that his sample wasn’t truly random. Let’s talk about the first flaw: clearly, we can’t assume every scar on the head is evidence of an encounter with a bullet.

Lots of people have round scars from acne or chicken pox. Those are false positives. In the case of covid testing, the Stanford researchers reported that “Among 371 pre-COVID samples, 369 were negative.” That means that a test that was looking for antibodies to SARS2…

…said that 2 out of 371 people had them even though we know they couldn’t have since those 371 blood samples were taken before SARS2 arrived on our shores. (Some will call this evidence that SARS2 was here earlier, but that’s not the case. It’s like saying “all those scars are…

…evidence that hundreds of people in our town have been unknowingly shot.”) They weren’t. False positives really happen. I explain what antibodies are (police dogs analogy) & how SARS2 diagnostic tests can have false-positive results in another thread: https://twitter.com/PeterKolchinsky/status/1249475238636765184?s=20

But for now, just consider that SARS2 is related to coronaviruses that cause common colds, so it’s not hard to imagine that an antibody test that looks for antibodies to SARS2 will sometimes say a sample is positive when it’s really detecting antibodies to those other viruses.

Is it possible that some people survived an encounter w/ a bullet? Yes, it happens. It depends on caliber, where it hits, angle, etc. So the lethality of a bullet is not 100%. But it’s also definitely not 0.25%. The key to knowing is a good test to find out who’s really been shot.

But back to the Stanford study. 2 false positives out of 371 is only about 0.5%. That means that the test correctly calls 99.5% of people who were never infected with SARS2 negative. The trouble is, and the authors of the study acknowledge this, that 0.5% isn’t…

…the true false-positive rate. It’s an estimate. Maybe if they had tested another set of samples, the test would have called 4 of them false-positive or only 1. So the authors reported that “our estimates of specificity are 99.5% (95 CI 98.1-99.9%)”. That’s hard to decipher.

You need to know that “specificity” is the share of truly negative samples that a test correctly calls negative. It’s the true-negative rate, so 1 - specificity is the false-positive rate. A test w/ a 98.1% specificity has a 1.9% false-positive rate. False positives can be a real problem.

Imagine a drug test that wrongly flags 1.9% of people who aren’t taking drugs. Their careers can be ruined. Imagine a radar gun that says 1.9% of people who are going 65 are actually going 70. That’s one reason police tend to focus on people going much faster than that.

Stanford reported a specificity of 99.5% (95 CI 98.1-99.9%), which means 99.5% of negative samples were called negative, but we aren’t totally confident in that number (“CI” stands for “confidence interval”). We are 95% confident that the correct answer is between 98.1% and 99.9%.
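A range like 98.1-99.9% is a confidence interval on a proportion. As a rough sketch, here is one standard way to get such an interval from the 369-of-371 figure: the exact (Clopper-Pearson) binomial interval, solved by bisection using only the standard library. This method is our assumption for illustration; we haven’t confirmed which interval method the authors actually used, even though this one rounds to the same range.

```python
from math import comb


def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def solve_decreasing(f, target: float, iters: int = 100) -> float:
    """Bisection on [0, 1] for f(p) = target, assuming f decreases as p grows."""
    lo, hi = 0.0, 1.0
    for _ in range(iters):
        mid = (lo + hi) / 2
        if f(mid) > target:
            lo = mid  # f(mid) is still too big, so the root lies to the right
        else:
            hi = mid
    return (lo + hi) / 2


def clopper_pearson(x: int, n: int, alpha: float = 0.05) -> tuple[float, float]:
    """Exact (Clopper-Pearson) confidence interval for a binomial proportion x/n."""
    # Lower bound: the p at which seeing x or more events has probability alpha/2.
    lower = 0.0 if x == 0 else solve_decreasing(lambda p: binom_cdf(x - 1, n, p), 1 - alpha / 2)
    # Upper bound: the p at which seeing x or fewer events has probability alpha/2.
    upper = 1.0 if x == n else solve_decreasing(lambda p: binom_cdf(x, n, p), alpha / 2)
    return lower, upper


# 2 false positives among 371 pre-COVID samples.
fp_low, fp_high = clopper_pearson(2, 371)
spec_point = 369 / 371
spec_low, spec_high = 1 - fp_high, 1 - fp_low  # specificity = 1 - false-positive rate
print(f"specificity {spec_point:.1%} (95% CI {spec_low:.1%}-{spec_high:.1%})")
# prints: specificity 99.5% (95% CI 98.1%-99.9%)
```

Bisection keeps this dependency-free; with SciPy installed, `scipy.stats.beta.ppf` gives the same Clopper-Pearson bounds directly.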

So this test could have a false-positive rate of nearly 2%. And with a 2% false-positive rate, as you may recall from the story, you can turn something that is nearly 100% fatal (a bullet to the head) into something that looks like it’s only 0.25% likely to kill you.
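To make that concrete, here is a toy calculation with the story’s hypothetical numbers: a town of 20,000, one person actually shot (and killed), and a scar “test” whose false-positive rate we vary. The constant names and the simplification that every counted scar is a false positive are ours, for illustration only.

```python
TOWN = 20_000      # hypothetical town size from the story
TRULY_SHOT = 1     # only one person was actually shot...
DEATHS = 1         # ...and that person died


def apparent_lethality(false_positive_rate: float) -> float:
    """Deaths divided by everyone the scar test would count as 'shot'.

    Simplification: every scar the test counts is a false positive
    (acne, chicken pox), as in the story.
    """
    false_scars = false_positive_rate * TOWN
    return DEATHS / (TRULY_SHOT + false_scars)


for fpr in (0.0, 0.001, 0.01, 0.02):
    print(f"scar false-positive rate {fpr:.1%}: apparent lethality {apparent_lethality(fpr):.2%}")
```

At a 2% false-positive rate this reproduces the Mayor’s 1-in-401 figure; with a perfect test the same death is 100% lethal.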

Specifically, the Stanford study tested 3,330 people in Santa Clara. If the test really has a 2% false-positive rate, then it would have called about 66 people positive for SARS2 antibodies even if none of them had been infected. But the test called only 50 people positive. So maybe the test has a…

…1.5% false-positive rate & none of those people were truly positive. Or maybe it has a 1% false-positive rate (33 of the 50 weren’t really infected) & therefore 17 people really were infected (a 0.5% infection rate). So w/ small tweaks to assumptions, we can get almost any number.
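The arithmetic in the last two tweets is just “observed positives minus expected false positives.” A minimal sketch, assuming false negatives can be ignored (as the thread does here) and working on the raw 50-of-3,330 count before any demographic weighting; the function name is ours:

```python
TESTED = 3330     # people tested in the Santa Clara study
POSITIVES = 50    # positive antibody results among them


def true_positive_estimate(false_positive_rate: float) -> tuple[float, float]:
    """Return (estimated true positives, implied raw infection rate in the sample).

    Subtracts the expected number of false positives and ignores false negatives.
    """
    expected_false = false_positive_rate * TESTED
    true_pos = max(POSITIVES - expected_false, 0.0)  # can't have fewer than zero
    return true_pos, true_pos / TESTED


for fpr in (0.005, 0.01, 0.015, 0.02):
    tp, rate = true_positive_estimate(fpr)
    print(f"false-positive rate {fpr:.1%}: ~{tp:.0f} true positives, {rate:.2%} infected")
```

Moving the assumed false-positive rate from 0.5% to 1.5% swings the answer from about 1% infected to essentially zero.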

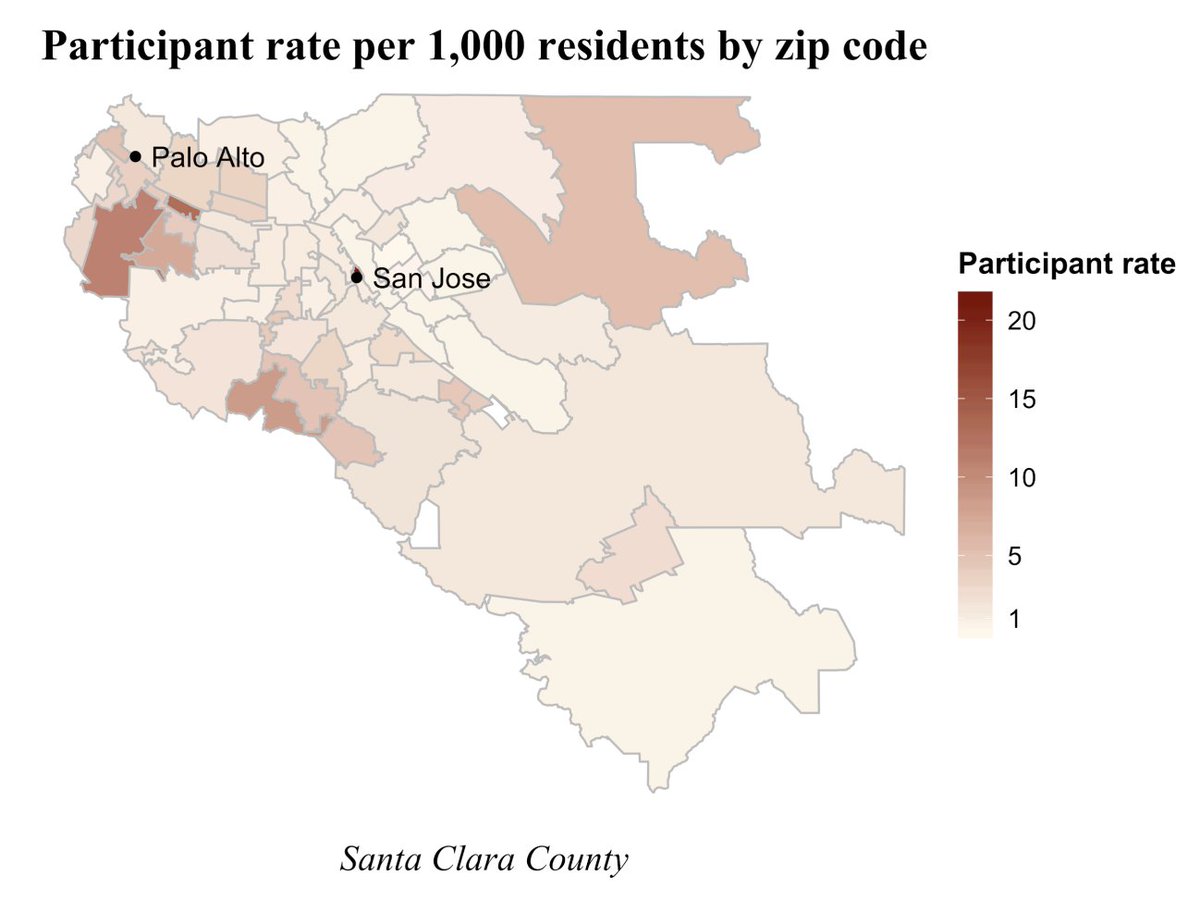

Now let’s consider the other flaw, that the people tested weren’t representative of everyone. You can see from this graphic that most came from a few regions, particularly closer to Palo Alto. The participants were more likely to be white, younger, and female.

So the researchers made some adjustments, extrapolating from tested 20-30-year-olds to all 20-30-year-olds, from tested Hispanics to all Hispanics, and from tested men to all men. But what if the people who step forward for testing are the ones who had some symptoms…

…and were most eager to see if they really were positive? In the case of the bullet analogy, what if the people who did have scars on their heads were more likely to step forward to be inspected? Then the fact that 2% of tested people had scars would not mean that 2% of the town did.

That’s not an adjustment that the researchers made or could have made, because they can’t know what motivated people to volunteer for this study. So the results are even more uncertain than the false-positive range alone suggests.
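One way to see why volunteer bias matters: suppose infected people are k times as likely as uninfected people to sign up. The sketch below inverts that relationship to recover population prevalence from sample prevalence. This toy model and the enrichment factor k are our own hypothetical assumptions, not the authors’ method; as noted above, nobody can measure k from this study, so the code simply sweeps it. Applying it to the already-weighted 2.8% is a further simplification.

```python
def correct_for_volunteer_bias(sample_rate: float, enrichment: float) -> float:
    """Population prevalence implied by a volunteer sample (toy model).

    Assumes infected people are `enrichment` (k) times as likely to volunteer as
    uninfected people. Then sample prevalence q = k*p / (k*p + (1 - p)) for true
    prevalence p; solving for p gives p = q / (k*(1 - q) + q).
    """
    q, k = sample_rate, enrichment
    return q / (k * (1 - q) + q)


# k = 1 means no bias; k is unknowable from the study itself, so we just sweep it.
for k in (1, 1.5, 2, 3):
    print(f"k={k}: 2.8% of volunteers -> {correct_for_volunteer_bias(0.028, k):.2%} of the county")
```

With k = 2, a 1% sample rate corresponds to roughly 0.5% of the population, the same halving the thread applies further on.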

In any case, after making the adjustments for age, gender, and race that they could make to extrapolate from their small set of tested people to the whole county, they estimate that 2.8% of Santa Clara County (1.9M people) was infected, which is around 53,000 people.

But if there were an enrichment of people who had symptoms in the 3,330 who volunteered for testing, then the extrapolated 2.8% positive rate is an over-estimate. The authors of the Stanford study say as much, but that’s buried in the fine print.

What does this mean? Santa Clara had 1,094 reported cases and 50 deaths, though the authors reasonably estimated that another 50 of these cases may yet die. So that’s an estimated 9% lethality (100/1,094). Yet it’s certainly an overestimate b/c there are…

…definitely many mild & asymptomatic cases out there. That’s exactly what this Stanford study was trying to measure. Data from the Diamond Princess suggested that maybe half of all infected people are asymptomatic, though even that might be an underestimate.

And so this virus doesn’t kill 9% of the people it infects. The Stanford test suggests that 2.8% of Santa Clara County was likely infected, which would be 53,000 people. So if 100 of them die, then that’s a 0.19% lethality rate, which seems reassuring. But if we assume…

…the test had even a 1% false-positive rate, then 1% of the county was infected, which is 19,000 people & a 0.5% lethality rate (100/19,000). And if we assume that people who had symptoms were just twice as likely to go for testing, then only 0.5% of the county was infected.

So that would mean that 9,500 people in Santa Clara were infected, of whom 100 die, which is a lethality of ~1%. 1% lethality is in the ballpark of estimates from other regions. If 90% of Americans were to be infected & 1% were to die, that would be 3M deaths.
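The lethality figures in the last few tweets all come from dividing the same ~100 deaths by different guesses at how many people were infected. A small sketch reproducing them: the prevalence scenarios, death count, and case count are from the thread, while the 330M US population used in the last line is our own rounded assumption.

```python
COUNTY_POP = 1_900_000   # Santa Clara County, rounded as in the thread
DEATHS = 100             # 50 reported deaths plus ~50 expected among existing cases
REPORTED_CASES = 1094


def infection_fatality_rate(prevalence: float) -> float:
    """Deaths divided by the number of infections implied by a prevalence estimate."""
    return DEATHS / (prevalence * COUNTY_POP)


# Counting only reported cases overstates lethality (misses mild/asymptomatic cases).
print(f"deaths / reported cases: {DEATHS / REPORTED_CASES:.1%}")

scenarios = {
    "study's 2.8% estimate": 0.028,
    "1% false-positive rate": 0.01,
    "plus 2x symptomatic volunteers": 0.005,
}
for label, prevalence in scenarios.items():
    infected = prevalence * COUNTY_POP
    print(f"{label}: {infected:,.0f} infected, lethality {infection_fatality_rate(prevalence):.2%}")

US_POP = 330_000_000  # assumption: rough 2020 US population, not stated in the thread
print(f"90% infected at 1% lethality: ~{0.9 * US_POP * 0.01:,.0f} deaths")
```

The numerator never changes; only the assumed denominator does, which is why the lethality estimate moves roughly fivefold across these scenarios.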

So as reassuring as the Stanford study may seem, its uncertainties leave us still unsure how lethal SARS2 is & far from certain that it’s mild enough that we can just ease up on social distancing, which is what every one of us is yearning for.

But there’s another common-sense way to consider the Stanford data, taken at face value. Consider all the pain and death we’ve already seen. If that’s what we get when only 2.8% of the population has been infected, then we’ll get far more when the next 2.8% is infected…

…and the next 2.8%, and so on. 38,000 Americans have died so far from COVID-19. Let’s say there is herd immunity when 25x 2.8% of Americans are infected (which would be 70% of the country). That would translate to nearly 1M Americans dead.

And if only 1% of Santa Clara were infected, which is entirely possible given the uncertainties in the Stanford study, and we assume that this is representative of the country, then we need 70x more Americans infected, which means 70x 38,000 deaths, which is over 2.5M deaths.
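The scaling argument here is simple multiplication: if deaths so far correspond to some share of the population infected, reaching a 70% herd-immunity threshold multiplies deaths by 70% divided by that share. A sketch with the thread’s numbers, keeping all its simplifications (the Santa Clara share is treated as representative of the US, and there is no adjustment for the lag between infection and death):

```python
US_DEATHS_SO_FAR = 38_000    # US COVID-19 deaths when the thread was written
HERD_IMMUNITY_SHARE = 0.70   # the thread's assumed herd-immunity threshold


def projected_deaths(share_infected_so_far: float) -> float:
    """Crude linear scaling: total deaths grow in proportion to the share infected.

    Like the thread's own math, this ignores the lag between infection and death.
    """
    multiplier = HERD_IMMUNITY_SHARE / share_infected_so_far
    return US_DEATHS_SO_FAR * multiplier


for share in (0.028, 0.01):
    print(f"{share:.1%} infected so far -> ~{projected_deaths(share):,.0f} deaths at 70%")
```

A lower estimate of how many have been infected so far means a higher projected toll, which is the opposite of the reassurance the headline number seems to offer.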

My math is crude & needs adjusting for delays in death, but that doesn’t change the fact that we’re talking about a lot of death yet to come unless we keep flattening the curve.

So while the Stanford study will provide some joy to anyone who reaches for every bit of evidence that SARS2 is not that scary a virus, we need to be careful that we aren’t just seeing what we want to see in a very uncertain data set.

I’ve shown you that the flaws in such a study could make a bullet to the head seem less dangerous than it is. Such a study can certainly make a lethal virus, one that may only kill 1% of those it infects, seem 10 times less dangerous. But that doesn’t make the study right.

To find out how lethal the virus really is, we’ll need larger studies with more accurate tests. We may not have more accurate tests yet, which means that we may have to wait until the virus has spread more before knowing its lethality with more confidence.

For example, if the number of positive cases Stanford found among its 3,330 test subjects had been 600, then the difference between a 0.1% and a 2% false-positive rate wouldn’t have mattered. A 0.1% rate would mean 3 of the 3,330 get a false-positive result, and a 2% rate would mean 66 do.

So we could be sure that there were between 534 and 597 true positives among the 3,330 (and that’s not adjusting for false negatives, which is another story but not that significant). So we would then know that 16-18% of everyone tested was infected.
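The contrast between a hypothetical 600 positives and the 50 actually observed can be sketched the same way: subtract the best- and worst-case expected false positives and see what’s left. This assumes the 0.1% and 2% false-positive rates from the text and ignores false negatives; clamping at zero is our choice, since a negative count of true positives is meaningless.

```python
TESTED = 3330


def true_positive_range(positives: int, fpr_low: float, fpr_high: float) -> tuple[float, float]:
    """Bounds on true positives after subtracting expected false positives (never below 0)."""
    worst = max(positives - fpr_high * TESTED, 0.0)
    best = max(positives - fpr_low * TESTED, 0.0)
    return worst, best


for positives in (600, 50):
    lo, hi = true_positive_range(positives, 0.001, 0.02)
    print(f"{positives} positives: {lo:.0f}-{hi:.0f} true, "
          f"{lo / TESTED:.1%}-{hi / TESTED:.1%} of those tested")
```

With 600 positives the range is tight (about 16-18%); with 50 it starts at zero. The code keeps fractional counts (2% of 3,330 is 66.6), so its bounds can differ by one from the rounded figures in the text.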

And if there really were only 100 deaths in Santa Clara out of an estimated 16% of everyone infected, then we could say that the lethality was tiny. But sadly they didn’t find a lot of positives. They found so few that most of them could have been caused by false-positives.

And even the true-positives could have been enriched in the group they tested because they didn’t test the population at random but rather asked volunteers to step forward.

So it’s going to take other studies with more accurate tests (it’s not yet clear whether we have any) done on random samplings of people, and maybe at a time when the virus has spread more widely, before we really know its lethality.

Until we know, it’s a reflection of each of our priorities whether we choose to wishfully believe SARS2 is less lethal or approach these early data with caution and act as if it’s dangerous (which means social distancing, wearing masks, washing hands, and working on treatments and vaccines).

We need to characterize a test well enough that we know its specificity w/ more precision, say narrowing it to 99.1-99.2%. Then select 3,000 people at random & test them. That would yield a useful answer: if we saw 50 positives, we would know about half were true positives, for an infection rate of about 0.8%.
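The proposed study design can be checked with the same subtraction. A sketch comparing the narrow specificity range suggested here (99.1-99.2%) with the wide 98.1-99.9% interval the Stanford test actually had, for 50 positives among 3,000 randomly chosen people. The function name is ours, and false negatives are again ignored.

```python
def corrected_infection_rate(n: int, positives: int, specificity: float) -> float:
    """Share of n people truly infected after removing expected false positives."""
    expected_false = n * (1 - specificity)  # false-positive rate = 1 - specificity
    return max(positives - expected_false, 0.0) / n


N, POSITIVES = 3000, 50
for label, (spec_low, spec_high) in [("narrow", (0.991, 0.992)), ("wide", (0.981, 0.999))]:
    # Lower specificity means more false positives, hence a lower corrected rate.
    low = corrected_infection_rate(N, POSITIVES, spec_low)
    high = corrected_infection_rate(N, POSITIVES, spec_high)
    print(f"{label} specificity range: infection rate {low:.2%}-{high:.2%}")
```

The narrow range pins the answer near 0.8%; the wide one can’t distinguish zero infections from about 1.5%.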
