Hi folks - let's talk about 3D image* processing! --DMP https://twitter.com/MinoritySTEM/status/1250404401312907264

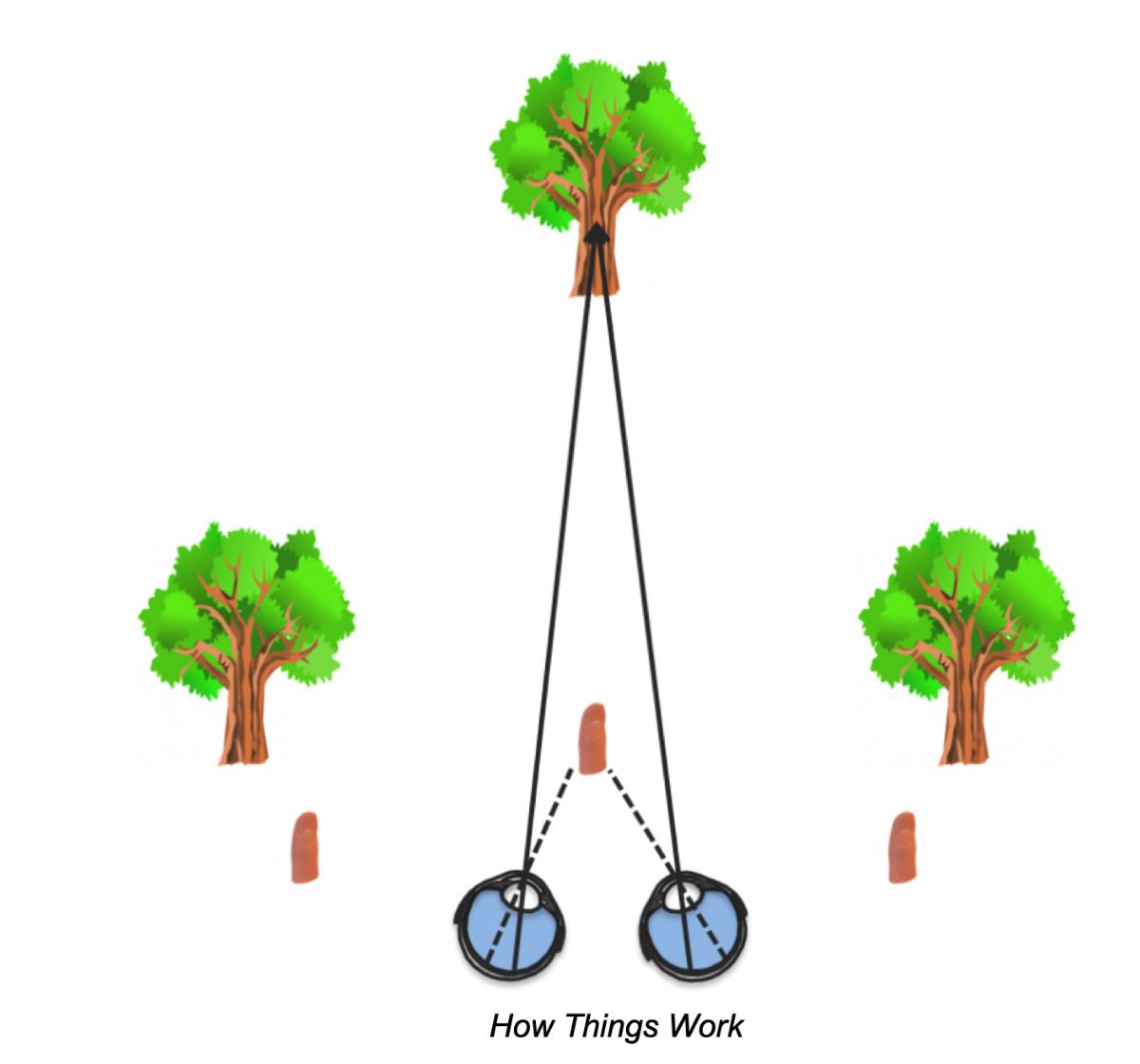

Humans perceive images (and sound) in three dimensions because of the spacing between our eyes (and ears).

This space means that each eye sees an object from a slightly different angle.

Our brains use this & other info to perceive depth. Most of this is unconscious. Imagine a scene in miniature, like cars, that looks realistic. At some point our brains figure out it's wrong because of shadows and depth.

(I have been watching Thunderbirds and noticing this!)

Image processing software can do something similar. We just need:

1. 2 images of the same area, taken at different angles

2. A program that can extract depth information given the two images, and additional info (like where the satellite was in space when it took these images)

(GIF description: two black and white satellite images of a river channel on Mars, at slightly different angles and showing different features of the channel; the GIF flickers between them)
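To make the "depth from two angles" idea concrete, here's a toy sketch. It assumes the simplest possible setup (two identical pinhole cameras side by side, looking in the same direction), which is NOT how satellite images are actually taken or processed. The function name and all the numbers are made up for illustration.

```python
def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Distance to a point seen by two parallel pinhole cameras.

    focal_length_px: camera focal length, expressed in pixels
    baseline_m:      spacing between the two cameras, in metres
    disparity_px:    how far the point shifts between the two images, in pixels
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Illustrative numbers only: cameras 0.065 m apart (roughly eye spacing)
# and an assumed focal length of 1000 pixels.
near = depth_from_disparity(1000.0, 0.065, 65.0)  # big shift -> close object
far = depth_from_disparity(1000.0, 0.065, 6.5)    # small shift -> distant object
print(near, far)
```

The takeaway: the bigger the shift of a feature between the two images, the closer it is. Real terrain software replaces this simple formula with full camera models.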

These 3D images, which are made using satellite (or sometimes rover) images, are called digital terrain models OR digital elevation models (depending on your school of thought, Earth vs. other planets, preference).

These terrain models are 3D files that are made up of points on a 3D grid. Some software can interpret this information and display it in 3D, or allow you to make 3D measurements of elevation.
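As a rough picture of "points on a 3D grid", here's a minimal sketch where a terrain model is just a small table of heights. The grid values, the 20 m spacing, and the helper names are all invented for illustration. Real terrain files are far bigger and carry map information too.

```python
import math

# Tiny made-up elevation grid, in metres.
# Rows run north to south, columns run west to east.
dem = [
    [-1500.0, -1498.0, -1495.0],
    [-1510.0, -1520.0, -1500.0],
    [-1512.0, -1525.0, -1503.0],
]
cell_size_m = 20.0  # assumed ground distance between neighbouring grid points


def relief(grid):
    """Height difference between the highest and lowest grid point."""
    values = [v for row in grid for v in row]
    return max(values) - min(values)


def slope_deg(grid, r0, c0, r1, c1, cell_size):
    """Slope angle (degrees) along a straight line between two grid points."""
    horizontal = math.hypot(r1 - r0, c1 - c0) * cell_size
    rise = grid[r1][c1] - grid[r0][c0]
    return math.degrees(math.atan2(abs(rise), horizontal))


print(relief(dem))                              # total relief in metres
print(slope_deg(dem, 0, 0, 0, 1, cell_size_m))  # slope between two neighbours
```

This is the kind of "3D measurement" the software lets you make: once every grid point has a height, things like relief and slope are simple arithmetic.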

Some vis software also lets you drape images onto the 3D models. This is where the excitement happens!

This video shows Victoria Crater, an exploration site of Opportunity Rover (the black speck at 0:17!!!)

Vis: mine in NASA DERT

Data: @MSSL_Imaging https://www.youtube.com/watch?v=IpHiQmijICY

3D processing has several steps, and the details depend on the images as well as the software. During my Ph.D. I've used two suites for making 3D terrain models using two different Mars cameras.

Both processing suites (in-house modifications to VICAR and ASP, for the nerds) generally:

1. find matching pixels between the images

2. use camera models (based on the image ID) to figure out where the images were taken

3. solve for matching

4. make a 3D cloud/grid

This isn't that different from how iPhone apps might make a 3D image, or how 3D movies work. 3D movies in red-blue show us two views of the same scene, coded in different colors, and red-blue glasses help our brains put those images together.

BUT these images are HUGE. On a lower-resolution dataset with one program, processing one terrain model might take a few hours. On a higher-resolution dataset with another, it takes 3-5 days. I don't use HiRISE to make terrain models, but that can take up to two weeks.

A lot of the processing comes from finding matches, and refining those matches based on camera models and statistical methods. It's very computation-heavy and, thankfully, automated. I'm not a computer scientist! :)
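For a feel of what "finding matches" means, here's a toy sketch using normalized cross-correlation on a single row of pixels. This is one common textbook way to score a match, and it is an assumption on my part that it resembles anything inside VICAR or ASP. Real matchers work in 2D, at sub-pixel precision, over millions of pixels. All names and pixel values below are made up.

```python
import math


def ncc(a, b):
    """Normalized cross-correlation of two equal-length pixel windows (-1 to 1)."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [x - mean_a for x in a]
    db = [x - mean_b for x in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(x * x for x in db))
    if denom == 0:
        return 0.0  # a completely flat window has nothing to match on
    return sum(x * y for x, y in zip(da, db)) / denom


def best_match(left_row, right_row, start, width):
    """Slide left_row[start:start+width] along right_row; return best position and score."""
    template = left_row[start:start + width]
    best_pos, best_score = None, -2.0
    for pos in range(len(right_row) - width + 1):
        score = ncc(template, right_row[pos:pos + width])
        if score > best_score:
            best_pos, best_score = pos, score
    return best_pos, best_score


# The same bright feature, shifted one pixel between the two images.
left = [10, 10, 12, 40, 90, 45, 13, 10, 10, 10]
right = [10, 12, 40, 90, 45, 13, 10, 10, 10, 10]

pos, score = best_match(left, right, start=2, width=5)
shift = 2 - pos  # how far the feature moved between the images, in pixels
print(pos, shift)
```

Now imagine doing that for every pixel of two enormous images, then refining the results. That's why the wait is long.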

For me, my steps are:

1. Pick images. Some cameras are dedicated stereo cameras, meaning they're meant to take two images at different times of the same spot so people like me can make 3D models. The High-Resolution Stereo Camera on ESA's Mars Express is an example.

However, cameras like the Context Camera (CTX) on the NASA Mars Reconnaissance Orbiter are not stereo cameras. This means I have to find my own stereo pair using maps of all the images taken by CTX - looking for good quality images that overlap by at least 70%.
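The overlap check can be sketched like this. Two big assumptions: image footprints are treated as simple longitude/latitude boxes (real footprints are skewed polygons), and "70% overlap" is measured against the smaller of the two footprints. The function names and coordinates are hypothetical.

```python
def box_area(box):
    """Area of a (min_lon, min_lat, max_lon, max_lat) box, in square degrees."""
    return (box[2] - box[0]) * (box[3] - box[1])


def overlap_fraction(box_a, box_b):
    """Shared area divided by the area of the smaller footprint."""
    width = min(box_a[2], box_b[2]) - max(box_a[0], box_b[0])
    height = min(box_a[3], box_b[3]) - max(box_a[1], box_b[1])
    if width <= 0 or height <= 0:
        return 0.0  # the boxes do not touch at all
    return (width * height) / min(box_area(box_a), box_area(box_b))


def is_stereo_candidate(box_a, box_b, minimum=0.70):
    """True if the two footprints overlap enough to try making a terrain model."""
    return overlap_fraction(box_a, box_b) >= minimum


# Made-up footprints: long, thin north-south strips.
image_a = (0.0, 0.0, 1.0, 4.0)
image_b = (0.25, 0.0, 1.25, 4.0)  # mostly on top of image_a
image_c = (0.5, 2.0, 1.5, 6.0)    # only clips a corner of image_a

print(overlap_fraction(image_a, image_b), is_stereo_candidate(image_a, image_b))
print(overlap_fraction(image_a, image_c), is_stereo_candidate(image_a, image_c))
```

Overlap is only one of the criteria. Image quality still has to be judged separately.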

2. Change parameters in the program files - where the images are located, where I want to save the end result, what matching tools I want to use and how. This is where some experimentation happens because things can go wrong!

3. Run. And WAIT. Read a paper. Send some emails. Work on my thesis. Do some outreach.

More realistically, I set up all sorts of files I need for when the processing is done. But the wait can be long :)

Last, I check my models (if they succeeded) in mapping software. GIS (geographic information systems) software packages are mapping tools that let you display 2D information. I can check for any bad matches (fuzzy bits, missing pixels, weird artefacts).
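One of those checks, counting missing pixels, is easy to sketch. This assumes missing data is flagged with a special "no data" number. The value used here is a placeholder, since the real flag depends on the file and the software, and the grid is invented.

```python
NODATA = -32768.0  # placeholder flag; check the actual file for the real value


def missing_fraction(grid, nodata=NODATA):
    """Fraction of grid cells where no height could be computed."""
    values = [v for row in grid for v in row]
    missing = sum(1 for v in values if v == nodata)
    return missing / len(values)


# Made-up terrain model with two holes where matching failed.
dem = [
    [-1500.0, -1498.0, NODATA, -1495.0],
    [-1510.0, NODATA, -1520.0, -1500.0],
]

print(missing_fraction(dem))
```

A number like this won't catch fuzzy bits or weird artefacts, which is why looking at the model in GIS software still matters.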

THEN I do a bunch of things in eager anticipation of ~3D VISUALIZATION!!!~ which include:

1. statistical tests to look at the quality of the products in all 3 dimensions

2. make figures for my thesis using different colors

3. sigh longingly about visualization

4. fix any problems / reprocess

5. get side-tracked (let's be real)

6. do some final processing to prep the data for visualization software

BUT, partly to build anticipation and partly because we're after scientific rigor here, folks, there is still an important problem to deal with.

I need to make sure my 3D products are all in the same *space* https://twitter.com/MinoritySTEM/status/1250403405413130240

"But Divya they're all in space. They're on Mars"

Right? But things aren't so easy. Mapping is an art and relies on all sorts of assumptions, like:

🐋 how big we tell a computer Mars is

🐋 what SHAPE we tell a computer Mars is

🐋 what the extra nonvisual data in the images say

"DIVYA?? Hello?? The SHAPE of MARS??"

Yeah. The shapes of planets and moons (and the Earth) are pretty much a whole field unto themselves. It's difficult to figure out the exact size and shape of a planet without detailed study, usually with more than one satellite.

As the models of the sizes and shapes of planets are refined with further study, how we encode images - including information about where the camera is in space when it takes an image - will change. That feeds into the computer programs that calculate depth from the images...
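Here's a small sketch of why the assumed size and shape matter so much. It compares the "elevation" of one and the same point measured against a sphere versus a flattened ellipsoid. The two radii are commonly quoted values for Mars, but treat them (and the made-up surface point) as assumptions for illustration, not as what any particular software uses.

```python
import math

# Commonly quoted Mars radii, in metres (assumed here for illustration).
EQUATORIAL_RADIUS_M = 3_396_190.0
POLAR_RADIUS_M = 3_376_200.0


def ellipsoid_radius(lat_deg, a=EQUATORIAL_RADIUS_M, b=POLAR_RADIUS_M):
    """Distance from the planet's centre to the ellipsoid at a planetocentric latitude."""
    lat = math.radians(lat_deg)
    return (a * b) / math.sqrt((b * math.cos(lat)) ** 2 + (a * math.sin(lat)) ** 2)


def elevation(point_radius_m, reference_radius_m):
    """Height of a point above whatever reference surface we chose."""
    return point_radius_m - reference_radius_m


# A made-up surface point at the north pole, 3,378,000 m from the centre.
point_radius_m = 3_378_000.0

vs_sphere = elevation(point_radius_m, EQUATORIAL_RADIUS_M)
vs_ellipsoid = elevation(point_radius_m, ellipsoid_radius(90.0))

print(vs_sphere)     # deep "below" the sphere
print(vs_ellipsoid)  # well "above" the ellipsoid
```

Same point, same planet, and the two answers differ by about 20 km. Two terrain models built on different assumptions won't line up until they are put on the same reference.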

It's like illusions - our brains need more information to actually resolve what the heck is going on or else they'll calculate something wrong!

To make sure 3D models of different resolutions, from different cameras, all agree with each other, we have to do some processing using geographic tools. Then, we can align them to each other.

Our only "ground truth", or what we consider the most factually correct elevation map of Mars, is from the Mars Orbiter Laser Altimeter (MOLA), which is a LASER that shoots at Mars (my fave instrument ever). It times the return of the light pulse to get the height of the surface.
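The timing trick boils down to one line of arithmetic: light goes down and back, so the distance is half the round trip times the speed of light. A minimal sketch, assuming the laser points straight down at the planet's centre and using made-up numbers for the spacecraft position and the timing:

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def range_from_round_trip(round_trip_s):
    """One-way distance to the surface from the pulse's round-trip time."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def surface_radius(spacecraft_radius_m, round_trip_s):
    """Distance from the planet's centre to the spot the laser hit."""
    return spacecraft_radius_m - range_from_round_trip(round_trip_s)


# Hypothetical example: spacecraft 3,796,000 m from the planet's centre,
# and a pulse that takes the time needed to cover 400 km down and 400 km back.
spacecraft_radius_m = 3_796_000.0
round_trip_s = 2.0 * 400_000.0 / SPEED_OF_LIGHT_M_S

print(round_trip_s)                                       # a few milliseconds
print(surface_radius(spacecraft_radius_m, round_trip_s))  # about 3,396,000 m
```

Notice that the result depends on knowing where the spacecraft was, which is the same "extra info" problem the image processing has.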

For alignment, the High-Resolution Stereo Camera (HRSC) is actually automatically aligned to MOLA! Then I align the next lowest-res terrain to HRSC, then the next to that, etc. Alignment is done in 3D until everything agrees.
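The chain idea (align one model to a trusted reference, then the next model to that) can be sketched in a heavily simplified form. Real alignment is done in 3D. This toy version only removes a constant height offset, and it assumes both grids already cover the same cells at the same resolution. All names and values are invented.

```python
from statistics import median


def vertical_offset(dem, reference):
    """Median height difference (dem minus reference) over cells valid in both."""
    diffs = [
        d - r
        for dem_row, ref_row in zip(dem, reference)
        for d, r in zip(dem_row, ref_row)
        if d is not None and r is not None
    ]
    return median(diffs)


def align_to(dem, reference):
    """Shift dem vertically so it agrees with reference; returns (aligned, offset)."""
    offset = vertical_offset(dem, reference)
    aligned = [[None if d is None else d - offset for d in row] for row in dem]
    return aligned, offset


# Made-up example: a coarse model that is already tied to the ground truth,
# and a finer model that sits 12 m too high (None marks a missing cell).
coarse = [[-1500.0, -1498.0], [-1510.0, -1520.0]]
fine = [[-1488.0, -1486.0], [None, -1508.0]]

fine_aligned, offset = align_to(fine, coarse)
print(offset)
print(fine_aligned)
```

To continue the chain, the next terrain model would be aligned to `fine_aligned` in the same way.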

THEN WE CAN VISUALIZE! Which is another thread ;) Time for some croquet in the sun.
